Modeling Lexical Tones for Mandarin Large Vocabulary Continuous Speech Recognition

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

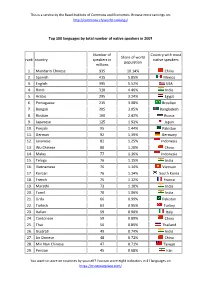

Top 100 Languages by Total Number of Native Speakers in 2007 Rank

This is a service by the Basel Institute of Commons and Economics. Browse more rankings on: http://commons.ch/world-rankings/ Top 100 languages by total number of native speakers in 2007 Number of Country with most Share of world rank country speakers in native speakers population millions 1. Mandarin Chinese 935 10.14% China 2. Spanish 415 5.85% Mexico 3. English 395 5.52% USA 4. Hindi 310 4.46% India 5. Arabic 295 3.24% Egypt 6. Portuguese 215 3.08% Brasilien 7. Bengali 205 3.05% Bangladesh 8. Russian 160 2.42% Russia 9. Japanese 125 1.92% Japan 10. Punjabi 95 1.44% Pakistan 11. German 92 1.39% Germany 12. Javanese 82 1.25% Indonesia 13. Wu Chinese 80 1.20% China 14. Malay 77 1.16% Indonesia 15. Telugu 76 1.15% India 16. Vietnamese 76 1.14% Vietnam 17. Korean 76 1.14% South Korea 18. French 75 1.12% France 19. Marathi 73 1.10% India 20. Tamil 70 1.06% India 21. Urdu 66 0.99% Pakistan 22. Turkish 63 0.95% Turkey 23. Italian 59 0.90% Italy 24. Cantonese 59 0.89% China 25. Thai 56 0.85% Thailand 26. Gujarati 49 0.74% India 27. Jin Chinese 48 0.72% China 28. Min Nan Chinese 47 0.71% Taiwan 29. Persian 45 0.68% Iran You want to score on countries by yourself? You can score eight indicators in 41 languages on https://trustyourplace.com/ This is a service by the Basel Institute of Commons and Economics. -

Women and the Law Reprinted Congressional

WOMEN AND THE LAW REPRINTED FROM THE 2007 ANNUAL REPORT OF THE CONGRESSIONAL-EXECUTIVE COMMISSION ON CHINA ONE HUNDRED TENTH CONGRESS FIRST SESSION OCTOBER 10, 2007 Printed for the use of the Congressional-Executive Commission on China ( Available via the World Wide Web: http://www.cecc.gov U.S. GOVERNMENT PRINTING OFFICE 40–784 PDF WASHINGTON : 2007 For sale by the Superintendent of Documents, U.S. Government Printing Office Internet: bookstore.gpo.gov Phone: toll free (866) 512–1800; DC area (202) 512–1800 Fax: (202) 512–2104 Mail: Stop IDCC, Washington, DC 20402–0001 VerDate 0ct 09 2002 13:14 Feb 20, 2008 Jkt 000000 PO 00000 Frm 00001 Fmt 5011 Sfmt 5011 U:\DOCS\40784.TXT DEIDRE CONGRESSIONAL-EXECUTIVE COMMISSION ON CHINA LEGISLATIVE BRANCH COMMISSIONERS House Senate SANDER LEVIN, Michigan, Chairman BYRON DORGAN, North Dakota, Co-Chairman MARCY KAPTUR, Ohio MAX BAUCUS, Montana MICHAEL M. HONDA, California CARL LEVIN, Michigan TOM UDALL, New Mexico DIANNE FEINSTEIN, California TIMOTHY J. WALZ, Minnesota SHERROD BROWN, Ohio DONALD A. MANZULLO, Illinois SAM BROWNBACK, Kansas JOSEPH R. PITTS, Pennsylvania CHUCK HAGEL, Nebraska EDWARD R. ROYCE, California GORDON H. SMITH, Oregon CHRISTOPHER H. SMITH, New Jersey MEL MARTINEZ, Florida EXECUTIVE BRANCH COMMISSIONERS PAULA DOBRIANSKY, Department of State CHRISTOPHER R. HILL, Department of State HOWARD M. RADZELY, Department of Labor DOUGLAS GROB, Staff Director MURRAY SCOT TANNER, Deputy Staff Director (II) VerDate 0ct 09 2002 13:14 Feb 20, 2008 Jkt 000000 PO 00000 Frm 00002 Fmt 0486 Sfmt 0486 U:\DOCS\40784.TXT DEIDRE C O N T E N T S Page Status of Women ............................................................................................. -

Official Colours of Chinese Regimes: a Panchronic Philological Study with Historical Accounts of China

TRAMES, 2012, 16(66/61), 3, 237–285 OFFICIAL COLOURS OF CHINESE REGIMES: A PANCHRONIC PHILOLOGICAL STUDY WITH HISTORICAL ACCOUNTS OF CHINA Jingyi Gao Institute of the Estonian Language, University of Tartu, and Tallinn University Abstract. The paper reports a panchronic philological study on the official colours of Chinese regimes. The historical accounts of the Chinese regimes are introduced. The official colours are summarised with philological references of archaic texts. Remarkably, it has been suggested that the official colours of the most ancient regimes should be the three primitive colours: (1) white-yellow, (2) black-grue yellow, and (3) red-yellow, instead of the simple colours. There were inconsistent historical records on the official colours of the most ancient regimes because the composite colour categories had been split. It has solved the historical problem with the linguistic theory of composite colour categories. Besides, it is concluded how the official colours were determined: At first, the official colour might be naturally determined according to the substance of the ruling population. There might be three groups of people in the Far East. (1) The developed hunter gatherers with livestock preferred the white-yellow colour of milk. (2) The farmers preferred the red-yellow colour of sun and fire. (3) The herders preferred the black-grue-yellow colour of water bodies. Later, after the Han-Chinese consolidation, the official colour could be politically determined according to the main property of the five elements in Sino-metaphysics. The red colour has been predominate in China for many reasons. Keywords: colour symbolism, official colours, national colours, five elements, philology, Chinese history, Chinese language, etymology, basic colour terms DOI: 10.3176/tr.2012.3.03 1. -

Life, Thought and Image of Wang Zheng, a Confucian-Christian in Late Ming China

Life, Thought and Image of Wang Zheng, a Confucian-Christian in Late Ming China Inaugural-Dissertation zur Erlangung der Doktorwürde der Philosophischen Fakultät der Rheinischen Friedrich-Wilhelms-Universität zu Bonn vorgelegt von Ruizhong Ding aus Qishan, VR. China Bonn, 2019 Gedruckt mit der Genehmigung der Philosophischen Fakultät der Rheinischen Friedrich-Wilhelms-Universität Bonn Zusammensetzung der Prüfungskommission: Prof. Dr. Dr. Manfred Hutter, Institut für Orient- und Asienwissenschaften (Vorsitzender) Prof. Dr. Wolfgang Kubin, Institut für Orient- und Asienwissenschaften (Betreuer und Gutachter) Prof. Dr. Ralph Kauz, Institut für Orient- und Asienwissenschaften (Gutachter) Prof. Dr. Veronika Veit, Institut für Orient- und Asienwissenschaften (weiteres prüfungsberechtigtes Mitglied) Tag der mündlichen Prüfung:22.07.2019 Acknowledgements Currently, when this dissertation is finished, I look out of the window with joyfulness and I would like to express many words to all of you who helped me. Prof. Wolfgang Kubin accepted me as his Ph.D student and in these years he warmly helped me a lot, not only with my research but also with my life. In every meeting, I am impressed by his personality and erudition deeply. I remember one time in his seminar he pointed out my minor errors in the speech paper frankly and patiently. I am indulged in his beautiful German and brilliant poetry. His translations are full of insightful wisdom. Every time when I meet him, I hope it is a long time. I am so grateful that Prof. Ralph Kauz in the past years gave me unlimited help. In his seminars, his academic methods and sights opened my horizons. Usually, he supported and encouraged me to study more fields of research. -

Chinese Zheng and Identity Politics in Taiwan A

CHINESE ZHENG AND IDENTITY POLITICS IN TAIWAN A DISSERTATION SUBMITTED TO THE GRADUATE DIVISION OF THE UNIVERSITY OF HAWAI‘I AT MĀNOA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY IN MUSIC DECEMBER 2018 By Yi-Chieh Lai Dissertation Committee: Frederick Lau, Chairperson Byong Won Lee R. Anderson Sutton Chet-Yeng Loong Cathryn H. Clayton Acknowledgement The completion of this dissertation would not have been possible without the support of many individuals. First of all, I would like to express my deep gratitude to my advisor, Dr. Frederick Lau, for his professional guidelines and mentoring that helped build up my academic skills. I am also indebted to my committee, Dr. Byong Won Lee, Dr. Anderson Sutton, Dr. Chet- Yeng Loong, and Dr. Cathryn Clayton. Thank you for your patience and providing valuable advice. I am also grateful to Emeritus Professor Barbara Smith and Dr. Fred Blake for their intellectual comments and support of my doctoral studies. I would like to thank all of my interviewees from my fieldwork, in particular my zheng teachers—Prof. Wang Ruei-yu, Prof. Chang Li-chiung, Prof. Chen I-yu, Prof. Rao Ningxin, and Prof. Zhou Wang—and Prof. Sun Wenyan, Prof. Fan Wei-tsu, Prof. Li Meng, and Prof. Rao Shuhang. Thank you for your trust and sharing your insights with me. My doctoral study and fieldwork could not have been completed without financial support from several institutions. I would like to first thank the Studying Abroad Scholarship of the Ministry of Education, Taiwan and the East-West Center Graduate Degree Fellowship funded by Gary Lin. -

The Investigation and Recording of Contemporary Taiwanese Calligraphers the Ink Trend Association and Xu Yong-Jin

The Investigation and Recording of Contemporary Taiwanese Calligraphers The Ink Trend Association and Xu Yong-jin Ching-Hua LIAO Submitted in partial fulfilment of the requirements of the Degree of Professional Doctorate in Design National Institute for Design Research Faculty of Design Swinburne University of Technology March 2008 Ching-Hua LIAO Submitted in partial fulfilment of the requirements of the Degree of Professional Doctorate in Design National Institute for Design Research Faculty of Design Swinburne University of Technology March 2008 Abstract The aim of this thesis is both to highlight the intrinsic value and uniqueness of the traditional Chinese character and to provide an analysis of contemporary Taiwanese calligraphy. This project uses both the thesis and the film documentary to analyse and record the achievement of the calligraphic art of the first contemporary Taiwanese calligraphy group, the Ink Trend Association, and the major Taiwanese calligrapher, Xu Yong-jin. The significance of the recording of the work of the Ink Trend Association and Xu Yong- jin lies not only in their skills in executing Chinese calligraphy, but also in how they broke with tradition and established a contemporary Taiwanese calligraphy. The documentary is one of the methods used to record history. Art documentaries are in a minority in Taiwan, and especially documentaries that explore calligraphy. This project recorded the Ink Trend Association and Xu Yong-jin over a period of five years. It aims to help scholars researching Chinese culture to cherish the beauty of the Chinese character, that they may endeavour to protect it from being sacrificed on the altar of political power, and that more research in this field may be stimulated. -

On the Status of “Prenuclear” Glides in Mandarin Chinese: Articulatory

Temporal Organization of On-glides across Sinitic languages Feng-fan Hsieh, Guan-sheng Li and Yueh- chin Chang National Tsing Hua University Acknowledgements • We would like to thank Louis Goldstein, Mark Tiede, Jason Shaw, Donald Derrick, Cathi Best, Michael Kenstowicz, Yen-Hwei Lin, Chiu-yu Tseng for assistance, questions, and/or comments. • This work is funded by a Ta-you Wu Memorial Award Grant (103-2410-H-007-036-MY3) from Ministry of Science and Technology, Taiwan. Struck in the “medial”... • This work is a cross-linguistic/dialectal study of on-glides (a.k.a. pre-nuclear glides, the medial, etc.) in Sinitic languages. • How is the medial represented phonologically? • The medial (distinct sub-syllabic constituent) • Part of the onset • Part of the rime • Doubly linked/X-bar-based approach • Flat structure (no sub-syllabic constituents) Some previous attempts • See, e.g., Myers (2015) for a recent overview: • Rhyming • Phonotactics (static phonology) • Language game/syllable manipulation experiments • Acceptability judgment tasks • Speech errors • First language acquisition data • Acoustic measurements Puzzling diversity of results in the literature • Those conflicting results support either one of the following interpretations: • Part of the onset: Consonant cluster or secondary articulation • Part of the rime: Onglide or “true” diphthong • Mixed: E.g., /w/ belongs to the onset vs. /j/ belongs to the rime • Flat structure What about articulation? • Regarding syllables such as <suan> ‘sour’ in Mandarin Chinese, • Chao (1934) comments -

《中国学术期刊文摘》赠阅 《中国学术期刊文摘》赠阅 CHINESE SCIENCE ABSTRACTS (Monthly, Established in 2006) Vol.10 No.1, 2015 (Sum No.103) Published on January 15, 2015

《中国学术期刊文摘》赠阅 《中国学术期刊文摘》赠阅 CHINESE SCIENCE ABSTRACTS (Monthly, Established in 2006) Vol.10 No.1, 2015 (Sum No.103) Published on January 15, 2015 Chinese Science Abstracts Competent Authority: China Association for Science and Technology Contents Supporting Organization: Department of Society Affairs and Academic Activities China Association for Science and Technology Hot Topic Sponsor: Science and Technology Review Publishing House Ebola Virus………………………………………………………………………1 Publisher: Science and Technology Review Publishing House High Impact Papers Editor-in-chief: CHEN Zhangliang Highly Cited Papers TOP5……………………………………………………11 Chief of the Staff/Deputy Editor-in-chief: Acoustics (11) Agricultural Engineering (12) Agriculture Dairy Animal Science (14) SU Qing [email protected] Agriculture Multidisciplinary (16) Agronomy (18) Allergy (20) Deputy Chief of the Staff/Deputy Editor-in-chief: SHI Yongchao [email protected] Anatomy Morphology (22) Andrology (24) Anesthesiology (26) Deputy Editor-in-chief: SONG Jun Architecture (28) Astronomy Astrophysics (29) Automation Control Systems (31) Deputy Director of Editorial Department: Biochemical Research Methods (33) Biochemistry Molecular Biology (34) WANG Shuaishuai [email protected] WANG Xiaobin Biodiversity Conservation (35) Biology (37) Biophysics (39) Biotechnology Applied Microbiology (41) Cell Biology (42) Executive Editor of this issue: WANG Shuaishuai Cell Tissue Engineering (43) Chemistry Analytical (45) Director of Circulation Department: Chemistry Applied (47) Chemistry Inorganic -

THE MEDIA's INFLUENCE on SUCCESS and FAILURE of DIALECTS: the CASE of CANTONESE and SHAAN'xi DIALECTS Yuhan Mao a Thesis Su

THE MEDIA’S INFLUENCE ON SUCCESS AND FAILURE OF DIALECTS: THE CASE OF CANTONESE AND SHAAN’XI DIALECTS Yuhan Mao A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Arts (Language and Communication) School of Language and Communication National Institute of Development Administration 2013 ABSTRACT Title of Thesis The Media’s Influence on Success and Failure of Dialects: The Case of Cantonese and Shaan’xi Dialects Author Miss Yuhan Mao Degree Master of Arts in Language and Communication Year 2013 In this thesis the researcher addresses an important set of issues - how language maintenance (LM) between dominant and vernacular varieties of speech (also known as dialects) - are conditioned by increasingly globalized mass media industries. In particular, how the television and film industries (as an outgrowth of the mass media) related to social dialectology help maintain and promote one regional variety of speech over others is examined. These issues and data addressed in the current study have the potential to make a contribution to the current understanding of social dialectology literature - a sub-branch of sociolinguistics - particularly with respect to LM literature. The researcher adopts a multi-method approach (literature review, interviews and observations) to collect and analyze data. The researcher found support to confirm two positive correlations: the correlative relationship between the number of productions of dialectal television series (and films) and the distribution of the dialect in question, as well as the number of dialectal speakers and the maintenance of the dialect under investigation. ACKNOWLEDGMENTS The author would like to express sincere thanks to my advisors and all the people who gave me invaluable suggestions and help. -

JIAO, WEI, D.M.A. Chinese and Western Elements in Contemporary

JIAO, WEI, D.M.A. Chinese and Western Elements in Contemporary Chinese Composer Zhou Long’s Works for Solo Piano Mongolian Folk-Tune Variations, Wu Kui, and Pianogongs. (2014) Directed by Dr. Andrew Willis. 136 pp. Zhou Long is a Chinese American composer who strives to combine traditional Chinese musical techniques with modern Western compositional ideas. His three piano pieces, Mongolian Folk Tune Variations, Wu Kui, and Pianogongs each display his synthesis of Eastern and Western techniques. A brief cultural, social and political review of China throughout Zhou Long’s upbringing will provide readers with a historical perspective on the influence of Chinese culture on his works. Study of Mongolian Folk Tune Variations will reveal the composers early attempts at Western structure and harmonic ideas. Wu Kui provides evidence of the composer’s desire to integrate Chinese cultural ideas with modern and dissonant harmony. Finally, the analysis of Pianogongs will provide historical context to the use of traditional Chinese percussion instruments and his integration of these instruments with the piano. Zhou Long comes from an important generation of Chinese composers including, Chen Yi and Tan Dun, that were able to leave China achieve great success with the combination of Eastern and Western ideas. This study will deepen the readers’ understanding of the Chinese cultural influences in Zhou Long’s piano compositions. CHINESE AND WESTERN ELEMENTS IN CONTEMPORARY CHINESE COMPOSER ZHOU LONG’S WORKS FOR SOLO PIANO MONGOLIAN FOLK-TUNE VARIATIONS, WU KUI, AND PIANOGONGS by Wei Jiao A Dissertation Submitted to the Faculty of the Graduate School at The University of North Carolina at Greensboro in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts Greensboro 2014 Approved by _________________________________ Committee Chair © 2014 Wei Jiao APPROVAL PAGE This dissertation has been approved by the following committee of the Faculty of The Graduate School at The University of North Carolina at Greensboro. -

C:\Users\Wind\Documents\Tencent Files\1322870972\Filerecv\IJES

The 8th Asia-Pacific Services Computing Conference APSCC 2014 http://grid.hust.edu.cn/apscc2014 Fujian West Lake Hotel Fuzhou, China December 4-6, 2014 Program Sponsored by Message from Program Chairs Welcome to APSCC 2014, the Eighth Asia-Pacific Services Computing Conference. APSCC 2014 is an important forum for researchers and industry practitioners to exchange information regarding advancements in the state of art and practice of Services Computing, as well as to identify emerging research topics and define the future directions of Services Computing. This year, APSCC attracted 205 submissions. The task of selecting papers involved more than 160 members from 13 countries/regions of the Program Committee. Almost all the papers were peer reviewed by at least three referees. After a thorough examination, 55 papers were accepted in the main track, which results in an acceptance ratio of 26.8%. Beside the main track, APSCC 2014 launched five special tracks, focusing on various emerging topics related to services computing. The papers submitted to each special track were examined by the separated PC committee. After a thorough examination, 34 papers were accepted by the five special tracks. The acceptance ratio of the special tracks is 30.4%. APSCC 2014 has a large number of additional components over the main conference, which greatly adds to its attractiveness. The conference starts with the keynote by Professor Carl K. Chang, 2004 President of IEEE Computer Society, and the winner of CCF Award of Excellent Contribution for Overseas Chinese, and the keynote by Dr. Zhen Liu, Director and Head of China Innovation Group, Microsoft Asia R&D Center. -

Norm Orientation of Chinese English: a Sociohistorical Perspective

Norm Orientation of Chinese English: a Sociohistorical Perspective Zhenjiang TIAN Norm Orientation of Chinese English: a Sociohistorical Perspective Inauguraldissertation zur Erlangung des akademischen Grades eines Doktors der Philosophie im Fachbereich Philosophie und Geisteswissenschaften (Institut für Englische Philologie) der Freien Unversität Berlin vorgelegt von: Zhenjiang Tian Berlin, November 2010 Erstgutachter: Prof. Dr. Gerhard Leitner Zweitgutachter: Prof. Dr. Wolfgang Zydatiß Datum der Disputation: 2. Februar 2011 Acknowledgements I have benefited from the support of numerous people in the process of writing this dissertation. First of all, I would like to express my heartfelt gratitude to my supervisor, Prof. Dr. Gerhard Leitner, who provided me a generous opportunity to do my doctoral study in Berlin and used the facilities of English Department of the Free University, offered me many precious suggestions on how to carry out the study and how to make the dissertation well-organized. I am also indebted to Prof. Dr. Wolfgang Zydatiss, who read through the drafts of this dissertation, gave me valuable guidance, and did most organizing work for me. Special thanks also go to Prof. Dr. Azirah Hashim from University of Malaya for her helpful insights into the subject. My thanks also go to Dr. Shi Xin for his valuable introduction in reading the history of China. Thanks extend to Prof. Mei Renyi and Dr. Lian Jichun, who accepted my interview for this dissertation. I would like to thank Prof. Song Jie and her colleagues and students from Beijing Capital Normal Universtiy, and my colleagues and students from Hulunbeir University for their patient cooperation in the response of the questionnaire.