Modular SIMD Arithmetic in Mathemagix Joris Van Der Hoeven, Grégoire Lecerf, Guillaume Quintin

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

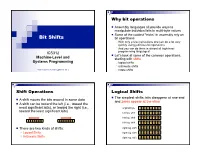

Bit Shifts Bit Operations, Logical Shifts, Arithmetic Shifts, Rotate Shifts

Why bit operations Assembly languages all provide ways to manipulate individual bits in multi-byte values Some of the coolest “tricks” in assembly rely on Bit Shifts bit operations With only a few instructions one can do a lot very quickly using judicious bit operations And you can do them in almost all high-level ICS312 programming languages! Let’s look at some of the common operations, Machine-Level and starting with shifts Systems Programming logical shifts arithmetic shifts Henri Casanova ([email protected]) rotate shifts Shift Operations Logical Shifts The simplest shifts: bits disappear at one end A shift moves the bits around in some data and zeros appear at the other A shift can be toward the left (i.e., toward the most significant bits), or toward the right (i.e., original byte 1 0 1 1 0 1 0 1 toward the least significant bits) left log. shift 0 1 1 0 1 0 1 0 left log. shift 1 1 0 1 0 1 0 0 left log. shift 1 0 1 0 1 0 0 0 There are two kinds of shifts: right log. shift 0 1 0 1 0 1 0 0 Logical Shifts right log. shift 0 0 1 0 1 0 1 0 Arithmetic Shifts right log. shift 0 0 0 1 0 1 0 1 Logical Shift Instructions Shifts and Numbers Two instructions: shl and shr The common use for shifts: quickly multiply and divide by powers of 2 One specifies by how many bits the data is shifted In decimal, for instance: multiplying 0013 by 10 amounts to doing one left shift to obtain 0130 Either by just passing a constant to the instruction multiplying by 100=102 amounts to doing two left shifts to obtain 1300 Or by using whatever -

A Cross-Platform Programmer's Calculator

– Independent Work Report Fall, 2015 – A Cross-Platform Programmer’s Calculator Brian Rosenfeld Advisor: Robert Dondero Abstract This paper details the development of the first cross-platform programmer’s calculator. As users of programmer’s calculators, we wanted to address limitations of existing, platform-specific options in order to make a new, better calculator for us and others. And rather than develop for only one- platform, we wanted to make an application that could run on multiple ones, maximizing its reach and utility. From the start, we emphasized software-engineering and human-computer-interaction best practices, prioritizing portability, robustness, and usability. In this paper, we explain the decision to build a Google Chrome Application and illustrate how using the developer-preview Chrome Apps for Mobile Toolchain enabled us to build an application that could also run as native iOS and Android applications [18]. We discuss how we achieved support of signed and unsigned 8, 16, 32, and 64-bit integral types in JavaScript, a language with only one numerical type [15], and we demonstrate how we adapted the user interface for different devices. Lastly, we describe our usability testing and explain how we addressed learnability concerns in a second version. The end result is a user-friendly and versatile calculator that offers value to programmers, students, and educators alike. 1. Introduction This project originated from a conversation with Dr. Dondero in which I expressed an interest in software engineering, and he mentioned a need for a good programmer’s calculator. Dr. Dondero uses a programmer’s calculator while teaching Introduction to Programming Systems at Princeton (COS 217), and he had found that the pre-installed Mac OS X calculator did not handle all of his use cases. -

Bitwise Operators

Logical operations ANDORNOTXORAND,OR,NOT,XOR •Loggpical operations are the o perations that have its result as a true or false. • The logical operations can be: • Unary operations that has only one operand (NOT) • ex. NOT operand • Binary operations that has two operands (AND,OR,XOR) • ex. operand 1 AND operand 2 operand 1 OR operand 2 operand 1 XOR operand 2 1 Dr.AbuArqoub Logical operations ANDORNOTXORAND,OR,NOT,XOR • Operands of logical operations can be: - operands that have values true or false - operands that have binary digits 0 or 1. (in this case the operations called bitwise operations). • In computer programming ,a bitwise operation operates on one or two bit patterns or binary numerals at the level of their individual bits. 2 Dr.AbuArqoub Truth tables • The following tables (truth tables ) that shows the result of logical operations that operates on values true, false. x y Z=x AND y x y Z=x OR y F F F F F F F T F F T T T F F T F T T T T T T T x y Z=x XOR y x NOT X F F F F T F T T T F T F T T T F 3 Dr.AbuArqoub Bitwise Operations • In computer programming ,a bitwise operation operates on one or two bit patterns or binary numerals at the level of their individual bits. • Bitwise operators • NOT • The bitwise NOT, or complement, is an unary operation that performs logical negation on each bit, forming the ones' complement of the given binary value. -

Bits, Bytes, and Integers Today: Bits, Bytes, and Integers

Bits, Bytes, and Integers Today: Bits, Bytes, and Integers Representing information as bits Bit-level manipulations Integers . Representation: unsigned and signed . Conversion, casting . Expanding, truncating . Addition, negation, multiplication, shifting . Summary Representations in memory, pointers, strings Everything is bits Each bit is 0 or 1 By encoding/interpreting sets of bits in various ways . Computers determine what to do (instructions) . … and represent and manipulate numbers, sets, strings, etc… Why bits? Electronic Implementation . Easy to store with bistable elements . Reliably transmitted on noisy and inaccurate wires 0 1 0 1.1V 0.9V 0.2V 0.0V For example, can count in binary Base 2 Number Representation . Represent 1521310 as 111011011011012 . Represent 1.2010 as 1.0011001100110011[0011]…2 4 13 . Represent 1.5213 X 10 as 1.11011011011012 X 2 Encoding Byte Values Byte = 8 bits . Binary 000000002 to 111111112 0 0 0000 . Decimal: 010 to 25510 1 1 0001 2 2 0010 . Hexadecimal 0016 to FF16 3 3 0011 . 4 4 0100 Base 16 number representation 5 5 0101 . Use characters ‘0’ to ‘9’ and ‘A’ to ‘F’ 6 6 0110 7 7 0111 . Write FA1D37B16 in C as 8 8 1000 – 0xFA1D37B 9 9 1001 A 10 1010 – 0xfa1d37b B 11 1011 C 12 1100 D 13 1101 E 14 1110 F 15 1111 Example Data Representations C Data Type Typical 32-bit Typical 64-bit x86-64 char 1 1 1 short 2 2 2 int 4 4 4 long 4 8 8 float 4 4 4 double 8 8 8 long double − − 10/16 pointer 4 8 8 Today: Bits, Bytes, and Integers Representing information as bits Bit-level manipulations Integers . -

Integer Arithmetic and Undefined Behavior in C Brad Karp UCL Computer Science

Carnegie Mellon Integer Arithmetic and Undefined Behavior in C Brad Karp UCL Computer Science CS 3007 23rd January 2018 (lecture notes derived from material from Eddie Kohler, John Regehr, Phil Gibbons, Randy Bryant, and Dave O’Hallaron) 1 Carnegie Mellon Outline: Integer Arithmetic and Undefined Behavior in C ¢ C primitive data types ¢ C integer arithmetic § Unsigned and signed (two’s complement) representations § Maximum and minimum values; conversions and casts § Perils of C integer arithmetic, unsigned and especially signed ¢ Undefined behavior (UB) in C § As defined in the C99 language standard § Consequences for program behavior § Consequences for compiler and optimizer behavior § Examples § Prevalence of UB in real-world Linux application-level code ¢ Recommendations for defensive programming in C 2 Carnegie Mellon Example Data Representations C Data Type Typical 32-bit Typical 64-bit x86-64 char 1 1 1 signed short (default) 2 2 2 and int 4 4 4 unsigned variants long 4 8 8 float 4 4 4 double 8 8 8 pointer 4 8 8 3 Carnegie Mellon Portable C types with Fixed Sizes C Data Type all archs {u}int8_t 1 {u}int16_t 2 {u}int32_t 4 {u}int64_t 8 uintptr_t 4 or 8 Type definitions available in #include <stdint.h> 4 Carnegie Mellon Shift Operations ¢ Left Shift: x << y Argument x 01100010 § Shift bit-vector x left y positions << 3 00010000 – Throw away extra bits on left Log. >> 2 00011000 § Fill with 0’s on right Arith. >> 2 00011000 ¢ Right Shift: x >> y § Shift bit-vector x right y positions Argument 10100010 § Throw away extra bits on right x § Logical shift << 3 00010000 § Fill with 0’s on left Log. -

Episode 7.03 – Coding Bitwise Operations

Episode 7.03 – Coding Bitwise Operations Welcome to the Geek Author series on Computer Organization and Design Fundamentals. I’m David Tarnoff, and in this series, we are working our way through the topics of Computer Organization, Computer Architecture, Digital Design, and Embedded System Design. If you’re interested in the inner workings of a computer, then you’re in the right place. The only background you’ll need for this series is an understanding of integer math, and if possible, a little experience with a programming language such as Java. And one more thing. This episode has direct consequences for our code. You can find coding examples on the episode worksheet, a link to which can be found on the transcript page at intermation.com. Way back in Episode 2.2 – Unsigned Binary Conversion, we introduced three operators that allow us to manipulate integers at the bit level: the logical shift left (represented with two adjacent less-than operators, <<), the arithmetic shift right (represented with two adjacent greater-than operators, >>), and the logical shift right (represented with three adjacent greater-than operators, >>>). These special operators allow us to take all of the bits in a binary integer and move them left or right by a specified number of bit positions. The syntax of all three of these operators is to place the integer we wish to shift on the left side of the operator and the number of bits we wish to shift it by on the right side of the operator. In that episode, we introduced these operators to show how bit shifts could take the place of multiplication or division by powers of two. -

Openvms Linker Utility Manual

OpenVMS Linker Utility Manual Order Number: AA–PV6CD–TK April 2001 This manual describes the OpenVMS Linker utility. Revision/Update Information: This manual supersedes the OpenVMS Linker Utility Manual, Version 7.1 Software Version: OpenVMS Alpha Version 7.3 OpenVMS VAX Version 7.3 Compaq Computer Corporation Houston, Texas © 2001 Compaq Computer Corporation Compaq, VAX, VMS, and the Compaq logo Registered in U.S. Patent and Trademark Office. OpenVMS is a trademark of Compaq Information Technologies Group, L.P. in the United States and other countries. UNIX is a trademark of The Open Group. All other product names mentioned herein may be the trademarks or registered trademarks of their respective companies. Confidential computer software. Valid license from Compaq required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license. Compaq shall not be liable for technical or editorial errors or omissions contained herein. The information in this document is provided "as is" without warranty of any kind and is subject to change without notice. The warranties for Compaq products are set forth in the express limited warranty statements accompanying such products. Nothing herein should be construed as constituting an additional warranty. ZK4548 The Compaq OpenVMS documentation set is available on CD–ROM. This document was prepared using VAX DOCUMENT Version 2.1. Contents Preface ............................................................ xi Part I Linker Utility Description 1 Introduction 1.1 Overview . ................................................ 1–1 1.1.1 Linker Functions . -

VAX MACRO and Instruction Set Reference Manual

VAX MACRO and Instruction Set Reference Manual Order Number: AA–PS6GD–TE April 2001 This document describes the features of the VAX MACRO instruction set and assembler. It includes a detailed description of MACRO directives and instructions, as well as information about MACRO source program syntax. Revision/Update Information: This manual supersedes the VAX MACRO and Instruction Set Reference Manual, Version 7.1 Software Version: OpenVMS VAX Version 7.3 Compaq Computer Corporation Houston, Texas © 2001 Compaq Computer Corporation Compaq, VAX, VMS, and the Compaq logo Registered in U.S. Patent and Trademark Office. OpenVMS is a trademark of Compaq Information Technologies Group, L.P. in the United States and other countries. All other product names mentioned herein may be trademarks of their respective companies. Confidential computer software. Valid license from Compaq required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor’s standard commercial license. Compaq shall not be liable for technical or editorial errors or omissions contained herein. The information in this document is provided "as is" without warranty of any kind and is subject to change without notice. The warranties for Compaq products are set forth in the express limited warranty statements accompanying such products. Nothing herein should be construed as constituting an additional warranty. ZK4515 The Compaq OpenVMS documentation set is available on CD-ROM. This document was prepared using DECdocument, Version 3.3-1b. Contents Preface ............................................................ xv VAX MACRO Language 1 Introduction 2 VAX MACRO Source Statement Format 2.1 Label Field . -

Textbook Section 6.1 Draft

Chapter Six A Computing Machine 6.1 Representing Information . 810 6.2 TOY Machine. 842 6.3 Machine Language Programming . 860 6.4 Simulator . 910 UR GOAL IN THIS CHAPTER IS to show you how simple the computer that you’re Ousing really is. We will describe in detail a simple imaginary machine that has many of the characteristics of real processors at the heart of the computational devices that surround us. You may be surprised to learn that many, many machines share these same properties, even some of the very first computers that were developed. Accordingly, we are able to tell the story in historical context. Imagine a world without comput- ers, and what sort of device might be received with enthusiasm, and that is not far from what we have! We tell out story from the standpoint of scientific computing— there is an equally fascinating story from the standpoint of commercial computing that we touch on just briefly. Next, our aim is to convince you that the basic concepts and constructs that we covered in Java programming are not so difficult to implement on a simple machine, using its own machine language. We will consider in detail conditionals, loops, functions, arrays, and linked structures. Since these are the same basic tools that we examined for Java, it follows that several of the computational tasks that we addressed in the first part of this book are not difficult to address at a lower level. This simple machine is a link on the continuum between your computer and the actual hardware circuits that change state to reflect the action of your programs. -

Fall 2014 Course Schedule

Fall 2014 Course Schedule TPL011: Introduction to C Programming ................................................................................................................................ 2 TPL036: Introduction to Ruby and Rails ................................................................................................................................. 2 TPL064: An Introduction to Computer Vision and Automated Object Recognition ................................................................ 3 TPL103: Introduction to Intel x86-64 ....................................................................................................................................... 4 TPL109: Introduction to ARM (Advanced/Acorn RISC Machine) Architecture & Software Systems ..................................... 5 TPL465: Intermediate Intel x86: Architecture, Assembly, and Applications ........................................................................... 6 TPL477: Introduction to Android Forensics and Security Testing ........................................................................................... 8 TPL482: JSON and JSON Schema ........................................................................................................................................ 9 TST414: Introduction to Bayesian Data Analysis.................................................................................................................. 10 TSV062: Privacy Engineering .............................................................................................................................................. -

Binary Arithmetic and Bit Operations

3 BINARY ARITHMETIC AND BIT OPERATIONS Understanding how computers represent data in binary is a prerequisite to writing software that works well on those computers. Of equal importance, of course, is under- standing how computers operate on binary data. Exploring arithmetic, logical, and bit operations on binary data is the purpose of this chapter. 3.1 Arithmetic Operations on Binary and Hexadecimal Numbers Because computers use binary representation, programmers who write great code often have to work with binary (and hexadecimal) values. Often, when writing code, you may need to manually operate on two binary values in order to use the result in your source code. Although calculators are available to compute such results, you should be able to perform simple arithmetic operations on binary operands by hand. Write Great Code www.nostarch.com Hexadecimal arithmetic is sufficiently painful that a hexadecimal calculator belongs on every programmer’s desk (or, at the very least, use a software-based calculator that supports hexadecimal operations, such as the Windows calculator). Arithmetic operations on binary values, however, are actually easier than decimal arithmetic. Knowing how to manually compute binary arithmetic results is essential because several important algorithms use these operations (or variants of them). Therefore, the next several subsections describe how to manually add, subtract, multiply, and divide binary values, and how to perform various logical operations on them. 3.1.1 Adding Binary Values Adding two binary values is easy; there are only eight rules to learn. (If this sounds like a lot, just realize that you had to memorize approximately 200 rules for decimal addition!) Here are the rules for binary addition: 0 + 0 = 0 0 + 1 = 1 1 + 0 = 1 1 + 1 = 0 with carry Carry + 0 + 0 = 1 Carry + 0 + 1 = 0 with carry Carry + 1 + 0 = 0 with carry Carry + 1 + 1 = 1 with carry Once you know these eight rules you can add any two binary values together. -

VSI Openvms VAX MACRO and Instruction Set Reference Manual

VSI OpenVMS VAX MACRO and Instruction Set Reference Manual Document Number: DO-VMACRM-01A Publication Date: April 2019 This document describes the features of the VAX MACRO instruction set and assembler. It includes a detailed description of MACRO directives and instructions, as well as information about MACRO source program syntax. Revision Update Information: This is a new manual. Operating System and Version: OpenVMS VAX Version 7.3 VMS Software, Inc., (VSI) Bolton, Massachusetts, USA VAX MACRO and Instruction Set Reference Manual Copyright © 2019 VMS Software, Inc. (VSI), Bolton, Massachusetts, USA Legal Notice Confidential computer software. Valid license from VSI required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. The information contained herein is subject to change without notice. The only warranties for VSI products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. VSI shall not be liable for technical or editorial errors or omissions contained herein. HPE, HPE Integrity, HPE Alpha, and HPE Proliant are trademarks or registered trademarks of Hewlett Packard Enterprise. Intel, Itanium, and IA-64 are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. The VSI OpenVMS documentation set is available on CD. ii VAX MACRO and Instruction Set Reference Manual Preface .................................................................................................................................... xi 1. About VSI ..................................................................................................................... xi 2. Intended Audience ......................................................................................................... xi 3.