Local Independence and Residual Covariance: a Study of Olympic Figure Skating Ratings

Recommended publications

The Ukrainian Weekly 1999, No.17

www.ukrweekly.com. INSIDE: • Switzerland to seek Lazarenko’s extradition – page 2. • Senators question State Department’s reorganization – page 3. • Dynamo out of the running – page 11. Published by the Ukrainian National Association Inc., a fraternal non-profit association. THE UKRAINIAN WEEKLY, Vol. LXVII, No. 17, SUNDAY, APRIL 25, 1999, $1.25/$2 in Ukraine.

Balkan crisis in forefront on eve of NATO summit. By Roman Woronowycz, Kyiv Press Bureau. KYIV – Viktor Chernomyrdin, Russia’s newly appointed special envoy on the Balkan crisis, met with Ukraine’s President Leonid Kuchma on April 20, part of a flurry of political activity in Kyiv regarding the Balkan crisis on the eve of NATO’s 50th anniversary summit to be held in Washington. Unlike Russia, which did not plan to attend the Washington summit as a protest against the bombing of rump Yugoslavia by NATO (at the last minute it was reported that Russia would attend), Ukraine will attend and has indicated it is taking steps to …

Hillary Rodham Clinton honored with CCRF achievement award. By Irene Jarosewich. NEW YORK – America’s First Lady, Hillary Rodham Clinton, was honored with the Children of Chornobyl’s Relief Fund Lifetime Humanitarian Achievement Award on April 19 at The Ukrainian Institute of America for her commitment to improving the health of women and children in Ukraine, as well as around the world. Referring to a poem by American poet Maya Angelou, titled “A Phenomenal Woman,” CCRF’s Executive Director Nadia Matkiwsky introduced the first lady as “a woman who stands on her own achievements, a woman of vision and compassion and intellectual strength – indeed a phenomenal woman.” Noting that “a nation without healthy children is a nation without a future,” Mrs. …

Copy of Will and Till

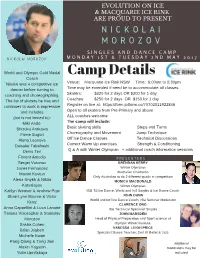

EVOLUTION ON ICE & MACQUARIE ICE RINK ARE PROUD TO PRESENT: NICKOLAI MOROZOV SINGLES AND DANCE CAMP, MONDAY 1ST & TUESDAY 2ND MAY 2017. World and Olympic Gold Medal Coach Nikolai was a competitive ice dancer before turning to coaching and choreographing. The list of skaters he has worked and continues to work with is impressive and includes (but is not limited to): Miki Ando, Shizuka Arakawa, Fumie Suguri, Alena Leonova, Daisuke Takahashi, Denis Ten, Florent Amodio, Sergei Voronov, Javier Fernandez, Maxim Kovtun, Alena Ilinykh & Nikita Katsalapov, Kaitlyn Weaver & Andrew Poje, Shae-Lynn Bourne & Victor Kraatz, Anna Cappellini & Luca Lanotte, Tatiana Volosozhar & Stanislav Morozov, and Sasha Cohen.

Camp Details: Venue: Macquarie Ice Rink, NSW. Time: 6:00am to 2:30pm (time may be extended if need be to accommodate all classes). Skaters: $320 for 2 days OR $200 for 1 day. Coaches: $250 for 2 days OR $150 for 1 day. Register online at: https://form.jotform.co/70702011922846. Open to all skaters from Pre-Primary and above. ALL coaches welcome.

The camp will include: basic skating skills; steps and turns; choreography and movement; jump technique; off-ice dance classes; technical discussions; correct warm-up exercises; strength & conditioning; Q & A with Winter Olympian, plus additional coach information sessions.

PRESENTERS: BRENDAN KERRY, Winter Olympian, Australian Champion, the only Australian to do 2 different quads in competition. MONICA MACDONALD, Winter Olympian, ISU TS Ice Dance, World and Int’l Singles & Ice Dance Coach. JOHN DUNN, World and Int’l Ice Dance Coach, ISU Seminar Moderator. CLARENCE ONG, ISU Technical Specialist Singles. JOHN MARSDEN, Head of Physical Preparation and Sport Science at Olympic Winter Institute.

Here They Come ... Again

FALLEN: Venice loses to Winter Haven, 3-0, in Class 6A state semifinals (SPORTS). THURSDAY, MAY 22, 2014, 75¢, HERALDTRIBUNE.COM. SUN & SUDS: Bradenton and Venice fests celebrate the Sunshine State’s craft brews (TICKET).

Progress for plan south of Ranch. SARASOTA: Project poised to advance, with help of growth-plan amendments. By JESSIE VAN BERKEL. SARASOTA COUNTY – The design and permitting of a long-awaited community south of Lakewood Ranch will soon begin, along with the addition of roads that could help alleviate traffic woes near I-75 and University Parkway. The Villages of Lakewood Ranch South will add 5,144 homes (nearly 40 percent affordable housing) and 390,000 square feet of commercial, office and retail space to Sarasota County. The county approved the project several years ago, but the developer, Schroeder-Manatee Ranch Inc., has since sought amendments to its transportation agreement with Sarasota County and the county’s growth requirements. On Wednesday, the Sarasota County Commission granted the builders several changes. “This is the most significant milestone in this long, winding road of an effort,” said Todd Pokrywa, a Schroeder-Manatee Ranch vice president.

Here they come ... again. Census data shows populations are rising anew in every city in the region as the job seekers and retirees return. By ZAC ANDERSON. Every city in Sarasota and Manatee counties grew in population last year according to new … Growth rates in North Port and Bradenton increased further in 2013, while Sarasota’s growth dipped slightly to just below 1 percent and Venice held steady with a 1.1 percent …

Photo caption: Hundreds attend a recent Main Street Live event in downtown Bradenton. Mayor Wayne Poston attributed his city’s population increase to strong building activity at large developments annexed years ago and steady in-fill development. (H-T ARCHIVE / SEPTEMBER 2013)

P20 Layout 1

Stenson wins in Dubai (page 17). Bolt and Fraser-Pryce win 2013 World Athlete awards (page 18). MONDAY, NOVEMBER 18, 2013. Vettel on pole as Red Bull sweep front row (page 18).

Photo caption: BELGRADE: Czech Republic’s team members hold the Davis Cup after winning the last singles match of the Davis Cup tennis final between Czech Republic and Serbia at the Kombank Arena in Belgrade. (AFP)

Czechs beat Serbia to retain Davis Cup. BELGRADE: The Czech Republic retained the Davis Cup after Radek Stepanek beat outclassed Serbian youngster Dusan Lajovic in the decisive fifth rubber of the final in straight sets yesterday. The 44th-ranked, 34-year-old Stepanek beat the 23-year-old Lajovic, world number 117, 6-3, 6-1, 6-1 in under … emotions were “mixed” as 2010 champions Serbia “tried to do our best” despite being weakened considerably. Lajovic replaced 36th-ranked Janko Tipsarevic, out with a heel injury, and Serbia also missed 76th-ranked Viktor Troicki over a doping ban. Lajovic, who has lost all four ATP-level matches he has … from a noisy group of Czechs in the sold-out arena. “I controlled the rubber except in the first game, and I played in the best form of my life the whole weekend,” said Stepanek. Lajovic admitted he found it hard to predict Stepanek’s moves. “I think it was his biggest advantage in this match.” … point for a 6-4 lead. He went on to take the second set in a tie-break after letting off some steam and destroying a racquet, and before taking the third set in style, 6-2. Berdych admitted that Djokovic’s victory was deserved. “I tried to hold on to him from the beginning to the end but it wasn’t enough,” he said.

Script Crisis and Literary Modernity in China, 1916-1958 Zhong Yurou

Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Graduate School of Arts and Sciences, COLUMBIA UNIVERSITY, 2014. © 2014 Yurou Zhong. All rights reserved. ABSTRACT: This dissertation examines the modern Chinese script crisis in twentieth-century China. It situates the Chinese script crisis within the modern phenomenon of phonocentrism – the systematic privileging of speech over writing. It depicts the Chinese experience as an integral part of a worldwide crisis of non-alphabetic scripts in the nineteenth and twentieth centuries. It places the crisis of Chinese characters at the center of the making of modern Chinese language, literature, and culture. It investigates how the script crisis and the ensuing script revolution intersect with significant historical processes such as the Chinese engagement in the two World Wars, national and international education movements, the Communist revolution, and national salvation. Beginning in the late nineteenth century, the Chinese writing system was targeted as the roadblock to literacy, science and democracy. Chinese and foreign scholars took the abolition of Chinese script to be the condition of modernity. A script revolution was launched as the Chinese response to the script crisis. This dissertation traces the beginning of the crisis to 1916, when Chao Yuen Ren published his English article “The Problem of the Chinese Language,” sweeping away all theoretical oppositions to alphabetizing the Chinese script.
This was followed by two major movements dedicated to the task of eradicating Chinese characters: first, the Chinese Romanization Movement, spearheaded by a group of Chinese and international scholars and quickly endorsed by the Guomindang (GMD) Nationalist government in the 1920s; second, the dissident Chinese Latinization Movement, initiated in the Soviet Union and championed by the Chinese Communist Party (CCP) in the 1930s.

OLYMPIC MEDALISTS IN FIGURE SKATING (1908-) 1924 - 2002, Compiled by Magne Teigen, NSF/SG

Women, singles

| Year | Venue | Olympic champion | Silver | Bronze |
|---|---|---|---|---|
| 1908 | London | Madge Syers GBR | Elsa Rendschmidt GER | Dorothy Greenhough-Smith GBR |
| 1920 | Antwerp | Magda Julin SWE | Svea Norén SWE | Theresa Weld USA |
| 1924 | Chamonix | Herma Planck-Szabo AUT | Beatrix Loughran USA | Ethel Muckelt GBR |
| 1928 | St. Moritz | Sonja Henie NOR | Fritzi Burger AUT | Beatrix Loughran USA |
| 1932 | Lake Placid | Sonja Henie NOR | Fritzi Burger AUT | Maribel Vinson USA |
| 1936 | Garmisch-Partenkirchen | Sonja Henie NOR | Cecilia Colledge GBR | Vivi-Anne Hultén SWE |
| 1948 | St. Moritz | Barbara Ann Scott CAN | Eva Pawlik AUT | Jeannette Altwegg GBR |
| 1952 | Oslo | Jeannette Altwegg GBR | Tenley Albright USA | Jacqueline du Bief FRA |
| 1956 | Cortina d’Ampezzo | Tenley Albright USA | Carol Heiss USA | Ingrid Wendl AUT |
| 1960 | Squaw Valley | Carol Heiss USA | Sjoukje Dijkstra NED | Barbara Ann Roles USA |
| 1964 | Innsbruck | Sjoukje Dijkstra NED | Regine Heitzer AUT | Petra Burka CAN |
| 1968 | Grenoble | Peggy Fleming USA | Gabriele Seyfert GDR | Hana Mašková TCH |
| 1972 | Sapporo | Beatrix Schuba AUT | Karen Magnussen CAN | Janet Lynn USA |
| 1976 | Innsbruck | Dorothy Hamill USA | Dianne de Leeuw NED | Christine Errath GDR |
| 1980 | Lake Placid | Anett Pötzsch GDR | Linda Fratianne USA | Dagmar Lurz FRG |
| 1984 | Sarajevo | Katarina Witt GDR | Rosalyn Sumners USA | Kira Ivanova URS |
| 1988 | Calgary | Katarina Witt GDR | Elizabeth Manley CAN | Debi Thomas USA |
| 1992 | Albertville | Kristi Yamaguchi USA | Midori Ito JPN | Nancy Kerrigan USA |
| 1994 | Lillehammer | Oksana Bayul UKR | Nancy Kerrigan USA | Chen Lu CHN |
| 1998 | Nagano | Tara Lipinski USA | Michelle Kwan USA | Chen Lu CHN |
| 2002 | Salt Lake City | Sarah Hughes USA | Irina Slutskaya RUS | Michelle Kwan USA |

Men, singles

| Year | Venue | Olympic champion | Silver | Bronze |
|---|---|---|---|---|
| 1908 | London | Ulrich Salchow SWE | Richard Johansson SWE | Per Thorén SWE |
| 1920 | Antwerp | Gillis Grafström SWE | Andreas Krogh NOR | Martin Stixrud NOR |
| 1924 | Chamonix | Gillis Grafström SWE | Willy Böckl AUT | Georges Gautschi SUI |
| 1928 | St. Moritz | … | … | … |

The BG News February 11, 2002

Bowling Green State University, ScholarWorks@BGSU. BG News (Student Newspaper), University Publications, 2-11-2002. The BG News February 11, 2002. Bowling Green State University. Follow this and additional works at: https://scholarworks.bgsu.edu/bg-news. Recommended Citation: Bowling Green State University, “The BG News February 11, 2002” (2002). BG News (Student Newspaper). 6913. https://scholarworks.bgsu.edu/bg-news/6913. This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 License. This Article is brought to you for free and open access by the University Publications at ScholarWorks@BGSU. It has been accepted for inclusion in BG News (Student Newspaper) by an authorized administrator of ScholarWorks@BGSU.

THE BG NEWS, Bowling Green State University, MONDAY, February 11, 2002. Independent student press, Volume 93, Issue 19. www.bgnews.com. Sunny, high 35, low 28. Marshall Mashed: Falcons run through Thundering Herd 83-60 (page 9).

Former Falcons light flame. By Dan Nied, THE BG NEWS. As Mike Eruzione took the torch at the base of the Olympic cauldron Friday, he summoned the rest of the 1980 USA Olympic hockey team to join him in lighting the world’s most famous flame. Out walked two pieces of Bowling Green history ready to add to their already rich saga …

Martial arts academy in BG. By Kara Hull, THE BG NEWS. Bowling Green pre-med student, soccer player, business owner, National Guardsman, Haitian Olympic team member: any of these roles can fit senior … Remy on any given day. … of the Korean Martial Arts Club … and expects to coordinate the club with Remy’s Martial Arts Academy. “He has a great support group on campus, and his academy will help to bring the martial arts …

CDOS 77 Is Pleased to Present, at the Start of 2015, Its “100% bleu” Guide

The Comité Départemental Olympique et Sportif de Seine-et-Marne (CDOS 77) is pleased to present, at the start of 2015, its “100% bleu” guide. Launched in 2011, this document brings together all the athletes from Seine-et-Marne selected for the French national team, whether for the European or World Championships or for variants of the Olympic Games (such as the Mediterranean Games, the World Games or the Jeux de la Francophonie). Our goal is simple: to honor everyone who has proudly carried the colors of the 77, so that people can get to know them better and “support” them better. In this “100% bleu”, the champions are presented town by town, with close-ups on the athletes likely to be selected for the upcoming 2016 Olympic Games (recognizable by this logo). In addition, another visual identifies the athletes who have received the Trophée de l’Espoir, an award that will celebrate its quarter-century in 2015. We extend our congratulations to the champions, their clubs and their towns, along with our encouragement for 2015. Vincent KROPF, Vice-President of CDOS 77, in charge of promotion and communication.

Guide 100% bleu 2014 – CDOS 77. Town sections: Avon; Avon-Fontainebleau; Bussy/Guermantes; Bussy St Georges; Cely en Biere; Champs sur Marne; Chevry-Grisy-Pontault; Claye Souilly; Combs la Ville; Dammarie les Lys; Fontainebleau; Fontenay Tresigny; Gravon; Grez sur Loing.

Olympic Vision Becoming Reality

CHINA DAILY | HONG KONG EDITION, Thursday, January 2, 2020 | 11. SPORTS: YEAR IN REVIEW – WINTER OLYMPICS. With many venues already in operation, 2022 organizers can reflect on a highly productive 2019.

OLYMPIC VISION BECOMING REALITY. By SUN XIAOCHEN. With venues taking shape and test events underway, China’s preparations for the 2022 Winter Olympics made huge strides in 2019 as organizers’ focus shifted from construction to operation. The impressive progress is perhaps best illustrated at the National Aquatics Center, the 2008 Summer Olympics venue known as the Water Cube that has recently been transformed into an “Ice Cube” following a yearlong renovation project. Now, four ice sheets lie over the center’s original main pool, which has been filled by steel structures to …

… lion people in winter sports and recreation in the buildup to 2022 and beyond. Landmark 2008 Olympics venue the National Stadium, aka the Bird’s Nest, has switched into winter mode, providing entry-level curling, skating and ice hockey activities for the public as part of the second “Meet in 2022” Ice and Snow Cultural Festival. The renovated Bird’s Nest will host the opening and closing ceremonies for the 2022 Games. China plans to build 650 skating rinks and 800 ski resorts by 2022, up from 334 and 738 respectively in June 2018, to help facilitate the mass …

Photo captions: Top: The new Big Air ramp at Beijing’s Shougang Industrial Park lights up the night skyline. Left: Youngsters enjoy the fun of skating during the Ice and Snow Festival outside the National Stadium in Beijing on Dec 26.

PowerPoint Presentation

3150 FICTS PROJECTIONS FOR 4110 HOURS, 16 FESTIVALS IN 5 CONTINENTS. 35th MILANO INTERNATIONAL FICTS FEST 2017, 15-20 November 2017.

THE NUMBERS: 4110 hours of video in the archive; 3150 works in the archive; 96 sports disciplines; 150 works selected each year from about a thousand entries; 30 absolute, European and world premieres each year; 50 sports disciplines on screen.

Screenings: the FICTS Archive holds more than 4110 hours of sports footage, an invaluable heritage of sports images that FICTS disseminates and makes available to the community and to institutions, which can consult it for duplication, historical research, retrospectives, festivals, award ceremonies, events, press conferences, TV programs, etc.

THE VIDEO ARCHIVE: 4110 hours of sports films. Football, tennis, boxing, cycling, extreme sports, swimming, basketball, etc. are some of the 96 sports disciplines available to users.

SCREENINGS IN SCHOOLS: “Culture through images”, sports education through images in the primary and secondary schools of Milan. An initiative aimed at spreading sports culture, fair play and Olympic values among young people. It consists of presenting videos on the importance of sport as a social investment and as a tool for the education, training and inclusion of its protagonists, highlighting the formative and educational side of sport.

“OLYMPIC IMAGES - OLYMPIC VALUES”. Olympic emotions through the world: the project represents an opportunity to spread the educational and formative values of the Olympic and Paralympic Movement through images, involving young people and the entire world population.

One by One, the Skaters Glide Into Their Starting

SHORT TRACK. ONE BY ONE, THE SKATERS GLIDE INTO THEIR STARTING POSITIONS, SHAKING THE LAST JITTERS FROM THEIR POWERFUL LEGS AS THE ANNOUNCER CALLS THEIR NAMES. ON THE LINE, THEY CROUCH, MOTIONLESS, BALANCED ONLY ON THE PINPOINT TIP OF ONE SKATE AND THE RAZOR-THIN BLADE OF THE OTHER, WHICH THEY’VE WEDGED INTO THE ICE PARALLEL TO THE START LINE FOR MAXIMUM LEVERAGE. THE CROWD HUSHES. SKATES GLINT. MUSCLES TENSE. THIS IS HOW ALL SHORT TRACK RACES BEGIN. BUT THE WAY IN WHICH THIS ONE, THE men’s 1000 m final, ends is stunning, even in the fast, furious and notoriously unpredictable world of short track speed skating. Starting on the inside is Canadian and two-time Olympian Mathieu Turcotte. Next to him is Ahn Hyun-Soo, 16-year-old junior world champion from South Korea; then American Apolo Anton Ohno, a rebellious teenager turned skating dynamo. …

… won by staving off Bulgaria’s Evgenia Radanova, who won silver. Behind Radanova was China’s Wang Chunlu, who, with a bronze medal, shared in her country’s glory, a moment that coincided with the Chinese New Year. “We want to take this back to China as the best gift ever,” said Wang. “This has been a dream for two generations,” said Yang Yang (A). “Happy New Year!” On February 20, the thrills and spills continued as competitors in the final round of the men’s …

Photo caption: Canada’s Marc Gagnon, the United States of America’s Apolo Anton Ohno and Korea’s Kim Dong-Sung jockey for the lead in the dramatic 1500 m final. (SHEILA METZNER)

Source: Bibliothèque du CIO / IOC Library

2014 Winter Olympic Competing Nations ALBANIA (ALB)

2014 Winter Olympic Competing Nations. We list below detailed historical Olympic information for every IOC Member Nation that has previously competed at the Olympic Winter Games and that will compete in Sochi, as of 27 January 2014. There appear to be 88 qualified NOCs that have met IF quota requirements as of 24 January and have accepted them (the previous record for a Winter Olympics is 82, set in 2010 at Vancouver). Unfortunately, after the reallocation of some quotas, only the skiing federation (FIS) has published the final quotas as of 26 January. We have tried to list below the sports for which each NOC has qualified, but with reallocations there is a small chance of minor differences in the final allocation by sport. There are seven nations that will compete in Sochi that have never before competed at the Olympic Winter Games: Dominica, Malta, Paraguay, Timor-Leste (East Timor), Togo, Tonga, and Zimbabwe. Their factsheets have been published previously on olympstats.com (see http://olympstats.com/2014/01/23/new-winter-olympic-nations-for-sochi/, which came out on 23 January). One problem nation is listed below: DPR Korea (North). They have not qualified any athletes for Sochi. They had the 1st and 2nd reserves for pairs figure skating, but those do not appear to have been chosen in the final reallocation of quota spots by the International Skating Union (ISU). However, yesterday (26 January), DPR Korea petitioned the IOC for redress to allow them to have Olympic athletes compete in Sochi. So they are included below, but it is unknown if they will compete.