Chapter 1: Data Storage

Recommended publications

-

Broadband/IP/Cloud Computing

Broadband/IP/Cloud Computing Presentation to the Colorado Telecommunications Association www.cellstream.com (c) 2011 CellStream, Inc. 1 www.cellstream.com (c) 2011 CellStream, Inc. 2 www.cellstream.com (c) 2011 CellStream, Inc. 3 www.cellstream.com (c) 2011 CellStream, Inc. 4 www.cellstream.com (c) 2011 CellStream, Inc. 5 Past vs. Present The Past – 20th Century The Present – 21st Century • Peer-to-Peer with broadcast • Many-to-Many with multicast • Mix of Analog and Digital • All media is digital – single • Producers supply transport Consumers • All media is connected • Peer-to-Peer is 1:1 • Many-to-Many is M:N o Traditional Telephone • Producers and Consumers • Broadcast is 1:N do both o Newspaper o CNN takes reports from Twitter o TV/Radio o End users send pictures and reports www.cellstream.com (c) 2011 CellStream, Inc. 6 Where are we in Phone Evolution? PHONE 1.0 PHONE 2.0 PHONE 3.0 Soft phone Your Phone Number Your Phone Number Your Phone Number represents where you represents you represents an IP Address – are (e.g. 972-747- regardless of where you independent of location 0300 is home, 214- are. and appliance you are 405-3708 is work) using (e.g. IP Phone, Cell Phone, PDA, Computer, Television, etc.) www.cellstream.com (c) 2011 CellStream, Inc. 7 Phone 3.X • Examples of Phone 3.0 are Skype, Google Talk, others on a PC. • Phone 3.1 – Skype on an iTouch • Phone 3.2 – Android OS from Google – a phone Centric OS that runs on cellular appliances • Phone 3.3 – Android OS from Google that runs on a Phone Appliance www.cellstream.com (c) 2011 CellStream, Inc. -

Etir Code Lists

eTIR Code Lists Code lists CL01 Equipment size and type description code (UN/EDIFACT 8155) Code specifying the size and type of equipment. 1 Dime coated tank A tank coated with dime. 2 Epoxy coated tank A tank coated with epoxy. 6 Pressurized tank A tank capable of holding pressurized goods. 7 Refrigerated tank A tank capable of keeping goods refrigerated. 9 Stainless steel tank A tank made of stainless steel. 10 Nonworking reefer container 40 ft A 40 foot refrigerated container that is not actively controlling temperature of the product. 12 Europallet 80 x 120 cm. 13 Scandinavian pallet 100 x 120 cm. 14 Trailer Non self-propelled vehicle designed for the carriage of cargo so that it can be towed by a motor vehicle. 15 Nonworking reefer container 20 ft A 20 foot refrigerated container that is not actively controlling temperature of the product. 16 Exchangeable pallet Standard pallet exchangeable following international convention. 17 Semi-trailer Non self propelled vehicle without front wheels designed for the carriage of cargo and provided with a kingpin. 18 Tank container 20 feet A tank container with a length of 20 feet. 19 Tank container 30 feet A tank container with a length of 30 feet. 20 Tank container 40 feet A tank container with a length of 40 feet. 21 Container IC 20 feet A container owned by InterContainer, a European railway subsidiary, with a length of 20 feet. 22 Container IC 30 feet A container owned by InterContainer, a European railway subsidiary, with a length of 30 feet. 23 Container IC 40 feet A container owned by InterContainer, a European railway subsidiary, with a length of 40 feet. -

Breaking Through the Myths to Reality: a Future-Proof View Of

Breaking Through the Myths to Reality A Future-Proof View of Fiber Optic Inspection and Cleaning Dear Viewer: As presented at BICSI® in February- 2018, this seminar has video and animations that Edward J. Forrest, Jr. are not available as a .pdf file. If you would like the RMS(RaceMarketingServices) original version, please contact BICSI or the author. Bringing Ideas Together Caution: This Presentation is going to be controversial! IT IS BASED ON 2,500 YEARS OF SCIENCE AND PRODUCT DEVELOPMENT 2 Fact OR FICTION of Fiber Optic Cleaning and Inspection This seminarWE WILL mayDISCUSS contradictAND currentDEFINE: trends ➢ There➢ Cleaningand are what OTHER you’ve is notWAYS been important…BESIDES taught. VIDEO ➢ ➢➢“Automatic99.9%Debris➢ ➢ExistingA “Reagent FiberonS Detection” CIENTIFICthe standards, Optic surface Grade” ConnectorR isEALITY of such“good Isopropanola fiber… as enough”.is optic a is IECconnector“Pass 61300Let’sanI NSPECTIONanythingeffective- Fail”separate- 3surface-35, is is bettereasy are Factsfiberto is “Best determine thantwoto from optic understand nothing!-dimensional Practice”Fiction cleaner What we’veTwoif the ( ALL-Dimensional connector) been taught/bought/soldand is Structure“clean” over the last 30+Myth yearsfrom may“diameter”. Scientific not be the same Reality thing! 3 Fact OR FICTION of Fiber Optic Cleaning and Inspection ➢ A Fiber Optic Connector is a Two-Dimensional Structure ➢ 99.9% “Reagent Grade” Isopropanol is an effective fiber optic cleaner ➢ Cleaning is not important… anything is better than nothing! ➢ There are OTHER WAYS BESIDES VIDEO INSPECTION to determine if the connector is “clean” ➢ Debris on the surface of a fiber optic connector surface is two-dimensional “diameter”. ➢ “Automatic Detection” is “good enough”. -

File Organization and Management Com 214 Pdf

File organization and management com 214 pdf Continue 1 1 UNESCO-NIGERIA TECHNICAL - VOCATIONAL EDUCATION REVITALISATION PROJECT-PHASE II NATIONAL DIPLOMA IN COMPUTER TECHNOLOGY FILE Organization AND MANAGEMENT YEAR II- SE MESTER I THEORY Version 1: December 2 2 Content Table WEEK 1 File Concepts... 6 Bit:... 7 Binary figure... 8 Representation... 9 Transmission... 9 Storage... 9 Storage unit... 9 Abbreviation and symbol More than one bit, trit, dontcare, what? RfC on trivial bits Alternative Words WEEK 2 WEEK 3 Identification and File File System Aspects of File Systems File Names Metadata Hierarchical File Systems Means Secure Access WEEK 6 Types of File Systems Disk File Systems File Systems File Systems Transactional Systems File Systems Network File Systems Special Purpose File Systems 3 3 File Systems and Operating Systems Flat File Systems File Systems according to Unix-like Operating Systems File Systems according to Plan 9 from Bell Files under Microsoft Windows WEEK 7 File Storage Backup Files Purpose Storage Primary Storage Secondary Storage Third Storage Out Storage Features Storage Volatility Volatility UncertaintyAbility Availability Availability Performance Key Storage Technology Semiconductor Magnetic Paper Unusual Related Technology Connecting Network Connection Robotic Processing Robotic Processing File Processing Activity 4 4 Technology Execution Program interrupts secure mode and memory control mode Virtual Memory Operating Systems Linux and UNIX Microsoft Windows Mac OS X Special File Systems Journalized File Systems Graphic User Interfaces History Mainframes Microcomputers Microsoft Windows Plan Unix and Unix-like operating systems Mac OS X Real-time Operating Systems Built-in Core Development Hobby Systems Pre-Emptification 5 5 WEEK 1 THIS WEEK SPECIFIC LEARNING OUTCOMES To understand: The concept of the file in the computing concept, field, character, byte and bits in relation to File 5 6 6 Concept Files In this section, we will deal with 
the concepts of the file and their relationship. -

IBM-IS-DCI-UR-Newsletter.Pdf

Monthly Newsletter Mar 2016 Monthly Journal on Technology Know-How of IBM’s Technology space Tech Updates on Mainframe Platform “Mainframe“ the IBM Dictionary Of Computing defines "mainframe" as "a large computer, in particular one to which other computers can be connected so that they can share facilities the mainframe provides (for example, a System/370 computing system to which personal computers are attached so that they can upload and download programs and data). The term “Mainframe “ usually refers to hardware only, namely, main storage, execution circuitry and peripheral units“ level, as opposed to trying to do it all from the System z platform. Did you know that mainframes and all other computers have two types of physical storage: Internal and external. Physical storage located on the mainframe processor itself. This is called processor storage, real storage or central storage; think of it as memory for the mainframe. Physical storage external to the mainframe, including storage on direct access devices, such as disk drives and tape drives. This storage is called paging storage or auxiliary storage. The primary difference between the two kinds of storage relates to the way in which it is accessed, as follows: Central storage is accessed synchronously with the processor. That is, the processor must wait while data is retrieved from central storage. Auxiliary storage is accessed asynchronously. The processor accesses auxiliary storage through an input/output (I/O) request, which is scheduled to run amid other work requests in the system. During an I/O request, the processor is free to execute other, unrelated work. -

Dictionary of Ibm & Computing Terminology 1 8307D01a

1 DICTIONARY OF IBM & COMPUTING TERMINOLOGY 8307D01A 2 A AA (ay-ay) n. Administrative Assistant. An up-and-coming employee serving in a broadening assignment who supports a senior executive by arranging meetings and schedules, drafting and coordinating correspondence, assigning tasks, developing presentations and handling a variety of other administrative responsibilities. The AA’s position is to be distinguished from that of the executive secretary, although the boundary line between the two roles is frequently blurred. access control n. In computer security, the process of ensuring that the resources of a computer system can be accessed only by authorized users in authorized ways. acknowledgment 1. n. The transmission, by a receiver, of acknowledge characters as an affirmative response to a sender. 2. n. An indication that an item sent was received. action plan n. A plan. Project management is never satisfied by just a plan. The only acceptable plans are action plans. Also used to mean an ad hoc short-term scheme for resolving a specific and well defined problem. active program n. Any program that is loaded and ready to be executed. active window n. The window that can receive input from the keyboard. It is distinguishable by the unique color of its title bar and window border. added value 1. n. The features or bells and whistles (see) that distinguish one product from another. 2. n. The additional peripherals, software, support, installation, etc., provided by a dealer or other third party. administrivia n. Any kind of bureaucratic red tape or paperwork, IBM or not, that hinders the accomplishment of one’s objectives or goals. -

The 2016 SNIA Dictionary

A glossary of storage networking data, and information management terminology SNIA acknowledges and thanks its Voting Member Companies: Cisco Cryptsoft DDN Dell EMC Evaluator Group Fujitsu Hitachi HP Huawei IBM Intel Lenovo Macrosan Micron Microsoft NetApp Oracle Pure Storage Qlogic Samsung Toshiba Voting members as of 5.23.16 Storage Networking Industry Association Your Connection Is Here Welcome to the Storage Networking Industry Association (SNIA). Our mission is to lead the storage industry worldwide in developing and promoting standards, technologies, and educational services to empower organizations in the management of information. Made up of member companies spanning the global storage market, the SNIA connects the IT industry with end-to-end storage and information management solutions. From vendors, to channel partners, to end users, SNIA members are dedicated to providing the industry with a high level of knowledge exchange and thought leadership. An important part of our work is to deliver vendor-neutral and technology-agnostic information to the storage and data management industry to drive the advancement of IT technologies, standards, and education programs for all IT professionals. For more information visit: www.snia.org The Storage Networking Industry Association 4360 ArrowsWest Drive Colorado Springs, Colorado 80907, U.S.A. +1 719-694-1380 The 2016 SNIA Dictionary A glossary of storage networking, data, and information management terminology by the Storage Networking Industry Association The SNIA Dictionary contains terms and definitions related to storage and other information technologies, and is the storage networking industry's most comprehensive attempt to date to arrive at a common body of terminology for the technologies it represents. -

Wiley Networking Council Series

Y L F M A E T Brought to you by Team FLY® Team-Fly® Page i High-Speed Networking A Systematic Approach to High-Bandwidth Low-Latency Communication Page ii This page intentionally left blank. Page iii High-Speed Networking A Systematic Approach to High-Bandwidth Low-Latency Communication James P.G. Sterbenz and Joseph D. Touch with contributions from Julio Escobar Rajesh Krishnan Chunming Qiao technical editor A. Lyman Chapin Page iv Publisher: Robert Ipsen Editor: Carol A. Long Assistant Editor: Adaobi Obi Managing Editor: Gerry Fahey Text Design & Composition: UG / GGS Information Services, Inc. Designations used by companies to distinguish their products are often claimed as trademarks. In all instances where John Wiley & Sons, Inc., is aware of a claim, the product names appear in initial capital or ALL CAPITAL LETTERS. Readers, however, should contact the appropriate companies for more complete information regarding trademarks and registration. Copyright © 2001 by James P.G. Sterbenz and Joseph D. Touch. All rights reserved. Published by John Wiley & Sons, Inc. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per- copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 750-4744. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 605 Third Avenue, New York, NY 10158-0012, (212) 850-6011, fax (212) 850-6008, E-Mail: PERMREQ @ WILEY.COM. -

Engilab Units 2018 V2.2

EngiLab Units 2021 v3.1 (v3.1.7864) User Manual www.engilab.com This page intentionally left blank. EngiLab Units 2021 v3.1 User Manual (c) 2021 EngiLab PC All rights reserved. No parts of this work may be reproduced in any form or by any means - graphic, electronic, or mechanical, including photocopying, recording, taping, or information storage and retrieval systems - without the written permission of the publisher. Products that are referred to in this document may be either trademarks and/or registered trademarks of the respective owners. The publisher and the author make no claim to these trademarks. While every precaution has been taken in the preparation of this document, the publisher and the author assume no responsibility for errors or omissions, or for damages resulting from the use of information contained in this document or from the use of programs and source code that may accompany it. In no event shall the publisher and the author be liable for any loss of profit or any other commercial damage caused or alleged to have been caused directly or indirectly by this document. "There are two possible outcomes: if the result confirms the Publisher hypothesis, then you've made a measurement. If the result is EngiLab PC contrary to the hypothesis, then you've made a discovery." Document type Enrico Fermi User Manual Program name EngiLab Units Program version v3.1.7864 Document version v1.0 Document release date July 13, 2021 This page intentionally left blank. Table of Contents V Table of Contents Chapter 1 Introduction to EngiLab Units 1 1 Overview .................................................................................................................................. -

Attacks on Quantum Key Distribution Protocols That Employ Non

Attacks on quantum key distribution protocols that employ non‐ITS authentication Christoph Pacher, Aysajan Abidin, Thomas Lorünser, Momtchil Peev, Rupert Ursin, Anton Zeilinger and Jan-Åke Larsson The self-archived postprint version of this journal article is available at Linköping University Institutional Repository (DiVA): http://urn.kb.se/resolve?urn=urn:nbn:se:liu:diva-91260 N.B.: When citing this work, cite the original publication. The original publication is available at www.springerlink.com: Pacher, C., Abidin, A., Lorünser, T., Peev, M., Ursin, R., Zeilinger, A., Larsson, J., (2016), Attacks on quantum key distribution protocols that employ non-ITS authentication, Quantum Information Processing, 15(1), 327-362. https://doi.org/10.1007/s11128-015-1160-4 Original publication available at: https://doi.org/10.1007/s11128-015-1160-4 Copyright: Springer Verlag (Germany) http://www.springerlink.com/?MUD=MP Attacks on quantum key distribution protocols that employ non-ITS authentication C Pacher1 · A Abidin2 · T Lor¨unser1 · M Peev1 · R Ursin3 · A Zeilinger3;4 · J-A˚ Larsson2 the date of receipt and acceptance should be inserted later Abstract We demonstrate how adversaries with large computing resources can break Quantum Key Distribution (QKD) protocols which employ a par- ticular message authentication code suggested previously. This authentication code, featuring low key consumption, is not Information-Theoretically Secure (ITS) since for each message the eavesdropper has intercepted she is able to send a different message from a set of messages that she can calculate by finding collisions of a cryptographic hash function. However, when this authentication code was introduced it was shown to prevent straightforward Man-In-The- Middle (MITM) attacks against QKD protocols. -

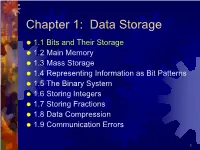

Data Storage

Chapter 1: Data Storage

- 1.1 Bits and Their Storage
- 1.2 Main Memory
- 1.3 Mass Storage
- 1.4 Representing Information as Bit Patterns
- 1.5 The Binary System
- 1.6 Storing Integers
- 1.7 Storing Fractions
- 1.8 Data Compression
- 1.9 Communication Errors

Bits

[Figure: signal levels labeled HIGH and LOW]

Bit: binary digit. A symbol whose meaning depends on the application at hand.

- Numeric value (1 or 0)
- Boolean value (true or false)
- Voltage (high or low). For TTL, high = 5 V, low = 0 V.

More bits?

| Unit | Decimal value | Binary value |
|---|---|---|
| kilobit (kbit) | 10^3 | 2^10 |
| megabit (Mbit) | 10^6 | 2^20 |
| gigabit (Gbit) | 10^9 | 2^30 |
| terabit (Tbit) | 10^12 | 2^40 |
| petabit (Pbit) | 10^15 | 2^50 |
| exabit (Ebit) | 10^18 | 2^60 |
| zettabit (Zbit) | 10^21 | 2^70 |

Bit Patterns

All data stored in a computer are represented by patterns of bits:

- Numbers
- Text characters
- Images
- Sound
- Anything else…

Boolean Operations

Boolean operation: any operation that manipulates one or more true/false values. Boolean operations can be used to operate on bits.

Specific operations:

- AND
- OR
- XOR
- NOT

AND, OR, XOR

[Figure: AND, OR, XOR]

Gates

Gates: devices that produce the outputs of Boolean operations when given the operations' input values.

- Often implemented as electronic circuits
- Provide the building blocks from which computers are constructed

AND, OR, XOR, NOT Gates

Chapter 2

[Figure: AND, OR, XOR, NOT gates]

NAND, NOR, XNOR

[Figure: NAND, NOR, XNOR gates]

| A (input) | B (input) | A NAND B (output) |
|---|---|---|
| 0 | 0 | 1 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

Store the bit

What do we mean by "storing" the data?

Bits in the computer at the i-th second = bits in the computer at the (i + 1)-th second.

For example, if the current state of the bit is "1", it should still be "1" at the next second.

Flip-Flops

Flip-flop: a circuit built from gates that can store one bit of data.

- Two input lines
- One input line sets its stored value to 1
- Another input line sets its stored value to 0
- When both input lines are 0, the most recently stored value is preserved

Flip-Flops Illustrated

[Figure: flip-flop with inputs X and Y and output Z]

| X | Y | Z |
|---|---|---|
| 0 | 0 | -- |
| 0 | 1 | 0 |
| 1 | 0 | 1 |
| 1 | 1 | * |

A Simple Flip-Flop Circuit

[Figure: a simple flip-flop circuit]

Setting to 1 – Step a.
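
The Boolean operations, the NAND truth table, and the flip-flop behaviour listed in the slides can be sketched in a few lines of Python. This is only an illustrative model, not the circuit from the slides: the function names and the `FlipFlop` class are hypothetical, bits are modelled as the integers 0 and 1, and the input combination X = 1, Y = 1 (marked `*` in the flip-flop table) is treated as an error because the slides do not say what it produces.

```python
# Bits are modelled as the integers 0 and 1 (an assumption for this sketch).

def AND(a, b):
    """1 only when both inputs are 1."""
    return a & b

def OR(a, b):
    """1 when at least one input is 1."""
    return a | b

def XOR(a, b):
    """1 when exactly one input is 1."""
    return a ^ b

def NOT(a):
    """Inverts a single bit."""
    return 1 - a

def NAND(a, b):
    """NOT applied to the output of AND."""
    return NOT(AND(a, b))

def truth_table(op):
    """Return (A, B, output) rows for a two-input operation."""
    return [(a, b, op(a, b)) for a in (0, 1) for b in (0, 1)]

class FlipFlop:
    """Behavioural model of the X/Y/Z table, not a gate-level circuit."""

    def __init__(self, value=0):
        self.value = value  # the stored bit (Z)

    def step(self, x, y):
        """Apply inputs X and Y for one time step and return Z."""
        if x == 1 and y == 1:
            # The table marks this case '*'; its result is not given,
            # so this sketch refuses it rather than guess.
            raise ValueError("X = 1 and Y = 1 is not defined in the table")
        if x == 1:
            self.value = 1  # X sets the stored value to 1
        elif y == 1:
            self.value = 0  # Y sets the stored value to 0
        # With both inputs 0, the most recently stored value is preserved.
        return self.value
```

Calling `truth_table(NAND)` reproduces the four rows of the NAND table, and calling `step(1, 0)` followed by `step(0, 0)` on a `FlipFlop` shows the stored bit staying at 1 from one step to the next, which is what the slides mean by "storing" a bit.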
