Real-Time Classification of Dance Gestures from Skeleton Animation

Recommended publications

-

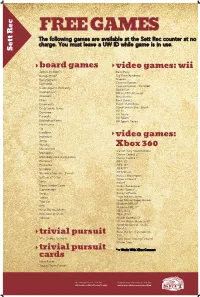

Sett Rec Counter at No Charge

FREE GAMES. The following games are available at the Sett Rec counter at no charge. You must leave a UW ID while a game is in use. Board games: Apples to Apples; Backgammon; Bananagrams; Buzzword; Cards Against Humanity; Catchphrase; Checkers; Chess; Cineplexity; Crazy Snake Game; Dominoes; Eurorails; Exploding Kittens; Finish Lines; Go; Headbanz; Imperium; Jenga; Malarky; Mastermind; Monopoly; Monopoly Deal (card game); Pictionary; Po-Ke-No; Scrabble; Scramble Squares - Parrots; Settlers of Catan; Sorry; Super Jumbo Cards; Superfection; Swap; Taboo; Toss Up; Uno; What Do You Meme; Win, Lose or Draw; Yahtzee. Trivial Pursuit: 90's; Genus; Genus 5. Trivial Pursuit cards: Harry Potter; Young Players Edition. Video games (Wii): Bash Party; Big Brain Academy Degree; Carnival Games; Carnival Games - MiniGolf; Mario Kart; MX vs ATV Untamed; Ninja Reflex; Rock Band 2; Super Mario Bros.; Super Smash Bros. Brawl; Wii Fit; Wii Music; Wii Sports; Wii Sports Resort. Video games (Xbox 360): Call of Duty: World at War; Dance Central 2*; Dance Central 3*; FIFA 15*; FIFA 16*; FIFA 17*; FIFA Street; Forza 2 Motorsport; Gears of War 2; Halo 4; Kinect Adventures*; Kinect Sports*; Kung Fu Panda; Lego Indiana Jones; Lego Marvel Super Heroes; Madden NFL 09; Madden NFL 17*; NBA 2K13; NBA 2K16*; NCAA Football 09; NCAA March Madness 07; Need for Speed - Rivals; Portal 2; Ruse the Art of Deception; SSX; Tony Hawk Proving Ground; Winter Stars*. * = Works With XBox Connect. Upcoming Events in The Sett. Program your own event at The Sett. union.wisc.edu/sett-events.aspx union.wisc.edu/eventservices.htm.

Chris Canfield

Chris Canfield. 308 Western Ave, Flr 2, Cambridge, MA 02139. 1-617-417-0006. [email protected] http://chriscanfield.net “Few people can walk into a department and have an immediate impact. Chris is one of those people.” - Robert Nelson, 2K Sports. Qualifications: • Ten years of professional game development experience. Designed for all previous and next-gen platforms, including Xbox 360, PS3, Wii, iPhone, iPad, PC, Web, DS, PSP, PS2, GameCube, Xbox 1, and iPod Video. • Lifetime metacritic average of 84% with multiple games-of-the-year and an estimated 1.6 billion dollar retail gross. • Lectured about game design and production at MIT, Worcester Polytechnic, Becker College, McGill University, College Week Online, DARPA, others. • ITT Norwood’s special consultant about game design issues. Taught full courses in game design at Bristol Community College, ITT Tech. • Experience designing for unique devices, including 2D and 3D cameras, wands, keyboards / mice, gamepads, accelerometers, Guitars, Drums, and other custom plastic. • Flash, 3DS Max, Visual C++, Unity3D, Dreamweaver, Photoshop, and most major development tools for the PC, 360, and PS3. Employment History: Subatomic Studios, 2010 - Present; Harmonix Music Systems Inc., 2004 - 2010; Stainless Steel Studios Inc., 2003; VCE / Sega Sports, 2002. Education: Masters, Digital Media, Northeastern University, 2012; Bachelor, Sociology, University of California, Irvine, 2001. Professional Roles: Senior Designer / Systems Designer / Level Design: Fieldrunners 2, Guitar Hero II, Dance Central, Rock Band 1, Eyetoy Antigrav, Karaoke Revolution Party, Tinkerbox. Crafted design vision for the critically acclaimed Guitar Hero 2. Additionally, spearheaded content implementation teams, defined design departmental processes, created major gameplay subsystems, laid out shell flows and usability languages, built discrete sections of level design, and wrote style bibles.

Sony Sells US-Based Online Game Unit 3 February 2015

Sony sells US-based online game unit. 3 February 2015. Fans play games at Sony booth during the annual E3 video game extravaganza in Los Angeles, California, on June 10, 2014. Japan's Sony has sold its online gaming unit to a US investment firm, in a move that should free it to make titles for consoles other than PlayStation. New York investment management firm Columbus Nova has acquired Sony Online Entertainment (SOE), maker of the popular 3D fantasy multiplayer game EverQuest, the companies said in a statement on Monday. The deal (financial terms were not disclosed) will see the former Sony unit operate as an independent game development studio under the name Daybreak Game Company. Columbus Nova, based in New York, already owns the maker of the music video game Rock Band. The move will mean the renamed company can develop games for mobile devices and non-Sony consoles, such as Microsoft's Xbox, it said. EverQuest competes with the popular World Of Warcraft title. Sony has been offloading a string of assets, including its laptop business and Manhattan headquarters, in a bid to claw back to steady profitability after years of massive losses. Founded in 2000, New York-based Columbus Nova manages more than $15 billion in assets, through its own funds and affiliated portfolio companies, according to the statement from the company. In 2010, it bought Harmonix Music Systems, maker of Rock Band and Dance Central, from Viacom. © 2015 AFP. APA citation: Sony sells US-based online game unit (2015, February 3) retrieved 27 September 2021 from https://phys.org/news/2015-02-sony-us-based-online-game.html This document is subject to copyright.

A Methodology for Physical Creativity

Suit The System To The Player: A Methodology for Physical Creativity Jane Friedhoff Parsons, The New School For Design 79 Fifth Avenue, Floor 16 New York, NY 10011 +1 (212) 229 - 8908 [email protected] ABSTRACT The PS Move, Kinect, and Wii tout their technological capabilities as evidence that they can best support intuitive, creative movements. However, games for these systems tend to use their technology to hold the player to higher standards of conformity. This style of game design can result in the player being made to 'fit' the game, rather than the other way around. It is worthwhile to explore alternatives for exertion games, as they can encourage exploration of long-dormant physical creativity in adults and potentially create coliberative experiences around the transgression of social norms. This paper synthesizes a methodology including generative outputs, multiple and simultaneous forms of exertion, minimized player tracking, irreverent metaphors, and play with social norms in order to promote. Scream 'Em Up tests this methodology and provides direction for future research. Keywords game design, physical creativity, embodied play, coliberation INTRODUCTION A major trend in console design is the development of increasingly sophisticated technology for tracking physical movement. The PS Move, Kinect, Wii, and other platforms largely eschew traditional controllers, instead asking the players to move their bodies or use their voices to interact with the system. Such systems tout their technological power as a shorthand for supporting more intuitive, more natural movement. The Kinect's marketing is emblematic of this: advertisements state, “you are the controller. […] If you have to kick, then kick, if you have to jump, then jump. -

Dance Central 2!

Welcome to Dance Central 2! WARNING: Before playing this game, read the Xbox 360® console instructions, Kinect™ sensor manual, and any other peripheral manuals for important safety and health information. Keep all manuals for future reference. For replacement hardware manuals, go to www.xbox.com/support or call Xbox Customer Support. Important Health Warning About Playing Video Games. Photosensitive seizures: A very small percentage of people may experience a seizure when exposed to certain visual images, including flashing lights or patterns that may appear in video games. Even people who have no history of seizures or epilepsy may have an undiagnosed condition that can cause these “photosensitive epileptic seizures” while watching video games. These seizures may have a variety of symptoms, including lightheadedness, altered vision, eye or face twitching, jerking or shaking of arms or legs, disorientation, confusion, or momentary loss of awareness. Seizures may also cause loss of consciousness or convulsions that can lead to injury from falling down or striking nearby objects. GETTING STARTED • Make sure your entire body is visible in the Helper Frame at the top of the screen. If you are playing with a friend, each player appears in a separate Helper Frame. • Reach your right hand out to the side to select menu items, such as CONTINUE on the title screen, and then swipe from right to left. • Return to a previous screen by reaching out your left hand to select BACK in the lower-left corner, and then swiping from left to right. • Switch to navigating the menus using your Xbox 360 controller by pressing any button. To exit controller mode, press the START button.

Kinect for Xbox 360

MEDIA ALERT “Kinect” for Xbox 360 sets the future in motion — no controller required Microsoft reveals new ways to play that are fun and easy for everyone Sydney, Australia – June 15th – Get ready to Kinect to fun entertainment for everyone. Microsoft Corp. today capped off a two-day world premiere in Los Angeles, complete with celebrities, stunning physical dexterity and news from a galaxy far, far away, to reveal experiences that will transform living rooms in North America, beginning November 4th and will roll out to Australia and the rest of the world thereafter. Opening with a magical Cirque du Soleil performance on Sunday night attended by Hollywood’s freshest faces, Microsoft gave the transformation of home entertainment a name: Kinect for Xbox 360. Then, kicking off the Electronic Entertainment Expo (E3), Xbox 360 today invited the world to dance, hurdle, soar and make furry friends for life — all through the magic of Kinect — no controller required. “With ‘Kinectimals*’ and ‘Kinect Sports*’, ‘Your Shape™: Fitness Evolved*’ and ‘Dance Central™*’, your living room will become a zoo, a stadium, a fitness room or a dance club. You will be in the centre of your entertainment, using the best controller ever made — you,” said Kudo Tsunoda, creative director for Microsoft Game Studios. Microsoft and LucasArts also announced that they will bring Star Wars® to Kinect in 2011. And, sprinkling a little fairy dust on Xbox 360, Disney will bring its magic as well. “Xbox LIVE is about making it simple to find the entertainment you want, with the friends you care about, wherever you are. -

Music Games Rock: Rhythm Gaming's Greatest Hits of All Time

“Cementing gaming’s role in music’s evolution, Steinberg has done pop culture a laudable service.” – Nick Catucci, Rolling Stone RHYTHM GAMING’S GREATEST HITS OF ALL TIME By SCOTT STEINBERG Author of Get Rich Playing Games Feat. Martin Mathers and Nadia Oxford Foreword By ALEX RIGOPULOS Co-Creator, Guitar Hero and Rock Band Praise for Music Games Rock “Hits all the right notes—and some you don’t expect. A great account of the music game story so far!” – Mike Snider, Entertainment Reporter, USA Today “An exhaustive compendia. Chocked full of fascinating detail...” – Alex Pham, Technology Reporter, Los Angeles Times “It’ll make you want to celebrate by trashing a gaming unit the way Pete Townshend destroys a guitar.” –Jason Pettigrew, Editor-in-Chief, ALTERNATIVE PRESS “I’ve never seen such a well-collected reference... it serves an important role in letting readers consider all sides of the music and rhythm game debate.” –Masaya Matsuura, Creator, PaRappa the Rapper “A must read for the game-obsessed...” –Jermaine Hall, Editor-in-Chief, VIBE MUSIC GAMES ROCK RHYTHM GAMING’S GREATEST HITS OF ALL TIME SCOTT STEINBERG DEDICATION MUSIC GAMES ROCK: RHYTHM GAMING’S GREATEST HITS OF ALL TIME All Rights Reserved © 2011 by Scott Steinberg “Behind the Music: The Making of Sex ‘N Drugs ‘N Rock ‘N Roll” © 2009 Jon Hare No part of this book may be reproduced or transmitted in any form or by any means – graphic, electronic or mechanical – including photocopying, recording, taping or by any information storage retrieval system, without the written permission of the publisher. -

Dance Central

Welcome to Dance Central 2! Warning: Before you start playing, read the Xbox 360® console instructions, the Kinect sensor manual, and any other peripheral manuals for safety and health information. Keep all manuals for future reference. For replacement hardware manuals, visit www.xbox.com/support or call Xbox Customer Support. Don't lose this code, so you can keep dancing... Important Health Warning About Playing Video Games. Photosensitive epileptic seizures: A very small number of people may have an epileptic seizure when exposed to certain visual images, including flashing lights or patterns that may appear in video games. Even people with no history of such seizures or of epilepsy may have an undiagnosed condition that can cause these “photosensitive epileptic seizures” while watching video games. These seizures may have various symptoms, including dizziness, altered vision, eye or face twitching, jerking or shaking of the arms or legs, disorientation, confusion, or momentary loss of consciousness. IMPORT SONGS FROM DANCE CENTRAL. GETTING STARTED • Make sure your whole body is visible in the Helper Frame at the top of the screen. If you are playing with a friend, each of you will appear in a separate Helper Frame. • Reach your right hand out to the side to select CONTINUE and swipe from right to left. • To return to the previous screen, reach out your left hand to select BACK in the lower-left corner and swipe from left to right. • To navigate the menus using your Xbox 360® Controller,

Teach Me to Dance: Exploring Player Experience and Performance in Full Body Dance Games

Teach Me to Dance: Exploring Player Experience and Performance in Full Body Dance Games. Emiko Charbonneau, Andrew Miller, Joseph J. LaViola Jr. Department of EECS, University of Central Florida, 4000 Central Florida Blvd., Orlando, FL 32816. [email protected] [email protected] [email protected] ABSTRACT: We present a between-subjects user study designed to compare a dance instruction video to a rhythm game interface. The goal of our study is to answer the question: can these games be an effective learning tool for the activity they simulate? We use a body controlled dance game prototype which visually emulates current commercial games. Our research explores the player's perceptions of their own capabilities, their capacity to deal with a high influx of information, and their preferences regarding body-controlled video games. Our results indicate that the game-inspired interface elements alone were not a substitute for footage of a real human dancer, but participants overall preferred to have access to both forms of media. We also discuss the dance rhythm game as abstracted entertainment, exercise motivation, and realistic dance instruction. Figure 1: Our dance choreography game with Game Only visuals. The orange silhouette (left) shows the player what to do and the purple silhouette (right) shows the player's position. Categories and Subject Descriptors: K.8.0 [Computing Milieux]: Personal Computing - Games; H.5.2 [Computing Methodologies]: Methodologies and Techniques - Interaction Techniques. systems such as the Sony Eyetoy and the Microsoft Kinect allow for the player's limbs to act as input without the player holding or wearing an external device.

Fact Sheet June 2012

Fact Sheet June 2012. What is “Dance Central 3”? The best-selling dance series game on Kinect for Xbox 360 returns this fall with “Dance Central 3.” Music and rhythm game innovator Harmonix Music Systems Inc. continues to expand on the “Dance Central” universe with electrifying new features that keep the party going, including an all-new multiplayer mode for up to eight players and the hottest, most diverse soundtrack yet, featuring songs by Usher, Cobra Starship, Gloria Gaynor, 50 Cent and many more. Dance through time. Hit the dance floor with the hottest moves you’ve always wanted to learn from the past four decades as well as current chart-topping dance hits with the game’s expanded story mode. Dancers will be up and moving through favorite dances from the past, including “The Hustle,” “Electric Slide” and “The Dougie,” and grooving to today’s top hits. Get the party started! “Dance Central 3” features an all-new “Crew Throwdown” mode, which pits two teams of up to four dancers head-to-head in a series of “Battles” and fresh mini-games to become the hottest crew on the dance floor. “Crew Throwdown” features innovative gameplay, including the freeform “Keep the Beat” mini-game, in which dancers earn points based on the rhythm of their movements, and “Make Your Move,” which challenges dancers to invent their own dance moves, then compete to see who can master a routine created on the spot. For a less-structured dance party, “Dance Central 3” also gets right into the action with a “Party” mode that keeps the beats rolling and lets players drop in and out with ease to the best songs in dance.

Keep This Code to Keep Dancing

Keep this code to keep dancing... Online play, functions, features, and technical support for Dance Central and for any other products and services of Harmonix Music Systems, Inc., MTV Networks, Microsoft, and third parties are provided only “as is” and “as available” and may be modified, discontinued, or unavailable at any time at the sole discretion of those parties. X17-08248-01 WARNING: Before enjoying this game, read the Xbox 360® console instructions, the Kinect sensor manual, and the manual for any other peripheral for important safety and health information. Keep all manuals for future reference. For replacement hardware manuals, visit www.xbox.com/support or call Xbox Customer Support. For additional safety information, see the inside back cover. WELCOME TO DANCE CENTRAL. It's time to show the world what you've got. In Dance Central, you will learn impressive moves, master complete routines, and level up, from "Sujetacolumnas" to "Leyenda viviente" (Living Legend)! Important information about video games. Photosensitive epileptic seizures: A small percentage of people may suffer a photosensitive epileptic seizure when exposed to certain visual images, including the flashing patterns and lights that appear in video games. Even people with no history of such seizures or of epilepsy may be prone to these “photosensitive epileptic seizures” when watching a video game. These seizures have various symptoms: dizziness, altered vision, nervous tics in the face or eyes, trembling of the arms or legs, disorientation, confusion, or momentary loss of consciousness.

Poetry in Motion

Harmonix Music Systems, Inc. www.harmonixmusic.com Autodesk® 3ds Max® software, Autodesk® MotionBuilder® software. Poetry in motion. Harmonix uses Autodesk software to create the sweet moves of Dance Central. "The quality of animation was vital to the success of Dance Central…. After creating and testing our character rig in 3ds Max, we used Story mode in MotionBuilder to make everything very clear and understandable for the players." - Riseon Kim, Lead Animator, Harmonix. Dance Central™. Image courtesy of Harmonix Music Systems, Inc. Summary: It was 2005 when Harmonix Music Systems burst onto the video game scene with Guitar Hero, the now-legendary (and addictive) video game in which players simulate playing lead guitar on their favorite rock songs. The Cambridge, Massachusetts–based company upped the ante in 2007 when it released Rock Band, which allowed individuals or teams to play guitar, drums, or sing songs from bands including The Beatles, Green Day, and a host of others. Now, Harmonix is encouraging people to get up and dance with Dance Central™, an extraordinarily ambitious new title that coincided with the release of the Kinect™ for Xbox 360®, which enables players to control and interact with the Xbox 360 video game and entertainment system from Microsoft through natural gestures and spoken commands. The Challenge: While rapid advancements in motion capture technology have already enabled video game enthusiasts to closely mirror the action of animated characters, the creators of Dance Central clearly wanted to take things to a whole new level. “We wanted Dance Central to be an absolutely full-motion dance experience,” says Dare Matheson, lead artist on the game. “Our vision for the game was not just tracking individual positions of the player’s body at different points in time, but clearly and precisely tracking full-body motions through time, while scoring them on the complete dance moves they are executing.”