Globalization Support Oracle Unicode Database Support

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

UNICODE for Kannada General Information & Description

UNICODE for Kannada (U+0C80 to U+0CFF) General Information & Description Written by: C V Srinatha Sastry Issued by: Director Directorate of Information Technology Government of Karnataka Multi Storied Buildings, Vidhana Veedhi BANGALORE 560 001 INDIA PDF created with FinePrint pdfFactory Pro trial version http://www.pdffactory.com UNICODE for Kannada Introduction The Kannada script is a South Indian script. It is used to write Kannada language of Karnataka State in India. This is also used in many parts of Tamil Nadu, Kerala, Andhra Pradesh and Maharashtra States of India. In addition, the Kannada script is also used to write Tulu, Konkani and Kodava languages. Kannada along with other Indian language scripts shares a large number of structural features. The Kannada block of Unicode Standard (0C80 to 0CFF) is based on ISCII-1988 (Indian Standard Code for Information Interchange). The Unicode Standard (version 3) encodes Kannada characters in the same relative positions as those coded in the ISCII-1988 standard. The Writing system that employs Kannada script constitutes a cross between syllabic writing systems and phonemic writing systems (alphabets). The effective unit of writing Kannada is the orthographic syllable consisting of a consonant (Vyanjana) and vowel (Vowel) (CV) core and optionally, one or more preceding consonants, with a canonical structure of ((C)C)CV. The orthographic syllable need not correspond exactly with a phonological syllable, especially when a consonant cluster is involved, but the writing system is built on phonological principles and tends to correspond quite closely to pronunciation. The orthographic syllable is built up of alphabetic pieces, the actual letters of Kannada script. -

Package Mathfont V. 1.6 User Guide Conrad Kosowsky December 2019 [email protected]

Package mathfont v. 1.6 User Guide Conrad Kosowsky December 2019 [email protected] For easy, off-the-shelf use, type the following in your docu- ment preamble and compile using X LE ATEX or LuaLATEX: \usepackage[hfont namei]{mathfont} Abstract The mathfont package provides a flexible interface for changing the font of math- mode characters. The package allows the user to specify a default unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both X LE ATEX and LuaLATEX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode. Handling fonts in TEX and LATEX is a notoriously difficult task. Donald Knuth origi- nally designed TEX to support fonts created with Metafont, and while subsequent versions of TEX extended this functionality to postscript fonts, Plain TEX's font-loading capabilities remain limited. Many, if not most, LATEX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TEX's \font primitive can be esoteric and confusing. LATEX 2"'s New Font Selection System (nfss) implemented a straightforward syn- tax for loading and managing fonts, but LATEX macros overlaying a TEX core face the same versatility issues as Plain TEX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TEX or through the nfss, defining math symbols can be unin- tuitive for users who are unfamiliar with TEX's \mathcode primitive. -

Neural Substrates of Hanja (Logogram) and Hangul (Phonogram) Character Readings by Functional Magnetic Resonance Imaging

ORIGINAL ARTICLE Neuroscience http://dx.doi.org/10.3346/jkms.2014.29.10.1416 • J Korean Med Sci 2014; 29: 1416-1424 Neural Substrates of Hanja (Logogram) and Hangul (Phonogram) Character Readings by Functional Magnetic Resonance Imaging Zang-Hee Cho,1 Nambeom Kim,1 The two basic scripts of the Korean writing system, Hanja (the logography of the traditional Sungbong Bae,2 Je-Geun Chi,1 Korean character) and Hangul (the more newer Korean alphabet), have been used together Chan-Woong Park,1 Seiji Ogawa,1,3 since the 14th century. While Hanja character has its own morphemic base, Hangul being and Young-Bo Kim1 purely phonemic without morphemic base. These two, therefore, have substantially different outcomes as a language as well as different neural responses. Based on these 1Neuroscience Research Institute, Gachon University, Incheon, Korea; 2Department of linguistic differences between Hanja and Hangul, we have launched two studies; first was Psychology, Yeungnam University, Kyongsan, Korea; to find differences in cortical activation when it is stimulated by Hanja and Hangul reading 3Kansei Fukushi Research Institute, Tohoku Fukushi to support the much discussed dual-route hypothesis of logographic and phonological University, Sendai, Japan routes in the brain by fMRI (Experiment 1). The second objective was to evaluate how Received: 14 February 2014 Hanja and Hangul affect comprehension, therefore, recognition memory, specifically the Accepted: 5 July 2014 effects of semantic transparency and morphemic clarity on memory consolidation and then related cortical activations, using functional magnetic resonance imaging (fMRI) Address for Correspondence: (Experiment 2). The first fMRI experiment indicated relatively large areas of the brain are Young-Bo Kim, MD Department of Neuroscience and Neurosurgery, Gachon activated by Hanja reading compared to Hangul reading. -

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

1 Assessment of Options for Handling Full Unicode Character Encodings in MARC21 A Study for the Library of Congress Part 1: New Scripts Jack Cain Senior Consultant Trylus Computing, Toronto 1 Purpose This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire” An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding schemes. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, & “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42 & 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8 "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode which is a multi-byte per character code set, the MARC8 encoding scheme is principally made up of multiple one byte tables in which each character is encoded using a single 8 bit byte. (It also includes the EACC set which actually uses fixed length 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only. -

Recognition of Online Handwritten Gurmukhi Strokes Using Support Vector Machine a Thesis

Recognition of Online Handwritten Gurmukhi Strokes using Support Vector Machine A Thesis Submitted in partial fulfillment of the requirements for the award of the degree of Master of Technology Submitted by Rahul Agrawal (Roll No. 601003022) Under the supervision of Dr. R. K. Sharma Professor School of Mathematics and Computer Applications Thapar University Patiala School of Mathematics and Computer Applications Thapar University Patiala – 147004 (Punjab), INDIA June 2012 (i) ABSTRACT Pen-based interfaces are becoming more and more popular and play an important role in human-computer interaction. This popularity of such interfaces has created interest of lot of researchers in online handwriting recognition. Online handwriting recognition contains both temporal stroke information and spatial shape information. Online handwriting recognition systems are expected to exhibit better performance than offline handwriting recognition systems. Our research work presented in this thesis is to recognize strokes written in Gurmukhi script using Support Vector Machine (SVM). The system developed here is a writer independent system. First chapter of this thesis report consist of a brief introduction to handwriting recognition system and some basic differences between offline and online handwriting systems. It also includes various issues that one can face during development during online handwriting recognition systems. A brief introduction about Gurmukhi script has also been given in this chapter In the last section detailed literature survey starting from the 1979 has also been given. Second chapter gives detailed information about stroke capturing, preprocessing of stroke and feature extraction. These phases are considered to be backbone of any online handwriting recognition system. Recognition techniques that have been used in this study are discussed in chapter three. -

+ Natali A, Professor of Cartqraphy, the Hebreu Uhiversity of -Msalem, Israel DICTIONARY of Toponymfc TERLMINO~OGY Wtaibynafiail~

United Nations Group of E%perts OR Working Paper 4eographicalNames No. 61 Eighteenth Session Geneva, u-23 August1996 Item7 of the E%ovisfonal Agenda REPORTSOF THE WORKINGGROUPS + Natali a, Professor of Cartqraphy, The Hebreu UhiVersity of -msalem, Israel DICTIONARY OF TOPONYMfC TERLMINO~OGY WtaIbyNafiaIl~- . PART I:RaLsx vbim 3.0 upi8elfuiyl9!J6 . 001 . 002 003 004 oo!l 006 007 . ooa 009 010 . ol3 014 015 sequala~esfocJphabedcsaipt. 016 putting into dphabetic order. see dso Kqucna ruIt!% Qphabctk 017 Rtlpreat8Ii00, e.g. ia 8 computer, wflich employs ooc only numm ds but also fetters. Ia a wider sense. aIso anploying punauatiocl tnarksmd-SymboIs. 018 Persod name. Esamples: Alfredi ‘Ali. 019 022 023 biliaw 024 02s seecIass.f- 026 GrqbicsymboIusedurunitiawrIdu~morespedficaty,r ppbic symbol in 1 non-dphabedc writiog ryste.n& Exmlptes: Chinese ct, , thong; Ambaric u , ha: Japaoese Hiragana Q) , no. 027 -.modiGed Wnprehauive term for cheater. simplified aad character, varIaoL 031 CbmJnyol 032 CISS, featm? 033 cQdedrepfwltatiul 034 035 036 037' 038 039 040 041 042 047 caavasion alphabet 048 ConMQo table* 049 0nevahte0frpointinlhisgr8ti~ . -.- w%idofplaaecoordiaarurnm;aingoftwosetsofsnpight~ -* rtcight8ngfIertoeachotkrodwithap8ltKliuofl8qthonbo&. rupenmposedonr(chieflytopogtaphtc)map.see8lsouTM gz 051 see axxdimtes. rectangufar. 052 A stahle form of speech, deriyed from a pbfgin, which has became the sole a ptincipal language of 8 qxech comtnunity. Example: Haitian awle (derived from Fresh). ‘053 adllRaIfeatlue see feature, allhlral. 054 055 * 056 057 Ac&uioaofsoftwamrcqkdfocusingrdgRaIdatabmem rstoauMe~osctlto~thisdatabase. 058 ckalog of defItitioas of lbe contmuofadigitaldatabase.~ud- hlg data element cefw labels. f0mw.s. internal refm codMndtextemty,~well~their-p,. 059 see&tadichlq. 060 DeMptioa of 8 basic unit of -Lkatifiile md defiile informatioa tooccqyrspecEcdataf!eldinrcomputernxaxtLExampk Pateofmtifii~ofluwtby~namaturhority’. -

Proposal for a Korean Script Root Zone LGR 1 General Information

(internal doc. #: klgp220_101f_proposal_korean_lgr-25jan18-en_v103.doc) Proposal for a Korean Script Root Zone LGR LGR Version 1.0 Date: 2018-01-25 Document version: 1.03 Authors: Korean Script Generation Panel 1 General Information/ Overview/ Abstract The purpose of this document is to give an overview of the proposed Korean Script LGR in the XML format and the rationale behind the design decisions taken. It includes a discussion of relevant features of the script, the communities or languages using it, the process and methodology used and information on the contributors. The formal specification of the LGR can be found in the accompanying XML document below: • proposal-korean-lgr-25jan18-en.xml Labels for testing can be found in the accompanying text document below: • korean-test-labels-25jan18-en.txt In Section 3, we will see the background on Korean script (Hangul + Hanja) and principal language using it, i.e., Korean language. The overall development process and methodology will be reviewed in Section 4. The repertoire and variant groups in K-LGR will be discussed in Sections 5 and 6, respectively. In Section 7, Whole Label Evaluation Rules (WLE) will be described and then contributors for K-LGR are shown in Section 8. Several appendices are included with separate files. proposal-korean-lgr-25jan18-en 1 / 73 1/17 2 Script for which the LGR is proposed ISO 15924 Code: Kore ISO 15924 Key Number: 287 (= 286 + 500) ISO 15924 English Name: Korean (alias for Hangul + Han) Native name of the script: 한글 + 한자 Maximal Starting Repertoire (MSR) version: MSR-2 [241] Note. -

Proposal for a Kannada Script Root Zone Label Generation Ruleset (LGR)

Proposal for a Kannada Script Root Zone Label Generation Ruleset (LGR) Proposal for a Kannada Script Root Zone Label Generation Ruleset (LGR) LGR Version: 3.0 Date: 2019-03-06 Document version: 2.6 Authors: Neo-Brahmi Generation Panel [NBGP] 1. General Information/ Overview/ Abstract The purpose of this document is to give an overview of the proposed Kannada LGR in the XML format and the rationale behind the design decisions taken. It includes a discussion of relevant features of the script, the communities or languages using it, the process and methodology used and information on the contributors. The formal specification of the LGR can be found in the accompanying XML document: proposal-kannada-lgr-06mar19-en.xml Labels for testing can be found in the accompanying text document: kannada-test-labels-06mar19-en.txt 2. Script for which the LGR is Proposed ISO 15924 Code: Knda ISO 15924 N°: 345 ISO 15924 English Name: Kannada Latin transliteration of the native script name: Native name of the script: ಕನ#ಡ Maximal Starting Repertoire (MSR) version: MSR-4 Some languages using the script and their ISO 639-3 codes: Kannada (kan), Tulu (tcy), Beary, Konkani (kok), Havyaka, Kodava (kfa) 1 Proposal for a Kannada Script Root Zone Label Generation Ruleset (LGR) 3. Background on Script and Principal Languages Using It 3.1 Kannada language Kannada is one of the scheduled languages of India. It is spoken predominantly by the people of Karnataka State of India. It is one of the major languages among the Dravidian languages. Kannada is also spoken by significant linguistic minorities in the states of Andhra Pradesh, Telangana, Tamil Nadu, Maharashtra, Kerala, Goa and abroad. -

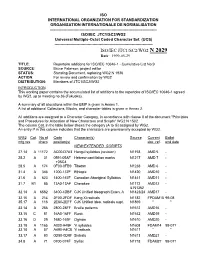

ISO/IEC JTC1/SC2/WG2 N 2029 Date: 1999-05-29

ISO INTERNATIONAL ORGANIZATION FOR STANDARDIZATION ORGANISATION INTERNATIONALE DE NORMALISATION --------------------------------------------------------------------------------------- ISO/IEC JTC1/SC2/WG2 Universal Multiple-Octet Coded Character Set (UCS) -------------------------------------------------------------------------------- ISO/IEC JTC1/SC2/WG2 N 2029 Date: 1999-05-29 TITLE: Repertoire additions for ISO/IEC 10646-1 - Cumulative List No.9 SOURCE: Bruce Paterson, project editor STATUS: Standing Document, replacing WG2 N 1936 ACTION: For review and confirmation by WG2 DISTRIBUTION: Members of JTC1/SC2/WG2 INTRODUCTION This working paper contains the accumulated list of additions to the repertoire of ISO/IEC 10646-1 agreed by WG2, up to meeting no.36 (Fukuoka). A summary of all allocations within the BMP is given in Annex 1. A list of additional Collections, Blocks, and character tables is given in Annex 2. All additions are assigned to a Character Category, in accordance with clause II of the document "Principles and Procedures for Allocation of New Characters and Scripts" WG2 N 1502. The column Cat. in the table below shows the category (A to G) assigned by WG2. An entry P in this column indicates that the characters are provisionally accepted by WG2. WG2 Cat. No of Code Character(s) Source Current Ballot mtg.res chars position(s) doc. ref. end date NEW/EXTENDED SCRIPTS 27.14 A 11172 AC00-D7A3 Hangul syllables (revision) N1158 AMD 5 - 28.2 A 31 0591-05AF Hebrew cantillation marks N1217 AMD 7 - +05C4 28.5 A 174 0F00-0FB9 Tibetan N1238 AMD 6 - 31.4 A 346 1200-137F Ethiopic N1420 AMD10 - 31.6 A 623 1400-167F Canadian Aboriginal Syllabics N1441 AMD11 - 31.7 B1 85 13A0-13AF Cherokee N1172 AMD12 - & N1362 32.14 A 6582 3400-4DBF CJK Unified Ideograph Exten. -

1 Working Draft for Supporting Blueberry Revision of XML

Working Draft for Supporting Blueberry Revision of XML Recommendation (1.0) with Particular Emphasis on Ethiopic Script and Writing System November 2001 This Version: http://www.digitaladdis.com/sk/ Ethiopics XML_Proposal_Version1.pdf Latest Ver.: http://www.digitaladdis.com/sk/ Ethiopics XML_Proposal_Version1.pdf Authors/Editors: Samuel Kinde Kassegne (Nanogen, UC San Diego, Engg Ext.) <[email protected]> Bibi Ephraim (Hewlett Packard) <[email protected]> Copyright ©2001, SKK, BE. Working Draft for Supporting Blueberry Revision of XML - Ethiopics Script 1 Abstract This document contains XML example codes, surveys and recommendations that support the need for Ethiopic script-based XML element type and attribute names in Amharic, Afaan Oromo, Tigrigna, Agewgna, Guragina, Hadiya, Harari, Sidama, Kembatigna and other Ethiopian languages that use Ethiopic script. Status of this Document This document is an initial working draft that is to be widely circulated for comment from the Ethiopic XML Interest Group and the wider public. The document is a work in progress. Table of Contents 1. Scope 2. Introduction 3. Objective 4. Review of Status of Native HTML and XML Applications in Ethiopic 4.1. Evolution of Ethiopic Content in HTML 4.2. Ethiopic and XML 5. Hybrid XML Document Markups in Ethiopic 5.1. Examples 5.2. Discussions 6. Fully-Native XML Document Markups in Ethiopic 6.1. Examples 6.2. Discussions 7. Conclusions and Recommendations 8. Appendix 9. References Working Draft for Supporting Blueberry Revision of XML - Ethiopics Script 2 1. Scope The work reported in this document contains XML example codes, surveys and recommendations that support the need for a revision of current XML standards to allow Ethiopic-based XML element type and attribute names in Amharic, Afaan Oromo, Tigrigna, Agewgna, Guragina, Hadiya, Harari, Sidama, Kembatigna and other Ethiopian languages that use Ethiopic script. -

Pronunciation

PRONUNCIATION Guide to the Romanized version of quotations from the Guru Granth Saheb. A. Consonants Gurmukhi letter Roman Word in Roman Word in Gurmukhi Meaning Letter letters using the letters using the relevant letter relevant letter from from the second the first column column S s Sabh sB All H h Het ihq Affection K k Krodh kroD Anger K kh Khayl Kyl Play G g Guru gurU Teacher G gh Ghar Gr House | ng Ngyani / gyani i|AwnI / igAwnI Possessing divine knowledge C c Cor cor Thief C ch Chaata Cwqw Umbrella j j Jahaaj jhwj Ship J jh Jhaaroo JwVU Broom \ ny Sunyi su\I Quiet t t Tap t`p Jump T th Thag Tg Robber f d Dar fr Fear F dh Dholak Folk Drum x n Hun hux Now q t Tan qn Body Q th Thuk Quk Sputum d d Den idn Day D dh Dhan Dn Wealth n n Net inq Everyday p p Peta ipqw Father P f Fal Pl Fruit b b Ben ibn Without B bh Bhagat Bgq Saint m m Man mn Mind X y Yam Xm Messenger of death r r Roti rotI Bread l l Loha lohw Iron v v Vasai vsY Dwell V r Koora kUVw Rubbish (n) in brackets, and (g) in brackets after the consonant 'n' both indicate a nasalised sound - Eg. 'Tu(n)' meaning 'you'; 'saibhan(g)' meaning 'by himself'. All consonants in Punjabi / Gurmukhi are sounded - Eg. 'pai-r' meaning 'foot' where the final 'r' is sounded. 3 Copyright Material: Gurmukh Singh of Raub, Pahang, Malaysia B. -

The Unicode Standard, Version 4.0--Online Edition

This PDF file is an excerpt from The Unicode Standard, Version 4.0, issued by the Unicode Consor- tium and published by Addison-Wesley. The material has been modified slightly for this online edi- tion, however the PDF files have not been modified to reflect the corrections found on the Updates and Errata page (http://www.unicode.org/errata/). For information on more recent versions of the standard, see http://www.unicode.org/standard/versions/enumeratedversions.html. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and Addison-Wesley was aware of a trademark claim, the designations have been printed in initial capital letters. However, not all words in initial capital letters are trademark designations. The Unicode® Consortium is a registered trademark, and Unicode™ is a trademark of Unicode, Inc. The Unicode logo is a trademark of Unicode, Inc., and may be registered in some jurisdictions. The authors and publisher have taken care in preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The Unicode Character Database and other files are provided as-is by Unicode®, Inc. No claims are made as to fitness for any particular purpose. No warranties of any kind are expressed or implied. The recipient agrees to determine applicability of information provided. Dai Kan-Wa Jiten used as the source of reference Kanji codes was written by Tetsuji Morohashi and published by Taishukan Shoten.