How Can We Accelerate the Insertion of Leading-Edge Technologies Into Our Military Weapons Systems?

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Rns Over the Long Term and Critical to Enabling This Is Continued Investment in Our Technology and People, a Capital Allocation Priority

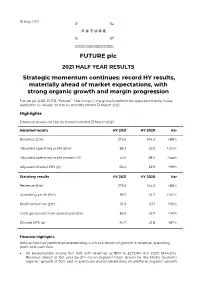

19 May 2021

FUTURE plc 2021 HALF YEAR RESULTS

Strategic momentum continues: record HY results, materially ahead of market expectations, with strong organic growth and margin progression

Future plc (LSE: FUTR, “Future”, “the Group”), the global platform for specialist media, today publishes its results for the six months ended 31 March 2021.

Highlights

Financial results for the six months ended 31 March 2021

| Adjusted results | HY 2021 | HY 2020 | Var |
| --- | --- | --- | --- |
| Revenue (£m) | 272.6 | 144.3 | +89% |
| Adjusted operating profit (£m)1 | 89.2 | 39.9 | +124% |
| Adjusted operating profit margin (%) | 33% | 28% | +5ppt |
| Adjusted diluted EPS (p) | 65.4 | 32.9 | +99% |

| Statutory results | HY 2021 | HY 2020 | Var |
| --- | --- | --- | --- |
| Revenue (£m) | 272.6 | 144.3 | +89% |
| Operating profit (£m) | 59.7 | 24.7 | +142% |
| Profit before tax (£m) | 56.9 | 27.1 | +110% |
| Cash generated from operations (£m) | 85.9 | 35.7 | +141% |
| Diluted EPS (p) | 40.7 | 21.8 | +87% |

Financial highlights

Robust first-half performance extending our track record of growth in revenue, operating profit and cash flow: An exceptionally strong first half, with revenue up 89% to £272.6m (HY 2020: £144.3m). Revenue ahead of last year by 21% on an organic2 basis, driven by the Media division’s organic2 growth of 30% and in particular digital advertising on-platform organic2 growth of 30% and eCommerce affiliates’ organic2 growth of 56%. US achieved revenue growth of 31% on an organic2 basis and UK revenues grew by 5% organically (UK has a higher mix of events and magazines revenues which were impacted more materially by the pandemic). -

Symbolism of Commander Isaac Hull's

Presentation Pieces in the Collection of the USS Constitution Museum Silver Urn Presented to Captain Isaac Hull, 1813 Prepared by Caitlin Anderson, 2010 © USS Constitution Museum 2010 What is it? [Silver urn presented to Capt. Isaac Hull. Thomas Fletcher & Sidney Gardiner. Philadelphia, 1813. Private Collection.](1787–1827) Silver; h. 29 1/2 When is it from? © USS Constitution Museum 2010 1813 Physical Characteristics: The urn (known as a vase when it was made)1 is 29.5 inches high, 22 inches wide, and 12 inches deep. It is made entirely of sterling silver. The workmanship exhibits a variety of techniques, including cast, applied, incised, chased, repoussé (hammered from behind), embossed, and engraved decorations.2 Its overall form is that of a Greek ceremonial urn, and it is decorated with various classical motifs, an engraved scene of the battle between the USS Constitution and the HMS Guerriere, and an inscription reading: The Citizens of Philadelphia, at a meeting convened on the 5th of Septr. 1812, voted/ this Urn, to be presented in their name to CAPTAIN ISAAC HULL, Commander of the/ United States Frigate Constitution, as a testimonial of their sense of his distinguished/ gallantry and conduct, in bringing to action, and subduing the British Frigate Guerriere,/ on the 19th day of August 1812, and of the eminent service he has rendered to his/ Country, by achieving, in the first naval conflict of the war, a most signal and decisive/ victory, over a foe that had till then challenged an unrivalled superiority on the/ ocean, and thus establishing the claim of our Navy to the affection and confidence/ of the Nation/ Engraved by W. -

1 Overview of USS Constitution Re-Builds & Restorations USS

Overview of USS Constitution Re-builds & Restorations USS Constitution has undergone numerous “re-builds”, “re-fits”, “over hauls”, or “restorations” throughout her more than 218-year career. As early as 1801, she received repairs after her first sortie to the Caribbean during the Quasi-War with France. In 1803, six years after her launch, she was hove-down in Boston at May’s Wharf to have her underwater copper sheathing replaced prior to sailing to the Mediterranean as Commodore Edward Preble’s flagship in the Barbary War. In 1819, Isaac Hull, who had served aboard USS Constitution as a young lieutenant during the Quasi-War and then as her first War of 1812 captain, wrote to Stephen Decatur: “…[Constitution had received] a thorough repair…about eight years after she was built – every beam in her was new, and all the ceilings under the orlops were found rotten, and her plank outside from the water’s edge to the Gunwale were taken off and new put on.”1 Storms, battle, and accidents all contributed to the general deterioration of the ship, alongside the natural decay of her wooden structure, hemp rigging, and flax sails. The damage that she received after her War of 1812 battles with HMS Guerriere and HMS Java, to her masts and yards, rigging and sails, and her hull was repaired in the Charlestown Navy Yard. Details of the repair work can be found in RG 217, “4th Auditor’s Settled Accounts, National Archives”. Constitution’s overhaul of 1820-1821, just prior to her return to the Mediterranean, saw the Charlestown Navy Yard carpenters digging shot out of her hull, remnants left over from her dramatic 1815 battle against HMS Cyane and HMS Levant. -

The American Navy by Rear-Admiral French E

THE AMERICAN BOOKS A LIBRARY OF GOOD CITIZENSHIP "The American Books" are designed as a series of authoritative manuals, discussing problems of interest in America to-day. THE AMERICAN BOOKS THE AMERICAN COLLEGE BY ISAAC SHARPLESS THE INDIAN TO-DAY BY CHARLES A. EASTMAN COST OF LIVING BY FABIAN FRANKLIN THE AMERICAN NAVY BY REAR-ADMIRAL FRENCH E. CHADWICK, U. S. N. MUNICIPAL FREEDOM BY OSWALD RYAN AMERICAN LITERATURE BY LEON KELLNER (translated from THE GERMAN BY JULIA FRANKLIN) SOCIALISM IN AMERICA BY JOHN MACY AMERICAN IDEALS BY CLAYTON S. COOPER THE UNIVERSITY MOVEMENT BY IRA REMSEN THE AMERICAN SCHOOL BY WALTER S. HINCHMAN THE FEDERAL RESERVE BY H. PARKER WILLIS (For more extended notice of the series, see the last pages of this book.) The American Books The American Navy By Rear-Admiral French E. Chadwick (U. S. N., Retired) GARDEN CITY NEW YORK DOUBLEDAY, PAGE & COMPANY 1915 Copyright, 1915, by DOUBLEDAY, PAGE & COMPANY All rights reserved, including that of translation into foreign languages, including the Scandinavian TO MY COMRADES OF THE NAVY PAST, PRESENT, AND FUTURE BIOGRAPHICAL NOTE Rear-Admiral French Ensor Chadwick was born at Morgantown, W. Va., February 29, 1844. He was appointed to the U. S. Naval Academy from West Virginia (then part of Virginia) in 1861, and graduated in November, 1864. In the summer of 1864 he was attached to the Marblehead in pursuit of the Confederate steamers Florida and Tallahassee. After the Civil War he served successively in a number of vessels, and was promoted to the rank of Lieutenant-Commander in 1869; was instructor at the Naval Academy; on sea-service, and on lighthouse duty (1870-1882); Naval Attache at the American Embassy in London (1882-1889); commanded the Yorktown (1889-1891); was Chief Intelligence Officer (1892-1893); and Chief of the Bureau of Equipment (1893-1897). -

Antarctica: Music, Sounds and Cultural Connections

Antarctica Music, sounds and cultural connections Antarctica Music, sounds and cultural connections Edited by Bernadette Hince, Rupert Summerson and Arnan Wiesel Published by ANU Press The Australian National University Acton ACT 2601, Australia Email: [email protected] This title is also available online at http://press.anu.edu.au National Library of Australia Cataloguing-in-Publication entry Title: Antarctica - music, sounds and cultural connections / edited by Bernadette Hince, Rupert Summerson, Arnan Wiesel. ISBN: 9781925022285 (paperback) 9781925022292 (ebook) Subjects: Australasian Antarctic Expedition (1911-1914)--Centennial celebrations, etc. Music festivals--Australian Capital Territory--Canberra. Antarctica--Discovery and exploration--Australian--Congresses. Antarctica--Songs and music--Congresses. Other Creators/Contributors: Hince, B. (Bernadette), editor. Summerson, Rupert, editor. Wiesel, Arnan, editor. Australian National University School of Music. Antarctica - music, sounds and cultural connections (2011 : Australian National University). Dewey Number: 780.789471 All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying or otherwise, without the prior permission of the publisher. Cover design and layout by ANU Press Cover photo: Moonrise over Fram Bank, Antarctica. Photographer: Steve Nicol © Printed by Griffin Press This edition © 2015 ANU Press Contents Preface: Music and Antarctica . ix Arnan Wiesel Introduction: Listening to Antarctica . 1 Tom Griffiths Mawson’s musings and Morse code: Antarctic silence at the end of the ‘Heroic Era’, and how it was lost . 15 Mark Pharaoh Thulia: a Tale of the Antarctic (1843): The earliest Antarctic poem and its musical setting . 23 Elizabeth Truswell Nankyoku no kyoku: The cultural life of the Shirase Antarctic Expedition 1910–12 . -

U·M·I University Microfilms International a Bell & Howell Information Company 300 North Zeeb Road

INFORMATION TO USERS This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. Each original is also photographed in one exposure and is included in reduced form at the back of the book. Photographs included in the original manuscript have been reproduced xerographically in this copy. Higher quality 6" x 9" black and white photographic prints are available for any photographs or illustrations appearing in this copy for an additional charge. Contact UMI directly to order. U·M·I University Microfilms International A Bell & Howell Information Company 300 North Zeeb Road, Ann Arbor, MI 48106-1346 USA 313/761-4700 800/521-0600 Order Number 9429649 Subversive dialogues: Melville's intertextual strategies and nineteenth-century American ideologies Shin, Moonsu, Ph.D. University of Hawaii, 1994 U·M·I 300 N. -

The 1812 Streets of Cambridgeport

The 1812 Streets of Cambridgeport The Last Battle of the Revolution Less than a quarter of a century after the close of the American Revolution, Great Britain and the United States were again in conflict. Britain and her allies were engaged in a long war with Napoleonic France. The shipping-related industries of the neutral United States benefited hugely, conducting trade with both sides. Hundreds of ships, built in yards on America’s Atlantic coast and manned by American sailors, carried goods, including foodstuffs and raw materials, to Europe and the West Indies. Merchants and farmers alike reaped the profits. In Cambridge, men made plans to profit from this brisk trade. “[T]he soaring hopes of expansionist-minded promoters and speculators in Cambridge were based solidly on the assumption that the economic future of Cambridge rested on its potential as a shipping center.” The very name, Cambridgeport, reflected “the expectation that several miles of waterfront could be developed into a port with an intricate system of canals.” In January 1805, Congress designated Cambridge as a “port of delivery” and “canal dredging began [and] prices of dock lots soared." [1] Judge Francis Dana, a lawyer, diplomat, and Chief Justice of the Massachusetts Supreme Judicial Court, was one of the primary investors in the development of Cambridgeport. He and his large family lived in a handsome mansion on what is now Dana Hill. Dana lost heavily when Jefferson declared an embargo in 1807. Britain and France objected to America’s commercial relationship with their respective enemies and took steps to curtail trade with the United States. -

Liminal Encounters and the Missionary Position: New England's Sexual Colonization of the Hawaiian Islands, 1778-1840

University of Southern Maine USM Digital Commons All Theses & Dissertations Student Scholarship 2014 Liminal Encounters and the Missionary Position: New England's Sexual Colonization of the Hawaiian Islands, 1778-1840 Anatole Brown MA University of Southern Maine Follow this and additional works at: https://digitalcommons.usm.maine.edu/etd Part of the Other American Studies Commons Recommended Citation Brown, Anatole MA, "Liminal Encounters and the Missionary Position: New England's Sexual Colonization of the Hawaiian Islands, 1778-1840" (2014). All Theses & Dissertations. 62. https://digitalcommons.usm.maine.edu/etd/62 This Open Access Thesis is brought to you for free and open access by the Student Scholarship at USM Digital Commons. It has been accepted for inclusion in All Theses & Dissertations by an authorized administrator of USM Digital Commons. For more information, please contact [email protected]. LIMINAL ENCOUNTERS AND THE MISSIONARY POSITION: NEW ENGLAND’S SEXUAL COLONIZATION OF THE HAWAIIAN ISLANDS, 1778–1840 ________________________ A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTERS OF THE ARTS THE UNIVERSITY OF SOUTHERN MAINE AMERICAN AND NEW ENGLAND STUDIES BY ANATOLE BROWN _____________ 2014 FINAL APPROVAL FORM THE UNIVERSITY OF SOUTHERN MAINE AMERICAN AND NEW ENGLAND STUDIES June 20, 2014 We hereby recommend the thesis of Anatole Brown entitled “Liminal Encounters and the Missionary Position: New England’s Sexual Colonization of the Hawaiian Islands, 1778 – 1840” Be accepted as partial fulfillment of the requirements for the Degree of Master of Arts Professor Ardis Cameron (Advisor) Professor Kent Ryden (Reader) Accepted Dean, College of Arts, Humanities, and Social Sciences ii ACKNOWLEDGEMENTS This thesis has been churning in my head in various forms since I started the American and New England Studies Masters program at The University of Southern Maine. -

Uss "Vincennes"

S. Hrg. 100-1085 INVESTIGATION INTO THE DOWNING OF AN IRANIAN AIRLINER BY THE U.S.S. "VINCENNES" HEARING BEFORE THE COMMITTEE ON ARMED SERVICES UNITED STATES SENATE ONE HUNDREDTH CONGRESS SECOND SESSION SEPTEMBER 8, 1988 Printed for the use of the Committee on Armed Services U.S. GOVERNMENT PRINTING OFFICE 90-853 WASHINGTON : 1989 For sale by the Superintendent of Documents, Congressional Sales Office U.S. Government Printing Office, Washington, DC 20402 COMMITTEE ON ARMED SERVICES SAM NUNN, Georgia, Chairman JOHN C. STENNIS, Mississippi JOHN W. WARNER, Virginia J. JAMES EXON, Nebraska STROM THURMOND, South Carolina CARL LEVIN, Michigan GORDON J. HUMPHREY, New Hampshire EDWARD M. KENNEDY, Massachusetts WILLIAM S. COHEN, Maine JEFF BINGAMAN, New Mexico DAN QUAYLE, Indiana ALAN J. DIXON, Illinois PETE WILSON, California JOHN GLENN, Ohio PHIL GRAMM, Texas ALBERT GORE, JR., Tennessee STEVEN D. SYMMS, Idaho TIMOTHY E. WIRTH, Colorado JOHN McCAIN, Arizona RICHARD C. SHELBY, Alabama ARNOLD L. PUNARO, Staff Director CARL M. SMITH, Staff Director for the Minority CHRISTINE COWART DAUTH, Chief Clerk (II) CONTENTS CHRONOLOGICAL LIST OF WITNESSES Fogarty, Rear Adm. William M., USN, Director of Policy and Plans, U.S. Central Command, and Head of the Investigation Team accompanied by Capt. George N. Gee, USN, Director, Surface Combat Systems Division, Office of the Chief of Naval Operations and Capt. Richard D. DeBobes, Legal Adviser and Legislative Assistant to the Chairman of the Joint Chiefs of Staff. Kelly, Rear Adm. Robert J., USN, Vice Director for Operations, Joint Staff. (III) INVESTIGATION INTO THE DOWNING OF AN IRANIAN AIRLINER BY THE U.S.S. -

Ladies and Gentlemen

reaching the limits of their search area, ENS Reid and his navigator, ENS Swan decided to push their search a little farther. When he spotted small specks in the distance, he promptly radioed Midway: “Sighted main body. Bearing 262 distance 700.” PBYs could carry a crew of eight or nine and were powered by two Pratt & Whitney R-1830-92 radial air-cooled engines at 1,200 horsepower each. The aircraft was 104 feet wide wing tip to wing tip and 63 feet 10 inches long from nose to tail. Catalinas were patrol planes that were used to spot enemy submarines, ships, and planes, escorted convoys, served as patrol bombers and occasionally made air and sea rescues. Many PBYs were manufactured in San Diego, but Reid’s aircraft was built in Canada. “Strawberry 5” was found in dilapidated condition at an airport in South Africa, but was lovingly restored over a period of six years. It was actually flown back to San Diego halfway across the planet – no small task for a 70-year old aircraft with a top speed of 120 miles per hour. The plane had to meet FAA regulations and was inspected by an FAA official before it could fly into US airspace. Crew of the Strawberry 5 – National Archives Cover Artwork for the Program NOTES FROM THE ARTIST Unlike the action in the Atlantic where German submarines routinely targeted merchant convoys, the Japanese never targeted shipping in the Pacific. The American convoy system in the Pacific was used primarily during invasions where hundreds of merchant marine ships shuttled men, food, guns, ammunition, and other supplies across the Pacific. Cover Artwork for the Veterans' Biographies This PBY Catalina (VPB-44) was flown by ENS Jack Reid with his -

America's Undeclared Naval War

America's Undeclared Naval War Between September 1939 and December 1941, the United States moved from neutral to active belligerent in an undeclared naval war against Nazi Germany. During those early years the British could well have lost the Battle of the Atlantic. The undeclared war was the difference that kept Britain in the war and gave the United States time to prepare for total war. With America’s isolationism, disillusionment from its World War I experience, pacifism, and tradition of avoiding European problems, President Franklin D. Roosevelt moved cautiously to aid Britain. Historian C.L. Sulzberger wrote that the undeclared war “came about in degrees.” For Roosevelt, it was more than a policy. It was a conviction to halt an evil and a threat to civilization. As commander in chief of the U.S. armed forces, Roosevelt ordered the U.S. Navy from neutrality to undeclared war. It was a slow process as Roosevelt walked a tightrope between public opinion, the Constitution, and a declaration of war. By the fall of 1941, the U.S. Navy and the British Royal Navy were operating together as wartime naval partners. So close were their operations that as early as autumn 1939, the British Ambassador to the United States, Lord Lothian, termed it a “present unwritten and unnamed naval alliance.” The United States Navy called it an “informal arrangement.” Regardless of what America’s actions were called, the fact is the power of the United States influenced the course of the Atlantic war in 1941. The undeclared war was most intense between September and December 1941, but its origins reached back more than two years and sprang from the mind of one man and one man only—Franklin Roosevelt. -

Trends in U.S. Oil and Natural Gas Upstream Costs

Trends in U.S. Oil and Natural Gas Upstream Costs March 2016 Independent Statistics & Analysis U.S. Department of Energy www.eia.gov Washington, DC 20585 This report was prepared by the U.S. Energy Information Administration (EIA), the statistical and analytical agency within the U.S. Department of Energy. By law, EIA’s data, analyses, and forecasts are independent of approval by any other officer or employee of the United States Government. The views in this report therefore should not be construed as representing those of the Department of Energy or other federal agencies.

Contents
Summary
Onshore costs
Offshore costs
Approach
Appendix ‐ IHS Oil and Gas Upstream Cost Study (Commission by EIA)
I. Introduction
II. Summary of Results and Conclusions – Onshore Basins/Plays…