Compiler-Directed Soft Error Mitigation for Embedded Systems

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

Technical Competencies and Professional Skills

TECHNICAL COMPETENCIES AND PROFESSIONAL SKILLS Applied Mathematics Technical Skills: data visualization and analysis, SQL, finance and accounting Programming Languages: R, MATLAB, C++, Java, Python Markup Languages: LaTeX, HTML, CSS Software: Microsoft Office (Excel, Office, Word), XCode, Visual Studio Note: Skills, software, and languages are dependent on elective taken, computer selection, and class year. Applied Sciences Instrumentation: UV/Vis spectroscopy, NMR spectroscopy, IR spectroscopy, GC-MS, LC-MS, thermocycler, nucleic acid sequencer, microplate reader, cryocooler Lab Techniques: Gel electrophoresis and SDS-PAGE, Western blotting, primer design and Polymerase Chain Reaction (PCR), QPCR, sterile lab technique, cell culture, nucleic acid isolation and purification, protein isolation and purification (chromatography, centrifugation, dialysis), enzyme thermodynamics and kinetics, transfection and transformation, chemical synthesis and purification (distillation, extraction, chromatography, rotary evaporator, crystallization), inert atmosphere and Schlenk line techniques, titration, dissection of preserved specimens, four-probe electrical measurement, Bragg diffraction Competencies: Application of the scientific method, Scientific writing and oral communication Software and computational skills: Microsoft Office (Word, Excel, Powerpoint), ChemDraw, PyMol, TopSpin, Java, Protein DataBank (PDB), NCBI suite (including BLAST, GenBank), Orca Quantum Chemistry software, LoggerPro, LabView Architecture Design Skills: Multi-scale civic -

US 7,185,309 B1: Method and Apparatus for Application-Specific Programmable ...

US007185309B1 (12) United States Patent, Kulkarni et al. (10) Patent No.: US 7,185,309 B1 (45) Date of Patent: Feb. 27, 2007 (54) METHOD AND APPARATUS FOR APPLICATION-SPECIFIC PROGRAMMABLE ... (75) Inventors: Chidamber R. Kulkarni, San Jose, CA (US); Gordon J. Brebner, Monte Sereno, CA (US); Eric R. Keller, Boulder, CO (US); Philip B. James-Roxby, Longmont, CO (US) (73) Assignee: Xilinx, Inc., San Jose, CA (US) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 ... (21) Appl. No.: 10/769,591 (22) Filed: Jan. 30, 2004 (51) Int. Cl.: G06F 7/50 (2006.01) (52) U.S. Cl.: 716/18; 718/2 (58) Field of Classification Search: 716/18, 716/2, 3; 709/217; 719/313. See application file for complete search history. (56) References Cited, U.S. PATENT DOCUMENTS: 5,867,180 A * 2/1999 Katayama et al.; 2004/0006584 A1 1/2004 Vandeweerd; 2004/0128120 A1 7/2004 Coburn et al.; 2005/0034090 A1 2/2005 Sato et al.; 2005/0114593 A1 5/2005 Cassell et al.; 2005/0172085 A1 8/2005 Klingman; 2005/0172087 A1 8/2005 Klingman; 2005/0172088 A1 8/2005 Klingman; 2005/0172089 A1 8/2005 Klingman; 2005/0172090 A1 8/2005 Klingman; 2005/0172289 A1 8/2005 Klingman; 2005/0172290 A1 8/2005 Klingman. OTHER PUBLICATIONS: U.S. Appl. No. 10/769,330, filed Jan. 30, 2004, James-Roxby et al. (Continued) Primary Examiner: Thuan Do. Assistant Examiner: Binh Tat. (74) Attorney, Agent, or Firm: Robert Brush. (57) ABSTRACT Programmable architecture for implementing a message -

Standby Power Management Architecture for Deep-Submicron Systems

Standby Power Management Architecture for Deep-Submicron Systems Michael Alan Sheets Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2006-70 http://www.eecs.berkeley.edu/Pubs/TechRpts/2006/EECS-2006-70.html May 19, 2006 Copyright © 2006, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Standby Power Management Architecture for Deep-Submicron Systems by Michael Alan Sheets B.S.C.E. (Georgia Institute of Technology) 1999 M.S. (University of California, Berkeley) 2003 A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Engineering-Electrical Engineering and Computer Sciences in the GRADUATE DIVISION of the UNIVERSITY OF CALIFORNIA, BERKELEY Committee in charge: Professor Jan Rabaey, Chair Professor Robert Brodersen Professor Paul Wright Spring 2006 The dissertation of Michael Alan Sheets is approved: University of California, Berkeley Spring 2006 Standby Power Management Architecture for Deep-Submicron Systems Copyright 2006 by Michael Alan Sheets Abstract Standby Power Management Architecture for Deep-Submicron Systems by Michael Alan Sheets Doctor of Philosophy in Engineering-Electrical Engineering and Computer Sciences University of California, Berkeley Professor Jan Rabaey, Chair In deep-submicron processes a significant portion of the power budget is lost in standby power due to increasing leakage effects. -

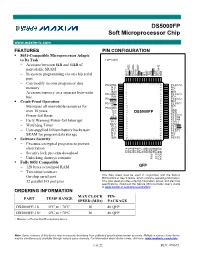

DS5000FP Soft Microprocessor Chip

DS5000FP Soft Microprocessor Chip www.maxim-ic.com FEATURES 8051-Compatible Microprocessor Adapts to Its Task − Accesses between 8kB and 64kB of nonvolatile SRAM − In-system programming via on-chip serial port − Can modify its own program or data memory − Accesses memory on a separate byte-wide bus Crash-Proof Operation − Maintains all nonvolatile resources for over 10 years − Power-fail Reset − Early Warning Power-fail Interrupt − Watchdog Timer − User-supplied lithium battery backs user SRAM for program/data storage PIN CONFIGURATION [top-view pin diagram of the DS5000FP; pin labels not reproduced] -

Microprocessor Design

Microprocessor Design en.wikibooks.org March 15, 2015 On the 28th of April 2012 the contents of the English as well as German Wikibooks and Wikipedia projects were licensed under Creative Commons Attribution-ShareAlike 3.0 Unported license. A URI to this license is given in the list of figures on page 211. If this document is a derived work from the contents of one of these projects and the content was still licensed by the project under this license at the time of derivation this document has to be licensed under the same, a similar or a compatible license, as stated in section 4b of the license. The list of contributors is included in chapter Contributors on page 209. The licenses GPL, LGPL and GFDL are included in chapter Licenses on page 217, since this book and/or parts of it may or may not be licensed under one or more of these licenses, and thus require inclusion of these licenses. The licenses of the figures are given in the list of figures on page 211. This PDF was generated by the LaTeX typesetting software. The LaTeX source code is included as an attachment (source.7z.txt) in this PDF file. To extract the source from the PDF file, you can use the pdfdetach tool included in the poppler suite, or the http://www.pdflabs.com/tools/pdftk-the-pdf-toolkit/ utility. Some PDF viewers may also let you save the attachment to a file. After extracting it from the PDF file you have to rename it to source.7z. -

Product Selector Guide July 2011

Product Selector Guide July 2011 FPGA • CPLD • MIXED SIGNAL • INTELLECTUAL PROPERTY • DEVELOPMENT KITS • DESIGN TOOLS Downloaded from Elcodis.com electronic components distributor CONTENTS ■ Advanced Packaging ■ FPGA Products ■ CPLD Products ■ Mixed Signal Products ■ Intellectual Property and Reference Designs ■ Development Kits and Evaluation Boards ■ Programming Hardware ■ Lattice Diamond® and ispLEVER® Design Software ■ PAC-Designer® Design Software Lattice Semiconductor designs, develops and markets a diverse portfolio of low-power, high-value, programmable solutions. Lattice is committed to offering engineers a complete support ecosystem for their system designs, including silicon, design software tools, IP cores, reference designs, development kits and evaluation boards. FPGA, PLD and Mixed Signal Products Lattice FPGA (Field Programmable Gate Array) solutions deliver unique features, low power, and excellent value for FPGA designs. We are also the leading supplier of low-density CMOS PLDs, and our CPLD and SPLD solutions deliver an optimal fit for -

Institutionen För Systemteknik Department of Electrical Engineering

Institutionen för systemteknik (Department of Electrical Engineering). Master Thesis: Improving an FPGA Optimized Processor. Thesis carried out in Electronics Systems at the Institute of Technology at Linköping University by Mahdad Davari. LiTH-ISY-EX--11/4520--SE. Linköping 2011. Department of Electrical Engineering, Linköping University, S-581 83 Linköping, Sweden. Improving an FPGA Optimized Processor. Thesis carried out in Computer Engineering at the Institute of Technology in Linköping by Mahdad Davari. LiTH-ISY-EX--11/4520--SE. Supervisor: Andreas Ehliar, ISY, Linköping University. Examiner: Olle Seger, ISY, Linköping University. Linköping, October 2011. Presentation Date: 2011-10-14. Publishing Date (Electronic version): 2011-10-17. Department and Division: Department of Electrical Engineering. Language: English. Type of Publication: Degree thesis. ISRN: LiTH-ISY-EX--11/4520--SE. Number of Pages: 117. URL, Electronic Version: http://www.ep.liu.se. Publication Title: Improving an FPGA Optimized Processor. Author: Mahdad Davari. Abstract: This work aims at improving an existing soft microprocessor core optimized for Xilinx Virtex®-4 FPGA. Instruction and data caches will be designed and implemented. Interrupt support will be added as well, preparing the microprocessor core to host operating systems. Thorough verification of the added modules is also emphasized in this work. Maintaining core clock frequency at its maximum has been the main concern through all the design and implementation steps. -

Reconfigurable SPI Driver for MIPS Soft-Core Processor Using FPGA

RECONFIGURABLE SPI DRIVER FOR MIPS SOFT-CORE PROCESSOR USING FPGA. Hesham Alobaisi, Saim Mohammed, Mohammad Awedh, Department of Electrical and Computer Engineering, King Abdulaziz University (KAU), Jeddah, Saudi Arabia. Abstract: Field Programmable Gate Arrays (FPGAs) are widely used in applications that require high-speed parallel computing. They provide a solution that can be customized quickly after manufacturing. MIPS soft-core processor and SPI protocol soft-core implementations are well known on FPGAs, but a customized driver for SPI communication is not available. The SPI communication protocol is used in a wide range of devices such as sensors, memory devices, and I/O expanders. The objective of this research is to design and test a reconfigurable SPI driver for the MIPS processor so that communication between devices that use the SPI protocol becomes feasible. The design requires three blocks: a soft-core MIPS processor, an SPI protocol block, and an interconnecting block between the MIPS processor and the SPI block. The MIPS processor loads the data to transmit and the configuration bits into an SFR register in data memory; the SPI block reads that register and, after communication, loads the received data into another SFR register, which the MIPS processor can read upon request. To demonstrate the proposed technique, we wrote the code and verified the results. The design architecture is coded in Verilog and VHDL using a top-down hierarchical design methodology and realized in a Spartan-3E FPGA using Xilinx ISE 14.7. Based on the ISE and FPGA implementation results, the maximum operating frequency of the whole system is found to be 122.632 MHz. -

ReadTheDocs-Breathe Documentation Release 1.0.0

ReadTheDocs-Breathe Documentation Release 1.0.0 Thomas Edvalson Feb 06, 2019 Contents: 1 Going to 11: Amping Up the Programming-Language Run-Time Foundation; 2 Solid Compilation Foundation and Language Support; 2.1 Quick Start Guide; 2.1.1 Current Release Notes; 2.1.2 Installation Guide; 2.1.3 Programming Guide; 2.1.4 ROCm GPU Tuning Guides; 2.1.5 GCN ISA Manuals; 2.1.6 ROCm API References; 2.1.7 ROCm Tools; 2.1.8 ROCm Libraries; 2.1.9 ROCm Compiler SDK; 2.1.10 ROCm System Management; 2.1.11 ROCm Virtualization & Containers; 2.1.12 Remote Device Programming; 2.1.13 Deep Learning on ROCm; 2.1.14 System Level Debug; 2.1.15 Tutorial; 2.1.16 ROCm Glossary; 2.2 Current Release Notes; 2.2.1 New features and enhancements in ROCm 2.1; 2.2.1.1 RocTracer v1.0 preview release – ‘rocprof’ HSA runtime tracing and statistics support -

A High Performance Microprocessor with DSP Extensions Optimized for the Virtex-4 FPGA

A HIGH PERFORMANCE MICROPROCESSOR WITH DSP EXTENSIONS OPTIMIZED FOR THE VIRTEX-4 FPGA. Andreas Ehliar, Per Karlström, Dake Liu, Department of Electrical Engineering, Linköping University, Sweden. ABSTRACT As the use of FPGAs increases, the importance of highly optimized processors for FPGAs will increase. In this paper we present the microarchitecture of a soft microprocessor core optimized for the Virtex-4 architecture. The core can operate at 357 MHz, which is significantly faster than Xilinx’ Microblaze architecture on the same FPGA. At this frequency it is necessary to keep the logic complexity down and this paper shows how this can be done while retaining sufficient functionality for a high performance processor. 1. INTRODUCTION The use of FPGAs has increased steadily since their introduction. The first FPGAs were limited devices, usable mainly for glue logic whereas the capabilities of modern FPGAs allow for extremely varied use cases in everything from [...] In this paper we will present a high speed soft microprocessor core with DSP extensions optimized for the Virtex-4 FPGA family. The microarchitecture of the processor is carefully designed to allow for high speed operation. 2. RELATED WORK There are many soft processor cores available for FPGA usage although Nios II, Mico32, and Microblaze are common choices thanks to the support from their vendors. Altera’s Nios II [2] is a 32-bit RISC processor that comes in three flavors, e, s, and f, with one, five, or six pipeline stages respectively. Xilinx’ Microblaze is a 32-bit RISC processor [10] optimized for Xilinx FPGAs. Lattice’ Mico32 is a 32-bit RISC processor [7] with a six stage pipeline. -

Fine-Grained On-Line Power Monitoring for Soft Microprocessor Based System-On-Chip

Fine-grained On-line Power Monitoring for Soft Microprocessor based System-on-Chip. Young H. Cho and Siddharth S. Bhargav, Information Sciences Institute, University of Southern California, Marina del Rey, California, U.S.A. {youngcho, ssbharga}@isi.edu Abstract: Today’s CMOS technologies allow larger circuit designs to fit on a single chip. However, this advantage comes at a high price of increased process-voltage-temperature (PVT) variations. FPGAs and their designs are no exceptions to such variations. In fact, the same bit file loaded into two different FPGAs of the same model can produce a significant difference in power and thermal characteristics due to variations that exist within the chip. Since it is increasingly difficult to control physical variations through manufacturing tasks, there is a need for practical ways to sense chip variations to provide a way for circuit designers to compensate or avoid its negative effects. One of the most critical aspects of such variation is power. Therefore, we developed and demonstrated a high accuracy on-chip on-line Energy-per-Component (EPC) measurement technology on [...] seem to point to fine-grained power management as the path forward to additional power savings for the future digital designs [1]. An enabling technology for an effective power management is high accuracy fine-grained power measurement system. Since traditional power measurement algorithms rely on statistically derived power models and direct measurement methods, they are proving to be either not accurate enough or too expensive to drive fine-grained power manager. FPGAs present additional challenges to measurement systems because of their need to support hardware reconfiguration. We present an efficient on-line sub-component level power measurement method that is fine-grained and highly accurate. -

Embedded Systems Course Using Altera FPGA

Embedded Systems Course Using Altera FPGA. Subramaniam Ganesan, Oakland University. Abstract: As the number of embedded system applications and their complexity increase, there is a demand to use advanced technologies for embedded system design. This course describes the concepts of system design with the latest FPGAs (field programmable gate arrays), which can also implement soft-core microcontrollers, and lists a number of laboratory exercises and end-of-semester course projects. The course covers the design of advanced FPGAs with soft-core micros, interfaces to hard-core micros, and applications. The applications taught include digital signal processing, image processing, display, pulse width modulation, small motor control, and automotive navigation. This paper describes a senior undergraduate/graduate level course with details of the topics taught, textbooks to use, software tools to use, the lab exercises, and suitable projects. Introduction: Embedded systems often need increased performance and more functions. There is a need to integrate more devices and chips and to decrease power consumption, cost, size, and time to market. Prices of complex FPGAs with a 32-bit soft microprocessor core are falling and are approaching the price of a microprocessor system. Altera and Xilinx are leading FPGA chip manufacturers, and they have a number of devices for the embedded system market at different price ranges. The FPGA represents the logical extension in the “hard to soft” migration of system functionality. Software tools for FPGAs are growing and becoming easier to use. Processors, peripheral devices, logic, and software can be changed in an FPGA system even after manufacturing, leading to easier reconfigurability and upgradability.