SHFE HARI Evidence Chapter FINAL

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Tugboat, Volume 11 (1990), No

TUGboat, Volume 11 (1990), No. 2

G.A. Kubba. The Impact of Computers on Arabic Writing, Character Processing, and Teaching. Information Processing, 80:961-965, 1980. Pierre Mackay. Typesetting Problem Scripts. Byte, 11(2):201-218, February 1986. J. Marshall Unger. The Fifth Generation Fallacy - Why Japan is Betting its Future on Artificial Intelligence. Oxford University Press, 1987. X/Open Company, Ltd. X/Open Portability Guide, Supplementary Definitions, volume 3. Prentice-Hall, 1989.

Nelson H.F. Beebe, Center for Scientific Computing and Department of Mathematics, South Physics Building, University of Utah, Salt Lake City, UT 84112, USA. Tel: (801) 581-5254. Internet: Beebe@science.utah.edu

On Standards

to Computer Modern fonts; I strongly support the principal idea, and I pursue it in the present paper. To organize the discussion in a systematic way, I will use the notions, borrowed from [2], of text encoding, typing and rendering.

2 Text encoding

In the context of TeX, encoding means the character sets of the fonts in question and their layouts. In the present section I will focus my attention on the character sets, as the layouts should be influenced, among others, by typing considerations. In an attempt to obtain a general idea about the use of the latin alphabet worldwide, I looked up the only relevant reference work I am aware of, namely Languages Identification Guide [7] (hereafter LIG). Apart from the latin scripts used in the Soviet Union and later replaced by Cyrillic ones, it lists 82 languages using the latin alphabet with additional letters (I preserve the original spelling): Albanian, Aymara, Basque, Breton, Bui, Catalan, Choctaw, Chuana, Cree, Czech, Danish, Delaware, Dutch, Eskimo, Esperanto, Estonian, Ewe, Faroese (also spelled Faroeish), Fiji, Finnish, French, Frisian, Fulbe, German, Guarani, Hausa, Hungarian, Icelandic, Irish, Italian, Javanese, Juang, Kasubian, Kurdish, Lahu, Lahuli, Latin, Lettish, Lingala, Lithuanian, Lisu, Luba, Madura.

Ffontiau Cymraeg

This publication is available in other languages and formats on request. Mae'r cyhoeddiad hwn ar gael mewn ieithoedd a fformatau eraill ar gais. [email protected] www.caerphilly.gov.uk/equalities How to type Accented Characters This guidance document has been produced to provide practical help when typing letters or circulars, or when designing posters or flyers so that getting accents on various letters when typing is made easier. The guide should be used alongside the Council’s Guidance on Equalities in Designing and Printing. Please note this is for PCs only and will not work on Macs. Firstly, on your keyboard make sure the Num Lock is switched on, or the codes shown in this document won’t work (this button is found above the numeric keypad on the right of your keyboard). By pressing the ALT key (to the left of the space bar), holding it down and then entering a certain sequence of numbers on the numeric keypad, it's very easy to get almost any accented character you want. For example, to get the letter “ô”, press and hold the ALT key, type in the code 0 2 4 4, then release the ALT key. The number sequences shown from page 3 onwards work in most fonts in order to get an accent over “a, e, i, o, u”, the vowels in the English alphabet. In other languages, for example in French, the letter "c" can be accented and in Spanish, "n" can be accented too. Many other languages have accents on consonants as well as vowels. -
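The ALT-code procedure described in this guide can be illustrated with a short Python sketch. It assumes that zero-prefixed codes such as 0 2 4 4 index into the Windows-1252 code page, which is the usual case on Western European Windows installations but is not stated in the guide; the function name `alt_code_to_char` is hypothetical.

```python
def alt_code_to_char(code: str, codepage: str = "cp1252") -> str:
    """Return the character a zero-prefixed ALT code would produce.

    Assumption: the code is a byte value in the given single-byte
    code page (Windows-1252 by default).
    """
    if not (code.isdigit() and code.startswith("0")):
        raise ValueError("expected a zero-prefixed numeric code such as '0244'")
    value = int(code)
    if value > 255:
        raise ValueError("single-byte code pages only cover 0-255")
    # Decode the single byte using the assumed code page.
    return bytes([value]).decode(codepage)


# The guide's own example: ALT + 0 2 4 4
print(alt_code_to_char("0244"))
```

On systems configured with a different ANSI code page the same digits may yield a different character, which is one reason the guide's codes are described as working in "most" fonts rather than universally.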

Combining Diacritical Marks Range: 0300–036F the Unicode Standard

Combining Diacritical Marks Range: 0300–036F The Unicode Standard, Version 4.0 This file contains an excerpt from the character code tables and list of character names for The Unicode Standard, Version 4.0. Characters in this chart that are new for The Unicode Standard, Version 4.0 are shown in conjunction with any existing characters. For ease of reference, the new characters have been highlighted in the chart grid and in the names list. This file will not be updated with errata, or when additional characters are assigned to the Unicode Standard. See http://www.unicode.org/charts for access to a complete list of the latest character charts. Disclaimer These charts are provided as the on-line reference to the character contents of the Unicode Standard, Version 4.0 but do not provide all the information needed to fully support individual scripts using the Unicode Standard. For a complete understanding of the use of the characters contained in this excerpt file, please consult the appropriate sections of The Unicode Standard, Version 4.0 (ISBN 0-321-18578-1), as well as Unicode Standard Annexes #9, #11, #14, #15, #24 and #29, the other Unicode Technical Reports and the Unicode Character Database, which are available on-line. See http://www.unicode.org/Public/UNIDATA/UCD.html and http://www.unicode.org/unicode/reports A thorough understanding of the information contained in these additional sources is required for a successful implementation. Fonts The shapes of the reference glyphs used in these code charts are not prescriptive. Considerable variation is to be expected in actual fonts. -
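As a rough illustration of how the characters in this range behave in practice, the following Python sketch uses the standard `unicodedata` module to compose a base letter with a combining mark and to strip marks from the 0300–036F block. The helper names `in_combining_block` and `strip_marks` are illustrative, not part of any standard.

```python
import unicodedata

# Bounds of the Combining Diacritical Marks block named in the chart title.
BLOCK_START, BLOCK_END = 0x0300, 0x036F


def in_combining_block(ch: str) -> bool:
    """True if the character lies in the range 0300-036F."""
    return BLOCK_START <= ord(ch) <= BLOCK_END


def strip_marks(text: str) -> str:
    """Decompose the text (NFD) and drop marks from the block."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(c for c in decomposed if not in_combining_block(c))


# "o" followed by U+0302 composes to the single code point U+00F4 under NFC.
composed = unicodedata.normalize("NFC", "o\u0302")
print(composed, unicodedata.name("\u0302"))
print(strip_marks("caf\u00e9"))
```

Stripping marks this way only affects characters that have a canonical decomposition; letters such as ø, whose stroke is not encoded as a separate combining mark, are left unchanged.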

Alphabets, Letters and Diacritics in European Languages (As They Appear in Geography)

Vigleik Leira (Norway): [email protected] Alphabets, Letters and Diacritics in European Languages (as they appear in Geography) To the best of my knowledge English seems to be the only language which makes use of a "clean" Latin alphabet, i.e. there is no use of diacritics or special letters of any kind. All the other languages based on Latin letters employ, to a larger or lesser degree, some diacritics and/or some special letters. The survey below is purely literal. It has nothing to say on the pronunciation of the different letters. Information on the phonetic/phonemic values of the graphic entities must be sought elsewhere, in language-specific descriptions. The 26 letters a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p, q, r, s, t, u, v, w, x, y, z may be considered the standard European alphabet. In this article the word diacritic is used with this meaning: any sign placed above, through or below a standard letter (among the 26 given above), disregarding the cases where the resulting letter (e.g. å in Norwegian) is considered an ordinary letter in the alphabet of the language where it is used. Albanian The alphabet (36 letters): a, b, c, ç, d, dh, e, ë, f, g, gj, h, i, j, k, l, ll, m, n, nj, o, p, q, r, rr, s, sh, t, th, u, v, x, xh, y, z, zh. Missing standard letter: w. Letters with diacritics: ç, ë. Sequences treated as one letter: dh, gj, ll, nj, rr, sh, th, xh, zh.
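The Albanian entry can be made concrete with a small Python sketch that splits a word into alphabet letters, treating two-character sequences as single letters. The digraph set is derived from the 36-letter alphabet listed above; the greedy left-to-right matching and the function name `split_letters` are assumptions for illustration and ignore any cases where two adjacent letters merely happen to look like a digraph.

```python
# The 36-letter Albanian alphabet as given in the survey.
ALBANIAN_ALPHABET = (
    "a b c ç d dh e ë f g gj h i j k l ll m n nj o p q r rr "
    "s sh t th u v x xh y z zh"
).split()

# Two-character entries of the alphabet count as one letter each.
DIGRAPHS = {letter for letter in ALBANIAN_ALPHABET if len(letter) == 2}


def split_letters(word: str) -> list[str]:
    """Greedily split a word into Albanian alphabet letters."""
    word = word.lower()
    letters = []
    i = 0
    while i < len(word):
        pair = word[i:i + 2]
        if pair in DIGRAPHS:
            letters.append(pair)  # consume both characters as one letter
            i += 2
        else:
            letters.append(word[i])
            i += 1
    return letters


print(len(ALBANIAN_ALPHABET))
print(split_letters("shqip"))
```

The same approach would apply to other alphabets in the survey that treat letter sequences as single letters, given the appropriate letter list.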

Diacritics-ELL.Pdf

Diacritics J.C. Wells, University College London Diacritics are distinguishing marks attached to letters of the alphabet, for example the acute accent on the é in café. Most language orthographies based on the Latin alphabet make some use of diacritics, as indeed do those based on other alphabets and writing systems. The focus of this article is on the role of diacritics in the orthography of languages written with the Latin alphabet. Indeed, the origin of some letters that are now a standard part of the alphabet lies in the use of diacritics. The letter G was invented in Roman times as a variant of C, distinguished by the crossbar on the upstroke. The letter J was not distinguished from I, nor U from V, until the 16th century (Sampson 1985: 110). The new letter 1 is obviously a variant on n and so could be seen as incorporating a diacritic tail. Diacritics proper, though, are seen as marks attached to a base letter. In this sense, m y 1 do not involve diacritics. The extensive use of diacritics to supplement the Latin alphabet in cases where it was seen as inadequate for the sounds of other languages is generally attributed to the religious reformer Jan Hus (1369-1415), who devised a reformed orthography for Czech incorporating ‘accented’ letters such as ˛ ¹ = > ?. Most diacritics are placed above the base letter with which they are associated. A few, however, are placed below it (as “) or through it (as B). 1 Latin letters come in lower-case and upper-case versions.

Dear Parents and Guardians, This Week Lori Caron, a Fourth Grade

Dear Parents and Guardians, This week Lori Caron, a fourth grade teacher at Hunking, and her family honored her brother-in-law by dedicating the Hunking Gym to him. Brian Caron passed away in March 2016. Brian died as a hero! He worked at Stavis Seafood and the company had an ammonia leak. Brian made it out safely but went back in to save co-workers. Brian had a love for sports and the Caron family honored this love by bidding on the gym dedication at a fundraiser for Hunking. Thank you to the Caron family for supporting Hunking students. We are honored to celebrate Brian’s memory at Hunking! https://www.seafoodsource.com/news/supply-trade/stavis-seafoods-ammonia-leak-kills-one-worker-four-others-escape https://drive.google.com/file/d/1Fu440CqLzV71_YAAQJxxkWFqx_Gpn-qB/view?ts=5c598a7e Hunking students are working toward demonstrating Hunking PRIDE! PR = Personal Responsibility, I = Integrity, D = Determination, E = Excellence. Hunking NEW News, Reminders, Requests: ● Join us today for the Bring a Special Person Dance! Enter and exit through the main entrance of the school. ○ K-2 3:00-4:30 ○ 3-4 5:00-6:30 ○ All students must be accompanied by an adult! ● Our 100th day of school parade was so much fun. The band did an amazing job and the kids and staff looked great! ● The PTO is still looking for grade level representatives for grades 4, 6, 7, and 8. If you are interested, send an email to the PTO at [email protected]. ● Hunking Important Dates: ● February 18-22 Mid Winter Break, No School ● March 1 Dance for grades 5-8 5:00-6:30

Praise for Junana: “In a Society Quickly Shifting Into an Age of Hyper-Connectivity, Junana Is a Timely Read

Praise for Junana: “In a society quickly shifting into an age of hyper-connectivity, Junana is a timely read. The narrative is as fast-paced and complex as our supermodern, technosocial lives. Caron creates a world so vivid and omniscient that one wonders if Caron is simply reporting on something that is already happening. Caron effortlessly handles multiple perspectives, social classes and age groups. Junana should appeal to educators, marketers, programmers and anyone who is a critical thinker looking for something unique and rich for their cranium to bite into. Junana is an important work that provides a lens with which to greater understand the rapid change we're currently experiencing.” Amber Case, cyborg anthropologist Twitter: caseorganic “Junana was a fabulous book. It was part Snow Crash, part Neuromancer part modern society and the implications of our social networking. It captures what might happen if we had an accelerated learning system, who would be challenged by the notion, who would build on the notion. The is a great story and many deep issues that leave you reflecting about social networking, gaming, learning and the world that we live in or what it might be..........” Dave Toole, CEO Outhink Media, Inc. “Highly recommended!...The very interesting premise is thoughtfully worked out. A bit of techno-speak sprinkled here and there lends verisimilitude, but non-techies can ignore it in favor of the story.” Jeff deLaBeaujardiere, NOAA geek and musician Also by Bruce Caron Snoquask: The Last White Dancer Community, Democracy, and Performance Inside the Live Reptile Tent (with Jeff Brouws) Global Villages (DVD, with Tamar Gordon) Junana: Game Nation Junana: Game State Junana Junana by Bruce Caron Yanagi Press Disclaimer: Junana is a work of fiction. -

1 Symbols (2286)

1 Symbols (2286)
USV  Symbol  Macro(s)  Description
0009  \textHT  <control>
000A  \textLF  <control>
000D  \textCR  <control>
0022  ”  \textquotedbl  QUOTATION MARK
0023  #  \texthash \textnumbersign  NUMBER SIGN
0024  $  \textdollar  DOLLAR SIGN
0025  %  \textpercent  PERCENT SIGN
0026  &  \textampersand  AMPERSAND
0027  ’  \textquotesingle  APOSTROPHE
0028  (  \textparenleft  LEFT PARENTHESIS
0029  )  \textparenright  RIGHT PARENTHESIS
002A  *  \textasteriskcentered  ASTERISK
002B  +  \textMVPlus  PLUS SIGN
002C  ,  \textMVComma  COMMA
002D  -  \textMVMinus  HYPHEN-MINUS
002E  .  \textMVPeriod  FULL STOP
002F  /  \textMVDivision  SOLIDUS
0030  0  \textMVZero  DIGIT ZERO
0031  1  \textMVOne  DIGIT ONE
0032  2  \textMVTwo  DIGIT TWO
0033  3  \textMVThree  DIGIT THREE
0034  4  \textMVFour  DIGIT FOUR
0035  5  \textMVFive  DIGIT FIVE
0036  6  \textMVSix  DIGIT SIX
0037  7  \textMVSeven  DIGIT SEVEN
0038  8  \textMVEight  DIGIT EIGHT
0039  9  \textMVNine  DIGIT NINE
003C  <  \textless  LESS-THAN SIGN
003D  =  \textequals  EQUALS SIGN
003E  >  \textgreater  GREATER-THAN SIGN
0040  @  \textMVAt  COMMERCIAL AT
005C  \  \textbackslash  REVERSE SOLIDUS
005E  ^  \textasciicircum  CIRCUMFLEX ACCENT
005F  _  \textunderscore  LOW LINE
0060  ‘  \textasciigrave  GRAVE ACCENT
0067  g  \textg  LATIN SMALL LETTER G
007B  {  \textbraceleft  LEFT CURLY BRACKET
007C  |  \textbar  VERTICAL LINE
007D  }  \textbraceright  RIGHT CURLY BRACKET
007E  ~  \textasciitilde  TILDE
00A0  \nobreakspace  NO-BREAK SPACE
00A1  ¡  \textexclamdown  INVERTED EXCLAMATION MARK
00A2  ¢  \textcent  CENT SIGN
00A3  £  \textsterling  POUND SIGN
00A4  ¤  \textcurrency  CURRENCY SIGN
00A5  ¥  \textyen  YEN SIGN
00A6
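A table of this kind lends itself to a simple lookup from Unicode scalar value to macro name. The Python sketch below copies a handful of rows from the table above and replaces matching characters in a string. The trailing `{}` after each macro is an assumption added here so that a following letter is not absorbed into the macro name, and the function name `to_macros` is hypothetical.

```python
# A few (USV -> macro) rows copied from the symbol table.
MACROS = {
    0x0023: r"\texthash",
    0x0024: r"\textdollar",
    0x0025: r"\textpercent",
    0x0026: r"\textampersand",
    0x005C: r"\textbackslash",
    0x005E: r"\textasciicircum",
    0x005F: r"\textunderscore",
    0x007B: r"\textbraceleft",
    0x007D: r"\textbraceright",
    0x007E: r"\textasciitilde",
}


def to_macros(text: str) -> str:
    """Replace each character that has a table entry with its macro."""
    return "".join(
        MACROS[ord(ch)] + "{}" if ord(ch) in MACROS else ch
        for ch in text
    )


print(to_macros("50% & #1"))
```

Extending the dictionary with further rows from the table would cover more of the listed symbols in the same way.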

Proposal for "Swedish International" Keyboard

ISO/IEC JTC 1/SC 35 N 0748 DATE: 2005-01-31 ISO/IEC JTC 1/SC 35 User Interfaces Secretariat: Association Française de Normalisation (AFNOR) TITLE: Proposal for "Swedish International" keyboard SOURCE: Karl Ivar Larsson, Swedish Expert STATUS: FYI DATE: 2005-01-31 DISTRIBUTION: P and O members of JTC1/SC35 MEDIUM: E NO. OF PAGES: 8 Secretariat ISO/IEC JTC 1/SC 35 – Nathalie Cappel-Souquet – 11 Avenue Francis de Pressensé 93571 St Denis La Plaine Cedex France Telephone: + 33 1 41 62 82 55; Facsimile: + 33 1 49 17 91 29 e-mail: [email protected] LWP Consulting R 04/0-3 Notes: 1. This document was handed out in the SC35 Stockholm meeting 2004-11-24. 2. The proposal contained in the document relates to Swedish standardization, and at present not to any SC35 activities. Contents: 1 Scope; 2 Characters added; 2.1 Diacritical marks; 2.2 Special-shape letters; 2.3 Other characters

LNCS 7381, Pp

Multi-Tilde-Bar Derivatives Pascal Caron, Jean-Marc Champarnaud, and Ludovic Mignot LITIS, Université de Rouen, 76801 Saint-Étienne du Rouvray Cedex, France {pascal.caron,jean-marc.champarnaud,ludovic.mignot}@univ-rouen.fr Abstract. Multi-tilde-bar operators allow us to extend regular expressions. The associated extended expressions are compatible with the structure of Glushkov automata and they provide a more succinct representation than standard expressions. The aim of this paper is to examine the derivation of multi-tilde-bar expressions. Two types of computation are investigated: Brzozowski derivation and Antimirov derivation, as well as the construction of the associated automata. 1 Introduction Regular expression word derivatives have been introduced in [5] by Brzozowski in order to compute language quotients via expression derivatives: for any word w, the language denoted by the derivative of a regular expression E w.r.t. w is the left quotient of the language denoted by E w.r.t. w. Regular expression derivation plays a fundamental role in theory of automata. In particular, under the assumption that the set D of all the derivatives of a regular expression E is finite, it is possible to construct a FA (finite automaton) with D as a set of states that recognizes the language denoted by E. Word derivatives handle unrestricted regular expressions; they are themselves expressions and they provide a DFA (deterministic finite automaton), as far as the ACI (associativity, commutativity and idempotence) properties of the sum of two expressions are used. Alternative types of derivation have been designed since Brzozowski’s seminal work.
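The idea of word derivatives described in this introduction can be sketched in Python for standard regular expressions only; the multi-tilde-bar operators that are the paper's subject are not modelled here. The tuple encoding, the constructor names and the light simplifications (a partial stand-in for the ACI rules) are all assumptions made for illustration.

```python
from functools import reduce

# Expressions are tuples: ("empty",), ("eps",), ("sym", a),
# ("alt", r, s), ("cat", r, s), ("star", r).
EMPTY, EPS = ("empty",), ("eps",)


def sym(a):
    return ("sym", a)


def alt(r, s):
    # Sum with simple simplifications: identity element and idempotence.
    if r == EMPTY:
        return s
    if s == EMPTY or r == s:
        return r
    return ("alt", r, s)


def cat(r, s):
    # Concatenation with the empty set as zero and epsilon as identity.
    if r == EMPTY or s == EMPTY:
        return EMPTY
    if r == EPS:
        return s
    if s == EPS:
        return r
    return ("cat", r, s)


def star(r):
    if r in (EMPTY, EPS):
        return EPS
    return r if r[0] == "star" else ("star", r)


def nullable(r):
    """True if the language of r contains the empty word."""
    tag = r[0]
    if tag in ("eps", "star"):
        return True
    if tag in ("empty", "sym"):
        return False
    if tag == "alt":
        return nullable(r[1]) or nullable(r[2])
    return nullable(r[1]) and nullable(r[2])  # cat


def derive(r, a):
    """Derivative of r with respect to the single symbol a."""
    tag = r[0]
    if tag in ("empty", "eps"):
        return EMPTY
    if tag == "sym":
        return EPS if r[1] == a else EMPTY
    if tag == "alt":
        return alt(derive(r[1], a), derive(r[2], a))
    if tag == "star":
        return cat(derive(r[1], a), r)
    head = cat(derive(r[1], a), r[2])  # cat case
    return alt(head, derive(r[2], a)) if nullable(r[1]) else head


def matches(r, word):
    """w is in L(r) iff the derivative of r w.r.t. w is nullable."""
    return nullable(reduce(derive, word, r))


# (a+b)* a b
expr = cat(star(alt(sym("a"), sym("b"))), cat(sym("a"), sym("b")))
print(matches(expr, "aab"), matches(expr, "ba"))
```

Collecting the distinct expressions reachable through `derive` would give the states of the automaton mentioned in the text, though guaranteeing finiteness in general requires fuller ACI normalization than the simplifications used here.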

DAVID CARON Co-Chair, Miller Institute for Global Challenges & the Law C

DAVID CARON Co-Chair, Miller Institute for Global Challenges & the Law C. William Maxeiner Distinguished Professor of Law Berkeley Law David Caron ‘83 is an expert in international law. He currently teaches public international law, resolution of private international disputes, ocean law and policy, and the advanced international law writing workshop. Professor Caron is President of the American Society of International Law, Immediate Past Chair of the Advisory Board for the Institute of Transnational Arbitration of the Center for American and International Law, a member of the Global Agenda Council of the World Economic Forum, and a member of the U.S. Department of State Advisory Committee on Public International Law since 1993. He is the co-director of the Miller Institute on Global Challenges and the Law and the co-director of the Law of the Sea Institute, an international consortium of scholars that has played a major part in studies of ocean law since the 1970s. He is a member of the board of editors of the American Journal of International Law, a co-editor in chief of the World Arbitration and Mediation Review and co-editor in chief of the SSRN International Environmental Law Ejournal. Before joining the Boalt faculty in 1987, he practiced with the San Francisco firm of Pillsbury Madison & Sutro. From 1985 to 1986, he was a senior research fellow at the Max Planck Institute for Comparative Public and International Law. A Fulbright scholar and former navigator and salvage diver in the U.S. Coast Guard, Professor Caron graduated from Berkeley Law in 1983. -

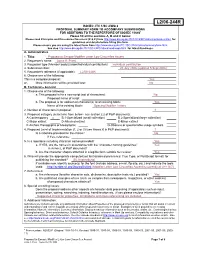

L2/06-244R 6

ISO/IEC JTC 1/SC 2/WG 2 PROPOSAL SUMMARY FORM TO ACCOMPANY SUBMISSIONS FOR ADDITIONS TO THE REPERTOIRE OF ISO/IEC 10646 Please fill all the sections A, B and C below. Please read Principles and Procedures Document (P & P) from http://www.dkuug.dk/JTC1/SC2/WG2/docs/principles.html for guidelines and details before filling this form. Please ensure you are using the latest Form from http://www.dkuug.dk/JTC1/SC2/WG2/docs/summaryform.html. See also http://www.dkuug.dk/JTC1/SC2/WG2/docs/roadmaps.html for latest Roadmaps. A. Administrative 1. Title: Proposal to Encode Modifier Letter Low Circumflex Accent 2. Requester's name: Lorna A. Priest 3. Requester type (Member body/Liaison/Individual contribution): Individual contribution 4. Submission date: 20 July 2006 (updated 5 Sept 2006) 5. Requester's reference (if applicable): L2/06-244R 6. Choose one of the following: This is a complete proposal: Yes or, More information will be provided later: No B. Technical – General 1. Choose one of the following: a. This proposal is for a new script (set of characters): No Proposed name of script: b. The proposal is for addition of character(s) to an existing block: Yes Name of the existing block: Spacing Modifier Letters 2. Number of characters in proposal: 1 3. Proposed category (select one from below - see section 2.2 of P&P document): A-Contemporary x B.1-Specialized (small collection) B.2-Specialized (large collection) C-Major extinct D-Attested extinct E-Minor extinct F-Archaic Hieroglyphic or Ideographic G-Obscure or questionable usage symbols 4.