Transistor-Level Programmable Fabric

Recommended publications

Intel Quartus Prime Pro Edition User Guide: Partial Reconfiguration

Intel® Quartus® Prime Pro Edition User Guide: Partial Reconfiguration. Updated for Intel® Quartus® Prime Design Suite: 19.3. UG-20136 | 2019.11.18

Contents

1. Creating a Partial Reconfiguration Design
1.1. Partial Reconfiguration Terminology
1.2. Partial Reconfiguration Process Sequence
1.3. Internal Host Partial Reconfiguration
1.4. External Host Partial Reconfiguration
1.5. Partial Reconfiguration Design Considerations
1.5.1. Partial Reconfiguration Design Guidelines
1.5.2. PR File Management
1.5.3. Evaluating PR Region Initial Conditions
1.5.4. Creating Wrapper Logic for PR Regions
1.5.5. Creating Freeze Logic for PR Regions
1.5.6. Resetting the PR Region Registers
1.5.7. Promoting -

CHAPTER 3: Combinational Logic Design with PLDs

LSI chips that can be programmed to perform a specific function have largely supplanted discrete SSI and MSI chips in board-level designs. A programmable logic device (PLD) is an LSI chip that contains a “regular” circuit structure, but that allows the designer to customize it for a specific application. PLDs sold on the market are not customized with specific functions. Instead, they are programmed by the purchaser to perform a function required by a particular application. PLD-based board-level designs often cost less than SSI/MSI designs for a number of reasons. Since PLDs provide more functionality per chip, the total chip and printed-circuit-board (PCB) area are less. Manufacturing costs are reduced in other ways too. A PLD-based board manufacturer needs to keep samples of only a few “generic” PLD types, instead of many different MSI part types. This reduces overall inventory requirements and simplifies handling. PLD-type structures also appear as logic elements embedded in LSI chips, where chip count and board area are not an issue. Despite the fact that a PLD may “waste” a certain number of gates, a PLD structure can actually reduce circuit cost because its “regular” physical structure may use less chip area than a “random logic” circuit. More importantly, the logic function performed by the PLD structure can often be “tweaked” in successive chip revisions by changing just one or a few metal mask layers that define signal connections in the array, instead of requiring a wholesale addition of gates and gate inputs and subsequent re-layout of a “random logic” design. -

SRAM Read/Write Margin Enhancements Using Finfets

IEEE TRANSACTIONS ON VERY LARGE SCALE INTEGRATION (VLSI) SYSTEMS, VOL. 18, NO. 6, JUNE 2010, p. 887

SRAM Read/Write Margin Enhancements Using FinFETs

Andrew Carlson, Member, IEEE, Zheng Guo, Student Member, IEEE, Sriram Balasubramanian, Member, IEEE, Radu Zlatanovici, Member, IEEE, Tsu-Jae King Liu, Fellow, IEEE, and Borivoje Nikolić, Senior Member, IEEE

Abstract: Process-induced variations and sub-threshold leakage in bulk-Si technology limit the scaling of SRAM into sub-32 nm nodes. New device architectures are being considered to improve control and reduce short channel effects. Among the likely candidates, FinFETs are the most attractive option because of their good scalability and possibilities for further SRAM performance and yield enhancement through independent gating. The enhancements to read/write margins and yield are investigated in detail for two cell designs employing independently gated FinFETs. It is shown that FinFET-based 6-T SRAM cells designed with pass-gate feedback (PGFB) achieve significant improvements in the cell read stability without area penalty. The write-ability of the cell can be improved through the use of pull-up write gating […]

[…] [1]. Accurate control is essential for high read stability. Similarly, variability and device leakage affect the writeability of the cell. To maintain both desired writeability and read stability of the SRAM arrays, several radical departures from the conventional design have been considered as follows. 1) Scaling of the traditional six-transistor (6-T) SRAM cell at a slower pace, since a transistor with a larger area is more immune to variations. This is a common approach in 65- and 45-nm technology nodes; while it still might be applicable to small arrays in future, it fundamentally undermines the objective of technology scaling. -

Software-Defined Hardware Provides the Key to High-Performance Data

Software-Defined Hardware Provides the Key to High-Performance Data Acceleration (WP019). White Paper, November 13, 2019.

Executive Summary: Across a wide range of industries, data acceleration is the key to building efficient, smart systems. Traditional general-purpose processors are falling short in their ability to support the performance and latency constraints that users have. A number of accelerator technologies based on custom silicon, graphics processors or dynamically reconfigurable hardware have appeared to fill the gap, but the key to their success is their ability to integrate into an environment where high throughput, low latency and ease of development are paramount requirements. A board-level platform developed jointly by Achronix and BittWare has been optimized for these applications, providing developers with a rapid path to deployment for high-throughput data acceleration.

A Growing Demand for Distributed Acceleration: There is a massive thirst for performance to power a diverse range of applications in both cloud and edge computing. To satisfy this demand, operators of data centers, network hubs and edge-computing sites are turning to the technology of customized accelerators. Accelerators are a practical response to the challenges faced by users who need high-performance computing platforms and can no longer count on traditional general-purpose CPUs, such as those in the Intel Xeon family, to support the growth in demand for data throughput. The core of the problem with the general-purpose CPU is that Moore's Law continues to double the number of available transistors per square millimeter approximately every two years but no longer allows for growth in clock speeds. -

Design and Implementation of 4 Bit Carry Skip Adder Using NMOS and PMOS Transmission Gate

EasyChair Preprint № 2561. Design and Implementation of 4 Bit Carry Skip Adder Using NMOS and PMOS Transmission Gate. Ashutosh Pandey, Harshit Singh, Vivek Kumar Chaubey and Utkarsh Jaiswal. EasyChair preprints are intended for rapid dissemination of research results and are integrated with the rest of EasyChair. February 5, 2020.

CHAPTER 1: INTRODUCTION. In the field of electronics, a digital circuit that performs addition of numbers is called an adder or summer. In computers and other kinds of processors, adders are used in the arithmetic logic units as well as in other parts, where they compute table indices, addresses and similar quantities. Most common adders operate on binary numbers, but they can also be constructed for many other numerical representations, such as excess-3 or binary coded decimal (BCD). Where negative numbers are represented by one's or two's complement, it is straightforward to turn the adder into an adder-subtractor unit. Power-efficient VLSI circuits are needed to meet the persistent demand for mobile electronic devices. The calculations in these devices ought to be performed using area-efficient, low-power circuits working at high speed. The most elementary arithmetic operation is addition, and the most basic arithmetic component of the processor is the adder. Depending on the delay, area and power consumption requirements, several adder implementations are available, such as ripple carry, carry skip, carry select and carry look-ahead. The ripple carry adder (RCA) is not very efficient for large bit widths: its delay increases linearly with the bit length. -
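The linear delay of the ripple carry adder mentioned in this excerpt can be illustrated with a small bit-level model. This is only a sketch under stated assumptions: the function names `full_adder` and `ripple_carry_add` are hypothetical and are not taken from the preprint, and the count of full-adder stages stands in for gate delay.

```python
def full_adder(a, b, cin):
    """One-bit full adder: returns (sum, carry_out)."""
    s = a ^ b ^ cin
    cout = (a & b) | (cin & (a ^ b))
    return s, cout


def ripple_carry_add(x, y, width=4, cin=0):
    """Add two width-bit numbers by chaining full adders.

    Returns (sum, carry_out, stages), where stages is the number of
    full adders the carry has to pass through in the worst case.
    """
    carry = cin
    total = 0
    stages = 0
    for i in range(width):
        a = (x >> i) & 1
        b = (y >> i) & 1
        # Each stage needs the carry of the previous stage before it can settle.
        s, carry = full_adder(a, b, carry)
        total |= s << i
        stages += 1
    return total, carry, stages
```

Because every stage waits on the previous carry, the worst-case path in this model grows in step with `width`, which is the behavior that carry-skip and carry look-ahead schemes try to shorten.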

High Performance Ripple Carry Adder Using Domino

International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056, p-ISSN: 2395-0072, Volume: 02 Issue: 08 | Nov-2015, www.irjet.net

HIGH PERFORMANCE RIPPLE CARRY ADDER USING DOMINO LOGIC

Dr. J. Karpagam (1), A. Arunadevi (2)
(1) Professor, Department of ECE, KPRIET, Coimbatore, Tamilnadu, India
(2) PG Scholar, Department of ECE, KPRIET, Coimbatore, Tamilnadu, India

ABSTRACT: The demand for and popularity of portable electronics is driving designers to strive for small silicon area, higher speeds, low power dissipation and reliability. Domino logic circuits are important because they provide better speed and require fewer transistors than static CMOS logic circuits. This project presents the design and performance of an 8-bit ripple carry adder using CMOS domino logic, targeting full-custom high-speed applications. The constant delay characteristic of this logic style, regardless of the logic expression, makes it suitable for implementing complicated logic expressions such as addition.

[…] excessively with a mixture of dynamic and static circuit styles, use of dual supply voltages and dual threshold voltages. Domino logic is a clocked logic family, which means that every single logic gate has a clock signal present. When the clock signal turns low, node N0 goes high, causing the output of the gate to go low. This represents the only mechanism for the gate output to go low once it has been driven high. The operating period of the cell when its input clock and output are low is called the precharge phase or cycle. The next phase, when the clock is high, is called the evaluate phase or cycle. -
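The two clock phases described in this excerpt can be sketched as a tiny behavioral model. It is an illustration only, not the circuit from the paper: the class name `DominoGate` and the `pull_down` callback are hypothetical, the internal node is named `n0` after node N0 in the text, and the output is assumed to be a static inverter driven by that node.

```python
class DominoGate:
    """Behavioral model of a single domino gate (dynamic node + inverter)."""

    def __init__(self, pull_down):
        # pull_down(*inputs) is truthy when the pull-down network conducts.
        self.pull_down = pull_down
        self.n0 = 1  # dynamic node, assumed to start precharged

    def step(self, clk, *inputs):
        if clk == 0:
            # Clock low: the dynamic node is pulled high, so the output goes low.
            self.n0 = 1
        elif self.pull_down(*inputs):
            # Clock high: the node discharges if the network conducts
            # and then stays low until the clock goes low again.
            self.n0 = 0
        return 1 - self.n0  # output of the inverter


# Usage: a two-input AND gate in domino style.
gate = DominoGate(lambda a, b: a and b)
gate.step(0, 1, 1)  # clock low: output is 0
gate.step(1, 1, 1)  # clock high, both inputs high: output rises to 1
gate.step(1, 0, 0)  # still clock high: output stays 1 until the clock goes low
```

The last call shows the property the excerpt stresses: once the output has been driven high, only a clock-low phase can bring it low again.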

Advanced MOSFET Designs and Implications for SRAM Scaling

Advanced MOSFET Designs and Implications for SRAM Scaling, by Changhwan Shin. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Engineering - Electrical Engineering and Computer Sciences in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor Tsu-Jae King Liu, Chair; Professor Borivoje Nikolić; Professor Eugene E. Haller. Spring 2011. Copyright © 2011 by Changhwan Shin.

Abstract: Continued planar bulk MOSFET scaling is becoming increasingly difficult due to increased random variation in transistor performance with decreasing gate length, and thereby scaling of SRAM using minimum-size transistors is further challenging. This dissertation will discuss various advanced MOSFET designs and their benefits for extending density and voltage scaling of static memory (SRAM) arrays. Using three-dimensional (3-D) process and design simulations, transistor designs are optimized. Then, using an analytical compact model calibrated to the simulated transistor current-vs.-voltage characteristics, the performance and yield of six-transistor (6-T) SRAM cells are estimated. For a given cell area, fully depleted silicon-on-insulator (FD-SOI) MOSFET technology is projected to provide for significantly improved yield across a wide range of operating voltages, as compared with conventional planar bulk CMOS technology. Quasi-Planar (QP) bulk silicon MOSFETs are a lower-cost alternative and also can provide for improved SRAM yield. A more printable "notchless" QP bulk SRAM cell layout is proposed to reduce lithographic variations, and is projected to achieve six-sigma yield (required for terabit-scale SRAM arrays) with a minimum operating voltage below 1 Volt. -

iCE40 UltraPlus Family Data Sheet

iCE40 UltraPlus™ Family Data Sheet. FPGA-DS-02008, Version 1.4, August 2017.

Copyright Notice: Copyright © 2017 Lattice Semiconductor Corporation. All rights reserved. The contents of these materials contain proprietary and confidential information (including trade secrets, copyright, and other Intellectual Property interests) of Lattice Semiconductor Corporation and/or its affiliates. All rights are reserved. You are permitted to use this document and any information contained therein expressly and only for bona fide non-commercial evaluation of products and/or services from Lattice Semiconductor Corporation or its affiliates; and only in connection with your bona fide consideration of purchase or license of products or services from Lattice Semiconductor Corporation or its affiliates, and only in accordance with the terms and conditions stipulated. Contents (in whole or in part) may not be reproduced, downloaded, disseminated, published, or transferred in any form or by any means, except with the prior written permission of Lattice Semiconductor Corporation and/or its affiliates. Copyright infringement is a violation of federal law subject to criminal and civil penalties. You have no right to copy, modify, create derivative works of, transfer, sublicense, publicly display, distribute or otherwise make these materials available, in whole or in part, to any third party. You are not permitted to reverse engineer, disassemble, or decompile any device or object code provided herewith. Lattice Semiconductor Corporation reserves the right to revoke these permissions and require the destruction or return of any and all Lattice Semiconductor Corporation proprietary materials and/or data.

Patents: The subject matter described herein may contain one or more inventions claimed in patents or patents pending owned by Lattice Semiconductor Corporation and/or its affiliates. -

Architecture Description and Packing for Logic Blocks with Hierarchy, Modes and Complex Interconnect

Architecture Description and Packing for Logic Blocks with Hierarchy, Modes and Complex Interconnect. Jason Luu, Jason Anderson, and Jonathan Rose. The Edward S. Rogers Sr. Department of Electrical and Computer Engineering, University of Toronto, Toronto, ON, Canada. jluu|janders|[email protected]

ABSTRACT: The development of future FPGA fabrics with more sophisticated and complex logic blocks requires a new CAD flow that permits the expression of that complexity and the ability to synthesize to it. In this paper, we present a new logic block description language that can depict complex intra-block interconnect, hierarchy and modes of operation. These features are necessary to support modern and future FPGA complex soft logic blocks, memory and hard blocks. The key part of the CAD flow associated with this complexity is the packer, which takes the logical atomic pieces of the complex blocks and groups them into whole physical entities. -

[Figure: logic block schematic with LUT inputs (A6:A1, W6:W1), outputs O5/O6, flip-flop/latch elements (FF/LAT), carry output (COUT) and control signals (CE, CK, SR, WEN)]

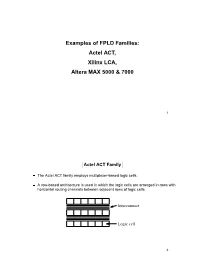

Examples of FPLD Families: Actel ACT, Xilinx LCA, Altera MAX 5000 & 7000

1 Actel ACT Family

- The Actel ACT family employs multiplexer-based logic cells.
- A row-based architecture is used in which the logic cells are arranged in rows with horizontal routing channels between adjacent rows of logic cells.

[Figure: rows of logic cells separated by interconnect channels]

2 ACT 1 Logic Modules

- ACT 1 FPGAs use a single type of logic module.

[Figure: (a) An Actel FPGA. (b) An ACT 1 logic module. (c) An implementation of an ACT 1 logic module using pass transistors. (d) An example of function implementation by an ACT 1 logic module: F=(A·B)+(B'·C)+D.]

3 ACT 2 and ACT 3 Logic Modules

- Both ACT 2 and ACT 3 FPGAs use two types of logic module.

[Figure: C-Module, S-Module (ACT 2), S-Module (ACT 3), and the SE (sequential element) built from master and slave latches. (a) The C-module used by both ACT 2 and ACT 3 FPGAs. -
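The multiplexer-based ACT 1 logic module from slide 2 can be modeled in a few lines to check the example function F=(A·B)+(B'·C)+D. This is a sketch under assumptions: the module is taken to be three 2:1 multiplexers with the final select formed as S0 OR S1, as ACT 1 is commonly described, and the input wiring used for F is one plausible reading of the damaged figure, not a confirmed transcription. The function names are hypothetical.

```python
from itertools import product


def mux2(sel, d0, d1):
    """2:1 multiplexer: returns d1 when sel is 1, otherwise d0."""
    return d1 if sel else d0


def act1_module(a0, a1, sa, b0, b1, sb, s0, s1):
    """Assumed ACT 1 structure: two input muxes feeding a third mux."""
    f1 = mux2(sa, a0, a1)
    f2 = mux2(sb, b0, b1)
    return mux2(s0 | s1, f1, f2)  # final select is S0 OR S1


def f_example(a, b, c, d):
    """F = (A·B) + (B'·C) + D, using an assumed wiring of the module."""
    # Shannon expansion on B: F = B'·(C + D) + B·(A + D).
    return act1_module(a0=d, a1=1, sa=c, b0=d, b1=1, sb=a, s0=b, s1=0)


# Exhaustive check over all 16 input combinations.
for a, b, c, d in product((0, 1), repeat=4):
    assert f_example(a, b, c, d) == (a & b) | ((1 - b) & c) | d
```

Tying data inputs to constants and signals to selects is what lets a single module cover many different functions.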

(12) United States Patent (10) Patent No.: US 7,489,538 B2 Maki et al.

(12) United States Patent, Maki et al.
(10) Patent No.: US 7,489,538 B2
(45) Date of Patent: Feb. 10, 2009
(54) RADIATION TOLERANT COMBINATIONAL LOGIC CELL
(75) Inventors: Gary R. Maki, Post Falls, ID (US); Jody W. Gambles, Post Falls, ID (US); Sterling Whitaker, Albuquerque, NM (US)
(73) Assignee: University of Idaho, Moscow, ID (US)
(*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 263 days.
(21) Appl. No.: 11/527,375
(22) Filed: Sep. 25, 2006
(65) Prior Publication Data: US 2007/0109865 A1, May 17, 2007
Related U.S. Application Data: (60) Provisional application No. 60/736,979, filed on Nov. […]

References cited: 5,406,513 A * 4/1995 Canafis et al., 365/181 (Continued)

OTHER PUBLICATIONS: "Ionizing Radiation Effects in MOS Devices and Circuits", edited by T.P. Ma, Department of Electrical Engineering, Yale University, New Haven, Connecticut, and Paul V. Dressendorfer, Sandia National Laboratories, Albuquerque, NM, A Wiley-Interscience Publication, John Wiley & Sons, pp. 484-589. (Continued)

Primary Examiner: Vu A Le
(74) Attorney, Agent, or Firm: Haverstock & Owens LLP

(57) ABSTRACT: A system has a reduced sensitivity to Single Event Upset and/or Single Event Transient(s) compared to traditional logic devices. In a particular embodiment, the system includes an input, a logic block, a bias stage, a state machine, and an output. The logic block is coupled to the input. The logic block is for implementing a logic function, receiving a […]

eFPGAs: Architectural Explorations, System Integration & a Visionary Industrial Survey of Programmable Technologies, by Syed Zahid Ahmed

To cite this version: Syed Zahid Ahmed. eFPGAs: Architectural Explorations, System Integration & a Visionary Industrial Survey of Programmable Technologies. Micro and nanotechnologies/Microelectronics. Université Montpellier II - Sciences et Techniques du Languedoc, 2011. English. tel-00624418

HAL Id: tel-00624418, https://tel.archives-ouvertes.fr/tel-00624418. Submitted on 16 Sep 2011.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Université Montpellier 2 (UM2), École Doctorale I2S, LIRMM (Laboratoire d'Informatique, de Robotique et de Microélectronique de Montpellier). Domain: Microelectronics. PhD thesis report for partial fulfillment of the requirements of the Doctorate degree of UM2. Thesis conducted in the French Industrial PhD (CIFRE) framework between Menta and the LIRMM lab (Dec. 2007 – Feb. 2011) in Montpellier, FRANCE.

“eFPGAs: Architectural Explorations, System Integration & a Visionary Industrial Survey of Programmable Technologies” by Syed Zahid AHMED. Presented and defended publicly on 22 June 2011.

Jury:
Mr. Guy GOGNIAT, Prof. at STICC/UBS (Lorient, FRANCE), President
Mr. Habib MEHREZ, Prof. at LIP6/UPMC (Paris, FRANCE), Reviewer
Mr.