POWER4 System Microarchitecture

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Wind Rose Data Comes in the Form of >200,000 Wind Rose Images

Making Wind Speed and Direction Maps. Rich Stromberg, Alaska Energy Authority, 907-771-3053. 6/30/2011. Wind rose data comes in the form of >200,000 wind rose images across Alaska. Wind rose data is quantified in very large Excel™ spreadsheets for each region of the state. • Fields: X, Y, X_1, Y_1, FILE, FREQ1 to FREQ16, SPEED1 to SPEED16, POWER1 to POWER16, WEIBC1 to WEIBC16, WEIBK1 to WEIBK16. Data set is thinned down to wind power density. • Fields: X, Y, POWER1 to POWER16. • POWER1 is the wind power density coming from the north (0 degrees). POWER2 is wind power from 22.5 deg., … POWER9 is south (180 deg.), etc. Spreadsheet calculations: X Y POWER1 to POWER16 Max Wind Dir Prim 2nd Wind Dir Sec; -132.7365 54.4833 0.643 0.767 1.911 4.083 …

The 32-Bit PA-RISC Run-Time Architecture Document, V. 1.0 for HP

The 32-bit PA-RISC Run-time Architecture Document, HP-UX 11.0 Version 1.0. (c) Copyright 1997 HEWLETT-PACKARD COMPANY. The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company. CSO/STG/STD/CLO Hewlett-Packard Company 11000 Wolfe Road Cupertino, California 95014 By The Run-time Architecture Team. CHAPTER 1 Introduction: 1.1 Target Audiences; 1.2 Overview of the PA-RISC Runtime Architecture Document. CHAPTER 2 Common Coding Conventions: 2.1 Memory Model; 2.1.1 Text Segment; 2.1.2 Initialized and Uninitialized Data Segments; 2.1.3 Shared Memory; 2.1.4 Subspaces; 2.2 Register Usage; 2.2.1 Data Pointer (GR 27); 2.2.2 Linkage Table Register (GR 19); 2.2.3 Stack Pointer (GR 30); 2.2.4 Space…

Power4 Focuses on Memory Bandwidth IBM Confronts IA-64, Says ISA Not Important

MICROPROCESSOR REPORT: THE INSIDERS’ GUIDE TO MICROPROCESSOR HARDWARE. VOLUME 13, NUMBER 13, OCTOBER 6, 1999. Power4 Focuses on Memory Bandwidth: IBM Confronts IA-64, Says ISA Not Important, by Keith Diefendorff. Not content to wrap sheet metal around Intel microprocessors for its future server business, IBM is developing a processor it hopes will fend off the IA-64 juggernaut. Speaking at this week’s Microprocessor Forum, chief architect Jim Kahle described IBM’s monster 170-million-transistor Power4 chip, which boasts two 64-bit 1-GHz five-issue superscalar cores, a triple-level cache hierarchy, a 10-GByte/s main-memory interface, and a 45-GByte/s multiprocessor interface, as Figure 1 shows. Kahle said that IBM will see first silicon on Power4 in 1Q00, and systems will begin shipping in 2H01. No Holds Barred … company has decided to make a last-gasp effort to retain control of its high-end server silicon by throwing its considerable financial and technical weight behind Power4. After investing this much effort in Power4, if IBM fails to deliver a server processor with compelling advantages over the best IA-64 processors, it will be left with little alternative but to capitulate. If Power4 fails, it will also be a clear indication to Sun, Compaq, and others that are bucking IA-64, that the days of proprietary CPUs are numbered. But IBM intends to resist mightily, and, based on what the company has disclosed about Power4 so far, it may just succeed. Looking for Parallelism in All the Right Places: With Power4, IBM is targeting the high-reliability servers that will power future e-businesses.

IBM PowerPC 970 (a.k.a. G5)

IBM PowerPC 970 (a.k.a. G5) Ref 1 David Benham and Yu-Chung Chen UIC – Department of Computer Science CS 466 PPC 970FX overview ● 64-bit RISC ● 58 million transistors ● 512 KB of L2 cache and 96 KB of L1 cache ● 90 nm process with a die size of 65 sq. mm ● Native 32-bit compatibility ● Maximum clock speed of 2.7 GHz ● SIMD instruction set (AltiVec) ● 42 watts @ 1.8 GHz (1.3 volts) ● Peak data bandwidth of 6.4 GB per second A picture is worth 2^10 words (approx.) Ref 2 A little history ● PowerPC processor line is a product of the AIM alliance formed in 1991 (Apple, IBM, and Motorola) ● PPC 601 (G1) - 1993 ● PPC 603 (G2) - 1995 ● PPC 750 (G3) - 1997 ● PPC 7400 (G4) - 1999 ● PPC 970 (G5) - 2002 ● AIM alliance dissolved in 2005 Processor Ref 3 Core details ● 16 (int) to 25 (vector) stage pipeline ● Large number of 'in flight' instructions (various stages of execution) - theoretical limit of 215 instructions ● 512 KB L2 cache ● 96 KB L1 cache – 64 KB I-Cache – 32 KB D-Cache Core details continued ● 10 execution units – 2 load/store operations – 2 fixed-point register-register operations – 2 floating-point operations – 1 branch operation – 1 condition register operation – 1 vector permute operation – 1 vector ALU operation ● 32 64-bit general-purpose registers, 32 64-bit floating-point registers, 32 128-bit vector registers Pipeline Ref 4 Benchmarks ● SPEC2000 ● BLAST – Bioinformatics ● Amber / jac - Structure biology ● CFD lab code SPEC CPU2000 ● IBM eServer BladeCenter JS20 ● PPC 970 2.2 GHz ● SPECint2000 ● Base: 986 Peak: 1040 ● SPECfp2000 ● Base: 1178 Peak: 1241 ● Dell PowerEdge 1750 Xeon 3.06 GHz ● SPECint2000 ● Base: 1031 Peak: 1067 Apple’s SPEC Results*2 ● SPECfp2000 ● Base: 1030 Peak: 1044 BLAST Ref. …

Introduction to the Cell Multiprocessor

Introduction to the Cell multiprocessor. J. A. Kahle, M. N. Day, H. P. Hofstee, C. R. Johns, T. R. Maeurer, D. Shippy. This paper provides an introductory overview of the Cell multiprocessor. Cell represents a revolutionary extension of conventional microprocessor architecture and organization. The paper discusses the history of the project, the program objectives and challenges, the design concept, the architecture and programming models, and the implementation. Introduction: History of the project. Initial discussion on the collaborative effort to develop Cell began with support from CEOs from the Sony and IBM companies: Sony as a content provider and IBM as a leading-edge technology and server company. Collaboration was initiated among SCEI (Sony Computer Entertainment Incorporated), IBM, for microprocessor development, and Toshiba, as a development and high-volume manufacturing technology partner. This led to high-level architectural discussions among the three companies during the summer of 2000. During a critical meeting in Tokyo, it was determined that traditional architectural organizations would not deliver the computational power that SCEI sought … processors in order to provide the required computational density and power efficiency. After several months of architectural discussion and contract negotiations, the STI (SCEI–Toshiba–IBM) Design Center was formally opened in Austin, Texas, on March 9, 2001. The STI Design Center represented a joint investment in design of about $400,000,000. Separate joint collaborations were also set in place for process technology development. A number of key elements were employed to drive the success of the Cell multiprocessor design. First, a holistic design approach was used, encompassing processor architecture, hardware implementation, system structures, and software programming models.

IBM Power Roadmap

POWER6™ Processor and Systems. Jim McInnes, Compiler Optimization, IBM Canada Toronto Software Lab. © 2007 IBM Corporation. All statements regarding IBM future directions and intent are subject to change or withdrawal without notice and represent goals and objectives only. Role • I am a Technical leader in the Compiler Optimization Team • Focal point to the hardware development team • Member of the Power ISA Architecture Board • For each new microarchitecture I help direct the design toward helpful features, and design and deliver specific compiler optimizations to enable hardware exploitation. POWER5 Chip Overview: High frequency dual-core chip • 8 execution units: 2LS, 2FP, 2FX, 1BR, 1CR • 1.9MB on-chip shared L2 – point of coherency, 3 slices • On-chip L3 directory and controller • On-chip memory controller. Technology & Chip Stats • 130nm lithography, Cu, SOI • 276M transistors, 389 mm2 • I/Os: 2313 signal, 3057 Power/Gnd. POWER6 Chip Overview: Ultra-high frequency dual-core chip • 8 execution units: 2LS, 2FP, 2FX, 1BR, 1VMX • 2 x 4MB on-chip L2 – point of coherency, 4 quads • On-chip L3 directory and controller • Two on-chip memory controllers. Technology & Chip stats …

Power Architecture® ISA 2.06 Stride N Prefetch Engines to Boost Application's Performance

Power Architecture® ISA 2.06 Stride N prefetch Engines to boost Application's performance. History of IBM POWER architecture: POWER stands for Performance Optimization with Enhanced RISC. Power architecture is synonymous with performance. Introduced by IBM in 1991, POWER1 was a superscalar design that implemented register renaming and out-of-order execution. In POWER2, an additional FP unit and caches were added to boost performance. In 1996 IBM released the successor of the POWER2, called P2SC (POWER2 Super Chip), which is a single-chip implementation of POWER2. P2SC was used to power the 30-node IBM Deep Blue supercomputer that beat world chess champion Garry Kasparov in 1997. POWER3, the first 64-bit SMP, featured a data prefetch engine, non-blocking interleaved data cache, dual floating-point execution units, and many other goodies. POWER3 also unified the PowerPC and POWER instruction sets and was used in IBM's RS/6000 servers. The POWER3-II reimplemented POWER3 using copper interconnects, delivering double the performance at about the same price. POWER4 was the first gigahertz dual-core processor, launched in 2001, and was awarded the MicroProcessor Technology Award in recognition of its innovations and technology exploitation. POWER5 came in with the simultaneous multithreading (SMT) feature to further increase application performance. In 2004, IBM with 15 other companies founded Power.org. Power.org released the Power ISA v2.03 in September 2006, Power ISA v2.04 in June 2007 and Power ISA v2.05 with many advanced features such as VMX, virtualization, variable length encoding, hypervisor functionality, logical partitioning, virtual page handling, Decimal Floating point and so on, which further boosted the architecture's leadership in the marketplace; POWER5+, Cell, POWER6, PA6T, and Titan are among the compliant cores.

POWER8: The First OpenPOWER Processor

POWER8: The first OpenPOWER processor. Dr. Michael Gschwind, Senior Technical Staff Member & Senior Manager, IBM Power Systems. Join the conversation at #OpenPOWERSummit. OpenPOWER is about choice in large-scale data centers. The choice to differentiate • build workload optimized solutions • use best-of-breed components from an open ecosystem. The choice to innovate • collaborative innovation in open ecosystem • with open interfaces. The choice to grow • delivered system performance • new capabilities instead of technology scaling. Why Power and Why Now? • Power is optimized for server workloads • Power8 was optimized to simplify application porting • Power8 includes CAPI, the Coherent Accelerator Processor Interface • Building on a long history of IBM workload acceleration. POWER8 Processor. Cores • 12 cores (SMT8), 96 threads per chip • 2X internal data flows/queues • 64K data cache, 32K instruction cache. Caches • 512 KB SRAM L2 / core • 96 MB eDRAM shared L3 • Up to 128 MB eDRAM L4 (off-chip). Accelerators • Crypto & memory expansion • Transactional Memory • VMM assist • Data Move / VM Mobility • Coherent Accelerator Processor Interface (CAPI). POWER8 Core • Up to eight hardware threads per core (SMT8) • 8 dispatch • 10 issue • 16 execution pipes: 2 FXU, 2 LSU, 2 LU, 4 FPU, 2 VMX, 1 Crypto, 1 DFU, 1 CR, 1 BR • Larger issue queues (4 x 16-entry) • Larger global completion, Load/Store reorder queue • Improved branch prediction • Improved unaligned storage access • Improved data prefetch. POWER8 Architecture • High-performance LE support – Foundation for a new ecosystem • Organic application growth – Instruction Fusion. [Chart: Power evolution] …

Processor-103

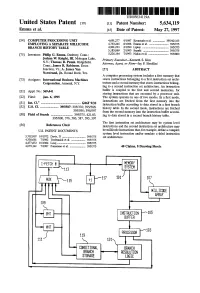

US005634119A. United States Patent [19], Emma et al. [11] Patent Number: 5,634,119. [45] Date of Patent: May 27, 1997. [54] COMPUTER PROCESSING UNIT EMPLOYING A SEPARATE MILLICODE BRANCH HISTORY TABLE. [75] Inventors: Philip G. Emma, Danbury, Conn.; Joshua W. Knight, III, Mohegan Lake, N.Y.; Thomas R. Puzak, Ridgefield, Conn.; James R. Robinson, Essex Junction, Vt.; A. James Van Norstrand, Jr., Round Rock, Tex. [73] Assignee: International Business Machines Corporation, Armonk, N.Y. [21] Appl. No.: 369,441. [22] Filed: Jan. 6, 1995. [51] Int. Cl.: G06F 9/32. [52] U.S. Cl.: 395/587; 395/376; 395/586; 395/595; 395/597. [58] Field of Search: … 4,691,277 9/1987 Kronstadt et al. 395/421.03; 4,763,245 8/1988 Emma et al. 395/375; 4,901,233 2/1990 Liptay 395/375; 5,185,869 2/1993 Suzuki 395/375; 5,226,164 7/1993 Nadas et al. 395/800. Primary Examiner: Kenneth S. Kim. Attorney, Agent, or Firm: Jay P. Sbrollini. [57] ABSTRACT: A computer processing system includes a first memory that stores instructions belonging to a first instruction set architecture and a second memory that stores instructions belonging to a second instruction set architecture. An instruction buffer is coupled to the first and second memories, for storing instructions that are executed by a processor unit. The system operates in one of two modes. In a first mode, instructions are fetched from the first memory into the instruction buffer according to data stored in a first branch history table. In the second mode, instructions are fetched from the second memory into the instruction buffer accord…

The 32-Bit PA-RISC Run-Time Architecture Document

The 32-bit PA-RISC Run-time Architecture Document, HP-UX 10.20 Version 3.0. (c) Copyright 1985-1997 HEWLETT-PACKARD COMPANY. The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company. CSO/STG/STD/CLO Hewlett-Packard Company 11000 Wolfe Road Cupertino, California 95014 By The Run-time Architecture Team. CHAPTER 1 Introduction. This document describes the runtime architecture for PA-RISC systems running either the HP-UX or the MPE/iX operating system. Other operating systems running on PA-RISC may also use this runtime architecture or a variant of it. The runtime architecture defines all the conventions and formats necessary to compile, link, and execute a program on one of these operating systems. Its purpose is to ensure that object modules produced by many different compilers can be linked together into a single application, and to specify the interfaces between compilers and linker, and between linker and operating system.

Processor Design: System-On-Chip Computing For

Processor Design: System-on-Chip Computing for ASICs and FPGAs. Edited by Jari Nurmi, Tampere University of Technology, Finland. A C.I.P. Catalogue record for this book is available from the Library of Congress. ISBN 978-1-4020-5529-4 (HB) ISBN 978-1-4020-5530-0 (e-book) Published by Springer, P.O. Box 17, 3300 AA Dordrecht, The Netherlands. www.springer.com Printed on acid-free paper. All Rights Reserved © 2007 Springer. No part of this work may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, microfilming, recording or otherwise, without written permission from the Publisher, with the exception of any material supplied specifically for the purpose of being entered and executed on a computer system, for exclusive use by the purchaser of the work. To Pirjo, Lauri, Eero, and Santeri. Preface. When I started my computing career by programming a PDP-11 computer as a freshman in the university in early 1980s, I could not have dreamed that one day I’d be able to design a processor. At that time, the freshmen were only allowed to use PDP. Next year I was given the permission to use the famous brand-new VAX-780 computer. Also, my new roommate at the dorm had got one of the first personal computers, a Commodore-64 which we started to explore together. Again, I could not have imagined that hundreds of times the processing power will be available in an everyday embedded device just a quarter of century later.

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture. Andrew Waterman, Electrical Engineering and Computer Sciences, University of California at Berkeley. Technical Report No. UCB/EECS-2016-1 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html January 3, 2016. Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Design of the RISC-V Instruction Set Architecture by Andrew Shell Waterman. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor David Patterson, Chair; Professor Krste Asanović; Associate Professor Per-Olof Persson. Spring 2016. Abstract. The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary.