Brief History of Microprogramming

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


On the Hardware Reduction of Z-Datapath of Vectoring CORDIC

R. Stapenhurst*, K. Maharatna**, J. Mathew*, J.L. Nunez-Yanez* and D. K. Pradhan*
*University of Bristol, Bristol, UK; **University of Southampton, Southampton, UK
[email protected]

Abstract: In this article we present a novel design of a hardware optimal vectoring CORDIC processor. We present a mathematical theory to show that using bipolar binary notation it is possible to eliminate all the arithmetic computations required along the z-datapath. Using this technique it is possible to achieve three and 1.5 times reduction in the number of registers and adder respectively compared to classical CORDIC. Following this, a 16-bit vectoring CORDIC is designed for the application in Synchronizer for IEEE 802.11a standard. The total area and dynamic power consumption of the processor is 0.14 mm² and 700 μW respectively when synthesized in 0.18 μm CMOS library which shows its effectiveness as a low-area low-power processor.

[...] wordlength larger than 18-bits the hardware requirement of it becomes more than the classical CORDIC.

In this particular work we propose a formulation to eliminate all the arithmetic operations along the z-datapath for conventional two-sided vector rotation and thereby reducing the hardware while increasing the accuracy. Also the resulting architecture shows significant hardware saving as the wordlength increases. Although we stick to the 2's complement number system, without loss of generality, this formulation can be adopted easily for redundant arithmetic and higher radix formulation. A 16-bit processor developed following this formulation requires 0.14 mm² area and consumes 700 μW dynamic power when synthesized in 0.18 μm CMOS library.
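For readers unfamiliar with the baseline the abstract compares against, the following is a minimal floating-point sketch of classical vectoring-mode CORDIC. It is not the authors' bipolar-binary formulation, and the function names `cordic_vectoring` and `angle_from_directions` are hypothetical. It only illustrates that the z-datapath accumulates an angle that is fully determined by the sequence of rotation directions, which is the kind of redundancy a z-datapath reduction can exploit.

```python
import math


def cordic_vectoring(x, y, iterations=16):
    """Classical vectoring-mode CORDIC (floating-point sketch, assumes x > 0).

    Rotates (x, y) onto the x-axis with shift-and-add style micro-rotations.
    Returns (magnitude, angle, directions), where directions holds the
    +1/-1 choice made at each iteration.
    """
    # Elementary angles atan(2^-i) and the accumulated CORDIC gain.
    angles = [math.atan(2.0 ** -i) for i in range(iterations)]
    gain = 1.0
    for i in range(iterations):
        gain *= math.sqrt(1.0 + 2.0 ** (-2 * i))

    z = 0.0
    directions = []
    for i in range(iterations):
        d = 1 if y < 0 else -1  # pick the direction that drives y toward zero
        x, y = x - d * y * 2.0 ** -i, y + d * x * 2.0 ** -i
        z -= d * angles[i]      # classical z-datapath: one add/sub per step
        directions.append(d)
    return x / gain, z, directions


def angle_from_directions(directions):
    """Rebuild the angle from the direction sequence alone (no running z)."""
    return -sum(d * math.atan(2.0 ** -i) for i, d in enumerate(directions))


if __name__ == "__main__":
    magnitude, angle, dirs = cordic_vectoring(3.0, 4.0)
    print(magnitude, angle, angle_from_directions(dirs))
```

In a fixed-point hardware design the multiplications by `2.0 ** -i` become shifts, and the gain correction is usually folded into a constant; both are simplified here for readability.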

18-447 Computer Architecture Lecture 6: Multi-Cycle and Microprogrammed Microarchitectures

Prof. Onur Mutlu, Carnegie Mellon University, Spring 2015, 1/28/2015

Agenda for Today & Next Few Lectures
- Single-cycle Microarchitectures
- Multi-cycle and Microprogrammed Microarchitectures
- Pipelining
- Issues in Pipelining: Control & Data Dependence Handling, State Maintenance and Recovery, …
- Out-of-Order Execution
- Issues in OoO Execution: Load-Store Handling, …

Reminder on Assignments
- Lab 2 due next Friday (Feb 6)
  - Start early!
- HW 1 due today
- HW 2 out
- Remember that all is for your benefit
  - Homeworks, especially so
  - All assignments can take time, but the goal is for you to learn very well

Lab 1 Grades
[Figure: histogram of Lab 1 grades; y-axis: Number of Students]
- Mean: 88.0
- Median: 96.0
- Standard Deviation: 16.9

Extra Credit for Lab Assignment 2
- Complete your normal (single-cycle) implementation first, and get it checked off in lab.
- Then, implement the MIPS core using a microcoded approach similar to what we will discuss in class.
- We are not specifying any particular details of the microcode format or the microarchitecture; you can be creative.
- For the extra credit, the microcoded implementation should execute the same programs that your ordinary implementation does, and you should demo it by the normal lab deadline.
- You will get maximum 4% of course grade
- Document what you have done and demonstrate well

Readings for Today
- P&P, Revised Appendix C
  - Microarchitecture of the LC-3b
  - Appendix A (LC-3b ISA) will be useful in following this
- P&H, Appendix D
  - Mapping Control to Hardware
- Optional
  - Maurice Wilkes, “The Best Way to Design an Automatic Calculating Machine,” Manchester Univ.

The 32-Bit PA-RISC Run-Time Architecture Document, V. 1.0 for HP

The 32-bit PA-RISC Run-time Architecture Document
HP-UX 11.0 Version 1.0
(c) Copyright 1997 HEWLETT-PACKARD COMPANY.

The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company.

CSO/STG/STD/CLO, Hewlett-Packard Company, 11000 Wolfe Road, Cupertino, California 95014
By The Run-time Architecture Team

CHAPTER 1 Introduction
1.1 Target Audiences
1.2 Overview of the PA-RISC Runtime Architecture Document

CHAPTER 2 Common Coding Conventions
2.1 Memory Model
  2.1.1 Text Segment
  2.1.2 Initialized and Uninitialized Data Segments
  2.1.3 Shared Memory
  2.1.4 Subspaces
2.2 Register Usage
  2.2.1 Data Pointer (GR 27)
  2.2.2 Linkage Table Register (GR 19)
  2.2.3 Stack Pointer (GR 30)
  2.2.4 Space …

A 4.7 Million-Transistor CISC Microprocessor

Auriga2: A 4.7 Million-Transistor CISC Microprocessor
J.P. Tual, M. Thill, C. Bernard, H.N. Nguyen, F. Mottini, M. Moreau, P. Vallet
Hardware Development Paris & Angers, BULL S.A., 78340 Les Clayes-sous-Bois, FRANCE
Tel: (+33)-1-30-80-7304 Fax: (+33)-1-30-80-7163 Mail: [email protected]

Abstract: With the introduction of the high range version of the DPS7000 mainframe family, Bull is providing a processor which integrates the DPS7000 CPU and first level of cache on one VLSI chip containing 4.7M transistors and using a 0.5 µm, 3Mlayers CMOS technology. This enhanced CPU has been designed to provide a high integration, high performance and low cost systems. Up to 24 such processors can be integrated in a single system, enabling performance levels in the range of 850 TPC-A (Oracle) with about 12 000 simultaneously active connections. The design methodology involved massive use of formal verification and symbolic layout techniques, enabling to reach first pass right silicon on several foundries.

[...] parallel multi-processor architecture. It is used in a family of systems able to handle up to 24 such microprocessors, capable to support 10 000 simultaneously connected users. For the development of this complex circuit, a system level design methodology has been put in place, putting high emphasis on high-level verification issues. A lot of home-made CAD tools were developed, to meet the stringent performance/area constraints. In particular, an integrated Logic Synthesis and Formal Verification environment tool has been developed, to deal with complex circuitry issues and to enable the designer to shorten the iteration loop between logical design and physical implementation of the …

A Developer's Guide to the POWER Architecture

POWER programming by the book
Brett Olsson, Processor architect, IBM
Anthony Marsala, Software engineer, IBM
http://www.ibm.com/developerworks/linux/library/l-powarch/

Summary: POWER® processors are found in everything from supercomputers to game consoles and from servers to cell phones -- and they all share a common architecture. This introduction to the PowerPC application-level programming model will give you an overview of the instruction set, important registers, and other details necessary for developing reliable, high performing POWER applications and maintaining code compatibility among processors.

Date: 30 Mar 2004
Level: Intermediate

The POWER architecture and the application-level programming model are common across all branches of the POWER architecture family tree. For detailed information, see the product user's manuals available in the IBM® POWER Web site technical library (see Resources for a link).

The POWER architecture is a Reduced Instruction Set Computer (RISC) architecture, with over two hundred defined instructions. POWER is RISC in that most instructions execute in a single cycle and typically perform a single operation (such as loading storage to a register, or storing a register to memory).

The POWER architecture is broken up into three levels, or "books." By segmenting the architecture in this way, code compatibility can be maintained across implementations while leaving room for implementations to choose levels of complexity for price/performance trade-offs. The levels are: Book I. …

Embedded Multi-Core Processing for Networking

12 Embedded Multi-Core Processing for Networking
Theofanis Orphanoudakis, University of Peloponnese, Tripoli, Greece, [email protected]
Stylianos Perissakis, Intracom Telecom, Athens, Greece, [email protected]

CONTENTS
12.1 Introduction
12.2 Overview of Proposed NPU Architectures
  12.2.1 Multi-Core Embedded Systems for Multi-Service Broadband Access and Multimedia Home Networks
  12.2.2 SoC Integration of Network Components and Examples of Commercial Access NPUs
  12.2.3 NPU Architectures for Core Network Nodes and High-Speed Networking and Switching
12.3 Programmable Packet Processing Engines
  12.3.1 Parallelism
  12.3.2 Multi-Threading Support
  12.3.3 Specialized Instruction Set Architectures
12.4 Address Lookup and Packet Classification Engines
  12.4.1 Classification Techniques
    12.4.1.1 Trie-based Algorithms
    12.4.1.2 Hierarchical Intelligent Cuttings (HiCuts)
  12.4.2 Case Studies
12.5 Packet Buffering and Queue Management Engines
  12.5.1 Performance Issues
    12.5.1.1 External DRAM Memory Bottlenecks
    12.5.1.2 Evaluation of Queue Management Functions: INTEL IXP1200 Case
  12.5.2 Design of Specialized Core for Implementation of Queue Management in Hardware
    12.5.2.1 Optimization Techniques
    12.5.2.2 Performance Evaluation of Hardware Queue Management Engine
12.6 Scheduling Engines
  12.6.1 Data Structures in Scheduling Architectures
  12.6.2 Task Scheduling
    12.6.2.1 Load Balancing
  12.6.3 Traffic Scheduling

Digital Computer Newsletter, Vol. 9, No. 2 (April 1957)

DIGITAL COMPUTER NEWSLETTER
Office of Naval Research, Mathematical Sciences Division
Vol. 9, No. 2, April 1957
Editors: Gordon D. Goldstein, Albrecht J. Neumann

TABLE OF CONTENTS

COMPUTERS, U. S. A.
1. Air Force Armament Center, ARDC, Eglin AFB, Florida
2. Air Force Cambridge Research Center, Bedford, Mass.
3. Autonetics, RECOMP, Downey, Calif.
4. Corps of Engineers, U. S. Army
5. IBM 709, New York, New York
6. Lincoln Laboratory TX-2, M.I.T., Lexington, Mass.
7. Litton Industries 20 and 40 DDA, Beverly Hills, Calif.
8. Naval Air Test Center, Naval Air Station, Patuxent River, Maryland
9. National Cash Register Co. NCR 304, Dayton, Ohio
10. Naval Air Missile Test Center, RAYDAC, Point Mugu, Calif.
11. New York Naval Shipyard, Brooklyn, New York
12. Philco, TRANSAC, Philadelphia, Penna.
13. Western Reserve Univ., Cleveland, Ohio

COMPUTING CENTERS
1. Univ. of California, Radiation Lab., Livermore, Calif.
2. Univ. of California, SWAC, Los Angeles, Calif.
3. Electronic Associates, Inc., Princeton Computation Center, Princeton, New Jersey
4. Franklin Institute Laboratories, Computing Center, Philadelphia, Penna.
5. George Washington Univ., Logistics Research Project, Washington, D. C.
6. M.I.T., WHIRLWIND I, Cambridge, Mass.
7. National Bureau of Standards, Applied Mathematics Div., Washington, D. C.
8. Naval Proving Ground, Naval Ordnance Computation Center, Dahlgren, Virginia
9. Ramo Wooldridge Corp., Digital Computing Center, Los Angeles, Calif.

TCD-SCSS-T.20121208.032.Pdf

AccessionIndex: TCD-SCSS-T.20121208.032
Accession Date: 8-Dec-2012
Accession By: Prof. J.G. Byrne
Object name: Burroughs 1714
Vintage: c.1972
Synopsis: Commercial zero-instruction-set computer used by the Dept. Computer Science from 1973-1979. Just two prototyping boards survive.

Description: The Burroughs 1714 was one of their B1700 family, introduced in 1972 to compete with IBM's System/3. The original research for the B1700 series, initially codenamed the Proper Language Processor or Program Language Processor (PLP), was done at the Burroughs Pasadena plant. The family were known as the Burroughs Small Systems, as distinct from the Burroughs Medium Systems (B2000, etc) and the Burroughs Large Systems (B5000, etc). All the Burroughs machines had high-level language architectures. The large were ALGOL machines, the medium COBOL machines, but the small were universal machines.

The principal designer of the B1700 family was Wayne T. Wilner. He designed the architecture as a zero-instruction-set computer, an attempt to bridge the inefficient semantic gap between the ideal solution to a particular programming problem and the real physical hardware. The B1700 architecture executed idealized virtual machines for any language from virtual memory. It achieved this feat by microprogramming, see the microinstruction set further below. The Burroughs MCP (Master Control Program) would schedule a particular job to run, then preload the interpreter for whatever language was required into a writeable control store, allowing the machine to emulate the desired virtual machine. The hardware was optimised for this. It had bit-addressable memory, a variable-width ALU, could OR in data from a register into the instruction register (for very efficient instruction parsing), and the output of the ALU was directly addressable as X+Y or X-Y read-only registers.
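The idea of preloading a language-specific interpreter into a writeable control store can be sketched with a toy model. Everything below is hypothetical and chosen for illustration: the class `ToyMachine`, the micro-operations, and the two instruction sets are assumptions, and none of it reflects the actual B1700 microinstruction set or MCP behaviour. The sketch only shows that the same hardware can present a different instruction set after its control store is rewritten.

```python
# Toy model: each "machine instruction" is defined entirely by a microroutine
# held in a rewritable control store (all names here are hypothetical).

def micro_push(machine, operand):
    machine.stack.append(operand)

def micro_push_one(machine, _operand):
    machine.stack.append(1)

def micro_add(machine, _operand):
    right = machine.stack.pop()
    left = machine.stack.pop()
    machine.stack.append(left + right)


class ToyMachine:
    def __init__(self):
        self.control_store = {}  # opcode name -> list of micro-operations
        self.stack = []

    def load_interpreter(self, interpreter):
        """Overwrite the control store, as a scheduler might before a job runs."""
        self.control_store = dict(interpreter)
        self.stack = []

    def run(self, program):
        """Execute (opcode, operand) pairs by expanding each into micro-ops."""
        for opcode, operand in program:
            for micro_op in self.control_store[opcode]:
                micro_op(self, operand)
        return self.stack[-1] if self.stack else None


# Two made-up "languages" implemented on the same toy hardware.
STACK_LANGUAGE = {"PUSH": [micro_push], "ADD": [micro_add]}
COUNTER_LANGUAGE = {"LIT": [micro_push], "INC": [micro_push_one, micro_add]}

if __name__ == "__main__":
    machine = ToyMachine()
    machine.load_interpreter(STACK_LANGUAGE)
    print(machine.run([("PUSH", 2), ("PUSH", 3), ("ADD", None)]))
    machine.load_interpreter(COUNTER_LANGUAGE)
    print(machine.run([("LIT", 41), ("INC", None)]))
```

A dictionary stands in for the control store so that swapping interpreters is a single assignment; real microcode would be a table of encoded microinstructions rather than Python functions.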

Microprocessor Design

Microprocessor Design
en.wikibooks.org
March 15, 2015

On the 28th of April 2012 the contents of the English as well as German Wikibooks and Wikipedia projects were licensed under Creative Commons Attribution-ShareAlike 3.0 Unported license. A URI to this license is given in the list of figures on page 211. If this document is a derived work from the contents of one of these projects and the content was still licensed by the project under this license at the time of derivation this document has to be licensed under the same, a similar or a compatible license, as stated in section 4b of the license. The list of contributors is included in chapter Contributors on page 209. The licenses GPL, LGPL and GFDL are included in chapter Licenses on page 217, since this book and/or parts of it may or may not be licensed under one or more of these licenses, and thus require inclusion of these licenses. The licenses of the figures are given in the list of figures on page 211.

This PDF was generated by the LaTeX typesetting software. The LaTeX source code is included as an attachment (source.7z.txt) in this PDF file. To extract the source from the PDF file, you can use the pdfdetach tool included in the poppler suite, or the http://www.pdflabs.com/tools/pdftk-the-pdf-toolkit/ utility. Some PDF viewers may also let you save the attachment to a file. After extracting it from the PDF file you have to rename it to source.7z.

Processor-103

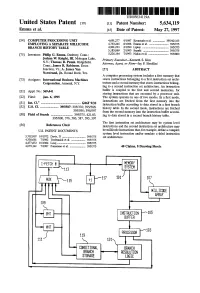

US005634119A
United States Patent, Emma et al.
Patent Number: 5,634,119
Date of Patent: May 27, 1997

[54] COMPUTER PROCESSING UNIT EMPLOYING A SEPARATE MILLICODE BRANCH HISTORY TABLE
[75] Inventors: Philip G. Emma, Danbury, Conn.; Joshua W. Knight, III, Mohegan Lake, N.Y.; Thomas R. Puzak, Ridgefield, Conn.; James R. Robinson, Essex Junction, Vt.; A. James Van Norstrand, Jr., Round Rock, Tex.
[73] Assignee: International Business Machines Corporation, Armonk, N.Y.
[21] Appl. No.: 369,441
[22] Filed: Jan. 6, 1995
[51] Int. Cl.: G06F 9/32
[52] U.S. Cl.: 395/587; 395/376; 395/586; 395/595; 395/597
[58] Field of Search: ...

References cited:
4,691,277  9/1987  Kronstadt et al.  395/421.03
4,763,245  8/1988  Emma et al.  395/375
4,901,233  2/1990  Liptay  395/375
5,185,869  2/1993  Suzuki  395/375
5,226,164  7/1993  Nadas et al.  395/800

Primary Examiner: Kenneth S. Kim
Attorney, Agent, or Firm: Jay P. Sbrollini

[57] ABSTRACT
A computer processing system includes a first memory that stores instructions belonging to a first instruction set architecture and a second memory that stores instructions belonging to a second instruction set architecture. An instruction buffer is coupled to the first and second memories, for storing instructions that are executed by a processor unit. The system operates in one of two modes. In a first mode, instructions are fetched from the first memory into the instruction buffer according to data stored in a first branch history table. In the second mode, instructions are fetched from the second memory into the instruction buffer according …

Hereby the Screen Stands in For, and Thereby Occludes, the Deeper Workings of the Computer Itself

The Random-Access Image: Memory and the History of the Computer Screen
JACOB GABOURY
doi:10.1162/GREY_a_00233

[Figure: John Warnock and an IDI graphical display unit, University of Utah, 1968. Courtesy Salt Lake City Deseret News.]

A memory is a means for displacing in time various events which depend upon the same information.
J. Presper Eckert Jr. [1]

When we speak of graphics, we think of images. Be it the windowed interface of a personal computer, the tactile swipe of icons across a mobile device, or the surreal effects of computer-enhanced film and video games, all are graphics. Understandably, then, computer graphics are most often understood as the images displayed on a computer screen. This pairing of the image and the screen is so natural that we rarely theorize the screen as a medium itself, one with a heterogeneous history that develops in parallel with other visual and computational forms. [2] What then, of the screen? To be sure, the computer screen follows in the tradition of the visual frame that delimits, contains, and produces the image. [3] It is also the skin of the interface that allows us to engage with, augment, and relate to technical things. [4] But the computer screen was also a cathode ray tube (CRT) phosphorescing in response to an electron beam, modified by a grid of randomly accessible memory that stores, maps, and transforms thousands of bits in real time. The screen is not simply an enduring technique or evocative metaphor; it is a hardware object whose transformations have shaped the material conditions of our visual culture.

The 32-Bit PA-RISC Run-Time Architecture Document

The 32-bit PA-RISC Run-time Architecture Document
HP-UX 10.20 Version 3.0
(c) Copyright 1985-1997 HEWLETT-PACKARD COMPANY.

The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company.

CSO/STG/STD/CLO, Hewlett-Packard Company, 11000 Wolfe Road, Cupertino, California 95014
By The Run-time Architecture Team

CHAPTER 1 Introduction
This document describes the runtime architecture for PA-RISC systems running either the HP-UX or the MPE/iX operating system. Other operating systems running on PA-RISC may also use this runtime architecture or a variant of it. The runtime architecture defines all the conventions and formats necessary to compile, link, and execute a program on one of these operating systems. Its purpose is to ensure that object modules produced by many different compilers can be linked together into a single application, and to specify the interfaces between compilers and linker, and between linker and operating system.