An Overview of Exascale Architecture Challenges

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Resolutions to Censure the President: Procedure and History

Resolutions to Censure the President: Procedure and History Updated February 1, 2021 Congressional Research Service https://crsreports.congress.gov R45087 Resolutions to Censure the President: Procedure and History Summary Censure is a reprimand adopted by one or both chambers of Congress against a Member of Congress, President, federal judge, or other government official. While Member censure is a disciplinary measure that is sanctioned by the Constitution (Article 1, Section 5), non-Member censure is not. Rather, it is a formal expression or “sense of” one or both houses of Congress. Censure resolutions targeting non-Members have utilized a range of statements to highlight conduct deemed by the resolutions’ sponsors to be inappropriate or unauthorized. Before the Nixon Administration, such resolutions included variations of the words or phrases unconstitutional, usurpation, reproof, and abuse of power. Beginning in 1972, the most clearly “censorious” resolutions have contained the word censure in the text. Resolutions attempting to censure the President are usually simple resolutions. These resolutions are not privileged for consideration in the House or Senate. They are, instead, considered under the regular parliamentary mechanisms used to process “sense of” legislation. Since 1800, Members of the House and Senate have introduced resolutions of censure against at least 12 sitting Presidents. Two additional Presidents received criticism via alternative means (a House committee report and an amendment to a resolution). The clearest instance of a successful presidential censure is Andrew Jackson. The Senate approved a resolution of censure in 1834. On three other occasions, critical resolutions were adopted, but their final language, as amended, obscured the original intention to censure the President. -

First Phase Lifting of Mass Suspension

Mass in Church during COVID Pandemic: 1st Phase of Return 1 (Version of May 14, 2020) These directives are promulgated by the Archbishop of Santa Fe for the early lifting of the suspension of publicly-attended Mass General: Attendance limited to 10% of building capacity (per fire marshal assessment). Dispensation from Sunday obligation remains for all. Safety/ common good is priority. Coordination with staff will be essential, as will be clear and detailed communication to the people. Local pastors can make these directives more stringent as necessitated by local conditions; however, they cannot make them less strict. Reopening will be accomplished in phases. The Archbishop/Vicar General will continue to offer live stream/recorded Mass each Sunday and weekdays, and parishes are encouraged to do so as well. Social distancing/masks/increased cleaning are mandatory. Each measure presents an additional layer of protection, which individually may be insufficient. Cleaning staff should be present to disinfect commonly-touched surfaces after each Mass (pews, door handles, rails, etc.) Have on hand ample cleaning supplies, and masks if possible. Persons over 60 and with compromised immune systems should be pre-advised that they are at increased risk and be encouraged to remain home. Communicate that these guidelines are for the safety of lives and health for themselves and neighbors, and that continued opening depends on everyone’s cooperation. The Archdiocese remains responsive to changes in conditions/requirements, and will revise these instructions periodically as necessary. In Churches No open holy water present. There may be a dispenser for people to fill bottles if desired. -

Transcript: Suspension & Debarment

Transcript: Suspension & Debarment - Hello, and thank you for joining today's acquisition seminar hosted by the Federal Acquisition Institute. Today's seminar, entitled Suspension and Debarment: What Makes a Successful Meeting, will give us a glimpse into the minds of Suspension and Debarment Officials in the Federal Government, and a behind-the-closed-door look at a successful meeting between a Suspension and Debarment Official and contractor representatives. Suspension and debarment, two terms that probably strike terror into the heart of any company or individual that wants to do business with the Federal Government. But suspension and debarment aren't meant to be punitive. And meetings with Suspension and Debarment Officials are not meant to be contentious or antagonistic. Suspension and debarment are used only as a last resort to protect the interests of the Federal Government. More often than not, a meeting with a Suspension and Debarment Official is an opportunity to help an individual or company get back on track. To enlighten us on the implications of suspension and debarment, we have a team of officials from agencies across the Federal Government. First we'll present two Suspension and Debarment Officials as they discuss, among other things, what they look for when they're determining a contractor's present responsibility and how members of the acquisition workforce can help. Then we'll present an example of a contractor's meeting with a Suspension and Debarment Official. These closed-door meetings have wide-ranging effects on Federal Government procurement across all agencies. The meetings are necessarily private, but they are one of a Suspension and Debarment Official's most useful and effective tools for enhancing contractor responsibility. -

Pope Leo of Bourges, Clerical Immunity and the Early Medieval Secular

Pope Leo of Bourges, clerical immunity and the early medieval secular Charles West1 At first glance, to investigate the secular in the early Middle Ages may seem to be wilfully flirting with anachronism. It is now widely agreed that the early medieval political order was constructed neither against nor in opposition to the Church, but rather that the Church, or ecclesia, largely constituted that order.2 This would seem to leave little early medieval scope for the secular, understood in conventional terms as the absence of religion (and moreover often associated with modernity).3 This position is broadly reflected in the recent historiography, which focuses either on Augustine’s short-lived notion of the secular in Late Antiquity, or on the role of Gregorian Reform in beginning a slow secularisation of political authority.4 These separate approaches are spared from intersecting by the early medieval half-millennium which is presented, as so often, as either too late or too early for key developments. However, in recent years, the argument has been forcefully made across a range of fields that, far from being a neutral or universal category, the secular is in reality ‘a historically-produced idea’, and one that moreover encodes a disguised theology.5 As a consequence, ‘to tell a story

1 This paper is based on research carried out on a project funded by the AHRC 2014-16. Thanks to Emma Hunter, Conrad Leyser, Rob Priest, Steve Schoenig and Danica Summerlin for their advice on a draft, as well as to audiences at UEA and Cambridge, the class of HST 6079 in Sheffield, and the editor of this special issue. -

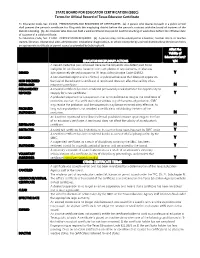

(SBEC) Terms for Official Record of Texas Educator Certificate

STATE BOARD FOR EDUCATOR CERTIFICATION (SBEC) Terms for Official Record of Texas Educator Certificate

Tx. Education Code, Sec. 21.053. PRESENTATION AND RECORDING OF CERTIFICATES. (a) A person who desires to teach in a public school shall present the person's certificate for filing with the employing district before the person's contract with the board of trustees of the district is binding. (b) An educator who does not hold a valid certificate may not be paid for teaching or work done before the effective date of issuance of a valid certificate.

Tx. Education Code, Sec. 21.003. CERTIFICATION REQUIRED. (a) A person may not be employed as a teacher, teacher intern or teacher trainee, librarian, educational aide, administrator, educational diagnostician, or school counselor by a school district unless the person holds an appropriate certificate or permit issued as provided by Subchapter B.

EDUCATOR DISCIPLINARY ACTIONS (Affect the Validity of Educator’s Cert.? Y/N)

DENIED (Y): A denied credential was not issued because the requestor was determined to be ineligible for certification, based on non-completion of requirements, or else was administratively denied pursuant to 19 Texas Administrative Code §249.12.

NON INSCRIBED REPRIMAND (N): A non-inscribed reprimand is a formal, unpublished censure that does not appear on the face of the educator’s certificate. A reprimand does not affect the validity of an educator’s certificate.

PERMANENTLY REVOKED (Y): A revoked certificate has been rendered permanently invalid without the opportunity to reapply for a new certificate.

A probated suspension is a suspension that is not enforced as long as the conditions of probation are met. -

Before the Dawn. Monks and the Night in Late Antiquity and Early Medieval Europe

Before the Dawn. Monks and the Night in Late Antiquity and Early Medieval Europe Author(s): Mary W. Helms Source: Anthropos, Bd. 99, H. 1. (2004), pp. 177-191 Published by: Anthropos Institute Stable URL: http://www.jstor.org/stable/40466312

Abstract. - Early European monks were preoccupied with the night. They were quintessential men of the dark, for nocturns, by far their longest liturgical office, was conducted each night, in the blackness of virtually unlit churches. In so doing these monks not only ritually anticipated the coming of the dawn but also, and especially, engaged with the primordial cosmological darkness that preceded the original creation of Genesis.

[...] and with formal rules and especially ritual that defined and activated fundamental tenets of faith through carefully organized liturgical offices. Foremost among ideologically charged monastic settings and liturgical presentations were -

APOSTOLIC MANDATE of SUSPENSION

APOSTOLIC MANDATE of SUSPENSION. We, Most Illustrious & Most Reverend Lord JAMES ATKINSON-WAKE. OSB. Archbishop. HEREBY declare as of this day / date is suspended as a Titular Bishop RICKY O ALCANTARA with immediate effect. He has no authority to say Mass in public only in private at this stage. He has disobeyed a public document agreed in September 2018 by the Philippine conference of Bishops of the act of Excommunication of Mr Clifford Garcia and by act of canon law the same act falls upon him. Therefore he has been suspended from all functions as a priest from this day / date being the 13th December 2018. His celebret will be surrendered: I.D. Number: T8P140870-7EP He has no authority to say Mass at the Our Mother of Perpetual Help and the Child Jesus. The conference of bishops will meet in March 2019 in the Philippines and the case will be fully heard by the conference of bishops before the Superior General and Vicar Judge and the case will be fully decided. Therefore, Fr Allan Garcia is to take over Mass without interference at Our Mother of Perpetual Help and the Child Jesus until otherwise decided by my office. Suspended Fr Ricky O Alcantara has spoken out against the Superior General in telling lies, untruth and hatred against the Archbishop & Superior General and brought the said office by his actions into [illegible] violation of Canon Law. Given this 13th day of the Month of December in the year of our Lord [illegible]. Most Illustrious Lord James Atkinson [illegible], His Eminence, Archbishop of Birmingham & Dudley. -

Catholic Church

THIRD SUNDAY OF EASTER ~ APRIL 26, 2020 Saint Anne Catholic Church 817 So. Lincoln Road, Escanaba, MI 49829 Since 1888 Parish Office Hours: Mon.- Fri. 9:00 am-12:00 pm & 1:00-4:00 pm • Phone: 906.786.1421 Email: [email protected] ~ www.dioceseofmarquette.org/stanneescanaba From the Desk of Father Fran From the reading of Luke’s Gospel this Sunday, we hear the account of two of Jesus’ disciples who encountered Jesus after His Resurrection. This encounter shows how the first community of disciples came to believe that Jesus had risen from the dead and how the first community of believers, the Church, came into being. When reading today’s Gospel, we may be surprised to learn how those two friends of Jesus could walk and converse with Him at some length yet not recognize Him. Again, we discover here that the risen Jesus is not always easily recognized. Cleopas and the other disciple walked with a person whom they believe to be a stranger; only later did they discover the stranger to be Jesus. We also learn from this Gospel account how the first community of believers met and recognized Jesus in the breaking of the bread, just as we meet and recognize Jesus in the Eucharist. What took place at a table in the home of Cleopas and the other disciple is really no different than what takes place in our Catholic Churches at an altar during the Liturgy of the Eucharist. The disciples invited the stranger (Jesus) to stay and dine with them. During the meal in which they shared in the breaking of the bread, the disciples’ eyes were opened; they recognized the stranger as Jesus, the One who was known to be crucified days earlier. -

Byzantine Critiques of Monasticism in the Twelfth Century

A “Truly Unmonastic Way of Life”: Byzantine Critiques of Monasticism in the Twelfth Century DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Hannah Elizabeth Ewing Graduate Program in History The Ohio State University 2014 Dissertation Committee: Professor Timothy Gregory, Advisor Professor Anthony Kaldellis Professor Alison I. Beach Copyright by Hannah Elizabeth Ewing 2014

Abstract This dissertation examines twelfth-century Byzantine writings on monasticism and holy men to illuminate monastic critiques during this period. Drawing upon close readings of texts from a range of twelfth-century voices, it processes both highly biased literary evidence and the limited documentary evidence from the period. In contextualizing the complaints about monks and reforms suggested for monasticism, as found in the writings of the intellectual and administrative elites of the empire, both secular and ecclesiastical, this study shows how monasticism did not fit so well in the world of twelfth-century Byzantium as it did with that of the preceding centuries. This was largely on account of developments in the role and operation of the church and the rise of alternative cultural models that were more critical of traditional ascetic sanctity. This project demonstrates the extent to which twelfth-century Byzantine society and culture had changed since the monastic heyday of the tenth century and contributes toward a deeper understanding of Byzantine monasticism in an under-researched period of the institution.

Dedication This dissertation is dedicated to my family, and most especially to my parents.

Acknowledgments This dissertation is indebted to the assistance, advice, and support given by Anthony Kaldellis, Tim Gregory, and Alison Beach. -

1455. Causes for Censure, Suspension, Or Revocation of License, Registration, Or Certification

§1455. Causes for censure, suspension, or revocation of license, registration, or certification A. The commission may censure a licensee, registrant, or certificate holder or conditionally or unconditionally suspend or revoke any license, registration, or certificate issued under this Chapter, levy fines or impose civil penalties not to exceed five thousand dollars, or impose continuing education requirements on licensees, registrants, or certificate holders if, in the opinion of the commission, a licensee, registrant, or certificate holder is performing or attempting to perform or has performed or has attempted to perform any of the following acts: (1) Committing any act in violation of the Louisiana Real Estate License Law not specified in this Section. (2) Violating any rule or regulation promulgated by the commission in the interest of the public and consistent with the provisions of this Chapter. (3) Committing any act in violation of the Louisiana Timesharing Act. (4) Failure to account for any money coming into his possession belonging to others. (5) Failure to properly disburse money which belongs to others upon its coming into his possession. (6) Commingling the money or other property of his principals with his own. (7) Accepting, giving, or charging any undisclosed commission, rebate, or direct profit on expenditures made for a principal. (8) Representing or attempting to represent a real estate broker or real estate agency other than the licensed broker or agency listed on the real estate license issued by the commission. (9) Acting in the dual capacity of agent and undisclosed principal in any transaction. However, such a relationship shall not constitute dual agency if the licensee is the seller or lessor of property that he owns or if the property is owned by a real estate business of which the licensee is the sole proprietor and agent and the same is disclosed to the buyer or tenant. -

The Catholic Church and the Holocaust, 1930–1965

The Catholic Church and the Holocaust, 1930–1965 Michael Phayer INDIANA UNIVERSITY PRESS Bloomington and Indianapolis

This book is a publication of Indiana University Press 601 North Morton Street Bloomington, IN 47404-3797 USA http://www.indiana.edu/~iupress Telephone orders 800-842-6796 Fax orders 812-855-7931 Orders by e-mail [email protected] © 2000 by John Michael Phayer All rights reserved No part of this book may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and recording, or by any information storage and retrieval system, without permission in writing from the publisher. The Association of American University Presses’ Resolution on Permissions constitutes the only exception to this prohibition. The paper used in this publication meets the minimum requirements of American National Standard for Information Sciences—Permanence of Paper for Printed Library Materials, ANSI Z39.48-1984. Manufactured in the United States of America

Library of Congress Cataloging-in-Publication Data Phayer, Michael, date. The Catholic Church and the Holocaust, 1930–1965 / Michael Phayer. p. cm. Includes bibliographical references and index. ISBN 0-253-33725-9 (alk. paper) 1. Pius XII, Pope, 1876–1958—Relations with Jews. 2. Judaism—Relations—Catholic Church. 3. Catholic Church—Relations—Judaism. 4. Holocaust, Jewish (1939–1945) 5. World War, 1939–1945—Religious aspects—Catholic Church. 6. Christianity and antisemitism—History—20th century. I. Title. BX1378 .P49 2000 282'.09'044—dc21 99-087415 ISBN 0-253-21471-8 (pbk.) 2 3 4 5 6 05 04 03 02 01

CONTENTS Acknowledgments Introduction 1. -

Pastoral Manual Are Offered to Assist Them in Serving the Faithful

SECTION I SACRAMENTS POLICIES AND PROCEDURES

Priests, deacons and pastoral ministers of the Diocese of Rockville Centre serve parishes and institutions through sacramental ministries. The following sections of the Pastoral Manual are offered to assist them in serving the faithful. They are a general guide and are not meant to be exhaustive in nature. The disciplines of the universal Church and the specific policies of the Diocese of Rockville Centre are summarized below. Note that this section is preceded by a table of contents. The general index at the end of the Pastoral Manual may also be helpful. For a more complete treatment of these sacraments, reference should be made to the 1983 Code of Canon Law which contains legislation for the universal Church; the rite for each sacrament also explains the details of sacramental celebration. Diocesan policies and procedures are coordinated by the Chancellor’s Office. Often parishes have their own sacramental policies which assist Church ministers in serving their parishioners.

TABLE OF CONTENTS
I. SACRAMENTS: INTRODUCTION
A. CATECHESIS AND PREPARATION: READINESS FOR THE SACRAMENTS
B. VALIDITY AND LAWFULNESS
C. OFFERINGS: GENERAL PRINCIPLES