An Approach to Bodo Document Clustering

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


LCSH Section K

K., Rupert (Fictitious character)
  USE Rupert (Fictitious character : Laporte)
K-4 PRR 1361 (Steam locomotive)
  USE 1361 K4 (Steam locomotive)
K-9 (Fictitious character) (Not Subd Geog)
  UF K-Nine (Fictitious character)
  K9 (Fictitious character)
K 37 (Military aircraft)
  USE Junkers K 37 (Military aircraft)
K 98 k (Rifle)
  USE Mauser K98k rifle
K.A.L. Flight 007 Incident, 1983
  USE Korean Air Lines Incident, 1983
K.A. Lind Honorary Award
  USE Moderna museets vänners skulpturpris
K.A. Linds hederspris
  USE Moderna museets vänners skulpturpris
K-ABC (Intelligence test)
  USE Kaufman Assessment Battery for Children
K-B Bridge (Palau)
  USE Koro-Babeldaod Bridge (Palau)
K-BIT (Intelligence test)
  USE Kaufman Brief Intelligence Test
K.

Motion of K stars in line of sight
Radial velocity of K stars
— Orbits
  UF Galactic orbits of K stars
  K stars—Galactic orbits
  BT Orbits
— Radial velocity
  USE K stars—Motion in line of sight
— Spectra
K Street (Sacramento, Calif.)
  This heading is not valid for use as a geographic subdivision.
  BT Streets—California
K-T boundary
  USE Cretaceous-Paleogene boundary
K-T Extinction
  USE Cretaceous-Paleogene Extinction
K-T Mass Extinction
  USE Cretaceous-Paleogene Extinction
K-TEA (Achievement test)
  USE Kaufman Test of Educational Achievement
K-theory
  [QA612.33]

Ka-đai language
  USE Kadai languages
Ka’do Herdé language
  USE Herdé language
Ka’do Pévé language
  USE Pévé language
Ka Dwo (Asian people)
  USE Kadu (Asian people)
Ka-Ga-Nga script (May Subd Geog)
  UF Script, Ka-Ga-Nga
  BT Inscriptions, Malayan
Ka-houk (Wash.)
  USE Ozette Lake (Wash.)
Ka Iwi National Scenic Shoreline (Hawaii)
  UF Ka Iwi Scenic Shoreline Park (Hawaii)
  Ka Iwi Shoreline (Hawaii)
  BT National parks and reserves—Hawaii
Ka Iwi Scenic Shoreline Park (Hawaii)
  USE Ka Iwi National Scenic Shoreline (Hawaii)
Ka Iwi Shoreline (Hawaii)
  USE Ka Iwi National Scenic Shoreline (Hawaii)
Ka-ju-ken-bo
  USE Kajukenbo

Dimasa Kachari of Assam

ETHNOGRAPHIC STUDY NO·7II CENSUS OF INDIA 1961 VOLUME I MONOGRAPH SERIES PART V-B DIMASA KACHARI OF ASSAM Investigation and Draft: Dr. P. D. Sharma Guidance: A. M. Kurup Editing: Dr. B. K. Roy Burman, Deputy Registrar General, India OFFICE OF THE REGISTRAR GENERAL, INDIA MINISTRY OF HOME AFFAIRS NEW DELHI CONTENTS: Foreword; Preface; I. Origin and History; II. Distribution and Population Trend; III. Physical Characteristics; IV. Family, Clan, Kinship and Other Analogous Divisions; V. Dwelling, Dress, Food, Ornaments and Other Material Objects distinctive of the Community; VI. Environmental Sanitation, Hygienic Habits, Disease and Treatment; VII. Language and Literacy; VIII. Economic Life; IX. Life Cycle; X. Religion; XI. Leisure, Recreation and Child Play; XII. Relation among different segments of the community; XIII. Inter-Community Relationship; XIV. Structure of Social Control, Prestige and Leadership; XV. Social Reform and Welfare; Bibliography; Appendix; Annexure. FOREWORD: The Constitution lays down that "the State shall promote with special care the educational and economic interests of the weaker sections of the people and in particular of the Scheduled Castes and Scheduled Tribes and shall protect them from social injustice and all forms of exploitation". To assist States in fulfilling their responsibility in this regard, the 1961 Census provided a series of special tabulations of the social and economic data on Scheduled Castes and Scheduled Tribes. The lists of Scheduled Castes and Scheduled Tribes are notified by the President under the Constitution and the Parliament is empowered to include in or exclude from the lists, any caste or tribe.

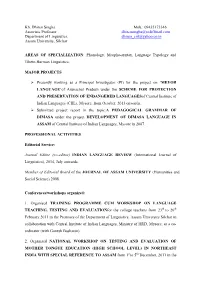

Kh. Dhiren Singha Mob.: 09435173346 Associate Professor, Department of Linguistics, Assam University, Silchar

Kh. Dhiren Singha Mob.: 09435173346 Associate Professor, Department of Linguistics, Assam University, Silchar AREAS OF SPECIALIZATION: Phonology, Morpho-syntax, Language Typology and Tibeto-Burman Linguistics. MAJOR PROJECTS Presently working as a Principal Investigator (PI) for the project on ‘MEYOR LANGUAGE’ of Arunachal Pradesh under the SCHEME FOR PROTECTION AND PRESERVATION OF ENDANGERED LANGUAGES of Central Institute of Indian Languages (CIIL), Mysore, from October, 2013 onwards. Submitted project report on the topic: A PEDAGOGICAL GRAMMAR OF DIMASA under the project DEVELOPMENT OF DIMASA LANGUAGE IN ASSAM of Central Institute of Indian Languages, Mysore in 2007. PROFESSIONAL ACTIVITIES Editorial Service: Journal Editor (co-editor) INDIAN LANGUAGE REVIEW (International Journal of Linguistics), 2014, July onwards. Member of Editorial Board of the JOURNAL OF ASSAM UNIVERSITY (Humanities and Social Science) 2008. Conferences/workshops organized: 1. Organised TRAINING PROGRAMME CUM WORKSHOP ON LANGUAGE TEACHING, TESTING AND EVALUATION for the college teachers from 23rd to 26th February 2011 in the Premises of the Department of Linguistics, Assam University Silchar in collaboration with Central Institute of Indian Languages, Ministry of HRD, Mysore, as a co-ordinator (with Ganesh Baskaran). 2. Organised NATIONAL WORKSHOP ON TESTING AND EVALUATION OF MOTHER TONGUE EDUCATION (HIGH SCHOOL LEVEL) IN NORTHEAST INDIA WITH SPECIAL REFERENCE TO ASSAM from 1st to 5th December, 2011 in the Premises of the Department of Linguistics, Assam University Silchar in collaboration with Central Institute of Indian Languages, Ministry of HRD, Mysore, as a co-ordinator (with Ganesh Baskaran). PROFESSIONAL ORGANIZATIONS I. Life member of Dravidian Linguistics Association, St. Xavier’s College, P. O.

Tone Systems of Dimasa and Rabha: a Phonetic and Phonological Study

TONE SYSTEMS OF DIMASA AND RABHA: A PHONETIC AND PHONOLOGICAL STUDY By PRIYANKOO SARMAH A DISSERTATION PRESENTED TO THE GRADUATE SCHOOL OF THE UNIVERSITY OF FLORIDA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY UNIVERSITY OF FLORIDA 2009 © 2009 Priyankoo Sarmah To my parents and friends ACKNOWLEDGMENTS The hardships and challenges encountered while writing this dissertation and while being in the PhD program are no way unlike anything experienced by other Ph.D. earners. However, what matters at the end of the day is the set of people who made things easier for me in the four years of my life as a Ph.D. student. My sincere gratitude goes to my advisor, Dr. Caroline Wiltshire, without whom I would not have even dreamt of going to another grad school to do a Ph.D. She has been a great mentor to me. Working with her for the dissertation and for several projects broadened my intellectual horizon and all the drawbacks in me and my research are purely due to my own markedness constraint, *INTELLECTUAL. I am grateful to my co-chair, Dr. Ratree Wayland. Her knowledge and sharpness made me see phonetics with a new perspective. Not much unlike the immortal Sherlock Holmes I could often hear her echo: One's ideas must be as broad as Nature if they are to interpret Nature. I am indebted to my committee member Dr. Andrea Pham for the time she spent closely reading my dissertation draft and then meticulously commenting on it. Another committee member, Dr.

And Topic Markers Indicate Gender and Number by Means of the Pronominal Article They Follow (Ho-Kwe M.TOP, Ko-Kwe F.TOP and Mo-Kwe PL-TOP)

The elusive topic: Towards a typology of topic markers (with special reference to cumulation with number in Bolinao and gender in Nalca) Bernhard Wälchli (Stockholm University) Helsinki; January 22, 2020 Abstract At least since the 1970s, topic has been widely recognized to reflect an important category in most different approaches to linguistics. However, researchers have never agreed about what exactly a topic is (researchers disagree, for instance, about whether topics express backgrounding or foregrounding) and to what extent topics are elements of syntax or discourse or both. Topics are notoriously difficult to distinguish from a range of related phenomena. Some definitions of topic are suspiciously similar to definitions of definiteness, subject, noun and contrast, so the question arises as to what extent topic is a phenomenon of its own. However, topics are also internally diverse. There is disagreement, for instance, as to whether contrastive and non-contrastive topics should be subsumed under the same notion. This talk tries to approach the category type topic bottom-up by considering cross-linguistic functional diversity in marked topics, semasiologically defined as instances of topics with explicit segmental topic markers. The first part of the talk considers the question as to whether topic markers can be defined as a gram type with one or several prototypical functions that can be studied on the basis of material from parallel texts and from descriptive sources.

An Unsupervised Word Level Language Identification of English and Kokborok Code

© 2020 JETIR August 2020, Volume 7, Issue 8 www.jetir.org (ISSN-2349-5162) An Unsupervised Word Level Language Identification of English and Kokborok Code-Mixed and Code-Switched Sentences ¹Enjula Uchoi, ²Lenin Laitonjam. ¹Lecturer, ²Supervisor. ¹Department of Computer Science and Engineering, Dhalai District Polytechnic, Agartala, India. Abstract: With the increasing use of multilingual text, the need for an automatic word level language identification model has grown. In this regard, we present an unsupervised model for word level language identification of English and Kokborok code-mixed and code-switched sentences. Several works have already been reported for various languages, including various Indian languages. But, to the best of our knowledge, ours is the first language identification work dedicated to the low resource English-Kokborok language pair. The proposed model combines a frequency lexicon based model, a character n-gram language model and a language dependent morphological dictionary-based model to classify each word. The model, which is suitable for low resource languages that lack large annotated datasets, achieves a word level accuracy of 84%. Index Terms - Word level language identification, code-mixing, code-switching, dictionaries, affixes, Kokborok, English. I. INTRODUCTION Automatic language identification for code-mixed and code-switched text in social media is gaining more and more attention among researchers working in the field of Natural Language Processing. Multilingual speakers now far outnumber monolingual speakers, and on social media many users mix more than two languages in their conversations on Facebook, Twitter and many other platforms.

People of the Margins Philippe Ramirez

People of the Margins Philippe Ramirez To cite this version: Philippe Ramirez. People of the Margins. Spectrum, 2014, 978-81-8344-063-9. hal-01446144 HAL Id: hal-01446144 https://hal.archives-ouvertes.fr/hal-01446144 Submitted on 25 Jan 2017 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. People of the Margins: Across Ethnic Boundaries in North-East India Philippe Ramirez SPECTRUM PUBLICATIONS GUWAHATI : DELHI In association with CNRS, France SPECTRUM PUBLICATIONS • Hem Barua Road, Pan Bazar, GUWAHATI-781001, Assam, India. Fax/Tel +91 361 2638434 • 298-B Tagore Park Extn., Model Town-1, DELHI-110009, India. Tel +91 9435048891 Website: www.spectrumpublications.in First published in 2014 © Author Published by arrangement with the author for worldwide sale. Unless otherwise stated, all photographs and maps are by the author. All rights reserved. Except for brief quotations in critical articles or reviews, no part of this publication may be reproduced, stored in a retrieval system, or transmitted/used in any form by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior written permission of the publishers.

Contribution of the Missionary to the Bodo Language and Literature

International Journal of All Research Education and Scientific Methods (IJARESM), ISSN: 2455-6211 Volume 8, Issue 12, December-2020, Impact Factor: 7.429, Available online at: www.ijaresm.com Contribution of the Missionary to the Bodo Language and Literature Prasanta Boro Asstt. Professor, Deptt. Of Bodo, L.O.K.D. College, Dhekiajuli, Sonitpur, Assam. ABSTRACT Linguistically the Bodos include a large group of people who are the speakers of the Tibeto-Burman speeches of the North and East Bengal, Assam and Burma. The period starting from 1797 down to 1959 has been described as the Missionary age of the Bodo Literature by many writers and thinkers. In this early period, the Christian Missionaries contributed to the growth of Bodo Literature, and research on the Bodo Language and Literature was undertaken by Missionary workers and writers. In 1846 B. H. Hodgson used the word Bodo in his writings for the first time. The contribution of Rev. S. Endle towards the growth of Bodo Language and Literature is greater than that of any other Christian Missionary writer or contributor. In his “An Outline of the Kachari Grammar (1884)”, he discussed at length the Bodo Language of the Boro Kacharis of Darrang District. He also described the Bodo Culture vividly in his book “The Kacharis (1911)”. In 1889 Rev. L. O. Skrefshrud brought out his “A Short Grammar of the Mech of Bodo Language”. In this paper, an attempt has been made to study the activities and contribution of the Missionary towards the growth of Bodo Language and Literature.

Regions of Assam

REGIONS OF ASSAM Geographically Assam is situated in the north-eastern region of the Indian sub-continent. It covers an area of 78,523 sq. kilometres (approximate). Assam, the gateway to north-east India, is a land of blue hills, valleys and rivers. Assam has been lavishly bestowed with unique natural beauty and abundant natural wealth. The natural beauty of Assam is one of the most fascinating in the country, with evergreen forests, majestic rivers, rich landscape, lofty green hills, bushy grassy plains, the rarest flora and fauna, and beautiful islands. The capital of Assam is Dispur and the state emblem is the one-horned rhino. Assam is bounded by Manipur, Nagaland and Myanmar in the east, by West Bengal in the west, by Bhutan and Arunachal Pradesh in the north, and by Mizoram, Tripura, Bangladesh and Meghalaya in the south. The literacy rate in Assam has seen an upward trend and is 72.19 percent as per the 2011 population census. Of that, male literacy stands at 77.85 percent while female literacy is at 66.27 percent. As per details from Census 2011, Assam has a population of 3.12 crore, an increase from 2.67 crore in the 2001 census. The total population of Assam as per the 2011 census is 31,205,576, of which males and females number 15,939,443 and 15,266,133 respectively. In 2001, the total population was 26,655,528, of which males were 13,777,037 and females 12,878,491. The total population growth in this decade was 17.07 percent, while in the previous decade it was 18.85 percent.

Use Style: Paper Title

IJRECE VOL. 2 ISSUE 3 JUL-SEP 2014 ISSN: 2348-2281 (ONLINE) Design challenges of Spoken Dialogue System in Bodo Language Dr. Manoj Kr. Deka¹, Aniruddha Deka¹ ¹Dept. of Com.Sc & Tech, Bodoland University Abstract- In the last few years there has been significant development in natural language processing, speech recognition and text-to-speech conversion. Within this development, spoken dialogue can play a vital role in human-computer interaction. Spoken dialogue is the missing component which makes it possible to develop conversational interfaces between human and computer. Designing a spoken dialogue system for a developing language like Bodo is even more challenging. In this paper we give a brief survey on spoken dialogue systems and their challenges, and discuss different issues for developing spoken dialogue systems in the context of Bodo Language. Keywords—Spoken dialogue system, importance and characteristics, Bodo language, Bodo corpus. Could we somehow achieve human like performance and flexibility with computer interfaces? Wouldn’t it make sense to have systems that could be used by having human-human like conversation, that is, the most natural way for a human to interact with somebody? One of the solutions to these questions is a spoken dialogue system. Spoken dialogue systems try to bridge this conversational gap between the user and the system. There has been a substantial improvement in natural language processing, speech recognition and text-to-speech conversion in the past decade. Spoken dialogue is the missing component in between these, making it possible to develop conversational interfaces to computers. [3] II. ARCHITECTURE OF SPOKEN DIALOGUE SYSTEM

Morphological Analyzer in the Development of Bilingual Dictionary (Kokborok-English) - an Analysis for Appropriate Method and Approach Partha Sarkar, Dr

ISSN: 2277-3754 ISO 9001:2008 Certified International Journal of Engineering and Innovative Technology (IJEIT) Volume 4, Issue 10, April 2015 Morphological Analyzer in the Development of Bilingual Dictionary (Kokborok-English) - An Analysis for Appropriate Method and Approach Partha Sarkar, Dr. Bipul Syam Purkayastha Abstract: The genre of Natural Language Processing is a widespread area which is confronted with many challenges and consecutively it produces new positive dimensions in the fields of computer applications or machine translation. Nowadays, the particular area, machine translation, is becoming popular because of its practical uses and applications for various purposes. However, machine translation is very much related with linguistic issues like morphology, syntax, semantics etc. This paper is particularly focused on the development of a Kokborok bilingual dictionary. However, when we tend to discuss the particular area, i.e. the development of a bilingual dictionary, the area of morphology and morphological analysis becomes very crucial. This paper is aimed at discussing and analyzing the morphology of Kokborok language, the various NLP methods and techniques related with morphological analyzer to develop a Kokborok [...] particular area, i.e. the development of Bilingual dictionary, the area of Morphology and Morphological Analysis becomes very crucial. But before we delve deep into the analysis of the areas ‘Bilingual Dictionary’ and ‘Morphological Analyzer’, let us have a bird’s eye view on Kokborok language. II. A BRIEF OVERVIEW OF KOKBOROK LANGUAGE Kokborok language is regarded as the native language spoken by the Borok people belonging to the state of Tripura. The term ‘Kok-borok’ is actually a compound word consisting of two different words; the word ‘kok’ literally means language, and the word ‘borok’ means nation.

UC Berkeley Dissertations, Department of Linguistics

UC Berkeley Dissertations, Department of Linguistics Title Pronouns and Pronominal Morphology in Tibeto-Burman Permalink https://escholarship.org/uc/item/00h8574v Author Bauman, James Publication Date 1975 eScholarship.org Powered by the California Digital Library University of California Pronouns and Pronominal Morphology in Tibeto-Burman By James John Bauman B.S. (Michigan State University) 1964 C.Phil. (University of California) 1973 DISSERTATION Submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in Linguistics in the GRADUATE DIVISION of the UNIVERSITY OF CALIFORNIA, BERKELEY Approved: Committee in Charge DEGREE CONFERRED DECEMBER 20, 1975 Reproduced with permission of the copyright owner. Further reproduction prohibited without permission. To my parents Robert and Julia Bauman who awaited this accomplishment with patience, understanding, and love. PREFACE My thanks and appreciation go out to the members of my committee, Jim Matisoff, Shirley Silver, and Karl Zimmer, for the painful task of reading previous drafts of this work, often lacking in depth, organization, and style, much of which they kindly supplied. I am, of course, indebted in more significant ways to the people who, as my teachers, contributed so much to the background necessary for successfully realizing this work. Jim Matisoff stands in the highest rank. His enthusiasm for Southeast Asia and his skill in discussing it, generated in me a similar enthusiasm at, luckily, an impressionable point of my career.