Dena Slothower

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

In the Land of Blood and Honey What’S Fair Or Just in Love and War Crimes? Lessons for Transitional Justice* Carrie Menkel-Meadow

4 In The Land of Blood and Honey What’s Fair or Just in Love and War Crimes? Lessons for Transitional Justice* Carrie Menkel-Meadow n 1990, the University of California at Los Angeles (UCLA) held a conference on “Probing the Limits of Representation: Nazism and the IFinal Solution” during which leading international scholars of history, literature, cultural studies, philosophy, critical theory, arts, language, sociology, and psychology explored the question of whether the mass atrocities of Nazism and the Final Solution to exterminate all European Jewry could be represented in anything other than “factual” historical documentation and narrative (Friedlander 1992; White 1992; LaCapra 1996). This was some years after the first documentaries and “fictional- ized” films on the Holocaust had appeared, after Judgment at Nuremberg (Kramer 1961), but before Schindler’s List (Spielberg 1993) and after vari- ous cultural critics had said such things as “there can be no poetry after Auschwitz” (attributed to Theodor Adorno), and no morally acceptable artistic representation could be made of the Shoah (Friedlander 1992). The conference explored what were then called “limits to representation” of certain “truths,” so reprehensible, so mass and, at the same time, so spe- cific or so exceptional (the “German” problem; Maier 1988), that no genre could fully, morally, or “accurately” depict either the systematic evil or the mass pain, death, and injury that is the Holocaust. As the child of Holocaust survivors, I attended that conference as both scholar and one generation removed “victim,” having spent a lifetime listening to my family’s stories and seeing many visual images of both their own stories and the larger international one that was World War II. -

CONTRACT CONFLICT Guy A

COPYRIGHT © 2017 VIRGINIA LAW REVIEW ASSOCIATION COPYRIGHT SURVIVES: RETHINKING THE COPYRIGHT- CONTRACT CONFLICT Guy A. Rub* Twenty-one years ago, copyright died. More accurately, it was killed. In 1996, in ProCD v. Zeidenberg, Judge Easterbrook, writing for the Seventh Circuit, held that a contract that restricted the use of factual information was not preempted by the Copyright Act and therefore enforceable. The reaction among copyright scholars was swift and passionate. In dozens of articles and books, spreading over two decades, scholars cautioned that if the ProCD approach is broadly adopted, the results would be dire. Through contracts, the rights of copyright owners would run amok, expand, and in doing so they would invade, shrink, and possibly destroy the public domain. Contracts, we were repeatedly warned throughout the years, would kill copyright law. This Article challenges this scholarly consensus by studying the court opinions that have dealt with the copyright-contract conflict over the past four decades. This examination reveals surprising facts: notwithstanding the scholars’ warnings, ProCD’s approach won the day and was embraced by most federal circuit courts. The doomsday scenarios scholars warned against, however, did not materialize. The * Associate Professor, The Ohio State University Michael E. Moritz College of Law. I would like to thank Professors Clark Asay, Ian Ayres, Jonathan Baker, Scott Baker, Omri Ben-Shahar, Robert Brauneis, Wendy Gordon, David Hoffman, Robert Merges, Mark McKenna, Alan Michaels, Michael -

ICN 2020 Virtual Annual Conference Day

KnowledgeVision | ICN 2020 Day One Sept 14 CYNTHIA: Welcome to ICN 2020. We are delighted that you are joining us this week for the ICN Annual Conference. Today through Wednesday, we'll see two plenary discussions and related working group videos each day. On Thursday, we have special programming on ICN work related to NGAs, younger agencies, and economists. Each day you'll find the link to these session posted on the conference web page, just like you did today. If you have questions, suggestions, or just want to let us know that you're tuning in, you can email us at [email protected] and [email protected]. Follow ICN on Twitter. This week using the special hashtag ICN2020. And now it is my great pleasure to turn it over to [INAUDIBLE] president and ICN chair Andreas Mundt. ANDREAS Thanks a lot, Cynthia. Well, welcome to the 2020 ICN Annual Conference, a MUNDT: meeting of members of the ICN of our non-governmental advisors and many other people this time, because we take a chance and have more attendees than ever probably. We have four conference days ahead of us. We're going to have a host showcase. There will be working group plenaries. There will be special sessions for younger agencies for our non-governmental advisors for economists, and there will be a follow up program after the conference that will be organized by the working groups. There will be weekly events. Well, this is our first virtual conference. A virtual organization like the ICN and a virtual conference sounds easy, but in fact, it was hard to do that, to set up the program adjusted to the virtual world. -

Cashier Du Cinema

PROFILES CASHIER DU CINEMA After supermarkets, private equity, and politics, Ron Burkle makes a move on Hollywood. BY CONNIE BRUCK nside the towering iron gates of Green don’t want to think you’re in L.A., you’re Around that time, Burkle was seen at an Acres, a Beverly Hills estate built in not really in L.A.” L.A. night club, with “two drop-dead- theI nineteen-twenties by the silent-film Burkle turned Green Acres into the gorgeous girls, maybe twenty years old, actor Harold Lloyd, a narrow road climbs city’s premier political showcase. Shortly one on either arm,” a person who was at through eight acres of lawns and gardens after buying the estate, he hosted a fund- the club said. “It was such a statement: to a mansion modelled on the Villa Pal- raiser for Senator Dianne Feinstein, I can do whatever I want.” The New mieri, the Florentine palazzo where Boc- whose campaign he served as a finance York Post ran items about Burkle carous- caccio set the “Decameron.” One after- chair. The next year, he met Bill Clinton, ing with young models on his plane, noon in mid-June, the courtyard held a who was running for President, and the which had customized sleeping quarters. dozen gleaming sports cars—Lambor- two found a ready kinship. Although (Burkle, working with an F.B.I. sting, se- ghinis, Teslas, Ferraris, McLarens—ar- Burkle was a registered Republican until cretly videotaped the Post columnist ranged in a circular design, like giant steel 2004, by the mid-nineties he had be- Jared Paul Stern asking him for money petals. -

POWERVR 3D Application Development Recommendations

Imagination Technologies Copyright POWERVR 3D Application Development Recommendations Copyright © 2009, Imagination Technologies Ltd. All Rights Reserved. This publication contains proprietary information which is protected by copyright. The information contained in this publication is subject to change without notice and is supplied 'as is' without warranty of any kind. Imagination Technologies and the Imagination Technologies logo are trademarks or registered trademarks of Imagination Technologies Limited. All other logos, products, trademarks and registered trademarks are the property of their respective owners. Filename : POWERVR. 3D Application Development Recommendations.1.7f.External.doc Version : 1.7f External Issue (Package: POWERVR SDK 2.05.25.0804) Issue Date : 07 Jul 2009 Author : POWERVR POWERVR 1 Revision 1.7f Imagination Technologies Copyright Contents 1. Introduction .................................................................................................................................4 1. Golden Rules...............................................................................................................................5 1.1. Batching.........................................................................................................................5 1.1.1. API Overhead ................................................................................................................5 1.2. Opaque objects must be correctly flagged as opaque..................................................6 1.3. Avoid mixing -

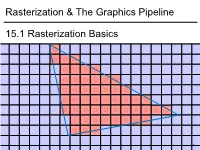

Rasterization & the Graphics Pipeline 15.1 Rasterization Basics

1 1 0.8 0.6 0.4 0.2 0 -0.2 -0.4 -0.6 -0.8 15.1Rasterization Basics Rasterization & TheGraphics Pipeline Rasterization& -1 -1 -0.8 -0.6 -0.4 -0.2 0 0.2 0.4 0.6 0.8 1 In This Video • The Graphics Pipeline and how it processes triangles – Projection, rasterisation, shading, depth testing • DirectX12 and its stages 2 Modern Graphics Pipeline • Input – Geometric model • Triangle vertices, vertex normals, texture coordinates – Lighting/material model (shader) • Light source positions, colors, intensities, etc. • Texture maps, specular/diffuse coefficients, etc. – Viewpoint + projection plane – You know this, you’ve done it! • Output – Color (+depth) per pixel Colbert & Krivanek 3 The Graphics Pipeline • Project vertices to 2D (image) • Rasterize triangle: find which pixels should be lit • Compute per-pixel color • Test visibility (Z-buffer), update frame buffer color 4 The Graphics Pipeline • Project vertices to 2D (image) • Rasterize triangle: find which pixels should be lit – For each pixel, test 3 edge equations • if all pass, draw pixel • Compute per-pixel color • Test visibility (Z-buffer), update frame buffer color 5 The Graphics Pipeline • Perform projection of vertices • Rasterize triangle: find which pixels should be lit • Compute per-pixel color • Test visibility, update frame buffer color – Store minimum distance to camera for each pixel in “Z-buffer” • ~same as tmin in ray casting! – if new_z < zbuffer[x,y] zbuffer[x,y]=new_z framebuffer[x,y]=new_color frame buffer Z buffer 6 The Graphics Pipeline For each triangle transform into eye space (perform projection) setup 3 edge equations for each pixel x,y if passes all edge equations compute z if z<zbuffer[x,y] zbuffer[x,y]=z framebuffer[x,y]=shade() 7 (Simplified version) DirectX 12 Pipeline (Vulkan & Metal are highly similar) Vertex & Textures, index data etc. -

Powervr Graphics - Latest Developments and Future Plans

PowerVR Graphics - Latest Developments and Future Plans Latest Developments and Future Plans A brief introduction • Joe Davis • Lead Developer Support Engineer, PowerVR Graphics • With Imagination’s PowerVR Developer Technology team for ~6 years • PowerVR Developer Technology • SDK, tools, documentation and developer support/relations (e.g. this session ) facebook.com/imgtec @PowerVRInsider │ #idc15 2 Company overview About Imagination Multimedia, processors, communications and cloud IP Driving IP innovation with unrivalled portfolio . Recognised leader in graphics, GPU compute and video IP . #3 design IP company world-wide* Ensigma Communications PowerVR Processors Graphics & GPU Compute Processors SoC fabric PowerVR Video MIPS Processors General Processors PowerVR Vision Processors * source: Gartner facebook.com/imgtec @PowerVRInsider │ #idc15 4 About Imagination Our IP plus our partners’ know-how combine to drive and disrupt Smart WearablesGaming Security & VR/AR Advanced Automotive Wearables Retail eHealth Smart homes facebook.com/imgtec @PowerVRInsider │ #idc15 5 About Imagination Business model Licensees OEMs and ODMs Consumers facebook.com/imgtec @PowerVRInsider │ #idc15 6 About Imagination Our licensees and partners drive our business facebook.com/imgtec @PowerVRInsider │ #idc15 7 PowerVR Rogue Hardware PowerVR Rogue Recap . Tile-based deferred renderer . Building on technology proven over 5 previous generations . Formally announced at CES 2012 . USC - Universal Shading Cluster . New scalar SIMD shader core . General purpose compute is a first class citizen in the core … . … while not forgetting what makes a shader core great for graphics facebook.com/imgtec @PowerVRInsider │ #idc15 9 TBDR Tile-based . Tile-based . Split each render up into small tiles (32x32 for the most part) . Bin geometry after vertex shading into those tiles . Tile-based rasterisation and pixel shading . -

Nabet-Abc Master Agreement

NABET-CWA/ABC Inc. 2017-2021 Master Agreement MASTER AGREEMENT TABLE OF CONTENTS General Articles Article I. Recognition and Warranty ............................................................... 2 II. No Discrimination ........................................................................... 2 III. Employment ..................................................................................... 3 IV. Check-Off ........................................................................................ 7 V. No Strikes or Lockouts .................................................................... 9 VI. Transfer of Work ............................................................................. 9 VII. Program Origination ...................................................................... 10 VIII. Work Schedule, Overtime and Penalties ....................................... 15 IX. Meal Periods .................................................................................. 22 X. Night Shift Differential .................................................................. 22 XI. Seniority, Layoffs and Rehires ...................................................... 23 XII. Transfers, Training and Temporary Upgrading ............................. 31 XIII. Leave of Absence .......................................................................... 33 XIV. Discharges ...................................................................................... 35 XV. Severance Pay ............................................................................... -

Real-Time Ray Traced Ambient Occlusion and Animation Image Quality and Performance of Hardware- Accelerated Ray Traced Ambient Occlusion

DEGREE PROJECTIN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS STOCKHOLM, SWEDEN 2021 Real-time Ray Traced Ambient Occlusion and Animation Image quality and performance of hardware- accelerated ray traced ambient occlusion FABIAN WALDNER KTH ROYAL INSTITUTE OF TECHNOLOGY SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE Real-time Ray Traced Ambient Occlusion and Animation Image quality and performance of hardware-accelerated ray traced ambient occlusion FABIAN Waldner Master’s Programme, Industrial Engineering and Management, 120 credits Date: June 2, 2021 Supervisor: Christopher Peters Examiner: Tino Weinkauf School of Electrical Engineering and Computer Science Swedish title: Strålspårad ambient ocklusion i realtid med animationer Swedish subtitle: Bildkvalité och prestanda av hårdvaruaccelererad, strålspårad ambient ocklusion © 2021 Fabian Waldner Abstract | i Abstract Recently, new hardware capabilities in GPUs has opened the possibility of ray tracing in real-time at interactive framerates. These new capabilities can be used for a range of ray tracing techniques - the focus of this thesis is on ray traced ambient occlusion (RTAO). This thesis evaluates real-time ray RTAO by comparing it with ground- truth ambient occlusion (GTAO), a state-of-the-art screen space ambient occlusion (SSAO) method. A contribution by this thesis is that the evaluation is made in scenarios that includes animated objects, both rigid-body animations and skinning animations. This approach has some advantages: it can emphasise visual artefacts that arise due to objects moving and animating. Furthermore, it makes the performance tests better approximate real-world applications such as video games and interactive visualisations. This is particularly true for RTAO, which gets more expensive as the number of objects in a scene increases and have additional costs from managing the ray tracing acceleration structures. -

Michael Doggett Department of Computer Science Lund University Overview

Graphics Architectures and OpenCL Michael Doggett Department of Computer Science Lund university Overview • Parallelism • Radeon 5870 • Tiled Graphics Architectures • Important when Memory and Bandwidth limited • Different to Tiled Rasterization! • Tessellation • OpenCL • Programming the GPU without Graphics © mmxii mcd “Only 10% of our pixels require lots of samples for soft shadows, but determining which 10% is slower than always doing the samples. ” by ID_AA_Carmack, Twitter, 111017 Parallelism • GPUs do a lot of work in parallel • Pipelining, SIMD and MIMD • What work are they doing? © mmxii mcd What’s running on 16 unifed shaders? 128 fragments in parallel 16 cores = 128 ALUs MIMD 16 simultaneous instruction streams 5 Slide courtesy Kayvon Fatahalian vertices/fragments primitives 128 [ OpenCL work items ] in parallel CUDA threads vertices primitives fragments 6 Slide courtesy Kayvon Fatahalian Unified Shader Architecture • Let’s take the ATI Radeon 5870 • From 2009 • What are the components of the modern graphics hardware pipeline? © mmxii mcd Unified Shader Architecture Grouper Rasterizer Unified shader Texture Z & Alpha FrameBuffer © mmxii mcd Unified Shader Architecture Grouper Rasterizer Unified Shader Texture Z & Alpha FrameBuffer ATI Radeon 5870 © mmxii mcd Shader Inputs Vertex Grouper Tessellator Geometry Grouper Rasterizer Unified Shader Thread Scheduler 64 KB GDS SIMD 32 KB LDS Shader Processor 5 32bit FP MulAdd Texture Sampler Texture 16 Shader Processors 256 KB GPRs 8 KB L1 Tex Cache 20 SIMD Engines Shader Export Z & Alpha 4 -

3/4-Discrete Optimal Transport

3 4-discrete optimal transport Fr´ed´ericde Gournay Jonas Kahn L´eoLebrat July 26, 2021 Abstract 3 This paper deals with the 4 -discrete 2-Wasserstein optimal transport between two measures, where one is supported by a set of segment and the other one is supported by a set of Dirac masses. We select the most suitable optimization procedure that computes the optimal transport and provide numerical examples of approximation of cloud data by segments. Introduction The numerical computation of optimal transport in the sense of the 2-Wasserstein distance has known several break- throughs in the last decade. One can distinguish three kinds of methods : The first method is based on underlying PDEs [2] and is only available when the measures are absolutely continuous with respect to the Lebesgue measure. The second method deals with discrete measures and is known as the Sinkhorn algorithm [7, 3]. The main idea of this method is to add an entropic regularization in the Kantorovitch formulation. The third method is known as semi- discrete [19, 18, 8] optimal transport and is limited to the case where one measure is atomic and the other is absolutely continuous. This method uses tools of computational geometry, the Laguerre tessellation which is an extension of the Voronoi diagram. The aim of this paper is to develop an efficient method to approximate a discrete measure (a point cloud) by a measure carried by a curve. This requires a novel way to compute an optimal transportation distance between two such measures, none of the methods quoted above comply fully to this framework. -

Difi: Fast Distance Field Computation Using Graphics Hardware

DiFi: Fast Distance Field Computation using Graphics Hardware Avneesh Sud Dinesh Manocha Department of Computer Science Univeristy of North Carolina Chapel Hill, NC 27599-3175 {sud,dm}@cs.unc.edu http://gamma.cs.unc.edu/DiFi Abstract Most practically efficient methods for computing the dis- tance fields in 2− or 3−space compute the distance field We present a novel algorithm for fast computation of dis- on a discrete grid. Essentially the methods fall into 2 cat- cretized distance fields using graphics hardware. Given a egories: methods based on front propagation and methods set of primitives and a distance metric, our algorithm com- based on Voronoi regions. putes the distance field along each slice of a uniform spatial Main Contributions: In this paper we present an ap- grid by rasterizing the distance functions. It uses bounds proach that computes discrete approximations of the 3D dis- on the spatial extent of Voronoi regions of each primitive as tance field and its application to medial axis computation well as spatial coherence between adjacent slices to cull the using the graphics hardware. Our contributions include: primitives. As a result, it can significantly reduce the load on the graphics pipeline. We have applied this technique 1. A novel site culling algorithm that uses Voronoi region to compute the Voronoi regions of large models composed properties to accelerate 3D distance field computation of tens of thousands of primitives. For a given grid size, 2. A technique for reducing the fill per slice using con- the culling efficiency increases with the model complexity. servative bounds on the Voronoi regions To demonstrate this technique, we compute the medial axis transform of a polyhedral model.