John F. Muratore 20 Mar 2013

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Advancements in Rocket Technology

Advancements in Rocket Technology Prepared by Marcelo Fernando Condori Mendoza Credits: NASA https://www.nasa.gov/exploration/systems/sls/overview.html 1. History of Rocketry Ancient Rockets Rockets for Warfare Rockets as Inventions Early - Mid 20th Century Rockets Space Race Rockets Future Rockets Space Launch System (SLS) Overview NASA’s Space Launch System, or SLS, is an advanced launch vehicle that provides the foundation for human exploration beyond Earth’s orbit. Credits: NASA https://www.nasa.gov/sites/default/files/atoms/files/0080_sls_fact_sheet_10162019a_final_508.pdf The Power to Explore Beyond Earth’s Orbit To fill America’s future needs for deep space missions, SLS will evolve into increasingly more powerful configurations. The first SLS vehicle, called Block 1, was able to send more than 26 metric tons (t) or 57,000 pounds (lbs.) to orbits beyond the Moon. https://www.nasa.gov/exploration/systems/sls/overview.html Block 1 - Initial SLS Configuration Credits: NASA What is SpaceX? SpaceX headquarters in December 2017; plumes from a flight of a Falcon 9 rocket are visible overhead. Spaceflight Industries will carry and launch a cluster of Kleos satellites on the SpaceX Falcon 9 scheduled for launch mid 2021. Space Exploration Technologies Corp., trading as SpaceX, is an American aerospace manufacturer and space transportation services company headquartered in Hawthorne, California, which was founded in 2002 by Elon Musk. An Airbus A321 on final assembly line 3 in the Airbus plant at Hamburg Finkenwerder Airport Main important events The goal was reducing space transportation costs to enable the colonization of Mars. -

Elevation and Deformation Extraction from TomoSAR

Title Elevation and Deformation Extraction from TomoSAR Lang Feng Thesis submitted for the degree of Doctor of Philosophy Mullard Space Science Laboratory Department of Space and Climate Physics University College London 2019 Author Declaration I, Lang Feng, confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been indicated in the thesis. LANG FENG 2019-02-01 Abstract 3D SAR tomography (TomoSAR) and 4D SAR differential tomography (Diff-TomoSAR) exploit multi-baseline SAR data stacks to provide an essential innovation of SAR Interferometry for many applications, sensing complex scenes with multiple scatterers mapped into the same SAR pixel cell. However, these are still influenced by DEM uncertainty, temporal decorrelation, orbital, tropospheric and ionospheric phase distortion and height blurring. In this thesis, these techniques are explored. As part of this exploration, the systematic procedures for DEM generation, DEM quality assessment, DEM quality improvement and DEM applications are first studied. Besides, this thesis focuses on the whole cycle of systematic methods for 3D & 4D TomoSAR imaging for height and deformation retrieval, from the problem formation phase, through the development of methods to testing on real SAR data. After DEM generation introduction from spaceborne bistatic InSAR (TanDEM-X) and airborne photogrammetry (Bluesky), a new DEM co-registration method with line feature validation (river network line, ridgeline, valley line, crater boundary feature and so on) is developed and demonstrated to assist the study of a wide area DEM data quality. -

General Assembly Distr.: Limited 16 February 2007

United Nations A/AC.105/C.1/L.291 General Assembly Distr.: Limited 16 February 2007 Original: English Committee on the Peaceful Uses of Outer Space Scientific and Technical Subcommittee Forty-fourth session Vienna, 12-23 February 2007 Draft report I. Introduction 1. The Scientific and Technical Subcommittee of the Committee on the Peaceful Uses of Outer Space held its forty-fourth session at the United Nations Office at Vienna from 12 to 23 February 2007 under the chairmanship of Mazlan Othman (Malaysia). 2. The Subcommittee held […] meetings. A. Attendance 3. Representatives of the following 50 member States of the Committee attended the session: Algeria, Argentina, Australia, Austria, Brazil, Burkina Faso, Canada, Chile, China, Colombia, Cuba, Czech Republic, Ecuador, Egypt, France, Germany, Greece, Hungary, India, Indonesia, Iran (Islamic Republic of), Italy, Japan, Kazakhstan, Libyan Arab Jamahiriya, Malaysia, Morocco, Nigeria, Pakistan, Peru, Philippines, Poland, Portugal, Republic of Korea, Romania, Russian Federation, Saudi Arabia, Slovakia, South Africa, Spain, Sudan, Sweden, Syrian Arab Republic, Thailand, Turkey, Ukraine, United Kingdom of Great Britain and Northern Ireland, United States of America, Venezuela (Bolivarian Republic of) and Viet Nam. 4. At the 658th meeting, on 12 February, the Chairman informed the Subcommittee that requests had been received from Angola, Bolivia, the Dominican Republic, Paraguay, Switzerland, the former Yugoslav Republic of Macedonia and Tunisia to attend the session as observers. Following past practice, those States were invited to send delegations to attend the current session of the Subcommittee and address it, as appropriate, without prejudice to further requests of that nature; that action did not involve any decision of the Subcommittee concerning status but was a courtesy that the Subcommittee extended to those delegations. -

SpaceX's Expanding Launch Manifest

October 2013 SpaceX’s expanding launch manifest China’s growing military might Servicing satellites in space A PUBLICATION OF THE AMERICAN INSTITUTE OF AERONAUTICS AND ASTRONAUTICS SpaceX’s expanding launch manifest IT IS HARD TO FIND ANOTHER SPACE launch services company with as diverse a customer base as Space Exploration Technologies (SpaceX), because there simply is none. No other company even comes close. Founded only a dozen years ago by Elon Musk, SpaceX has managed to win launch contracts from agencies, companies, consortiums, laboratories, and universities in the U.S., Argentina, Brazil, Canada, China, Germany, Malaysia, Mexico, Peru, Taiwan, Thailand, Turkmenistan, and the Netherlands in a relatively short period. … One of Brazil, and the Turkmensat 1 for the Ministry of Communications of Turkmenistan. A new market The move to begin launching to GEO is significant, because it opens up an entirely new and potentially lucrative market for SpaceX. It also puts the company into direct competition with commercial launch heavy hitters Arianespace of Europe with its Ariane 5ECA, U.S.-Russian joint venture International Launch Services with its Pro- … 2012, the space docking feat had been performed only by governments: the U.S., Russia, and China. The SpaceX docking debunked the myth that has prevailed since the launch of Sputnik in 1957, that space travel can be undertaken only by national governments because of the prohibitive costs and technological challenges involved. Teal Group believes it is that mythology that has helped discourage more private investment in commercial spaceflight and the more robust growth -

Ultra-Massive MIMO Communications in the Millimeter Wave and Terahertz Bands for Terrestrial and Space Wireless Systems

ULTRA-MASSIVE MIMO COMMUNICATIONS IN THE MILLIMETER WAVE AND TERAHERTZ BANDS FOR TERRESTRIAL AND SPACE WIRELESS SYSTEMS A Dissertation Presented to The Academic Faculty By Shuai Nie In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the School of Electrical and Computer Engineering College of Engineering Georgia Institute of Technology May 2021 © Shuai Nie 2021 ULTRA-MASSIVE MIMO COMMUNICATIONS IN THE MILLIMETER WAVE AND TERAHERTZ BANDS FOR TERRESTRIAL AND SPACE WIRELESS SYSTEMS Thesis committee: Dr. Ian F. Akyildiz, Advisor, School of Electrical and Computer Engineering, Georgia Institute of Technology (formerly); Dr. Gordon Stüber, School of Electrical and Computer Engineering, Georgia Institute of Technology; Dr. Raghupathy Sivakumar, Chair, School of Electrical and Computer Engineering, Georgia Institute of Technology; Dr. Chuanyi Ji, School of Electrical and Computer Engineering, Georgia Institute of Technology; Dr. Manos M. Tentzeris, School of Electrical and Computer Engineering, Georgia Institute of Technology; Dr. Ashutosh Dhekne, School of Computer Science, Georgia Institute of Technology. Date approved: April 20, 2021 ACKNOWLEDGMENTS First and foremost, I would like to express my heartiest gratitude to my advisor, Prof. Ian F. Akyildiz, for his continuous support, guidance, and encouragement to me during my Ph.D. journey. I have been blessed to have the opportunity to research under his supervision and learn under his mentorship. He has led me to numerous amazing research opportunities and allowed me to explore new directions of my research interests. I am also grateful to him for his enthusiasm and passion, which not only motivated me to advance towards the completion of this dissertation, but inspired me to embrace the unknowns in my future career. -

SpaceX Launch Manifest

Small Launchers and Small Satellites: Does Size Matter? Does Price Matter? Space Enterprise Council/CSIS - October 26, 2007 “... several ominous trends now compel a reassessment of the current business model for meeting the nation’s needs for military space capabilities.” Adm. Arthur K. Cebrowski (Ret.) Space Exploration Technologies Corporation Spacex.com One of the more worrisome trends, from a U.S. perspective, has been the declining influence of American vehicles in the global commercial launch market. Once one of the dominant players in the marketplace, the market share of U.S.-manufactured vehicles has declined because of the introduction of new vehicles and new competitors, such as Russia, which can offer launches at lower prices and/or with greater performance than their American counterparts. [The Declining Role in the U.S. Commercial Launch Industry, Futron, June 2005] Current State of the World Launch Market: French Firm Vaults Ahead In Civilian Rocket Market (Wall Street Journal); Russia Designs Spaceport Complex for South Korea (Itar-Tass); Brazil Fires Rocket in Bid to Revive Space Program (Reuters); India Plans to Double Satellite Launches Within Five Years (RIA Novosti); China to Map "Every Inch" of Moon Surface (Reuters); Astronomers Call for Arab Space Agency (Arabian Business); India to Orbit Israeli Spy Satellite in September (Space Daily); China Looking For Military Advantage Over U.S. Space Program (San Francisco Chronicle); Russia Calls for Building Lunar Base (Itar-Tass). January 11, 2007 - Chinese SC-19 rams into a Chinese weather sat orbiting at 475 miles, scattering 1600 pieces of debris through low-Earth orbit. -

2013 Commercial Space Transportation Forecasts

Federal Aviation Administration 2013 Commercial Space Transportation Forecasts May 2013 FAA Commercial Space Transportation (AST) and the Commercial Space Transportation Advisory Committee (COMSTAC) About the FAA Office of Commercial Space Transportation The Federal Aviation Administration’s Office of Commercial Space Transportation (FAA AST) licenses and regulates U.S. commercial space launch and reentry activity, as well as the operation of non-federal launch and reentry sites, as authorized by Executive Order 12465 and Title 51 United States Code, Subtitle V, Chapter 509 (formerly the Commercial Space Launch Act). FAA AST’s mission is to ensure public health and safety and the safety of property while protecting the national security and foreign policy interests of the United States during commercial launch and reentry operations. In addition, FAA AST is directed to encourage, facilitate, and promote commercial space launches and reentries. Additional information concerning commercial space transportation can be found on FAA AST’s website: http://www.faa.gov/go/ast Cover: The Orbital Sciences Corporation’s Antares rocket is seen as it launches from Pad-0A of the Mid-Atlantic Regional Spaceport at the NASA Wallops Flight Facility in Virginia, Sunday, April 21, 2013. Image Credit: NASA/Bill Ingalls NOTICE Use of trade names or names of manufacturers in this document does not constitute an official endorsement of such products or manufacturers, either expressed or implied, by the Federal Aviation Administration. Table of Contents: EXECUTIVE SUMMARY; COMSTAC 2013 COMMERCIAL GEOSYNCHRONOUS ORBIT LAUNCH DEMAND FORECAST -

Financial Operational Losses in Space Launch

UNIVERSITY OF OKLAHOMA GRADUATE COLLEGE FINANCIAL OPERATIONAL LOSSES IN SPACE LAUNCH A DISSERTATION SUBMITTED TO THE GRADUATE FACULTY in partial fulfillment of the requirements for the Degree of DOCTOR OF PHILOSOPHY By TOM ROBERT BOONE, IV Norman, Oklahoma 2017 FINANCIAL OPERATIONAL LOSSES IN SPACE LAUNCH A DISSERTATION APPROVED FOR THE SCHOOL OF AEROSPACE AND MECHANICAL ENGINEERING BY Dr. David Miller, Chair Dr. Alfred Striz Dr. Peter Attar Dr. Zahed Siddique Dr. Mukremin Kilic © Copyright by TOM ROBERT BOONE, IV 2017 All rights reserved. "For which of you, intending to build a tower, sitteth not down first, and counteth the cost, whether he have sufficient to finish it?" Luke 14:28, KJV Contents: 1 Introduction; 1.1 Overview of Operational Losses; 1.2 Structure of Dissertation; 2 Literature Review; 3 Payload Trends; 4 Launch Vehicle Trends; 5 Capability of Launch Vehicles; 6 Wastage of Launch Vehicle Capacity; 7 Optimal Usage of Launch Vehicles; 8 Optimal Arrangement of Payloads; 9 Risk of Multiple Payload Launches; 10 Conclusions; 10.1 Review of Dissertation; 10.2 Future Work; Bibliography; A Payload Database; B Launch Vehicle Database. List of Figures: 3.1 Payloads By Orbit, 2000-2013; 3.2 Payload Mass By Orbit, 2000-2013; 3.3 Number of Payloads of Mass, 2000-2013; 3.4 Total Mass of Payloads in kg by Individual Mass, 2000-2013; 3.5 Number of LEO Payloads of Mass, 2000-2013; 3.6 Number of GEO Payloads of Mass, 2000-2013 -

SpaceX's Expanding Launch Manifest

October 2013 SpaceX’s expanding launch manifest China’s growing military might Servicing satellites in space A PUBLICATION OF THE AMERICAN INSTITUTE OF AERONAUTICS AND ASTRONAUTICS October 2013 DEPARTMENTS: COMMENTARY Russian rocket engines forever? INTERNATIONAL BEAT Business aviation: Contraction, then recovery. WASHINGTON WATCH Governing in spite of gridlock. CONVERSATIONS With Loren Thompson. SPACE UPDATE Space station repair: How it’s done. ENGINEERING NOTEBOOK Space science GOLD: A payload trend? OUT OF THE PAST CAREER OPPORTUNITIES FEATURES: CHINA’S GROWING MILITARY MIGHT China’s continuing military modernization is strengthening its ability to wage war in new and expanding areas including cyberspace. -

Launch Services Overview to the Planetary Exploration Decadal Survey Committee

Launch Services Overview to the Planetary Exploration Decadal Survey Committee November 17, 2009 Bill Wrobel NASA / SOMD Agenda • Overview • Manifest • Launch Vehicles • Issues Overview • NASA’s Launch Services Program (LSP) was consolidated at KSC in 1998 – LSP provides acquisition, technical management, mission integration and launch management • NASA utilizes a mixed fleet of vehicles (small, medium & intermediate) with varying levels of performance used to support a mix of mission sizes – Mainly for Science Mission Directorate payloads, but SOMD (TDRS) and other government agencies also use NASA launch services – Launches conducted from multiple ranges; CCAFS, VAFB, RTS, WFF, and Kodiak • Vehicles are selected from the NASA Launch Services Contract (NLS) – Through competition based on mass, orbit, class of payload, and best value – Current NLS contract expires in 2010, RFP released to extend the contract • Most recent contract action purchased four intermediate class missions – TDRS – K & L, RBSP and MMS • Important issues – Loss of Medium Class launch service provider, which has been 50% of NASA missions historically – Compressed manifest – Possibility that NASA incurs a portion of the intermediate class infrastructure costs post 2010 Launch Services Program Roles & Responsibilities • Identify & Aggregate NASA Space Launch Requirements • Provide Launch Services for Other Agencies, Upon Request • Procure Commercially Available Expendable Launch Vehicle Launch Services to meet Spacecraft mission requirements • Overall Integration -

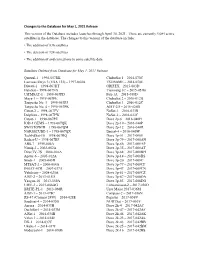

Changes to the Database for May 1, 2021 Release This Version of the Database Includes Launches Through April 30, 2021

Changes to the Database for May 1, 2021 Release This version of the Database includes launches through April 30, 2021. There are currently 4,084 active satellites in the database. The changes to this version of the database include: • The addition of 836 satellites • The deletion of 124 satellites • The addition of and corrections to some satellite data Satellites Deleted from Database for May 1, 2021 Release Quetzal-1 – 1998-057RK ChubuSat 1 – 2014-070C Lacrosse/Onyx 3 (USA 133) – 1997-064A TSUBAME – 2014-070E Diwata-1 – 1998-067HT GRIFEX – 2015-003D HaloSat – 1998-067NX Tianwang 1C – 2015-051B UiTMSAT-1 – 1998-067PD Fox-1A – 2015-058D Maya-1 -- 1998-067PE ChubuSat 2 – 2016-012B Tanyusha No. 3 – 1998-067PJ ChubuSat 3 – 2016-012C Tanyusha No. 4 – 1998-067PK AIST-2D – 2016-026B Catsat-2 -- 1998-067PV ÑuSat-1 – 2016-033B Delphini – 1998-067PW ÑuSat-2 – 2016-033C Catsat-1 – 1998-067PZ Dove 2p-6 – 2016-040H IOD-1 GEMS – 1998-067QK Dove 2p-10 – 2016-040P SWIATOWID – 1998-067QM Dove 2p-12 – 2016-040R NARSSCUBE-1 – 1998-067QX Beesat-4 – 2016-040W TechEdSat-10 – 1998-067RQ Dove 3p-51 – 2017-008E Radsat-U – 1998-067RF Dove 3p-79 – 2017-008AN ABS-7 – 1999-046A Dove 3p-86 – 2017-008AP Nimiq-2 – 2002-062A Dove 3p-35 – 2017-008AT DirecTV-7S – 2004-016A Dove 3p-68 – 2017-008BH Apstar-6 – 2005-012A Dove 3p-14 – 2017-008BS Sinah-1 – 2005-043D Dove 3p-20 – 2017-008C MTSAT-2 – 2006-004A Dove 3p-77 – 2017-008CF INSAT-4CR – 2007-037A Dove 3p-47 – 2017-008CN Yubileiny – 2008-025A Dove 3p-81 – 2017-008CZ AIST-2 – 2013-015D Dove 3p-87 – 2017-008DA Yaogan-18 -

Emerging Spacefaring Nations Review of Selected Countries and Considerations for Europe

Full Report Emerging Spacefaring Nations Review of selected countries and considerations for Europe Report: Title: “ESPI Report 79 - Emerging Spacefaring Nations - Full Report” Published: June 2021 ISSN: 2218-0931 (print) • 2076-6688 (online) Editor and publisher: European Space Policy Institute (ESPI) Schwarzenbergplatz 6 • 1030 Vienna • Austria Phone: +43 1 718 11 18 -0 E-Mail: [email protected] Website: www.espi.or.at Rights reserved - No part of this report may be reproduced or transmitted in any form or for any purpose without permission from ESPI. Citations and extracts to be published by other means are subject to mentioning “ESPI Report 79 - Emerging Spacefaring Nations - Full Report, June 2021. All rights reserved” and sample transmission to ESPI before publishing. ESPI is not responsible for any losses, injury or damage caused to any person or property (including under contract, by negligence, product liability or otherwise) whether they may be direct or indirect, special, incidental or consequential, resulting from the information contained in this publication. Design: copylot.at Cover page picture credit: Shutterstock TABLE OF CONTENTS: 1 ABOUT EMERGING SPACEFARING NATIONS; 1.1 A more diverse international space community; 1.2 Defining and mapping space actors