Through a New Lens: Apollo, Challenger and Columbia Through

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The International Space Station and the Space Shuttle

Order Code RL33568 The International Space Station and the Space Shuttle Updated November 9, 2007 Carl E. Behrens Specialist in Energy Policy Resources, Science, and Industry Division The International Space Station and the Space Shuttle Summary The International Space Station (ISS) program began in 1993, with Russia joining the United States, Europe, Japan, and Canada. Crews have occupied ISS on a 4-6 month rotating basis since November 2000. The U.S. Space Shuttle, which first flew in April 1981, has been the major vehicle taking crews and cargo back and forth to ISS, but the shuttle system has encountered difficulties since the Columbia disaster in 2003. Russian Soyuz spacecraft are also used to take crews to and from ISS, and Russian Progress spacecraft deliver cargo, but cannot return anything to Earth, since they are not designed to survive reentry into the Earth’s atmosphere. A Soyuz is always attached to the station as a lifeboat in case of an emergency. President Bush, prompted in part by the Columbia tragedy, made a major space policy address on January 14, 2004, directing NASA to focus its activities on returning humans to the Moon and someday sending them to Mars. Included in this “Vision for Space Exploration” is a plan to retire the space shuttle in 2010. The President said the United States would fulfill its commitments to its space station partners, but the details of how to accomplish that without the shuttle were not announced. The shuttle Discovery was launched on July 4, 2006, and returned safely to Earth on July 17.

Columbia and Challenger: Organizational Failure at NASA

ARTICLE IN PRESS Space Policy 19 (2003) 239–247 Columbia and Challenger: organizational failure at NASA Joseph Lorenzo Hall* Astronomy Department/School of Information Management and Systems, Astronomy Department, University of California at Berkeley, 601 Campbell Hall, Berkeley, CA 94720-3411, USA Abstract The National Aeronautics and Space Administration (NASA)—as the global leader in all areas of spaceflight and space science—is a unique organization in terms of size, mission, constraints, complexity and motivations. NASA’s flagship endeavor—human spaceflight—is extremely risky and one of the most complicated tasks undertaken by man. It is well accepted that the tragic destruction of the Space Shuttle Challenger on 28 January 1986 was the result of organizational failure. The surprising disintegration of the Space Shuttle Columbia in February 2003—nearly 17 years to the day after Challenger—was a shocking reminder of how seemingly innocuous details play important roles in risky systems and organizations. NASA as an organization has changed considerably over the 42 years of its existence. If it is serious about minimizing failure and promoting its mission, perhaps the most intense period of organizational change lies in its immediate future. This paper outlines some of the critical features of NASA’s organization and organizational change, namely path dependence and “normalization of deviance”. Subsequently, it reviews the rationale behind calling the Challenger tragedy an organizational failure. Finally, it argues that the recent Columbia accident displays characteristics of organizational failure and proposes recommendations for the future. © 2003 Elsevier Ltd. All rights reserved. 1. Introduction in 1967 are examples of failure at NASA that cost a total of 17 astronaut lives.

Our Finest Hour

Our Finest Hour I recently heard Al Roker say, “This year’s hurricane season has the potential to be one of the worst on record.” I’m sure it’s true, and thousands of people are now dealing with the aftermath of Hurricane Laura, but it’s just not something I wanted to hear. It feels like one more thing – hashtag 2020. I won’t recount all of the reasons 2020 has been a difficult year. There are shared reasons we all have dealt with and individual reasons many will never know, but the bottom line is: 2020 has been hard. Even with all the challenges of this year, I am truly inspired by the resilience, heroism, and compassion I see from so many people. We need look no further than our very own healthcare facilities to be inspired by what people can do, not only in spite of incredible difficulty, but actually because of it. The way our colleagues have risen to the occasion is truly an example of the Power of One. We have seen how important it is to care for each other. This year makes me think of one of my favorite movies, Apollo 13. There’s a well-known scene where the NASA crew in Houston is waiting to find out if the astronauts are going to land safely or if they’re going to be lost forever to space. While they’re waiting, two men are talking, and one of them lists all the problems: “We got the parachute situation, the heat shield, the angle of the trajectory and the typhoon.” The other says, “This could be the worst disaster NASA’s ever experienced.” Standing nearby is Flight Director Gene Kranz, who delivers his famous line, “With all due respect sir, I believe this is going to be our finest hour.” I don’t know if we will look back at 2020 and call it our “finest hour.” It’s not a year many of us will remember fondly.

Columbia Accident Investigation Board

COLUMBIA ACCIDENT INVESTIGATION BOARD Report Volume I August 2003 COLUMBIA ACCIDENT INVESTIGATION BOARD On the Front Cover This was the crew patch for STS-107. The central element of the patch was the microgravity symbol, µg, flowing into the rays of the Astronaut symbol. The orbital inclination was portrayed by the 39-degree angle of the Earth’s horizon to the Astronaut symbol. The sunrise was representative of the numerous science experiments that were the dawn of a new era for continued microgravity research on the International Space Station and beyond. The breadth of science conducted on this mission had widespread benefits to life on Earth and the continued exploration of space, illustrated by the Earth and stars. The constellation Columba (the dove) was chosen to symbolize peace on Earth and the Space Shuttle Columbia. In addition, the seven stars represent the STS-107 crew members, as well as honoring the original Mercury 7 astronauts who paved the way to make research in space possible. The Israeli flag represented the first person from that country to fly on the Space Shuttle. On the Back Cover This emblem memorializes the three U.S. human space flight accidents – Apollo 1, Challenger, and Columbia. The words across the top translate to: “To The Stars, Despite Adversity – Always Explore” Limited First Printing, August 2003, by the Columbia Accident Investigation Board Subsequent Printing and Distribution by the National Aeronautics and Space Administration and the Government Printing Office Washington, D.C. IN MEMORIAM Rick D. Husband Commander William C.

ASTRODYNAMICS 2011 Edited by Hanspeter Schaub Brian C

ASTRODYNAMICS 2011 Edited by Hanspeter Schaub Brian C. Gunter Ryan P. Russell William Todd Cerven Volume 142 ADVANCES IN THE ASTRONAUTICAL SCIENCES ASTRODYNAMICS 2011 AAS PRESIDENT Frank A. Slazer Northrop Grumman VICE PRESIDENT - PUBLICATIONS Dr. David B. Spencer Pennsylvania State University EDITORS Dr. Hanspeter Schaub University of Colorado Dr. Brian C. Gunter Delft University of Technology Dr. Ryan P. Russell Georgia Institute of Technology Dr. William Todd Cerven The Aerospace Corporation SERIES EDITOR Robert H. Jacobs Univelt, Incorporated Front Cover Photos: Top photo: Cassini looking back at an eclipsed Saturn, Astronomy picture of the day 2006 October 16, credit CICLOPS, JPL, ESA, NASA; Bottom left photo: Shuttle shadow in the sunset (in honor of the end of the Shuttle Era), Astronomy picture of the day 2010 February 16, credit: Expedition 22 Crew, NASA; Bottom right photo: Comet Hartley 2 Flyby, Astronomy picture of the day 2010 November 5, Credit: NASA, JPL-Caltech, UMD, EPOXI Mission. ASTRODYNAMICS 2011 Volume 142 ADVANCES IN THE ASTRONAUTICAL SCIENCES Edited by Hanspeter Schaub Brian C. Gunter Ryan P. Russell William Todd Cerven Proceedings of the AAS/AIAA Astrodynamics Specialist Conference held July 31 – August 4 2011, Girdwood, Alaska, U.S.A. Published for the American Astronautical Society by Univelt, Incorporated, P.O. Box 28130, San Diego, California 92198 Web Site: http://www.univelt.com Copyright 2012 by AMERICAN ASTRONAUTICAL SOCIETY AAS Publications Office P.O. Box 28130 San Diego, California 92198 Affiliated with the American Association for the Advancement of Science Member of the International Astronautical Federation First Printing 2012 Library of Congress Card No.

Developing the Space Shuttle

Chapter Two Developing the Space Shuttle by Ray A. Williamson Early Concepts of a Reusable Launch Vehicle Spaceflight advocates have long dreamed of building reusable launchers because they offer relative operational simplicity and the potential of significantly reduced costs compared to expendable vehicles. However, they are also technologically much more difficult to achieve. German experimenters were the first to examine seriously what developing a reusable launch vehicle (RLV) might require. During the 1920s and 1930s, they argued the advantages and disadvantages of space transportation, but were far from having the technology to realize their dreams. Austrian engineer Eugen M. Sänger, for example, envisioned a rocket-powered bomber that would be launched from a rocket sled in Germany at a staging velocity of Mach 1.5. It would burn rocket fuel to propel it to Mach 10, then skip across the upper reaches of the atmosphere and drop a bomb on New York City. The high-flying vehicle would then continue to skip across the top of the atmosphere to land again near its takeoff point. This idea was never picked up by the German air force, but Sänger revived a civilian version of it after the war. In 1963, he proposed a two-stage vehicle in which a large aircraft booster would accelerate to supersonic speeds, carrying a relatively small RLV to high altitudes, where it would be launched into low-Earth orbit (LEO). Although his idea was advocated by Eurospace, the industrial consortium formed to promote the development of space activities, it was not seriously pursued until the mid-1980s, when Dornier and other German companies began to explore the concept, only to drop it later as too expensive and technically risky. As Sänger’s concepts clearly illustrated, technological developments from several different disciplines must converge to make an RLV feasible.

CHAPTER 9: the Flight of Apollo

CHAPTER 9: The Flight of Apollo The design and engineering of machines capable of taking humans into space evolved over time, and so too did the philosophy and procedures for operating those machines in a space environment. MSC personnel not only managed the design and construction of spacecraft, but the operation of those craft as well. Through the Mission Control Center, a mission control team with electronic tentacles linked the Apollo spacecraft and its three astronauts with components throughout the MSC, NASA, and the world. Through the flights of Apollo, MSC became a much more visible component of the NASA organization, and operations seemingly became a dominant focus of its energies. Successful flight operations required having instant access to all of the engineering expertise that went into the design and fabrication of the spacecraft and the ability to draw upon a host of supporting groups and activities. N. Wayne Hale, Jr., who became a flight director for the later Space Transportation System (STS), or Space Shuttle, missions, compared the flights of Apollo and the Shuttle as equivalent to operating a very large and very complex battleship. Apollo had a flight crew of only three while the Shuttle had seven. Instead of the thousands on board being physically involved in operating the battleship, the thousands who helped the astronauts fly Apollo were on the ground and tied to the command and lunar modules by the very sophisticated and advanced electronic and computer apparatus housed in Mission Control. The flights of Apollo for the first time in history brought humans from Earth to walk upon another celestial body.

What Lessons Can We Learn from the Report of the Columbia Accident Investigation Board for Engineering Ethics?

Technè 10:1 Fall 2006 Murata, From the Challenger to Columbia/...35 From Challenger to Columbia: What lessons can we learn from the report of the Columbia accident investigation board for engineering ethics? Junichi Murata University of Tokyo, Department of History and Philosophy of Science, 3-8-1 Komaba, Meguro-ku, Tokyo, Japan phone: +81 3 5454 6693, fax: +81 3 5454 6978 email: [email protected] Abstract: One of the most important tasks of engineering ethics is to give engineers the tools required to act ethically to prevent possible disastrous accidents which could result from engineers’ decisions and actions. The space shuttle Challenger disaster is referred to as a typical case in almost every textbook. This case is seen as one from which engineers can learn important lessons, as it shows impressively how engineers should act as professionals, to prevent accidents. The Columbia disaster came seventeen years later in 2003. According to the report of the Columbia accident investigation board, the main cause of the accident was not individual actions which violated certain safety rules but rather was to be found in the history and culture of NASA. A culture is seen as one which desensitized managers and engineers to potential hazards as they dealt with problems of uncertainty. This view of the disaster is based on Diane Vaughan’s analysis of the Challenger disaster, where inherent organizational factors and culture within NASA had been highlighted as contributing to the disaster. Based on the insightful analysis of the Columbia report and the work of Diane Vaughan, we search for an alternative view of engineering ethics.

Skylab: the Human Side of a Scientific Mission

SKYLAB: THE HUMAN SIDE OF A SCIENTIFIC MISSION Michael P. Johnson, B.A. Thesis Prepared for the Degree of MASTER OF ARTS UNIVERSITY OF NORTH TEXAS May 2007 APPROVED: J. Todd Moye, Major Professor Alfred F. Hurley, Committee Member Adrian Lewis, Committee Member and Chair of the Department of History Sandra L. Terrell, Dean of the Robert B. Toulouse School of Graduate Studies Johnson, Michael P. Skylab: The Human Side of a Scientific Mission. Master of Arts (History), May 2007, 115pp., 3 tables, references, 104 titles. This work attempts to focus on the human side of Skylab, America’s first space station, from 1973 to 1974. The thesis begins by showing some context for Skylab, especially in light of the Cold War and the “space race” between the United States and the Soviet Union. The development of the station, as well as the astronaut selection process, are traced from the beginnings of NASA. The focus then shifts to changes in NASA from the Apollo missions to Skylab, as well as training, before highlighting the three missions to the station. The work then attempts to show the significance of Skylab by focusing on the myriad of lessons that can be learned from it and applied to future programs. Copyright 2007 by Michael P. Johnson ACKNOWLEDGEMENTS This thesis would not be possible without the help of numerous people. I would like to begin, as always, by thanking my parents. You are a continuous source of help and guidance, and you have never doubted me. Of course I have to thank my brothers and sisters.

Burbedge, Johnson, Wise, Yuan 1 Annotated Bibliography Primary Sources “1986: Space Shuttle Challenger Disaster Live on CNN. 2

Burbedge, Johnson, Wise, Yuan 1 Annotated Bibliography Primary Sources “1986: Space Shuttle Challenger disaster Live on CNN.” Youtube, uploaded by CNN, 27 Jan. 2011, <www.youtube.com/watch?reload=9&v=AfnvFnzs91s>. This video is important because it provided clips of the Challenger exploding that we used in our documentary. It was shot live by CNN when the Challenger launched. This was the first video that we all saw of the Challenger as it exploded. Broad, William J. “6 in crew and High School Teacher are killed 74 seconds after liftoff.” The Shuttle Explodes, The New York Times, 29 Jan. 1986, <http://movies2.nytimes.com/learning/general/onthisday/big/0128.html>. This newspaper article helped us get a credible (it was the NY Times) and detailed explanation regarding the who, what, when, where, and how of the Challenger disaster. It gave some information that other sources didn’t have, like what happened right before and right after the Challenger exploded. Forrester, Bob. Interviewed by Caroline Johnson. 9 Mar. 2019. This source was very important and crucial to the creation of our documentary. It was one of the interviews that we had in the documentary. We were able to learn vital and very interesting information from Mr. Forrester. The information that was given to us not only furthered our knowledge, but also improved the documentary as a whole. This was a primary source because we were able to get information directly from a source that both witnessed the Challenger disaster, and was also highly involved with the program. Fuqua. “Investigation of the Challenger Accident.” Government Publishing Office, 29 Oct.

Shoot for the Moon: the Space Race and the Extraordinary Voyage of Apollo 11

Shoot for the Moon: The Space Race and the Extraordinary Voyage of Apollo 11 Hardcover – March 12, 2019 By James Donovan (Author) “It does not really require a pilot and besides you have to sweep the monkey shit aside before you sit down,” a slightly envious Chuck Yeager is quoted in chapter 2 of the book, titled “Of Monkeys and Men”. In the end, the concept of first sending monkeys into space as forerunners for humans was right: with a good portion of luck, excellently trained test pilots who were not afraid to put their lives on the line, dedicated managers and competent mission control teams, Kennedy's bold challenge came true. This book by James Donovan was published in 2019 for the 50th anniversary of the first landing of the two astronauts Neil Armstrong and “Buzz” Aldrin on the Moon. The often published events are retold in a manner that makes them worthwhile to read again as an “eye witness”, but the book also serves as a legacy for the next generations. The author reports the events as a thoroughly researched adventure but also has the talent to tell them like a close friend of the persons and astronauts involved, and is able to recreate the resentments, fears and feelings of the American people with respect to “landing a man on the Moon and bring him back safely” over the time period that started with the “Sputnik shock” in 1957. The book can also be seen as an homage to the engineers, scientists and technicians who brought the final triumph home.

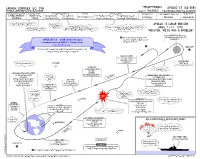

View the Apollo 13 Chart

APOLLO 13 (AS-508) LC-39A JULY 2015 JOHN F. KENNEDY SPACE CENTER AS-508-2 3rd LUNAR LANDING MISSION MISSION FACTS & HIGHLIGHTS Apollo 13 was to be NASA’s third mission to land on the Moon. The spacecraft launched from the Kennedy Space Center in Florida. Midway to the Moon, an explosion in one of the oxygen tanks of the service module crippled the spacecraft. After the in-flight emergency, for safety reasons, the crew was forced to temporarily power down and evacuate the command module, taking refuge in the lunar module. The spacecraft then orbited the Moon without landing. Later in the flight, heading back toward Earth, the crew returned to the command module, restored power, jettisoned the damaged service module, then the lunar module and, after an anxious re-entry blackout period, returned safely to the Earth. The command module and its crew splashed down in the South Pacific Ocean and were recovered by the U.S.S. Iwo Jima (LPH-2). FACTS • Apollo XIII Mission Motto: Ex Luna, Scientia • Lunar Module: Aquarius • Command and Service Module: Odyssey • Crew: • James A. Lovell, Jr. - Commander • John L. Swigert, Jr. - Command Module Pilot ( * ) • Fred W. Haise, Jr. - Lunar Module Pilot • NASA Flight Directors • Milt Windler - Flight Director Shift #1 • Gerald Griffin - Flight Director Shift #2 • Gene Kranz - Flight Director Shift #3 • Glynn Lunney - Flight Director Shift #4 • William Reeves - Systems • Launch Site: John F. Kennedy Space Center, Florida • Launch Complex: Pad 39A • Launch: 2:13 pm EST, April 11, 1970 • Orbit: • Altitude: 118.99 miles • Inclination: 32.547 degrees • Earth orbits: 1.5 • Lunar Landing Site: Intended to be Fra Mauro (later became landing site for Apollo 14) • Return to Earth: April 17, 1970 • Splashdown: 18:07:41 UTC (1:07:41 pm EST) • Recovery site location: South Pacific Ocean (near Samoa) S21 38.6 W165 21.7 • Recovery ship: U.S.S.