Reconstructing the Fragmented Library of Mondsee Abbey

Recommended publications

Reading Monastic History in Bookbinding Waste

Fragmentology: A Journal for the Study of Medieval Manuscript Fragments

Fragmentology is an international, peer-reviewed Open Access journal, dedicated to publishing scholarly articles and reviews concerning medieval manuscript fragments. Fragmentology welcomes submissions, both articles and research notes, on any aspect pertaining to Latin and Greek manuscript fragments in the Middle Ages.

Founded in 2018 as part of Fragmentarium, an international research project at the University of Fribourg (Switzerland) funded by the Swiss National Science Foundation, the Stavros Niarchos Foundation, and the Zeno-Karl-Schindler Foundation, Fragmentology is owned and published by Codices Electronici AG and controlled by the Editorial Board in service to the scholarly community. Authors of articles, research notes, and reviews published in Fragmentology retain copyright over their works and have agreed to publish them in open access under a Creative Commons Attribution license. Submissions are free, and Fragmentology does not require payment or membership from authors or institutions.

Editors: Christoph Flüeler (Fribourg), William Duba (Fribourg)

Book Review Editor: Veronika Drescher (Fribourg/Paris)

Editorial Board: Lisa Fagin Davis (Boston, MA), Christoph Egger (Vienna), Thomas Falmagne (Frankfurt), Scott Gwara (Columbia, SC), Nicholas Herman (Philadelphia), Christoph Mackert (Leipzig), Marilena Maniaci (Cassino), Stefan Morent (Tübingen), Åslaug Ommundsen (Bergen), Nigel Palmer (Oxford)

Instructions for Authors: Detailed instructions can be found at http://fragmentology.ms/submit-to-fragmentology/. Authors must agree to publish their work in Open Access.

Fragmentology is published annually at the University of Fribourg. For further information, inquiries may be addressed to [email protected].

Editorial Address: Fragmentology, University of Fribourg, Rue de l’Hôpital 4, 1700 Fribourg, Switzerland.

BR0083 000 A.Pdf

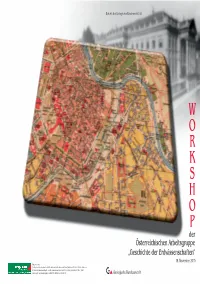

Berichte der Geologischen Bundesanstalt, 83

Workshop of the Österreichische Arbeitsgruppe „Geschichte der Erdwissenschaften“ (Austrian Working Group "History of the Earth Sciences"), 19 November 2010, Geologische Bundesanstalt. Contributions to the workshop. Editors: Bernhard Hubmann & Johannes Seidl. Vienna, November 2010.

Imprint: Berichte der Geologischen Bundesanstalt, 83, ISSN 1017-8880. Vienna, November 2010.

Front cover: detail from „G. Freytag’s Verkehrsplan der k. k. Reichshaupt- & Residenzstadt Wien, Maßstab 1:15.000 – Mit vollständigem Straßenverzeichnis und Angabe der Häusernummern, Druck und Verlag G. Freytag & Berndt, Wien, 1902“, reproduced by kind permission of FREYTAG-BERNDT u. ARTARIA KG (Kartographisches Institut und Verlag). See the contribution by SUTTNER, HÖFLER & HOFMANN, p. 41 ff.

All rights reserved for Austria and abroad. © Geologische Bundesanstalt. Media owner, editor and publisher: Geologische Bundesanstalt, A-1030 Wien, Neulinggasse 38, Austria.

The authors are responsible for the content of their contributions and consent to the digital distribution of their work on the Internet.

Maps -- by Region Or Country -- Eastern Hemisphere -- Europe

G5702 EUROPE. REGIONS, NATURAL FEATURES, ETC.
Alps see G6035+
.B3 Baltic Sea
.B4 Baltic Shield
.C3 Carpathian Mountains
.C6 Coasts/Continental shelf
.G4 Genoa, Gulf of
.G7 Great Alföld
.P9 Pyrenees
.R5 Rhine River
.S3 Scheldt River
.T5 Tisza River

G5722 WESTERN EUROPE. REGIONS, NATURAL FEATURES, ETC.
.A7 Ardennes
.A9 Autoroute E10
.F5 Flanders
.G3 Gaul
.M3 Meuse River

G5741.S BRITISH ISLES. HISTORY
.S1 General
.S2 To 1066
.S3 Medieval period, 1066-1485
.S33 Norman period, 1066-1154
.S35 Plantagenets, 1154-1399
.S37 15th century
.S4 Modern period, 1485-
.S45 16th century: Tudors, 1485-1603
.S5 17th century: Stuarts, 1603-1714
.S53 Commonwealth and protectorate, 1660-1688
.S54 18th century
.S55 19th century
.S6 20th century
.S65 World War I
.S7 World War II

G5742 BRITISH ISLES. GREAT BRITAIN. REGIONS, NATURAL FEATURES, ETC.
.C6 Continental shelf
.I6 Irish Sea
.N3 National Cycle Network

G5752 ENGLAND. REGIONS, NATURAL FEATURES, ETC.
.A3 Aire River
.A42 Akeman Street
.A43 Alde River
.A7 Arun River
.A75 Ashby Canal
.A77 Ashdown Forest
.A83 Avon, River [Gloucestershire-Avon]
.A85 Avon, River [Leicestershire-Gloucestershire]
.A87 Axholme, Isle of
.A9 Aylesbury, Vale of
.B3 Barnstaple Bay
.B35 Basingstoke Canal
.B36 Bassenthwaite Lake
.B38 Baugh Fell
.B385 Beachy Head
.B386 Belvoir, Vale of
.B387 Bere, Forest of
.B39 Berkeley, Vale of
.B4 Berkshire Downs
.B42 Beult, River
.B43 Bignor Hill
.B44 Birmingham and Fazeley Canal
.B45 Black Country
.B48 Black Hill
.B49 Blackdown Hills
.B493 Blackmoor [Moor]
.B495 Blackmoor Vale
.B5 Bleaklow Hill
.B54 Blenheim Park
.B6 Bodmin Moor
.B64 Border Forest Park
.B66 Bourne Valley
.B68 Bowland, Forest of
.B7 Breckland
.B715 Bredon Hill
.B717 Brendon Hills
.B72 Bridgewater Canal
.B723 Bridgwater Bay
.B724 Bridlington Bay
.B725 Bristol Channel
.B73 Broads, The
.B76 Brown Clee Hill
.B8 Burnham Beeches
.B84 Burntwick Island
.C34 Cam, River
.C37 Cannock Chase
.C38 Canvey Island [Island]
G5752 ENGLAND. -

Books, Manuscripts and More for March 2020

Leo Cadogan, March 2020, [email protected]

LEO CADOGAN RARE BOOKS, 74 Mayton Street, London N7 6QT

BOOKS, MANUSCRIPTS AND MORE FOR MARCH 2020

FARMING, WEATHER AND MEDICAL ADVICE FOR THE YEAR

1. Aicher, Otto, O.S.B.: Institutiones oeconomicae, sive discursus morales in duos libros Oeconomicorum Aristotelis, quibus omnia domesticae doctrinae, seu familiae regendae elementa continentur. Accessit his liber III. de oeconomia in specie, quid quovis mense faciendum sit oeconomo. Salisburgi [Salzburg], typis Melchioris Hann 1690.

8vo. (14.8 cms. x 9.6 cms.), pp. [16] 223 [1]. Engraved extra title-page, featuring woman holding shovel (personification of Economy?), by J. Franck (possibly the German engraver Johann Franck - cf. Benezit). Light spotting and browning, very good, bound in brown morocco, decorated in gilt, arms at centre of front cover of Mondsee Abbey in Upper Austria, edges gilt, ties removed (worming to front cover, slightly rubbed). Inscription to verso of extra title-page, (Josephus ? Rysigger, professus Mondseensis, 1690), covered over with paper, a small seal applied to front pastedown, inscription to f.f.e.p. recto “Fran: Jac: Posch parochus in Ischl”.

Finely-bound copy of the first edition of this handbook to household management, following the pseudo-Aristotelian work ‘Economics’. The book includes (126-223) a guide to the months of the year, with each month having a list of things to do on the farm, a list of rustic observations, which are largely concerned with the weather, and a list of medical observations. There are also general chapters on the times for sowing seed, instructions for what to do when the moon is in each sign of the Zodiac, and a list of rules for the farm manager.

Fremontia Journal of the California Native Plant Society

$10.00 (Free to Members)
VOL. 40, NO. 1 AND VOL. 40, NO. 2 • JANUARY 2012 AND MAY 2012
FREMONTIA: JOURNAL OF THE CALIFORNIA NATIVE PLANT SOCIETY
THE NEW JEPSON MANUAL; THE FIRST FLORA OF CALIFORNIA; NAMING OF THE GENUS SEQUOIA; FENS: A REMARKABLE HABITAT; AND OTHER ARTICLES

CALIFORNIA NATIVE PLANT SOCIETY
Dedicated to the Preservation of the California Native Flora
CNPS, 2707 K Street, Suite 1; Sacramento, CA 95816-5130
Phone: (916) 447-CNPS (2677) Fax: (916) 447-2727
Web site: www.cnps.org Email: [email protected]

Copyright © 2012 California Native Plant Society
Bob Hass, Editor
Beth Hansen-Winter, Designer
Brad Jenkins, Cynthia Powell, and Cynthia Roye, Proofreaders

MEMBERSHIP
Membership form located on inside back cover; dues include subscriptions to Fremontia and the CNPS Bulletin
Mariposa Lily: $1,500
Benefactor: $600
Patron: $300
Plant Lover: $100
Family or Group: $75
International or Library: $75
Individual: $45
Student/Retired/Limited Income: $25

CORPORATE/ORGANIZATIONAL
10+ Employees: $2,500
7-10 Employees: $1,000
4-6 Employees: $500
1-3 Employees: $150

STAFF – SACRAMENTO
Executive Director: Dan Glusenkamp
Finance and Administration Manager: Cari Porter
Membership and Development

CHAPTER COUNCIL
David Magney (Chair); Larry Levine (Vice Chair); Marty Foltyn (Secretary)
Alta Peak (Tulare): Joan Stewart
Bristlecone (Inyo-Mono):

Martius and Flora Brasiliensis, Names Not to Be Forgotten ♣

ACTA SCIENTIFIC AGRICULTURE (ISSN: 2581-365X), Volume 3 Issue 12, December 2019. Research Article.

Martius and Flora Brasiliensis, Names Not to be Forgotten ♣

João Vicente Ganzarolli de Oliveira*, Senior Professor and Researcher of the Tércio Pacitti Institute of the Federal University of Rio de Janeiro, Brazil

*Corresponding Author: João Vicente Ganzarolli de Oliveira, Senior Professor and Researcher of the Tércio Pacitti Institute of the Federal University of Rio de Janeiro, Brazil.

Received: November 4, 2019; Published: November 29, 2019. DOI: 10.31080/ASAG.2019.03.0735

There are three stages of scientific discovery: first people deny it is true; then they deny it is important; finally they credit the wrong person. (…) How a person masters his fate is more important than what his fate is. Alexander von Humboldt

Abstract

This article focuses on the life and work of the German botanist and explorer Karl Friedrich Philipp von Martius (1794-1868), notably his Flora Brasiliensis. Martius’ most important work is an unrivalled (and successful) attempt to classify the plants (mostly angiosperms) of Brazil, a country of continental dimensions that encompasses one third of South America; in spite of the fact that the Flora Brasiliensis became a standard reference for the identification of Brazilian and South American vegetation in general, in Brazil, Martius and his magnificent work have been systematically relegated to oblivion.

Keywords: Martius; Flora Braziliensis; Brazil; Plants; Nature

Introduction: without plants…

The following lines concern the life and work of the German naturalist Karl Friedrich Philipp von Martius (1794-1868), notably his Flora Brasiliensis.

[…] that the Flora Brasiliensis became a standard reference for the identification of Brazilian and South American vegetation in general 2. One should bear in mind that, “In the face of the enormous amount

Lehre Und Lehrbücher Der Naturgeschichte an Der Universität Wien Von 1749 Bis 1849

© Geol. Bundesanstalt, Wien; download at www.geologie.ac.at. Berichte der Geologischen Bundesanstalt, ISSN 1017-8880, Band 83, Wien 2010. CONTRIBUTIONS.

Lehre und Lehrbücher der Naturgeschichte an der Universität Wien von 1749 bis 1849 (Teaching and Textbooks of Natural History at the University of Vienna from 1749 to 1849)

Matthias Svojtka, Anton Baumgartnerstr. 44/A4/092, A-1230 Wien; e-mail: [email protected]

Is no stapelia in bloom yet?

"Thus the things around us are considered in the order in which nature ascends through the stages of organisation, from the dust of the earth to the noble metal, from metal to plant, to animal and to man. This is what natural history accomplishes, and it gains a very useful application when the distribution of products across our globe is also shown."

Natural history is first and foremost a descriptive and, since Carl von Linné (1707-1787), a systematising science. It describes natural bodies from the "three kingdoms" of mineralogy, botany and zoology, chiefly by their external characteristics. At first this involves no notion of a "history of nature", that is, of a genesis of natural bodies over the course of Earth's history; natural history may here still be equated with a narrative account of nature (EGGLMAIER 1988: VIII). Only with the increasing "temporalisation" of the natural sciences in the second half of the 18th century, and with the emergence of the term "biology" (in our present-day sense) in 1802, did natural history develop more and more from a description of living beings into a science of life (LEPENIES 1976). Taken as a whole, the teaching of natural history always covered content from the three kingdoms of mineralogy, botany and zoology; in Vienna, a chair of botany (and chemistry) was established at the Faculty of Medicine as early as 1749.

Fun in the Water for All the Family

The MondSeeLand, home to both the Mondsee and Irrsee lakes, has been a popular holiday destination for decades. At just 27 km from the festival city of Salzburg, international guests to the region can enjoy the many activities it has to offer.

Fun in the water for all the family

The Mondsee and Irrsee lakes are the warmest lakes in the Salzkammergut region and can reach temperatures of 27 °C in July and August. The Irrsee lake is very natural and ideal for all gentle water sports. The Mondsee lake plays host to numerous water sports activities such as surfing and sailing schools, wakeboarding, kite surfing, water skiing schools, diving schools, boat trips, diving, etc. The Mondsee is 11.8 km long and 2.5 km at its widest point. The Irrsee is 5 km long and 1 km at its widest point. Both lakes have excellent water quality.

Walking, cycling, running

A high quality network of footpaths has been created around the Mondsee and Irrsee over the last few years, covering more than 100 km and leading to the neighbouring lakes: the Attersee, Fuschlsee and Wolfgangsee. A nature map of the MondSeeLand can be purchased from the tourist office (for 1 euro). This shows all the footpaths, cycle paths, jogging paths and specially designated relaxation areas. The cycle paths and footpaths are all well signposted and suitable for family days out.

Meetings, congresses and company events

The ‘Schloss Mondsee’ castle is home to a modern conference and events centre for hosting a variety of activities. International congresses and conferences of up to 500 participants are increasingly being held here, attracted by the fascination of the MondSeeLand, the good connection with the A1 motorway and the high quality historical atmosphere.

The Thun-Hohenstein University Reforms 1849–1860

The Thun-Hohenstein University Reforms 1849–1860: Conception – Implementation – Aftermath. Edited by Christof Aichner and Brigitte Mazohl.

VERÖFFENTLICHUNGEN DER KOMMISSION FÜR NEUERE GESCHICHTE ÖSTERREICHS, Band 115

Kommission für Neuere Geschichte Österreichs

Chair: em. Univ.-Prof. Dr. Brigitte Mazohl

Deputy Chair: Univ.-Prof. Dr. Reinhard Stauber

Members: Dr. Franz Adlgasser, Univ.-Prof. Dr. Peter Becker, Univ.-Prof. i. R. Dr. Ernst Bruckmüller, Univ.-Prof. Dr. Laurence Cole, Univ.-Prof. Dr. Margret Friedrich, Univ.-Prof. Dr. Elisabeth Garms-Cornides, Univ.-Prof. Dr. Michael Gehler, Univ.-Doz. Mag. Dr. Andreas Gottsmann, Univ.-Prof. Dr. Margarete Grandner, em. Univ.-Prof. Dr. Hanns Haas, Univ.-Prof. i. R. Dr. Wolfgang Häusler, Univ.-Prof. i. R. Dr. Ernst Hanisch, Univ.-Prof. Dr. Gabriele Haug-Moritz, Dr. Michael Hochedlinger, Univ.-Prof. Dr. Lothar Höbelt, Mag. Thomas Just, Univ.-Prof. i. R. Dr. Grete Klingenstein, em. Univ.-Prof. Dr. Alfred Kohler, Univ.-Prof. Dr. Christopher Laferl, Gen. Dir. Univ.-Doz. Dr. Wolfgang Maderthaner, Dr. Stefan Malfèr, Gen. Dir. i. R. H.-Prof. Dr. Lorenz Mikoletzky, Dr. Gernot Obersteiner, Dr. Hans Petschar, em. Univ.-Prof. Dr. Helmut Rumpler, Univ.-Prof. Mag. Dr. Martin Scheutz, em. Univ.-Prof. Dr. Gerald Stourzh, Univ.-Prof. Dr. Arno Strohmeyer, Univ.-Prof. i. R. Dr. Arnold Suppan, Univ.-Doz. Dr. Werner Telesko, Univ.-Prof. Dr. Thomas Winkelbauer

Secretary: Dr. Christof Aichner

Published with the support from the Austrian Science Fund (FWF): PUB 397-G28. Open access: Except where otherwise noted, this work is licensed under a Creative Commons Attribution 4.0 Unported License.

Evolution, Revolution, and Reform in Vienna: Franz Unger's Ideas On

Journal of the History of Biology 31: 179–209, 1998. © 1998 Kluwer Academic Publishers. Printed in the Netherlands.

Evolution, Revolution, and Reform in Vienna: Franz Unger’s Ideas on Descent and Their Post-1848 Reception

SANDER GLIBOFF, Department of the History of Science, Medicine, and Technology, The Johns Hopkins University, Baltimore, MD 21218, U.S.A.

Introduction

Beginning in the fall of 1851, Viennese readers could follow a heated dispute in the press involving botanist Franz Unger (1800–1871) and his new theory of evolution. Unger was one of the professors recruited for the University of Vienna in the course of educational reforms begun in response to the Revolutions of 1848. He was already well known for many and varied contributions to cell biology, plant physiology and pathology, biogeography, and paleobotany, and he was expected to help make Vienna into a center of scientific research. Today, accounts of Unger’s work may be found in histories of botany and surveys of pre-Darwinian evolution, as well as in some of the literature on Gregor Mendel, who attended Unger’s courses in the early 1850s. [1]

Unger set off the dispute with a series of newspaper articles, titled Botanische Briefe (Botanical letters), in which he argued that all plants

[1] On Unger’s contributions to botany, see Johanna Enslein, “Die wissenschaftsgeschichtliche Untersuchung und Wertung der anatomischen, physiologischen und ökologischen Arbeiten von Franz Unger,” Ph. D. diss., University of Vienna, 1960; Martin Möbius, Geschichte der Botanik (Jena: Gustav Fischer, 1937); Julius Sachs, Geschichte der Botanik (Munich: R. Oldenbourg, 1875).

Cambridge University Press 978-1-107-04210-0: The Cambridge History of Medieval Monasticism in the Latin West, Volume 2, Index

Cambridge University Press, 978-1-107-04210-0. The Cambridge History of Medieval Monasticism in the Latin West, Volume 2. Index.

Index

Aachen (chapel), 420
Aachen, Synods of (816-819), 184, 186–7, 291, 323, 375, 387, 422, 424, 438–41, 455, 462, 482, 497–8, 508–9, 526
Aaron, Bishop of Kraków, 885
Abelard, Peter, 652, 675, 682, 697, 735, 740, 741, 743, 777, 967, 1076
    Sic et Non, 458
Abbaye-aux-Dames of Saintes (monastery), 252–53
Abbo of Fleury, 595, 627
    Collectio, 621, 627
Abraham of Kaškar, 69
Abraham of Pboou/Farshut, 54
Abraham of Quiduna, Life of, 749, 759
Adalbero of Laon, Carmen ad Rotbertum regem, 1157
Acemetes (monastery), 343–44
Acta Murensia, 572
Acts of Paul and Thecla, 43, 99
Acts of Peter, 43
Acts of the Apostles, 42
Acts of Thomas, 43
Adalard of Corbie, 460, 472
advocates, 859–61
    lay nobility as, 581, 585
Áed mac Bricc of Rahugh, 301
Ælfric of Eynsham, 511, 513
    Colloquy, 415
Aelred of Rievaulx, 573, 721, 751, 753, 756
    Rule for a Recluse, 753, 759
Æthelwold of Winchester, 426, 507–17, 534, 539–40
Agaune, Life of the Abbots of, 61
Agaune, Saint-Maurice d’ (monastery), 37, 116, 181, 244, 248, 292–3
Agde, Council of (506), 750
Agilulf, Lombard King, 238
Agnes of Antioch, 889
Agnes of Babenberg, 889
Agnes of Bohemia, 893
Agnes of Hereford, 907
Agnes of Meissen (or Quedlinburg), 1004
Agriculture, see also property and land
    animal husbandry and pastoralism, 841–44
    in Byzantium, 354–56, see also property and land
    cereal production,

Migration in the Middle Ages: Parasite Stages in Monasterial Latrine Pits

Migration in the Middle Ages: Parasite stages in monasterial latrine pits.

Andreas R. Hassl (1, 4), Alice Kaltenberger (2), Ronald Risy (3)

1 Institute of Specific Prophylaxis and Tropical Medicine, Center for Pathophysiology, Infectiology and Immunology, Medical University of Vienna
2 Institute of Archeologies, University of Innsbruck
3 Stadtarchäologie St. Pölten
4 Micro-Biology Consult Dr. Andreas Hassl

A fascinating aspect of archeomicrobiology is the evidence of endoparasitic diseases in long-deceased persons and domestic animals, which can be revealed by studying well preserved excretions and which can elucidate the everyday life of groups of people. During recent archeological excavations in abandoned monasteries in Mondsee (Upper Austria) and St. Pölten (Lower Austria), well preserved refuse pits were discovered and their contents were scientifically processed in an interdisciplinary approach.

Mondsee Abbey was founded in 748 by the Bavarian duke; in 788 it became an Imperial Benedictine abbey. From 831 to 1142 it was part of the monastery of Regensburg Cathedral. In 1506 the possession passed from Bavaria to Austria. After a period of decline during the Reformation, the abbey entered a second period of prosperity, culminating in an extensive rebuilding of the church and the monastic premises between 1730 and 1770. In 1791 the abbey was dissolved.

The St. Pölten abbey was founded around 790 by the Benedictine abbey Tegernsee. After devastation it was repopulated by Canons Regular in 1081. In 1784 the abbey was dissolved, but the buildings have been used as the bishop's seat of the diocese of St. Pölten since 1785.

In the case of the abbey in St.