Node-Level Coordinated Power-Performance Management Rathijit Sen David A

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Microarchitecture of the Pentium 4 Processor

The Microarchitecture of the Pentium 4 Processor. Glenn Hinton, Dave Sager, Mike Upton, Darrell Boggs, Doug Carmean, Alan Kyker, Patrice Roussel (all Desktop Platforms Group, Intel Corp.). Index words: Pentium® 4 processor, NetBurst™ microarchitecture, Trace Cache, double-pumped ALU, deep pipelining. ABSTRACT: This paper describes the Intel® NetBurst™ microarchitecture of Intel’s new flagship Pentium® 4 processor. This microarchitecture is the basis of a new family of processors from Intel starting with the Pentium 4 processor. The Pentium 4 processor provides a substantial performance gain for many key application areas where the end user can truly appreciate the difference. In this paper we describe the main features and functions of the NetBurst microarchitecture. We present the front-end of the machine, including its new form of instruction cache called the Execution Trace Cache. [...] provides an in-depth examination of the features and functions of the Intel NetBurst microarchitecture. The Pentium 4 processor is designed to deliver performance across applications where end users can truly appreciate and experience its performance. For example, it allows a much better user experience in areas such as Internet audio and streaming video, image processing, video content creation, speech recognition, 3D applications and games, multi-media, and multi-tasking user environments. The Pentium 4 processor enables real-time MPEG2 video encoding and near real-time MPEG4 encoding, allowing efficient video editing and video conferencing. It delivers world-class performance on 3D

Multiprocessing Contents

Multiprocessing. Contents: 1 Multiprocessing (1.1 Pre-history; 1.2 Key topics: 1.2.1 Processor symmetry, 1.2.2 Instruction and data streams, 1.2.3 Processor coupling, 1.2.4 Multiprocessor Communication Architecture; 1.3 Flynn’s taxonomy: 1.3.1 SISD multiprocessing, 1.3.2 SIMD multiprocessing, 1.3.3 MISD multiprocessing, 1.3.4 MIMD multiprocessing; 1.4 See also; 1.5 References). 2 Computer multitasking (2.1 Multiprogramming; 2.2 Cooperative multitasking; 2.3 Preemptive multitasking; 2.4 Real time; 2.5 Multithreading; 2.6 Memory protection; 2.7 Memory swapping; 2.8 Programming; 2.9 See also; 2.10 References).

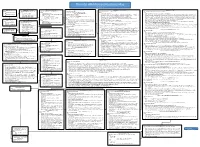

The Intel x86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

A Performance Analysis Tool for Intel SGX Enclaves

sgx-perf: A Performance Analysis Tool for Intel SGX Enclaves. Nico Weichbrodt (IBR, TU Braunschweig, Germany), Pierre-Louis Aublin (LSDS, Imperial College London, United Kingdom), Rüdiger Kapitza (IBR, TU Braunschweig, Germany). ABSTRACT: Novel trusted execution technologies such as Intel’s Software Guard Extensions (SGX) are considered a cure to many security risks in clouds. This is achieved by offering trusted execution contexts, so called enclaves, that enable confidentiality and integrity protection of code and data even from privileged software and physical attacks. To utilise this new abstraction, Intel offers a dedicated Software Development Kit (SDK). While it is already used to build numerous applications, understanding the performance implications of SGX and the offered programming support is still in its infancy. This inevitably leads to time-consuming trial-and-error testing and poses the risk of poor performance. [...] the provider or need to refrain from offloading their workloads to the cloud. With the advent of Intel’s Software Guard Extensions (SGX) [14, 28], the situation is about to change as this novel trusted execution technology enables confidentiality and integrity protection of code and data, even from privileged software and physical attacks. Accordingly, researchers from academia and industry alike recently published research works in rapid succession to secure applications in clouds [2, 5, 33], enable secure networking [9, 11, 34, 39] and fortify local applications [22, 23, 35]. Core to all these works is the use of SGX provided enclaves, which build small, isolated application compartments designed to

Intel PSU Cage Replacement Process Support Guide

Intel® PSU Cage Replacement Process Support Guide A Guide for Technically Qualified Assemblers of Intel® 2U ATX Products Document No.: PSU-01 Revision No.: 002 Disclaimer Information in this document is provided in connection with Intel® products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. Except as provided in Intel's Terms and Conditions of Sale for such products, Intel assumes no liability whatsoever, and Intel disclaims any express or implied warranty, relating to sale and/or use of Intel products including liability or warranties relating to fitness for a particular purpose, merchantability, or infringement of any patent, copyright or other intellectual property right. Intel products are not designed, intended or authorized for use in any medical, life saving, or life sustaining applications or for any other application in which the failure of the Intel product could create a situation where personal injury or death may occur. Intel may make changes to specifications and product descriptions at any time, without notice. Intel server boards contain a number of high-density VLSI and power delivery components that need adequate airflow for cooling. Intel's own chassis are designed and tested to meet the intended thermal requirements of these components when the fully integrated system is used together. It is the responsibility of the system integrator that chooses not to use Intel developed server building blocks to consult vendor datasheets and operating parameters to determine the amount of airflow required for their specific application and environmental conditions. Intel Corporation can not be held responsible if components fail or the server board does not operate correctly when used outside any of their published operating or non-operating limits. -

Intel® Itanium® Architecture Assembly Language Reference Guide

Intel® Itanium® Architecture Assembly Language Reference Guide. Copyright © 2000 - 2003 Intel Corporation. All rights reserved. Order Number: 248801-004. World Wide Web: http://developer.intel.com. Disclaimer and Legal Information: Information in this document is provided in connection with Intel products. No license, express or implied, by estoppel or otherwise, to any intellectual property rights is granted by this document. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. This Intel® Itanium® Architecture Assembly Language Reference Guide as well as the software described in it is furnished under license and may only be used or copied in accordance with the terms of the license. The information in this manual is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by Intel Corporation. Intel Corporation assumes no responsibility or liability for any errors or inaccuracies that may appear in this document or any software that may be provided in association with this document. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "undefined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.

PRIMEQUEST Servers: Mission-Critical Servers for Linux and Windows

WHITE PAPER. PRIMEQUEST Servers: Mission-Critical Servers for Linux and Windows. March 2006. PREPARED FOR Fujitsu. TABLE OF CONTENTS: Executive Summary; Introduction; Industry Standard, but with a Unique Approach; PRIMEQUEST Family Overview; PRIMEQUEST Configurations; Data Mirroring; Flexible Partitioning. Executive Summary: A year ago, Fujitsu expanded its portfolio of robust enterprise servers with the introduction of the PRIMEQUEST family. Fujitsu had observed that a growing number of customers were attracted to Linux and Windows operating environments and to servers employing Intel processors. The Itanium 2-powered PRIMEQUEST line addresses that customer desire with high performance servers designed for mission-critical Linux and Windows environments. With the addition of an eight-processor model 420, Fujitsu’s PRIMEQUEST family now offers Itanium-based servers that span the range from midrange to high end. The PRIMEQUEST models complement Fujitsu’s SPARC/Solaris PRIMEPOWER systems in mission-critical environments. Currently available with Madison 9M chips, the midrange PRIMEQUEST 420 joins the PRIMEQUEST 440 with 16 processors and the PRIMEQUEST 480, which scales up to 32 processors. Employing standard Intel processors certainly should not imply that PRIMEQUEST is merely another Itanium-based platform. Quite to the contrary, Fujitsu has

Product Brief: Intel® Atom™ Processor E3900 Series, Intel® Celeron

Product brief Intel® IoT Technology Intel Atom®, Intel® Pentium®, and Intel® Celeron® Processors Achieving New Levels of CPU Performance, Fast Graphics and Media Processing, Image Processing, and Security The latest generation of Intel Atom®, Pentium®, and Celeron® processors empowers real-time computing in digital surveillance, new in-vehicle experiences, advancements in industrial and office automation, new solutions for retail and medical, and more. Meeting the demands of the rapidly growing Internet of Things The number of connected machines has grown by approximately 300 percent1 in recent years and is expected to continue to explode. By 2020, 330 billion devices will create 35 trillion GB of data annually,2 and will require greatly increased processing power at the edge in order to maintain viability. Intel is supporting this rapid development and the growing complexity of IoT infrastructures with the release of the new Intel Atom® processor E3900 series, Intel® Pentium® processor N4200, and Intel® Celeron® processor N3350. They deliver the ability to handle more tasks. From manufacturing machines that can see, to intelligent video systems that can analyze data, these new processors enable amazing new possibilities. Powerful edge intelligence for IoT These new Intel Atom, Pentium, and Celeron processors offer enhanced processing power in compact, low-power packages. They are now available with a dual- or quad-core processor running at up to 2.5 GHz and memory speeds up to LPDDR4 2400. All of this performance resides in a compact flip chip ball grid array (FCBGA), utilizing 14 nm silicon technology, making it an excellent fit for a wide range of IoT applications when space and power are at a premium.

The Intel x86 Microarchitectures Map Version 2.2

The Intel x86 Microarchitectures Map Version 2.2 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • New instructions: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

AN1209 Motorola Burstram

Order this document by AN1209/D. AN1209: The Freescale BurstRAM. Prepared by: James Garris. This note introduces the MCM62486 32K x 9 Synchronous BurstRAM. The device was designed to provide a high-performance, secondary cache for the Intel i486 microprocessor and future microprocessors with burst protocol. Four of these devices can supply a 128K byte direct-mapped bursting cache with parity support. THE MCM62486: The 62486 is a synchronous device with input registers and address counters surrounding a standard 32K x 9 FSRAM core. The additional circuitry in the periphery enables the memory to uniquely interface with the i486. Like the i486, the timings are referenced to the rising edge of the clock (K). Signals generated by the processor and control logic must be stable during all transitions of clock from low to high. [...] maintain synchronization with the i486 or other logic driving the cache. USE WITH THE i486 PROCESSOR: The 62486 requires an ASIC or discrete PAL type of cache controller to work with the i486. This cache control logic must also include 8K x 16 of cache-tag comparator RAM and any other buffers needed for system operation. Control signals are sourced as follows: K is driven by the system clock (CLK); ADSP is an output from the microprocessor; and ADV, ADSC are generated from the cache control logic. The data bus and lower address bus may interface directly with the 62486 or the address bus may be buffered to improve its drive to the rest of the system. A simple block dia-

Agilent E2492E Probe Adapter for Intel® Pentium® III and Celeron

Installation Guide. Publication Number E2492-92007, December 1999. © Copyright Agilent Technologies, Inc. 1999. All Rights Reserved. Agilent E2492E Probe Adapter for the Intel® Pentium® III and Celeron™ Processors. Installation at a Glance: This Installation Guide explains how to install the Agilent Technologies, Inc. probe adapter for the Intel® Pentium® III or Celeron™ processors in the 370-pin PPGA package. The probe adapter provides a quick and reliable connection from a processor to the Agilent E2487C Analysis Probe. The analysis probe then connects to your Agilent logic analyzer for state analysis. Installation Overview: • Remove the processor. • Install the probe adapter. • Connect the processor to the probe adapter. • Connect the probe adapter to the Agilent E2487C analysis probe. Installation: 1 Remove the clip and heatsink from the processor on the target system. 2 Remove the processor from the socket on the target system. 3 Install the probe adapter on the target system. Ensure that the connector is fully seated. 4 Install the processor on the socket of the probe adapter. Ensure that the connector is fully seated. CAUTION: Bending the flexible cable beyond the minimum bend radius will shorten the life of the probe adapter. See the dimension diagram at the end of this document for minimum bend radius. 5 Use the clip to attach the heatsink to the socket on the probe adapter. 6 Connect the probe adapter to the Agilent E2487C Analysis Probe. The probe adapter has five high-density connectors, labeled Cable 1 through Cable 5. The Agilent E2487C Analysis Probe also has five high-density cabled connectors, labeled P1 through P5.

Intel® Celeron® Processor in the 478-Pin Package up to 1.80 GHz Datasheet

Intel® Celeron® Processor in the 478-Pin Package up to 1.80 GHz Datasheet
• Available at 1.70 and 1.80 GHz
• Binary compatible with applications running on previous members of the Intel microprocessor line
• System bus frequency at 400 MHz
• Rapid Execution Engine: Arithmetic Logic Units (ALUs) run at twice the processor core frequency
• Hyper Pipelined Technology
• Advanced Dynamic Execution: very deep out-of-order execution, enhanced branch prediction
• 8 KB Level 1 data cache
• Level 1 Execution Trace Cache stores 12K micro-ops and removes decoder latency from main execution loops
• 128 KB Advanced Transfer Cache (on-die, full speed Level 2 (L2) cache) with Error Correction Code (ECC)
• 144 Streaming SIMD Extensions 2 (SSE2) Instructions
• Power Management capabilities: System Management mode, multiple low-power states
• Optimized for 32-bit applications running on advanced 32-bit operating systems

The Intel® Celeron® processor in the 478-pin package up to 1.80 GHz expands Intel’s processor family into the value-priced PC market segment. Intel Celeron processors provide the value customer the capability to affordably get onto the Internet, and utilize educational programs, home-office software and productivity applications. All of Intel’s Celeron processors include an integrated L2 cache, and are built on Intel's advanced CMOS process technology. The Intel Celeron processor is backed by over 30 years of Intel experience in manufacturing high-quality, reliable microprocessors. June 2002. Document Number: 290748-002. Information in this document is provided solely to enable use of Intel products.