Production Engineering and Management

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

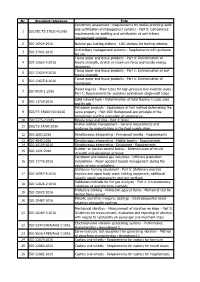

Nr. Standard Reference Title 1 ISO/IEC TS 17021-9:2016

Nr. Standard reference Title Conformity assessment - Requirements for bodies providing audit and certification of management systems - Part 9: Competence 1 ISO/IEC TS 17021-9:2016 requirements for auditing and certification of anti-bribery management systems 2 ISO 16924:2016 Natural gas fuelling stations - LNG stations for fuelling vehicles Anti-bribery management systems - Requirements with guidance 3 ISO 37001:2016 for use Tissue paper and tissue products - Part 4: Determination of 4 ISO 12625-4:2016 tensile strength, stretch at maximum force and tensile energy absorption Tissue paper and tissue products - Part 5: Determination of wet 5 ISO 12625-5:2016 tensile strength Tissue paper and tissue products - Part 6: Determination of 6 ISO 12625-6:2016 grammage Diesel engines - Steel tubes for high-pressure fuel injection pipes - 7 ISO 8535-1:2016 Part 1: Requirements for seamless cold-drawn single-wall tubes Solid mineral fuels - Determination of total fluorine in coal, coke 8 ISO 11724:2016 and fly ash Petroleum products - Equivalency of test method determining the 9 ISO/TR 19686-100:2016 same property - Part 100: Background and principle of the comparison and the evaluation of equivalency 10 ISO 5775-2:2015 Bicycle tyres and rims - Part 2: Rims Animal welfare management - General requirements and 11 ISO/TS 34700:2016 guidance for organizations in the food supply chain 12 ISO 2603:2016 Simultaneous interpreting - Permanent booths - Requirements 13 ISO 4043:2016 Simultaneous interpreting - Mobile booths - Requirements 14 ISO 20109:2016 Simultaneous -

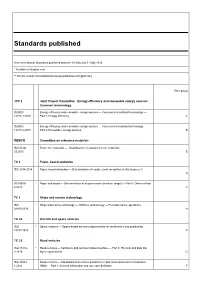

Standards Published

Standards published New International Standards published between 01 May and 31 May 2015 * Available in English only ** French version of standard previously published in English only Price group JPC 2 Joint Project Committee - Energy efficiency and renewable energy sources - Common terminology ISO/IEC Energy efficiency and renewable energy sources — Common international terminology — 13273-1:2015 Part 1: Energy efficiency C ISO/IEC Energy efficiency and renewable energy sources — Common international terminology — 13273-2:2015 Part 2: Renewable energy sources B REMCO Committee on reference materials ISO Guide Reference materials — Good practice in using reference materials 33:2015 E TC 6 Paper, board and p ulps ISO 2144:2015 Paper, board and pulps — Determination of residue (ash) on ignition at 900 degrees C A ISO 5636- * Paper and board — Determination of air permeance (medium range) — Part 6: Oken method 6:2015 C TC 8 Ships and marine technology ISO * Ships and marine technology — Offshore wind energy — Port and marine operations 29400:2015 H TC 20 Aircraft and space vehicles ISO * Space systems — Space based services requirements for centimetre class positioning 18197:2015 E TC 22 Road vehicles ISO 15118- * Road vehicles — Vehicle to grid communication interface — Part 3: Physical and data link 3:2015 layer requirements G ISO 18541- Road vehicles — Standardized access to automotive repair and maintenance information 1:2014 (RMI) — Part 1: General information and use case definition F ISO 18541- Road vehicles — Standardized access to -

WORK PROGRAMME of General Directorate of Standardization - ALBANIA (Period 1 July to 31 December 2017)

WORK PROGRAMME of General Directorate of Standardization - ALBANIA (Period 1 July to 31 December 2017) Technical Committee No. 1 “Quality assurance and social responsibility”, 3 standards No. Standard number English title 1. EN ISO 17034:2016 General requirements for the competence of reference material producers (ISO 17034:2016) 2. ISO/IEC TS 17021-9:2016 Conformity assessment - Requirements for bodies providing audit and certification of management systems - Part 9: Competence requirements for auditing and certification of anti-bribery management systems 3. ISO/IEC TR 17026:2015 Conformity assessment - Example of a certification scheme for tangible products Technical Committee No. 3 “Electrical and electronical materials”, 28 standards No. Standard number English title 1. CLC/TS 50576:2016 Electric cables - Extended application of test results for reaction to fire 2. EN 50620:2017 Electric cables - Charging cables for electric vehicles 3. EN 60061- Lamp caps and holders together with gauges for the control of 1:1993/A55:2017 interchangeability and safety - Part 1: Lamp caps 4. EN 60153-1:2016/AC:2017 Hollow metallic waveguides - Part 1: General requirements and measuring methods 5. EN 60153-2:2016/AC:2017 Hollow metallic waveguides - Part 2: Relevant specifications for ordinary rectangular waveguides 6. EN 60317-67:2017 Specifications for particular types of winding wires - Part 67: Polyvinyl acetal enamelled rectangular aluminium wire, class 105 7. EN 60317-68:2017 Specifications for particular types of winding wires - Part 68: Polyvinyl acetal enamelled rectangular aluminium wire, class 120 8. EN 60404-1:2017 Magnetic materials - Part 1: Classification 1 9. EN 60404-8-6:2017 Magnetic materials - Part 8-6: Specifications for individual materials - Soft magnetic metallic materials 10. -

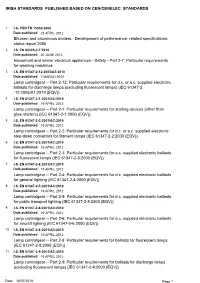

Progress File (Standards Publications)

IRISH STANDARDS PUBLISHED BASED ON CEN/CENELEC STANDARDS 1. I.S. CEN TR 15352:2006 Date published 23 APRIL 2012 Bitumen and bituminous binders - Development of performance- related specifications: status report 2005 2. I.S. EN 60335-2-7:2010 Date published 20 JUNE 2012 Household and similar electrical appliances - Safety - Part 2-7: Particular requirements for washing machines 3. I.S. EN 61347-2-12:2005/A1:2010 Date published 7 MARCH 2012 Lamp controlgear -- Part 2-12: Particular requirements for d.c. or a.c. supplied electronic ballasts for discharge lamps (excluding fluorescent lamps) (IEC 61347-2 -12:2005/A1:2010 (EQV)) 4. I.S. EN 61347-2-1:2001/AC:2010 Date published 19 APRIL 2012 Lamp controlgear -- Part 2-1: Particular requirements for starting devices (other than glow starters) (IEC 61347-2-1:2000 (EQV)) 5. I.S. EN 61347-2-2:2001/AC:2010 Date published 19 APRIL 2012 Lamp controlgear -- Part 2-2: Particular requirements for d.c. or a.c. supplied electronic step-down convertors for filament lamps (IEC 61347-2-2:2000 (EQV)) 6. I.S. EN 61347-2-3:2001/AC:2010 Date published 19 APRIL 2012 Lamp controlgear -- Part 2-3: Particular requirements for a.c. supplied electronic ballasts for fluorescent lamps (IEC 61347-2-3:2000 (EQV)) 7. I.S. EN 61347-2-4:2001/AC:2010 Date published 19 APRIL 2012 Lamp controlgear -- Part 2-4: Particular requirements for d.c. supplied electronic ballasts for general lighting (IEC 61347-2-4:2000 (EQV)) 8. I.S. EN 61347-2-5:2001/AC:2010 Date published 19 APRIL 2012 Lamp controlgear -- Part 2-5: Particular requirements for d.c. -

News Iso Standards: 2017/01

NEWS ISO STANDARDS: 2017/01 ISO/IEC 26557: 2016 214.00 € ISO/IEC 26557 Software and systems engineering -- Methods and Buy tools for variability mechanisms in software and systems product line ISO 13775-2: 2016 141.00 € ISO 13775-2 Thermoplastic tubing and hoses for automotive use -- Buy Part 2: Petroleum-based-fuel applications ISO 16847: 2016 54.00 € ISO 16847 Textiles -- Test method for assessing the matting Buy appearance of napped fabrics after cleansing ISO 16923: 2016 189.00 € ISO 16923 Natural gas fuelling stations -- CNG stations for fuelling Buy vehicles ISO 16924: 2016 214.00 € ISO 16924 Natural gas fuelling stations -- LNG stations for fuelling Buy vehicles ISO 24617-8: 2016 189.00 € ISO 24617-8 Language resource management -- Semantic annotation Buy framework (SemAF) -- Part 8: Semantic relations in discourse, core annotation schema (DR-core) ISO 17987-7: 2016 239.00 € ISO 17987-7 Road vehicles -- Local Interconnect Network (LIN) -- Part Buy 7: Electrical Physical Layer (EPL) conformance test specification ISO/TS 18062: 2016 141.00 € ISO/TS 18062 Health informatics -- Categorial structure for Buy representation of herbal medicaments in terminological systems ISO 17493: 2016 69.00 € ISO 17493 Clothing and equipment for protection against heat -- Test Buy method for convective heat resistance using a hot air circulating oven ISO 1213-2: 2016 189.00 € ISO 1213-2 Solid mineral fuels -- Vocabulary -- Part 2: Terms relating Buy to sampling, testing and analysis ISO 18203: 2016 106.00 € ISO 18203 Steel -- Determination of the thickness -

ISO Update Supplement to Isofocus

ISO Update Supplement to ISOfocus January 2017 International Standards in process ISO/CD 7240-7 Fire detection and alarm systems — Part 7: Point-type smoke detectors using scattered An International Standard is the result of an agreement between light, transmitted light or ionization the member bodies of ISO. A first important step towards an Interna- TC 22 Road vehicles tional Standard takes the form of a committee draft (CD) - this is cir- ISO/CD Road vehicles — Automotive cables — Part 1: culated for study within an ISO technical committee. When consensus 19642-1 Terminology has been reached within the technical committee, the document is sent to the Central Secretariat for processing as a draft International ISO/CD Road vehicles — Automotive cables — Part 2: Standard (DIS). The DIS requires approval by at least 75 % of the 19642-2 Test methods member bodies casting a vote. A confirmation vote is subsequently ISO/CD Road vehicles — Automotive cables — Part 3: carried out on a final draft International Standard (FDIS), the approval 19642-3 Dimensions and requirements for 30 V a.c. or criteria remaining the same. 60 V d.c. single core copper conductor cables ISO/CD Road vehicles — Automotive cables — Part 19642-4 4: Dimensions and requirements for 30 V a.c. and 60 V d.c. single core aluminium conductor cables ISO/CD Road vehicles — Automotive cables — Part 5: 19642-5 Dimensions and requirements for 600 V a.c. or 900 V d.c., 1000 V a.c. or 1500 V d.c. single CD registered core copper conductor cables ISO/CD Road vehicles — Automotive cables — Part 6: 19642-6 Dimensions and requirements for 600 V a.c. -

PUB ISO 2016-12.Pdf

Standards published New International Standards published between 01 December and 31 December 2016 * Available in English only ** French version of standard previously published in English only Price group PC 252 Natural gas fuelling stations for vehicles ISO 16923:2016 * Natural gas fuelling stations — CNG stations for fuelling vehicles F ISO 16924:2016 * Natural gas fuelling stations — LNG stations for fuelling vehicles G TC 6 Paper, board and pulps ISO 12625-4:2016 Tissue paper and tissue products — Part 4: Determination of tensile strength, stretch at maxi- mum force and tensile energy absorption B ISO 12625-5:2016 Tissue paper and tissue products — Part 5: Determination of wet tensile strength C ISO 12625-6:2016 Tissue paper and tissue products — Part 6: Determination of grammage B TC 8 Ships and marine technology ISO 19355:2016 * Ships and marine technology — Marine cranes — Structural requirements B TC 17 Steel ISO 18203:2016 Steel — Determination of the thickness of surface-hardened layers B TC 20 Aircraft and space vehicles ISO 7718-1:2016 * Aircraft — Passenger doors interface requirements for connection of passenger boarding bridge or passenger transfer vehicle — Part 1: Main deck doors A ISO 7718-2:2016 * Aircraft — Passenger doors interface requirements for connection of passenger boarding bridge or passenger transfer vehicle — Part 2: Upper deck doors A TC 22 Road vehicles ISO 11898-2:2016 * Road vehicles — Controller area network (CAN) — Part 2: High-speed medium access unit E ISO 17987-7:2016 * Road vehicles — Local Interconnect -

Web-Based Reference Software for Characterisation of Surface Roughness

Web-Based Reference Software For Characterisation Of Surface Roughness Dem Fachbereich Maschinenbau und Verfahrenstechnik der technischen Universität Kaiserslautern zur Erlangung des akademischen Grades Doktor-Ingenieur (Dr.-Ing.) vorgelegte Dissertation von Frau M.Sc. Bini Alapurath George aus Bangalore Datum der wissenschaftlichen Aussprache: 28.09.2017 Betreuer der Dissertation: Prof. Dr.-Ing. Jörg Seewig D 386 II Acknowledgment Firstly, I would like to express my gratitude and sincere thanks to my doctoral advisor Prof. Dr.-Ing. Jörg Seewig for his advice and guidance during my research and for giving me the opportunity to pursue my PhD. I would like to thank my colleagues from the Institute of Measurement and Sensor Technology for the support and discussions during my research. I wish to extend my thanks to Mr. Timo Wiegel for supporting me with my research, as part of his internship program in the Institute of Measurement and Sensor Tech- nology, T.U. Kaiserslautern during summer 2012. I am thankful to my husband, Dr. Mahesh Poolakkaparambil for his support and encouragement. A special thanks to my mother, Valsala George for her love and prayers. I am indebted to them for their help and support. I take this opportunity to thank all my friends for the memorable time during my stay in Kaiserslautern. Finally, I would like to assert that all the contents and pictures in this thesis are from the knowledge of literatures, publications and paper, which are mentioned in the references and naturally my knowledge from the study and research in this work. Kaiserslautern, __________________ Signature: ______________________ III Abstract This research explores the development of web based reference software for char- acterisation of surface roughness for two-dimensional surface data. -

Isoupdate January 2020

ISO Update Supplement to ISOfocus January 2020 International Standards in process ISO/CD Irrigation equipment — Sprinklers — Part 2: 15886-2 Requirements for design (structure) and testing An International Standard is the result of an agreement between TC 34 Food products the member bodies of ISO. A first important step towards an Interna- ISO 10272- Microbiology of the food chain — Horizon- tional Standard takes the form of a committee draft (CD) - this is cir- 1:2017/CD tal method for detection and enumeration culated for study within an ISO technical committee. When consensus Amd 1 of Campylobacter spp. — Part 1: Detection has been reached within the technical committee, the document is method — Amendment 1 sent to the Central Secretariat for processing as a draft International Standard (DIS). The DIS requires approval by at least 75 % of the ISO 10272- Microbiology of the food chain — Horizontal member bodies casting a vote. A confirmation vote is subsequently 2:2017/CD method for detection and enumeration of carried out on a final draft International Standard (FDIS), the approval Amd 1 Campylobacter spp. — Part 2: Colony-count criteria remaining the same. technique — Amendment 1 ISO/CD 24363 Determination of fatty acid methyl esters and squalene by gas chromatography ISO/CD Vegetable fats and oils — Determination of 29822-2 relative amounts of 1,2- and 1,3-diacylglycerols — Part 2: Isolation by solid phase extraction (SPE) ISO/CD TS Molecular biomarker analysis — Detection 20224-2.2 of animal derived materials in foodstuffs and CD registered feedstuffs by real-time PCR — Part 2: Ovine DNA Detection Method ISO/CD TS Molecular biomarker analysis — Detection Period from 01 December 2019 to 01 January 2020 20224-3.2 of animal derived materials in foodstuffs and These documents are currently under consideration in the technical feedstuffs by real-time PCR — Part 3: Porcine committee. -

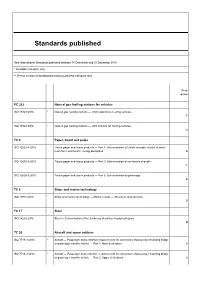

Standards Published

Standards published New International Standards published between 01 March and 31 March 2017 * Available in English only ** French version of standard previously published in English only Price group TC 5 Ferrous metal pipes and metallic fittings ISO 9349:2017 * Ductile iron pipes, fittings, accessories and their joints — Thermal preinsulated products B TC 8 Ships and marine technology ISO 20519:2017 Ships and marine technology — Specification for bunkering of liquefied natural gas fuelled vessels F ISO 18154:2017 * Ships and marine technology — Safety valve for cargo tanks of LNG carriers — Design and testing requirements B TC 10 Technical product documentation ISO 13715:2017 Technical product documentation — Edges of undefined shape — Indication and dimen- sioning D TC 20 Aircraft and space vehicles ISO 15872:2017 * Aerospace — UNJ threads — Gauging D ISO 12604-1:2017 * Aircraft ground handling — Checked baggage — Part 1: Mass and dimensions A TC 22 Road vehicles ISO 15005:2017 Road vehicles — Ergonomic aspects of transportation and control systems — Dialogue management principles and compliance procedures C ISO 15008:2017 * Road vehicles — Ergonomic aspects of transport information and control systems — Speci- fications and test procedures for in-vehicle visual presentation D ISO 12614-19:2017 * Road vehicles — Liquefied natural gas (LNG) fuel system components — Part 19: Automatic valve A ISO 12619-6:2017 * Road vehicles — Compressed gaseous hydrogen (CGH2) and hydrogen/natural gas blend fuel system components — Part 6: Automatic valve -

1 Introduction

A generic parallel computational framework of lifting wavelet transform for online engineering surface filtration Yuanping Xua Chaolong Zhanga, b, ⁎ [email protected] Zhijie Xub Jiliu Zhouc Kaiwei Wangd Jian Huanga aSchool of Software Engineering, Chengdu University of Information Technology, No.24 Block 1, Xuefu Road, Chengdu 610225, People's Republic of China bSchool of Computing & Engineering, University of Huddersfield, Queensgate HD1 3DH, Huddersfield, UK cSchool of Computer Science, Chengdu University of Information Technology, No.24 Block 1, Xuefu Road, Chengdu 610225, People's Republic of China dSchool of Optical Science and Engineering, Zhejiang University, Hangzhou 310027, People's Republic of China ⁎Corresponding author at: School of Software Engineering, Chengdu University of Information Technology, No.24 Block 1, Xuefu Road, Chengdu 610225, People's Republic of China. Abstract Nowadays, complex geometrical surface texture measurement and evaluation require advanced filtration techniques. Discrete wavelet transform (DWT), especially the second-generation wavelet (Lifting Wavelet Transform – LWT), is the most adopted one due to its unified and abundant characteristics in measured data processing, geometrical feature extraction, manufacturing process planning, and production monitoring. However, when dealing with varied complex functional surfaces, the computational payload for performing DWT in real-time often becomes a core bottleneck in the context of massive measured data and limited computational capacities. It is a more prominent -

Thesis Submitted for the Degree of Doctor of Philosophy

University of Bath PHD Resource-Independent Computer Aided Inspection Mahmoud, Hesham Award date: 2016 Awarding institution: University of Bath Link to publication Alternative formats If you require this document in an alternative format, please contact: [email protected] General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain • You may freely distribute the URL identifying the publication in the public portal ? Take down policy If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. Download date: 10. Oct. 2021 Resource-Independent Computer Aided Inspection Hesham Abdelfattah Mahmoud A thesis submitted for the degree of Doctor of Philosophy University of Bath Department of Mechanical Engineering August 2016 COPYRIGHT Attention is drawn to the fact that copyright of this thesis rests with the author. A copy of this thesis has been supplied on condition that anyone who consults it is understood to recognise that its copyright rests with the author and that they must not copy it or use material from it except as permitted by law or with the consent of the author.