Boosted W and Z Tagging with Jet Charge and Deep Learning

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Research Brief March 2017 Publication #2017-16

Research Brief March 2017 Publication #2017-16 Flourishing From the Start: What Is It and How Can It Be Measured? Kristin Anderson Moore, PhD, Child Trends; Christina D. Bethell, PhD, The Child and Adolescent Health Measurement Initiative, Johns Hopkins Bloomberg School of Public Health; David Murphey, PhD, Child Trends; Miranda Carver Martin, BA, Child Trends; Martha Beltz, BA, formerly of Child Trends. Introduction Every parent wants their child to flourish, and every community wants its children to thrive. It is not sufficient for children to avoid negative outcomes. Rather, from their earliest years, we should foster positive outcomes for children. Substantial evidence indicates that early investments to foster positive child development can reap large and lasting gains.1 But in order to implement and sustain policies and programs that help children flourish, we need to accurately define, measure, and then monitor, “flourishing.”a By comparing the available child development research literature with the data currently being collected by health researchers and other practitioners, we have identified important gaps in our definition of flourishing.2 In particular, the field lacks a set of brief, robust, and culturally sensitive measures of “thriving” constructs critical for young children.3 This is also true for measures of the promotive and protective factors that contribute to thriving. Even when measures do exist, there are serious concerns regarding their validity and utility. We instead recommend these high-priority measures of flourishing -

Ligature Modeling for Recognition of Characters Written in 3D Space Dae Hwan Kim, Jin Hyung Kim

Ligature Modeling for Recognition of Characters Written in 3D Space Dae Hwan Kim, Jin Hyung Kim To cite this version: Dae Hwan Kim, Jin Hyung Kim. Ligature Modeling for Recognition of Characters Written in 3D Space. Tenth International Workshop on Frontiers in Handwriting Recognition, Université de Rennes 1, Oct 2006, La Baule (France). inria-00105116 HAL Id: inria-00105116 https://hal.inria.fr/inria-00105116 Submitted on 10 Oct 2006. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Dae Hwan Kim and Jin Hyung Kim, Artificial Intelligence and Pattern Recognition Lab., KAIST, Daejeon, South Korea ([email protected], [email protected]). Abstract In this work, we propose a 3D space handwriting recognition system by combining 2D space handwriting models and 3D space ligature models based on that the [...] defined shape of character while it showed high recognition performance. Moreover when a user writes multiple stroke character such as ‘4’, the user has to write a new shape which is predefined in a uni-stroke and which he/she has never seen. -

ISO Basic Latin Alphabet

ISO basic Latin alphabet The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2] Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z. Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z. History By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1] Terminology Name for Unicode block that contains all letters The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin". -

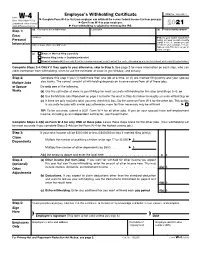

Form W-4, Employee's Withholding Certificate

Form W-4 (Rev. December 2020), Employee’s Withholding Certificate, 2021. Department of the Treasury, Internal Revenue Service. OMB No. 1545-0074. ▶ Complete Form W-4 so that your employer can withhold the correct federal income tax from your pay. ▶ Give Form W-4 to your employer. ▶ Your withholding is subject to review by the IRS. Step 1: Enter Personal Information. (a) First name and middle initial; Last name; Address; City or town, state, and ZIP code. (b) Social security number. ▶ Does your name match the name on your social security card? If not, to ensure you get credit for your earnings, contact SSA at 800-772-1213 or go to www.ssa.gov. (c) Single or Married filing separately; Married filing jointly or Qualifying widow(er); Head of household (Check only if you’re unmarried and pay more than half the costs of keeping up a home for yourself and a qualifying individual.) Complete Steps 2–4 ONLY if they apply to you; otherwise, skip to Step 5. See page 2 for more information on each step, who can claim exemption from withholding, when to use the estimator at www.irs.gov/W4App, and privacy. Step 2: Multiple Jobs or Spouse Works. Complete this step if you (1) hold more than one job at a time, or (2) are married filing jointly and your spouse also works. The correct amount of withholding depends on income earned from all of these jobs. Do only one of the following. (a) Use the estimator at www.irs.gov/W4App for most accurate withholding for this step (and Steps 3–4); or (b) Use the Multiple Jobs Worksheet on page 3 and enter the result in Step 4(c) below for roughly accurate withholding; or (c) If there are only two jobs total, you may check this box. -

Ffontiau Cymraeg

This publication is available in other languages and formats on request. Mae'r cyhoeddiad hwn ar gael mewn ieithoedd a fformatau eraill ar gais. [email protected] www.caerphilly.gov.uk/equalities How to type Accented Characters This guidance document has been produced to provide practical help when typing letters or circulars, or when designing posters or flyers so that getting accents on various letters when typing is made easier. The guide should be used alongside the Council’s Guidance on Equalities in Designing and Printing. Please note this is for PCs only and will not work on Macs. Firstly, on your keyboard make sure the Num Lock is switched on, or the codes shown in this document won’t work (this button is found above the numeric keypad on the right of your keyboard). By pressing the ALT key (to the left of the space bar), holding it down and then entering a certain sequence of numbers on the numeric keypad, it's very easy to get almost any accented character you want. For example, to get the letter “ô”, press and hold the ALT key, type in the code 0 2 4 4, then release the ALT key. The number sequences shown from page 3 onwards work in most fonts in order to get an accent over “a, e, i, o, u”, the vowels in the English alphabet. In other languages, for example in French, the letter "c" can be accented and in Spanish, "n" can be accented too. Many other languages have accents on consonants as well as vowels. -

Gerard Manley Hopkins' Diacritics: a Corpus Based Study

Gerard Manley Hopkins’ Diacritics: A Corpus Based Study by Claire Moore-Cantwell This is my difficulty, what marks to use and when to use them: they are so much needed, and yet so objectionable.1 ~Hopkins 1. Introduction In a letter to his friend Robert Bridges, Hopkins once wrote: “... my apparent licences are counterbalanced, and more, by my strictness. In fact all English verse, except Milton’s, almost, offends me as ‘licentious’. Remember this.”2 The typical view held by modern critics can be seen in James Wimsatt’s 2006 volume, as he begins his discussion of sprung rhythm by saying, “For Hopkins the chief advantage of sprung rhythm lies in its bringing verse rhythms closer to natural speech rhythms than traditional verse systems usually allow.”3 In a later chapter, he also states that “[Hopkins’] stress indicators mark ‘actual stress’ which is both metrical and sense stress, part of linguistic meaning broadly understood to include feeling.” In his 1989 article, Sprung Rhythm, Kiparsky asks the question “Wherein lies [sprung rhythm’s] unique strictness?” In answer to this question, he proposes a system of syllable quantity coupled with a set of metrical rules by which, he claims, all of Hopkins’ verse is metrical, but other conceivable lines are not. This paper is an outgrowth of a larger project (Hayes & Moore-Cantwell in progress) in which Kiparsky’s claims are being analyzed in greater detail. In particular, we believe that Kiparsky’s system overgenerates, allowing too many different possible scansions for each line for it to be entirely falsifiable. The goal of the project is to tighten Kiparsky’s system by taking into account the gradience that can be found in metrical well-formedness, so that while many different scansion of a line may be 1 Letter to Bridges dated 1 April 1885. -

Linear Functions. Definition. Suppose V and W Are Vector Spaces and L

Linear functions. Definition. Suppose $V$ and $W$ are vector spaces and $L : V \to W$. (Remember: This means that $L$ is a function; the domain of $L$ equals $V$; and the range of $L$ is a subset of $W$.) We say $L$ is linear if $L(cv) = cL(v)$ whenever $c \in \mathbb{R}$ and $v \in V$ and $L(v_1 + v_2) = L(v_1) + L(v_2)$ whenever $v_1, v_2 \in V$. We let $\ker L = \{v \in V : L(v) = 0\}$ and call $\ker L$ the kernel of $L$ and we let $\operatorname{rng} L = \{L(v) : v \in V\}$ so $\operatorname{rng} L$ is the range of $L$. We let $\mathcal{L}(V, W)$ be the set of $L$ such that $L : V \to W$ and $L$ is linear.

Example. Suppose $m$ and $n$ are positive integers and $L \in \mathcal{L}(\mathbb{R}^n, \mathbb{R}^m)$. For each $i = 1, \dots, m$ and each $j = 1, \dots, n$ we let $l^i_j$ be the $i$-th component of $L(e_j)$. Thus

$$L(e_j) = (l^1_j, \dots, l^m_j) = \sum_{i=1}^{m} l^i_j e_i \quad \text{whenever } j = 1, \dots, n.$$

Suppose $x = (x_1, \dots, x_n) \in \mathbb{R}^n$. Then

$$x = \sum_{j=1}^{n} x_j e_j$$

so

$$L(x) = L\Big(\sum_{j=1}^{n} x_j e_j\Big) = \sum_{j=1}^{n} L(x_j e_j) = \sum_{j=1}^{n} x_j L(e_j) = \sum_{j=1}^{n} x_j \sum_{i=1}^{m} l^i_j e_i = \sum_{i=1}^{m} \Big(\sum_{j=1}^{n} x_j l^i_j\Big) e_i.$$

We call

$$\begin{bmatrix} l^1_1 & l^1_2 & \cdots & l^1_n \\ l^2_1 & l^2_2 & \cdots & l^2_n \\ \vdots & \vdots & \ddots & \vdots \\ l^m_1 & l^m_2 & \cdots & l^m_n \end{bmatrix}$$

the standard matrix of $L$. -
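The construction of the standard matrix in this excerpt can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `standard_matrix` and `apply_matrix` and the map `example_map` are hypothetical and do not come from the source. Column $j$ of the matrix is $L(e_j)$, and multiplying the matrix by $x$ reproduces $L(x)$.

```python
from typing import Callable, List

Vector = List[float]


def standard_matrix(L: Callable[[Vector], Vector], n: int) -> List[Vector]:
    """Return the m x n standard matrix of a linear map L from R^n to R^m."""
    # Column j is L(e_j), where e_j is the j-th standard basis vector of R^n.
    columns = []
    for j in range(n):
        e_j = [1.0 if k == j else 0.0 for k in range(n)]
        columns.append(L(e_j))
    m = len(columns[0])
    # Entry (i, j) is the i-th component of L(e_j).
    return [[columns[j][i] for j in range(n)] for i in range(m)]


def apply_matrix(matrix: List[Vector], x: Vector) -> Vector:
    """Compute the i-th output component as the sum over j of matrix[i][j] * x[j]."""
    return [sum(row[j] * x[j] for j in range(len(x))) for row in matrix]


def example_map(v: Vector) -> Vector:
    """Hypothetical linear map from R^3 to R^2, chosen only for illustration."""
    x1, x2, x3 = v
    return [x1 + 2.0 * x2, 3.0 * x3 - x2]


A = standard_matrix(example_map, 3)
x = [1.0, 2.0, 3.0]
print(A)
print(apply_matrix(A, x), example_map(x))
```

The two vectors printed on the last line agree, which is the identity $L(x) = \sum_i \big(\sum_j x_j l^i_j\big) e_i$ evaluated for one input.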

Driquik W/R VOC

Driquik™ W/R VOC DTM PRODUCT DATA SHEET

SELECTION & SPECIFICATION DATA

| Property | Value |
| --- | --- |
| Generic Type | PVA / Acrylic Latex |
| Description | One component, water based, low VOC, low gloss, Direct to Metal (DTM) acrylic latex coating that provides an excellent moisture barrier. |
| Features | Excellent water resistance; Low VOC; Easy one coat coverage; Excellent adhesion to metal; Resistant to spillage/splash of mild chemical; Flexible; Very fast dry; Heat Resistant |
| Color | Black or per customer requirements |
| Finish | Flat |
| Dry Film Thickness | 3 - 5 mils (76 - 127 microns) single coat. Not to exceed 10 mils (250 µm) DFT |
| Solids Content By Volume | 39% +/- 3% |
| Theoretical Coverage Rate | 626 ft²/gal at 1.0 mils (15.4 m²/l at 25 microns); 209 ft²/gal at 3.0 mils (5.1 m²/l at 75 microns); 125 ft²/gal at 5.0 mils (3.1 m²/l at 125 microns). Allow for loss in mixing and application. |
| VOC Values | As Supplied: 0 (Calculated) |

SUBSTRATES & SURFACE PREPARATION

| Substrate | Preparation |
| --- | --- |
| General | Designed to be applied direct to metal in a single or two coat application. |
| Steel | Severe service applications – blasted to SSPC-SP-10 to a 1.5-2.5 mil angular profile. Lesser service applications – blasted to SSPC-SP-6. Surface to be free of all loose rust, dirt, grease and other contaminants. |
| Aluminum | Remove all surface contaminants and treat with Strathmore’s Wash Primer or equivalent. |

September 2020 113S

PERFORMANCE DATA

All test data was generated under laboratory conditions. Field testing results may vary.

| Test Method | System | Results |
| --- | --- | --- |
| Adhesion (ASTM D3359) | Driquik W/R VOC DTM | 5B |
| Conical Mandrel Flexibility (ASTM D522) | Driquik W/R VOC DTM | Passes 1/8" |
| Hardness (ASTM D3363) | Driquik W/R VOC DTM | 3B |
| Heat Resistance | Driquik W/R VOC DTM | 5 hrs @ 400°F (204°C) for two consecutive days |
| Humidity Resistance (ASTM D4585) | Driquik W/R VOC DTM | >2000 hrs |
| Impact Resistance (ASTM D2794) | Driquik W/R VOC DTM | Up to 120 lbs.in (Direct), 60 lbs. - |
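The theoretical coverage figures quoted in this data sheet are consistent with the usual geometric relation between volume solids and dry film thickness. The sketch below assumes that relation was used (the data sheet does not say so); the function name `theoretical_coverage` and the constant name are hypothetical. One US gallon is 231 cubic inches, so spread 1 mil (0.001 in) thick it covers roughly 1604 ft².

```python
# Area in ft^2 covered by 1 US gallon (231 in^3) spread 1 mil (0.001 in) thick.
SQFT_PER_GAL_AT_1_MIL = 231 / 0.001 / 144


def theoretical_coverage(volume_solids: float, dft_mils: float) -> float:
    """Coverage in ft^2/gal for a solids fraction and a dry film thickness in mils.

    Assumes no losses in mixing or application, as the data sheet itself warns.
    """
    return SQFT_PER_GAL_AT_1_MIL * volume_solids / dft_mils


# 39% solids by volume, evaluated at the three thicknesses listed in the data sheet.
for mils in (1.0, 3.0, 5.0):
    print(f"{mils} mils: {round(theoretical_coverage(0.39, mils))} ft^2/gal")
```

Rounded to whole numbers, the three results match the 626, 209 and 125 ft²/gal listed for 1.0, 3.0 and 5.0 mils.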

Alphabets, Letters and Diacritics in European Languages (As They Appear in Geography)

Vigleik Leira (Norway): [email protected] Alphabets, Letters and Diacritics in European Languages (as they appear in Geography) To the best of my knowledge English seems to be the only language which makes use of a "clean" Latin alphabet, i.e. there is no use of diacritics or special letters of any kind. All the other languages based on Latin letters employ, to a larger or lesser degree, some diacritics and/or some special letters. The survey below is purely literal. It has nothing to say on the pronunciation of the different letters. Information on the phonetic/phonemic values of the graphic entities must be sought elsewhere, in language specific descriptions. The 26 letters a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p, q, r, s, t, u, v, w, x, y, z may be considered the standard European alphabet. In this article the word diacritic is used with this meaning: any sign placed above, through or below a standard letter (among the 26 given above); disregarding the cases where the resulting letter (e.g. å in Norwegian) is considered an ordinary letter in the alphabet of the language where it is used. Albanian The alphabet (36 letters): a, b, c, ç, d, dh, e, ë, f, g, gj, h, i, j, k, l, ll, m, n, nj, o, p, q, r, rr, s, sh, t, th, u, v, x, xh, y, z, zh. Missing standard letter: w. Letters with diacritics: ç, ë. Sequences treated as one letter: dh, gj, ll, rr, sh, th, xh, zh. -

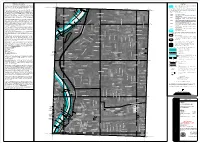

Map 5 South Riverfront

[Map: South Riverfront. Surviving panel headings: NOTES TO USERS, LEGEND.] -

Diacritics-ELL.Pdf

Diacritics J.C. Wells, University College London Diacritics are distinguishing marks attached to letters of the alphabet, for example the acute accent on the é in café. Most language orthographies based on the Latin alphabet make some use of diacritics, as indeed do those based on other alphabets and writing systems. The focus of this article is on the role of diacritics in the orthography of languages written with the Latin alphabet. Indeed, the origin of some letters that are now a standard part of the alphabet lies in the use of diacritics. The letter G was invented in Roman times as a variant of C, distinguished by the crossbar on the upstroke. The letter J was not distinguished from I, nor U from V, until the 16th century (Sampson 1985: 110). The new letter 1 is obviously a variant on n and so could be seen as incorporating a diacritic tail. Diacritics proper, though, are seen as marks attached to a base letter. In this sense, m y 1 do not involve diacritics. The extensive use of diacritics to supplement the Latin alphabet in cases where it was seen as inadequate for the sounds of other languages is generally attributed to the religious reformer Jan Hus (1369-1415), who devised a reformed orthography for Czech incorporating ‘accented’ letters such as ˛ ¹ = > ?. Most diacritics are placed above the base letter with which they are associated. A few, however, are placed below it (as “) or through it (as B). 1 Latin letters come in lower-case and upper-case versions. -

Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone

Generation Panel for Latin Script Label Generation Ruleset for the Root Zone Proposal for Generation Panel for Latin Script Label Generation Ruleset for the Root Zone Table of Contents: 1. General Information; 1.1 Use of Latin Script characters in domain names; 1.2 Target Script for the Proposed Generation Panel; 1.2.1 Diacritics; 1.3 Countries with significant user communities using Latin script; 2. Proposed Initial Composition of the Panel and Relationship with Past Work or Working Groups; 3. Work Plan; 3.1 Suggested Timeline with Significant Milestones; 3.2 Sources for funding travel and logistics; 3.3 Need for ICANN provided advisors; 4. References. 1. General Information The Latin script1 or Roman script is a major writing system of the world today, and the most widely used in terms of number of languages and number of speakers, with circa 70% of the world’s readers and writers making use of this script2 (Wikipedia). Historically, it is derived from the Greek alphabet, as is the Cyrillic script. The Greek alphabet is in turn derived from the Phoenician alphabet which dates to the mid-11th century BC and is itself based on older scripts. This explains why Latin, Cyrillic and Greek share some letters, which may become relevant to the ruleset in the form of cross-script variants. The Latin alphabet itself originated in Italy in the 7th Century BC. The original alphabet contained 21 upper case only letters: A, B, C, D, E, F, Z, H, I, K, L, M, N, O, P, Q, R, S, T, V and X.