Low-Light Image Enhancement of Permanently Shadowed Lunar Regions with Physics-Based Machine Learning

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Technology Today Spring 2013

TECHNOLOGY today®, Southwest Research Institute®, San Antonio, Texas. Spring 2012 • Volume 33, No. 1. Director of Communications: Craig Witherow. Editor: Joe Fohn. Assistant Editor: Deborah Deffenbaugh. Contributing Editors: Tracey Whelan. Editorial Assistant: Kasey Chenault. Design: Scott Funk. Photography: Larry Walther. Illustrations: Andrew Blanchard, Frank Tapia. Circulation: Gina Monreal. About the cover (D018005-5651): Full-scale fire tests were performed on upholstered furniture as part of a project to reduce uncertainty in determining the cause of fires. Technology Today (ISSN 1528-431X) is published three times each year and distributed free of charge. The publication discusses some of the more than 1,000 research and development projects under way at Southwest Research Institute. The materials in Technology Today may be used for educational and informational purposes by the public and the media. Credit to Southwest Research Institute should be given. This authorization does not extend to property rights such as patents. Commercial and promotional use of the contents in Technology Today without the express written consent of Southwest Research Institute is prohibited. The information published in Technology Today does not necessarily reflect the position or policy of Southwest Research Institute or its clients, and no endorsements should be made or inferred. Address correspondence to the editor, Department of Communications, Southwest Research Institute, P.O. Drawer 28510, San Antonio, Texas 78228-0510, or e-mail [email protected]. To be placed on the mailing list or to make address changes, call (210) 522-2257 or fax (210) 522-3547, or visit update.swri.org.

South Pole-Aitken Basin

Feasibility Assessment of All Science Concepts within South Pole-Aitken Basin. INTRODUCTION: While most of the NRC 2007 Science Concepts can be investigated across the Moon, this chapter will focus specifically on how they can be addressed in the South Pole-Aitken Basin (SPA). SPA is potentially the largest impact crater in the Solar System (Stuart-Alexander, 1978), and covers most of the central southern farside (see Fig. 8.1). SPA is both topographically and compositionally distinct from the rest of the Moon, and is potentially the oldest identifiable structure on the surface (e.g., Jolliff et al., 2003). Determining the age of SPA was explicitly cited by the National Research Council (2007) as their second priority out of 35 goals. A major finding of our study is that nearly all science goals can be addressed within SPA. As the lunar south pole has many engineering advantages over other locations (e.g., areas with enhanced illumination and little temperature variation, hydrogen deposits), it has been proposed as a site for a future human lunar outpost. If this were to be the case, SPA would be the closest major geologic feature, and thus the primary target for long-distance traverses from the outpost. Clark et al. (2008) described four long traverses from the center of SPA going to Olivine Hill (Pieters et al., 2001), Oppenheimer Basin, Mare Ingenii, and Schrödinger Basin, with a stop at the South Pole. This chapter will identify other potential sites for future exploration across SPA, highlighting sites with both great scientific potential and proximity to the lunar South Pole.

The Illumination Conditions of the South Pole Region of the Moon

EPSC Abstracts, Vol. 4, EPSC2009-314, 2009. European Planetary Science Congress, © Author(s) 2009. The Illumination Conditions of the South Pole Region of the Moon. E.A. Kozlova, Sternberg State Astronomical Institute, Moscow State University. [email protected] The “Lunar Prospector” spacecraft, launched by NASA toward the Moon in 1998, revealed areas of high hydrogen content near both poles of the Moon. The South Pole of the Moon is located in the southern part of the giant topographic depression – the South Pole - Aitken basin. This region is heavily cratered and some craters at the poles are never exposed to direct sunlight. High hydrogen content was found in the areas coinciding with such craters as Shoemaker (88º S, 38º E) and Faustini (87.2º S, 75.8º E) and other craters [1]. These craters can presumably be considered as "cold traps" in the South Polar region of the Moon. ... horizon during only 24% of the diurnal period for this point. Fig. 2 shows the topographic profile of the crater Shoemaker (88º S, 38º E, D = 51 km) according to data from KAGUYA (SELENE). The average depth of the crater is 3.1 km, and the inclination of its walls is 13º. A small crater lies in the central part of the flat floor of Shoemaker. Earlier, we computed the permanently shadowed area in the polar regions of the Moon for the real distribution of craters, taking into account the variations of the elevation of the Sun during the 230 solar days that make up the period of regression of the nodes of the lunar orbit [3].

An Atlas of Antient [I.E. Ancient] Geography

LIBRARY OF WELLESLEY COLLEGE. PRESENTED BY Ruth Campbell '27. Digitized by the Internet Archive in 2011 with funding from Boston Library Consortium Member Libraries. http://www.archive.org/details/atlasofantientieOObutl AN ATLAS OF ANTIENT GEOGRAPHY BY SAMUEL BUTLER, D.D., AUTHOR OF MODERN AND ANTIENT GEOGRAPHY FOR THE USE OF SCHOOLS. STEREOTYPED BY J. HOWE. PHILADELPHIA: BLANCHARD AND LEA. 1851. PREFATORY NOTE. INDEX OF DR. BUTLER'S ANTIENT ATLAS. It is to be observed in this Index, which is made for the sake of complete and easy reference to the Maps, that the Latitude and Longitude of Rivers, and names of Countries, are given from the points where their names happen to be written in the Map, and not from any remarkable point, such as their source or embouchure. The same River, Mountain, or City &c, occurs in different Maps, but is only mentioned once in the Index, except very large Rivers, the names of which are sometimes repeated in the Maps of the different countries to which they belong. The quantity of the places mentioned has been ascertained, as far as was in the Author's power, with great labor, by reference to the actual authorities, either Greek prose writers, (who often, by the help of a long vowel, a diphthong, or even an accent, afford a clue to this,) or to the Greek and Latin poets, without at all trusting to the attempts at marking the quantity in more recent works, experience having shown that they are extremely erroneous.

Geology of Shackleton Crater and the South Pole of the Moon Paul D

GEOPHYSICAL RESEARCH LETTERS, VOL. 35, L14201, doi:10.1029/2008GL034468, 2008. Geology of Shackleton Crater and the south pole of the Moon. Paul D. Spudis,1 Ben Bussey,2 Jeffrey Plescia,2 Jean-Luc Josset,3 and Stéphane Beauvivre4. Received 28 April 2008; revised 10 June 2008; accepted 16 June 2008; published 18 July 2008. [1] Using new SMART-1 AMIE images and Arecibo and Goldstone high resolution radar images of the Moon, we investigate the geological relations of the south pole, including the 20 km-diameter crater Shackleton. The south pole is located inside the topographic rim of the South Pole-Aitken (SPA) basin, the largest and oldest impact crater on the Moon and Shackleton is located on the edge of an interior basin massif. The crater Shackleton is found to be older than the mare surface of the Apollo 15 landing site (3.3 Ga), but younger than the Apollo 14 landing site (3.85 Ga). These results suggest that Shackleton may have collected extra-lunar volatile elements for at least the last 2 billion years and is an attractive site for permanent human presence on the Moon. ... and details new results on the lighting conditions of the poles [e.g., Bussey et al., 2008]. 2. Geological Setting of the South Pole. [3] The dominant feature that influences the geology and topography of the south polar region is the South Pole-Aitken (SPA) basin, the largest and oldest impact feature on the Moon [e.g., Wilhelms, 1987]. This feature is over 2600 km in diameter, averages 12 km depth, and is approximately centered at 56° S, 180° [Spudis et al., 1994]. The basin formed sometime after the crust had solidified and was rigid enough to support significant topographic expression; thus,

Official 2019 Half Marathon Results Book

OFFICIAL RESULTS BOOK, November 8-10, 2019. 2019 Official Race Results. Contents: Thanks For Your Participation; Elite Review; Event Statistics; Ad-Merchandise Blowout Sale; Half Marathon Finisher & Divisional Winners - Male; Half Marathon Overall Results - Male; Half Marathon Finisher & Divisional Winners - Female; Half Marathon Overall Results - Female; By-the-Bay 3K & Pacific Grove Lighthouse 5K; 5K Divisional Results - Male & Female; 5K Overall Results - Male; 5K Overall Results - Female; 3K Results (alphabetical); Half Marathon Memories; Our Volunteers; Our Sponsors & Supporters; Our Family of Events. MONTEREYBAYHALFMARATHON.ORG, P.O. Box 222620, Carmel, CA 93922-2620, 831.625.6226, [email protected]. A Big Sur Marathon Foundation Event. James Short. Cover photo by Andrew Tronick. Thanks For Your Participation: Thank you for your participation in the Monterey Bay Half Marathon weekend of events! We were so happy to host you this year. After the cancellation of the Half Marathon in 2018, it was great to finally see the streets of Monterey and Pacific Grove filled with runners. The conditions for the Saturday and Sunday races were excellent and we know many of you ran personal bests on our scenic courses. ... the Name of Love in June and the year-round JUST RUN® youth fitness program. Our mission is to create beautiful events that promote health and benefit the community. Each year, our organization distributes more than $400,000 in grants to other non-profit organizations and agencies. Your entry fees and support of our events make this possible. The race wouldn’t happen without our dedicated

Science Concept 3: Key Planetary

Science Concept 4: The lunar poles are special environments that may bear witness to the volatile flux over the latter part of solar system history. Science Goals: a. Determine the compositional state (elemental, isotopic, mineralogic) and compositional distribution (lateral and depth) of the volatile component in lunar polar regions. b. Determine the source(s) for lunar polar volatiles. c. Understand the transport, retention, alteration, and loss processes that operate on volatile materials at permanently shaded lunar regions. d. Understand the physical properties of the extremely cold (and possibly volatile rich) polar regolith. e. Determine what the cold polar regolith reveals about the ancient solar environment. INTRODUCTION: The presence of water and other volatiles on the Moon has important ramifications for both science and future human exploration. The specific makeup of the volatiles may shed light on planetary formation and evolution processes, which would have implications for planets orbiting our own Sun or other stars. These volatiles also undergo transportation, modification, loss, and storage processes that are not well understood but which are likely prevalent processes on many airless bodies. They may also provide a record of the solar flux over the past 2 Ga of the Sun's life, a period which is otherwise very hard to study. From a human exploration perspective, if a local source of water and other volatiles were accessible and present in sufficient quantities, future permanent human bases on the Moon would become much more feasible due to the possibility of in-situ resource utilization (ISRU).

Artemis III EVA Opportunities Along a Ridge Extending from Shackleton Crater Towards De Gerlache Crater

Science Definition Team for Artemis (2020), 2042.pdf. NASA-requested input for the Artemis III Science Definition Team, delivered September 8, 2020. Artemis III EVA Opportunities along a Ridge Extending from Shackleton Crater towards de Gerlache Crater. David A. Kring*, Natasha Barrett, Sarah J. Boazman, Aleksandra Gawronska, Cosette M. Gilmour, Samuel H. Halim, Harish, Katie McCanaan, Animireddi V. Satyakumar, and Jahnavi Shah. Introduction. The 3.15 Ga [1], 21-km-diameter [2] Shackleton crater penetrated a 1900-m-high massif [3] along the margin of the 2,400-km-diameter [4] South Pole-Aitken (SPA) basin. Although the origin of massifs is still uncertain due to insufficient in situ field studies, massifs are thought to be blocks of crystalline crust – solidified portions of the lunar magma ocean and subsequent intrusive magmas – that were mobilized by basin-size impact events and covered with ejecta from those impact events and younger impact events. The surface of the massif will be dominated by reworked ejecta from the Shackleton crater. Hydrocode simulations of the impact that produced Shackleton [5] suggest the ejecta is ~150 m thick and covers target material uplifted >1 km to form the crater rim. That ejecta layer thins with radial distance from the point of impact, but should have covered the entire length of a ridge in the massif that extends from the rim of Shackleton crater (Fig. 1, left panel) towards de Gerlache crater. Points of illumination. Two topographically high points in the area receive an unusually large amount of solar illumination (Fig. 1, center panel). An ~1700 m-high point on the rim of Shackleton crater has an average solar illumination of 85% [6] or greater [7] and an ~1900 m-high point on a massif ridge has an average solar illumination of 89% [6].

Case 13-13087-KG Doc 583 Filed 02/04/14 Page 1 of 227: Fisker Automotive Holdings, Inc.

Case 13-13087-KG Doc 583 Filed 02/04/14 Page 1 of 227 Case 13-13087-KG Doc 583 Filed 02/04/14 Page 2 of 227 FISKER AUTOMOTIVE HOLDINGS,Case INC. 13-13087-KG - U.S. Mail Doc 583 Filed 02/04/14 Page 3 of 227 Served 1/28/2014 014 IDS - DELAWARE 2BF GLOBAL INVESTMENTS LTD. 3 DIMENSIONAL SERVICES CHARLOTTE, NC 28258-0027 C/O I2BF LLC 2547 PRODUCT DRIVE ATTN: MICHAEL LOUSTEAU ROCHESTER, MI 48309 110 GREENE ST., SUITE 600 NEW YORK, NY 10012 3 FORM 3336603 CANADA INC. 360 HOLDINGS, LLC 2300 SOUTH 2300 WEST SUITE B ATTN: MICHAEL MIKELBERG C/O ADVANCED LITHIUM POWER SALT LAKE CITY, UT 84119 1455 SHERBROOKE STREET WEST, SUITE 200 SUITE 1308, 1030 WEST GEORGIA STREET MONTREAL, QC H3G 1L2 VANCOUVER, BC V6E 2Y3 CANADA CANADA 3D SCANNING AND CONSULTING 3GC GROUP 3M COMPANY 76 SADDLEBACK ROAD 3435 WILSHIRE BLVD. GENERAL OFFICES / 3M CENTER TUSTIN, CA 92780 LOS ANGELES, CA 90010 SAINT PAUL, MN 55144-1000 8888 INVESTMENTS GMBH 911 RESTORATION A & A PROTECTIVE SERVICES REIDSTRASSE 7 1846 S. GRAND PO BOX 66443 CH-6330 CHAM SANTA ANA, CA 92705 LOS ANGELES, CA 90066 SWITZERLAND A COENS A. BROEKHUIZEN A. FIDDER AC SPECIAL CAR RENTAL AND LEASE BV DE PEEL B.V.B.A. FIDDER BEHEER BV EERSTE STEGGE 1 LEEUWERKSTRAAT 24 HET VALLAAT 26 7631 AE OOTMARSUM B-2360 OUD-TURNHOUT 8614 DC SNEEK NETHERLANDS BELGIUM NETHERLANDS A. POLS A. RAFIMANESH A. VAN VEEN RAADHUISTRAAT 112 A&A CAPACITY ATHLON CAR LEASE NEDERLAND B.V. SPRANG-CAPELLE 5161 BJ BONGERDSTRAAT 5A POSBUS 60250 THE NETHERLANDS 1356 AL MARKERBINNEN 1320 AH ALMERE NETHERLANDS NETHERLANDS A.A. -

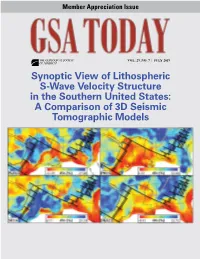

Synoptic View of Lithospheric S-Wave Velocity Structure in the Southern United States: a Comparison of 3D Seismic Tomographic Models

Member Appreciation Issue. GSA Today, Vol. 29, No. 7, July 2019. Science: Synoptic View of Lithospheric S-Wave Velocity Structure in the Southern United States: A Comparison of 3D Seismic Tomographic Models, by Alden Netto et al. Cover: Map view of four recent seismic shear wave models of the southern U.S. at 5 km above the Moho, plotted as perturbations with respect to the same average 1D model. Solid black lines represent a proposed rift and transform fault system. The southern U.S. has relatively low seismicity compared to western and northeastern North America, so few local earthquakes are available for imaging, and there have historically been few seismic stations to record distant earthquakes as well. GSA TODAY (ISSN 1052-5173 USPS 0456-530) prints news and information for more than 22,000 GSA member readers and subscribing libraries, with 11 monthly issues (March-April is a combined issue). GSA TODAY is published by The Geological Society of America® Inc. (GSA) with offices at 3300 Penrose Place, Boulder, Colorado, USA, and a mailing address of P.O. Box 9140, Boulder, CO 80301-9140, USA. GSA provides this and other forums for the presentation of diverse opinions and positions by scientists worldwide,

Implications for Clean Water Act Implementation

UNIVERSITY OF CALIFORNIA, Los Angeles. Protecting and Restoring our Nation’s Waters: The Effects of Science, Law, and Policy on Clean Water Act Jurisdiction with a focus on the Arid West. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Environmental Science and Engineering by Forrest Brown Vanderbilt, 2013. © Copyright by Forrest Brown Vanderbilt 2013. ABSTRACT OF THE DISSERTATION. University of California, Los Angeles, 2013. Professor Richard F. Ambrose, Chair. Since its initial passage in 1972, the Clean Water Act has attempted to restore and protect our Nation’s waters. The definition of ‘our Nation’s waters’ has undergone periodic debate and scrutiny as the U.S. Environmental Protection Agency, the U.S. Army Corps of Engineers, and the Supreme Court have defined and redefined the standards for determining CWA jurisdiction. The Supreme Court’s most recent set of standards, including the “significant nexus” test, appear to both increase the uncertainty in what is regulated and increase the burden of proof for determining CWA jurisdiction. The Arid West was singled out in the most recent EPA and Corps joint jurisdictional guidance as a problematic area. Focusing on the Arid West, my dissertation evaluates the CWA jurisdiction process from three perspectives: law, policy, and science, and explores an understanding of the past, present, and potential future path of CWA jurisdiction.

Updating Property Registration Gets Simpler: City Hall Opens on Monday the Period for Taxpayers to File the Annual Declaration of Cadastral Data

Diário Oficial do Município do Rio de Janeiro | Executive Branch | Year XXXV | No. 68 | Thursday, June 17, 2021. Updating property registration gets simpler. City Hall opens on Monday the period for taxpayers to file the Annual Declaration of Cadastral Data. The period for completing and submitting the Annual Declaration of Cadastral Data (DeCAD) begins on Monday the 21st and runs until July 31. Unprecedented in the country, the DeCAD is a new way of declaring updates to personal and property information, which IPTU taxpayers in the city will need to do annually. The declaration, which previously applied only to owners who had made some modification to their properties, is part of a pilot project of the Secretaria Municipal de Fazenda e Planejamento (SMFP) which, besides simplifying the registration process, may result in a discount on the following year's IPTU. With this change, taxpayers who failed to update their information with the tax authority will have the opportunity to regularize debts without being charged retroactively. The process will be entirely online, through Carioca Digital (carioca.rio), and will initially cover properties located in Planning Areas 1 and 2 of the municipality. D.O. RIO: Diário Oficial do Município do Rio de Janeiro. PREFEITURA DA CIDADE DO RIO DE JANEIRO. Mayor: Eduardo Paes. Instituto de Previdência e Assistência do Município do Rio de Janeiro - PREVI-RIO: Melissa Garrido Cabral. Secretaria Municipal de Trabalho e Renda - SMTE: Sergio Luiz Felippe. Deputy Mayor Secretaria Municipal