Urdu to Punjabi Machine Translation: an Incremental Training Approach

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Existing (and Potential) Language and Linguistic Resources on South Asian Languages

CoRSAL Symposium, University of North Texas, November 17, 2017. Existing (and Potential) Language and Linguistic Resources on South Asian Languages. Elena Bashir, The University of Chicago. Resources or published lists outside of South Asia: Digital Dictionaries of South Asia in the Digital South Asia Library (dsal) at the University of Chicago, http://dsal.uchicago.edu/dictionaries/ (some, mostly older, dictionaries not under copyright; no corpora). Digital Media Archive at the University of Chicago: https://dma.uchicago.edu/about/about-digital-media-archive Hock & Bashir (eds.) 2016, appendix: lists 9 electronic corpora, 6 of which are on Sanskrit. The 3 non-Sanskrit entries are: (1) the EMILLE corpus, (2) the Nepali national corpus, and (3) the LDC-IL (Linguistic Data Consortium for Indian Languages). Focus on Pakistan. Urdu: Most work has been done on Urdu, prioritized at government institutions like the Center for Language Engineering (CLE) at the University of Engineering and Technology in Lahore. Text corpora: http://cle.org.pk/clestore/index.htm (the largest is a 1 million word Urdu corpus from the Urdu Digest). Work on Essential Urdu Linguistic Resources: http://www.cle.org.pk/eulr/ Tagset for Urdu corpus: http://cle.org.pk/Publication/papers/2014/The%20CLE%20Urdu%20POS%20Tagset.pdf Urdu OCR: http://cle.org.pk/clestore/urduocr.htm Sindhi: Sindhi is the medium of education in some schools in Sindh. It has more institutional backing and consequent research than other languages, especially Panjabi. A Sindhi-English dictionary was developed jointly by Jennifer Cole at the University of Illinois Urbana-Champaign and Sarmad Hussain at CLE (http://182.180.102.251:8081/sed1/homepage.aspx).

Linguistic Survey of India Bihar

LINGUISTIC SURVEY OF INDIA: BIHAR, 2020. LANGUAGE DIVISION, OFFICE OF THE REGISTRAR GENERAL, INDIA. CONTENTS: Foreword (iii-iv); Preface (v-vii); Acknowledgements (viii); List of Abbreviations (ix-xi); List of Phonetic Symbols (xii-xiii); List of Maps (xiv); Introduction, R. Nakkeerar (1-61). Languages: Hindi, S.P. Ahirwal (62-143); Maithili, S. Boopathy & Sibasis Mukherjee (144-222); Urdu, S.S. Bhattacharya (223-292). Mother Tongues: Bhojpuri, J. Rajathi & P. Perumalsamy (293-407); Kurmali Thar, Tapati Ghosh (408-476); Magadhi/Magahi, Balaram Prasad & Sibasis Mukherjee (477-575); Surjapuri, S.P. Srivastava & P. Perumalsamy (576-649); Comparative Lexicon of 3 Languages & 4 Mother Tongues (650-674). FOREWORD: Since Linguistic Survey of India was published in 1930, a lot of changes have taken place with respect to the language situation in India. Though individual language wise surveys have been done in large number, however state wise survey of languages of India has not taken place. The main reason is that such a survey project requires large manpower and financial support. Linguistic Survey of India opens up new avenues for language studies and adds successfully to the linguistic profile of the state. In view of its relevance in academic life, the Office of the Registrar General, India, Language Division, has taken up the Linguistic Survey of India as an ongoing project of Government of India. It gives me immense pleasure in presenting LSI-Bihar volume. The present volume devoted to the state of Bihar has the description of three languages namely Hindi, Maithili, Urdu along with four Mother Tongues namely Bhojpuri, Kurmali Thar, Magadhi/Magahi, Surjapuri.

English Language in Pakistan: Expansion and Resulting Implications

Journal of Education & Social Sciences Vol. 5(1): 52-67, 2017. DOI: 10.20547/jess0421705104. English Language in Pakistan: Expansion and Resulting Implications. Syeda Bushra Zaidi, Sajida Zaki. Abstract: From a sociolinguistic or anthropological perspective, Pakistan is classified as a multilingual context with most people speaking one native or regional language alongside Urdu, which is the national language. With respect to English, Pakistan is a second language context, which implies that the language is institutionalized and enjoys the privileged status of being the official language. This thematic paper is an attempt to review the arrival and augmentation of the English language in Pakistan both before and after its creation. The chronological description is carried out in order to identify the consequences of this spread and to link them with possible future developments and implications. Some key themes covered in the paper include a brief historical overview of the English language, from how it was introduced to the manner in which it has developed over the years, especially in relation to various language and educational policies. Based on the critical review of the literature presented in the paper, there seems to be no threat to English in Pakistan, a prediction true for the language globally as well. The status of English, as it globally elevates, will continue spreading and prospering in Pakistan as well, enriching the Pakistani English variety that has already been born and is being studied and codified. However, this process may take long due to the instability of governing policies and law-makers. A serious concern for researchers and people in general, however, will continue being the endangered and indigenous varieties that may continue being under considerable pressure from the prestigious varieties.

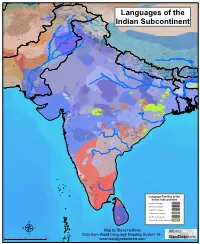

Map by Steve Huffman; Data from World Language Mapping System

[Map label text: country and language names across Europe and neighbouring regions, including Norwegian, Swedish, Faroese, Scottish Gaelic, Danish, Standard German, Dutch, Polish, Czech, Ukrainian, and Romanian.]

Genealogical Classification of New Indo-Aryan Languages and Lexicostatistics

Anton I. Kogan, Institute of Oriental Studies of the Russian Academy of Sciences (Russia, Moscow). Genealogical classification of New Indo-Aryan languages and lexicostatistics. Genetic relations among Indo-Aryan languages are still unclear. Existing classifications are often intuitive and do not rest upon rigorous criteria. In the present article an attempt is made to create a classification of New Indo-Aryan languages, based on up-to-date lexicostatistical data. The comparative analysis of the resulting genealogical tree and traditional classifications allows the author to draw conclusions about the most probable genealogy of the Indo-Aryan languages. Keywords: Indo-Aryan languages, language classification, lexicostatistics, glottochronology. The Indo-Aryan group is one of the few groups of Indo-European languages, if not the only one, for which no classification based on rigorous genetic criteria has been suggested thus far. The cause of such a situation is neither lack of data, nor even the low level of its historical interpretation, but rather the existence of certain prejudices which are widespread among Indologists. One of them is the belief that real genetic relations between the Indo-Aryan languages cannot be clarified because these languages form a dialect continuum. Such an argument can hardly seem convincing to a comparative linguist, since dialect continuum is by no means a unique phenomenon: it is characteristic of many regions, including those where Indo-European languages are spoken, e.g. the Slavic and Romance-speaking areas. Since genealogical classifications of Slavic and Romance languages do exist, there is no reason to believe that the taxonomy of Indo-Aryan languages cannot be established.

Map by Steve Huffman Data from World Language Mapping System 16

[Map label text: language names from the region, including Tajiki, Wakhi, Khowar, Kashmiri, Western Panjabi, Eastern Panjabi, Seraiki, Urdu, and Nepali; the map title appears to be "Languages of the Indian Subcontinent".]

FACTORS AFFECTING PROFICIENCY AMONG GUJARATI HERITAGE LANGUAGE LEARNERS on THREE CONTINENTS a Dissertation Submitted to the Facu

FACTORS AFFECTING PROFICIENCY AMONG GUJARATI HERITAGE LANGUAGE LEARNERS ON THREE CONTINENTS. A Dissertation submitted to the Faculty of the Graduate School of Arts and Sciences of Georgetown University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Linguistics. By Sheena Shah, M.S. Washington, DC, May 14, 2013. Copyright 2013 by Sheena Shah. All Rights Reserved. Thesis Advisors: Alison Mackey, Ph.D.; Natalie Schilling, Ph.D. ABSTRACT: This dissertation examines the causes behind the differences in proficiency in the North Indian language Gujarati among heritage learners of Gujarati in three diaspora locations. In particular, I focus on whether there is a relationship between heritage language ability and ethnic and cultural identity. Previous studies have reported divergent findings. Some have found a positive relationship (e.g., Cho, 2000; Kang & Kim, 2011; Phinney, Romero, Nava, & Huang, 2001; Soto, 2002), whereas others found no correlation (e.g., C. L. Brown, 2009; Jo, 2001; Smolicz, 1992), or identified only a partial relationship (e.g., Mah, 2005). Only a few studies have addressed this question by studying one community in different transnational locations (see, for example, Canagarajah, 2008, 2012a, 2012b). The current study addresses this matter by examining data from members of the same ethnic group in similar educational settings in three multi-ethnic and multilingual cities. The results of this study are based on a survey consisting of questionnaires, semi-structured interviews, and proficiency tests with 135 participants. Participants are Gujarati heritage language learners from the U.K., Singapore, and South Africa, who are either current students or recent graduates of a Gujarati School.

THE CONSTITUTION (AMENDMENT) BILL, 2015 By

AS INTRODUCED IN LOK SABHA. Bill No. 90 of 2015. THE CONSTITUTION (AMENDMENT) BILL, 2015. By SHRI ASHWINI KUMAR CHOUBEY, M.P. A BILL further to amend the Constitution of India. BE it enacted by Parliament in the Sixty-sixth Year of the Republic of India as follows: 1. (Short title.) This Act may be called the Constitution (Amendment) Act, 2015. 2. (Amendment of the Eighth Schedule.) In the Eighth Schedule to the Constitution, (i) existing entries 1 and 2 shall be renumbered as entries 2 and 3, respectively, and before entry 2 as so renumbered, the following entry shall be inserted, namely: "1. Angika."; (ii) after entry 3 as so renumbered, the following entry shall be inserted, namely: "4. Bhojpuri."; and (iii) entries 3 to 22 shall be renumbered as entries 5 to 24, respectively. STATEMENT OF OBJECTS AND REASONS: Language is not only a medium of communication but also a sign of respect. Language also reflects on the history, culture, people, system of governance, ecology, politics, etc. 'Bhojpuri' language is also known as Bhozpuri, Bihari, Deswali and Khotla and is a member of the Bihari group of the Indo-Aryan branch of the Indo-European language family and is closely related to Magahi and Maithili languages. Bhojpuri language is spoken in many parts of north-central and eastern regions of this country. It is particularly spoken in the western part of the State of Bihar, north-western part of Jharkhand state and the Purvanchal region of Uttar Pradesh State. Bhojpuri language is spoken by over forty million people in the country.

Spotlight on Tamil

Heritage Voices: Languages. Urdu. ABOUT THE URDU LANGUAGE: Urdu is an Indo-Aryan language that serves as the primary, secondary, or tertiary language of communication for millions of individuals in Pakistan, India, and sizable migrant communities in the Persian Gulf, the United Kingdom, and the United States. Syntactically Urdu and Hindi are identical, with Urdu incorporating a heavier loan vocabulary from Persian, Arabic, and Turkic and retaining the original spelling of words and sounds from these languages. Speakers of both languages are able to communicate with each other at an informal level without much difficulty. In more formal situations and in higher registers, the two languages diverge significantly. Urdu is written in the Perso-Arabic script called Nasta'liq, which was modified and expanded to incorporate a distinctively South Asian phonology. While Urdu is one of the world's leading languages of Muslim erudition, some of its leading protagonists have been Hindu and Sikh authors taking full advantage of Urdu's rich expressive medium. Urdu has developed a preeminent position in South Asia as a language of literary genius as well as a major medium of communication in the daily lives of people. It is an official language of Pakistan, where it is a link language and probably the most widely understood language across all regions, which also have their own languages (Pashto, Balochi, Sindhi, and Punjabi). It is also one of the national languages of India. Urdu is widely understood by Afghans in Pakistan and Afghanistan, who acquire the language through media, business, and family connections in Pakistan. Urdu serves as a lingua franca for many in South Asia, especially for Muslims.

Hindi-Urdu (HNU) 1

HINDI-URDU (HNU). HNU 111. Elementary Hindi-Urdu I. (3 h) Introduction to modern Hindi-Urdu. Designed for students with no knowledge of the language. Focus is on developing reading, writing, and conversation skills for practical contexts. Instruction in the Devanagari and Nastaliq scripts and the cultures of India and Pakistan. Fall only. HNU 112. Elementary Hindi-Urdu II. (3 h) Continued instruction in modern Hindi-Urdu. Students with previous background may place into this course with the instructor's permission. Focus is on developing reading, writing, and conversation skills for practical contexts. Instruction in the Devanagari and Nastaliq scripts and the cultures of India and Pakistan. Spring only. P-HNU 111. HNU 140. Introduction to the Hindi script (Devanagari). (1 h) Introduction to the Devanagari writing system used in Hindi, as well as other South Asian languages, including Nepali and Sanskrit. Includes an overview of the Hindi-Urdu sound system and language. Students with prior proficiency in spoken Hindi or Urdu may complete this course in preparation for entering Intermediate Hindi-Urdu (HNU 153 and 201). HNU 141. Introduction to the Urdu script (Nastaliq). (1 h) Introduction to the Nastaliq writing system used in Urdu, as well as Persian, Punjabi, and Kashmiri. Includes an overview of the Hindi-Urdu sound system and language. Students with prior proficiency in spoken Hindi or Urdu may complete this course in preparation for entering Intermediate Hindi-Urdu (HNU 153 and 201). HNU 153. Intermediate Hindi-Urdu I. (3 h) Second year of modern Hindi-Urdu. Students with comparable proficiency may place into this course with the instructor's permission.

Majority Language Death

Language Documentation & Conservation Special Publication No. 7 (January 2014), Language Endangerment and Preservation in South Asia, ed. by Hugo C. Cardoso, pp. 19-45. http://nflrc.hawaii.edu/ldc/sp07 2. http://hdl.handle.net/10125/4600 Majority language death. Liudmila V. Khokhlova, Moscow University. The notion of 'language death' is usually associated with one of the 'endangered languages', i.e. languages that are at risk of falling out of use as their speakers die out or shift to some other language. This paper describes another kind of language death: the situation in which a language remains a powerful identity marker and the mother tongue of a country's privileged and numerically dominant group with all the features that are treated as constituting ethnicity, and yet ceases to be used as a means of expressing its speakers' intellectual demands and preserving the community's cultural traditions. This process may be defined as the 'intellectual death' of a language. The focal point of the analysis undertaken is the sociolinguistic status of Punjabi in Pakistan. The aim of the paper is to explore the historical, economic, political, cultural and psychological reasons for the gradual removal of a majority language from the repertoires of native speakers. 1. PREFACE. The Punjabi-speaking community constitutes 44.15% of the total population of Pakistan and 47.56% of its urban population.[1] [1] Pakistan is a multilingual country with six major languages and over fifty-nine smaller languages. The major languages are Punjabi (44.15% of the population), Pashto (15.42%), Sindhi

National Languages Policy Recommendation Commission 1994(2050VS)

The Report of National Languages Policy Recommendation Commission 1994 (2050 VS). National Languages Policy Recommendation Commission, Academy Building, Kamaladi, Kathmandu, Nepal. Date: April 13, 1994 (31st Chaitra 2050 VS). Honorable Minister Mr. Govinda Raj Joshi, Minister of Education, Culture and Social Welfare, Keshar Mahal, Kathmandu. Honorable Minister, The constitution promulgated after the restoration of democracy in Nepal, following the people's revolution of 1990 that ended the thirty-year autocratic Panchayat regime, accepts that Nepal is a multicultural and multiethnic country, and the languages spoken in Nepal are considered the national languages. The constitution has also ascertained the right to operate schools up to the primary level in the mother tongues. There is also a constitutional provision that the state, while maintaining the cultural diversity of the country, shall pursue a policy of strengthening the national unity. For this purpose, His Majesty's Government had constituted a commission entitled National Language Policy Recommendations Committee in order to suggest recommendations to the Ministry of Education, Culture and Social Welfare about the policies and programmes related to language development, and the strategy to be taken while imparting primary education through the mother tongue. The working area and focus of the commission, constituted on May 27, 1993 (14th Jestha 2050 VS), was the development of the national languages and education through the mother tongue. This report, which considers the working area as well as some other relevant aspects, has been prepared over the past 11 months, prior to mid-April 1994 (the end of Chaitra 2050 VS), on the basis of the work plan prepared by the commission.