English-Bhojpuri SMT System: Insights

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Multilingual Education and Nepal Appendix 3

19. Skutnabb-Kangas, Tove and Mohanty, Ajit. Policy and Strategy for MLE in Nepal. Report by Tove Skutnabb-Kangas and Ajit Mohanty, consultancy visit 4-14 March 2009. Sanothimi, Bhaktapur: Multilingual Education Program for All Non-Nepali Speaking Students of Primary Schools of Nepal. Ministry of Education, Department of Education, Inclusive Section. See also In Press, for an updated version. List of Contents List of Appendices List of Tables List of Figures List of Abbreviations 1. Introduction: placing language in education issues in Nepal in a broader societal, economic and political framework 2. Broader Language Policy and Planning Perspectives and Issues 2.1. STEP 1 in Language Policy and Language Planning: Broad-based political debates about the goals of language 2.2. STEP 2 in Educational Language Policy and Language Planning: Realistic language proficiency goal/aim in relation to the baseline 2.3. STEP 3 in Educational Language Planning: ideal goals and prerequisites compared with characteristics of present schools 2.4. STEP 4 in Educational Language Planning: what has characterized programmes with high versus low success? 2.5. STEP 5 in Educational Language Planning: does it pay off to maintain ITM languages? 3. Scenarios 3.1. Introduction 3.2. Models with often harmful results: dominant-language-medium (subtractive assimilatory submersion) 3.3. Somewhat better but not good enough results: early-exit transitional models 3.4. Even better results: late-exit transitional models 3.5. Strongest form: self-evident mother tongue medium models with no transition 4. Experiences from Nepal: the situation today 5. Specific challenges in Nepal: implementation strategies 5.1. -

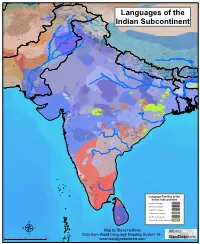

Map by Steve Huffman; Data from World Language Mapping System

[Map of the languages of Europe; only label text survives (language and country names such as Norwegian, Swedish, Faroese, Scottish Gaelic, Standard German, Polish and Ukrainian).]

Interference of Bhojpuri Language in Learning English As a Second Language

ELT VOICES – INDIA: INTERNATIONAL JOURNAL FOR TEACHERS OF ENGLISH, FEBRUARY 2014 | VOLUME 4, ISSUE 1 | ISSN 2230-9136 (PRINT) 2321-7170 (ONLINE)

Interference of Bhojpuri Language in Learning English as a Second Language. V. Radhika (Assistant Professor in English, AVIT, VMU, Paiyanoor, India).

ABSTRACT Studying the special features of any language is interesting for exploring many unrevealed facts about that language. The purpose of this paper is to create interest in the mind of readers to identify the special features of Bhojpuri language, which is gaining importance in India in the recent years among the spoken languages and make the readers to get rid of the problem of interference of this language in learning English as a second language.

1. Introduction The paper proposes to make an in-depth study of Bhojpuri language to enable learners to compare and contrast between two languages, Bhojpuri and English by which communicative skill in English can be properly perceived. Bhojpuri language has its own phonological and morphological pattern. The present study is concerned with tracing the difference between two languages (i.e.) English and Bhojpuri, with reference to their grammatical structure and its relevant information.

Aim of the Paper Create interest in the mind of scholars to make the detailed study about the Bhojpuri language with reference to their grammar pattern and to find out possible solution to eliminate the problem of mother tongue interference.

2. Origin of Bhojpuri Language Bhojpuri language gets its name from a place called ‘Bhojpur’ in Bihar. It is believed that Ujjain Rajputs claimed their descent from Raja Bhoj of Malwa in the sixteenth century. -

![Nepal Demographic and Health Survey 2006 [FR191]](https://docslib.b-cdn.net/cover/2463/nepal-demographic-and-health-survey-2006-fr191-1322463.webp)

Nepal Demographic and Health Survey 2006 [FR191]

Nepal Demographic and Health Survey 2006. Population Division, Ministry of Health and Population, Government of Nepal, Kathmandu, Nepal; New ERA, Kathmandu, Nepal; Macro International Inc., Calverton, Maryland, U.S.A. May 2007. The 2006 Nepal Demographic and Health Survey (2006 NDHS) is part of the worldwide MEASURE DHS project, which is funded by the United States Agency for International Development (USAID) under contract GPO-C-00-03-00002-00. The views expressed in this publication do not necessarily reflect the views of the United States Agency for International Development or the United States Government. Additional information about the 2006 NDHS may be obtained from Population Division, Ministry of Health and Population, Government of Nepal, Ramshahpath, Kathmandu, Nepal; Telephone: (977-1) 4262987; New ERA, P.O. Box 722, Kathmandu, Nepal; Telephone: (977-1) 4423176/4413603; Fax: (977-1) 4419562; E-mail: [email protected]. Additional information about the DHS project may be obtained from Macro International Inc., 11785 Beltsville Drive, Calverton, MD 20705 USA; Telephone: 301-572-0200, Fax: 301-572-0999, E-mail: [email protected], Internet: http://www.measuredhs.com. Recommended citation: Ministry of Health and Population (MOHP) [Nepal], New ERA, and Macro International Inc. 2007. Nepal Demographic and Health Survey 2006. Kathmandu, Nepal: Ministry of Health and Population, New ERA, and Macro International Inc. CONTENTS TABLES AND FIGURES -

Map by Steve Huffman; Data from World Language Mapping System

[Map: Languages of the Indian Subcontinent; only label text survives (language names such as Tajiki, Wakhi, Khowar, Kashmiri, Ladakhi, Western Panjabi, Garhwali, Kumaoni and Nepali).]

THE CONSTITUTION (AMENDMENT) BILL, 2015 By

AS INTRODUCED IN LOK SABHA. Bill No. 90 of 2015. THE CONSTITUTION (AMENDMENT) BILL, 2015. By SHRI ASHWINI KUMAR CHOUBEY, M.P.

A BILL further to amend the Constitution of India. BE it enacted by Parliament in the Sixty-sixth Year of the Republic of India as follows:—

1. Short title. This Act may be called the Constitution (Amendment) Act, 2015.

2. Amendment of the Eighth Schedule. In the Eighth Schedule to the Constitution,— (i) existing entries 1 and 2 shall be renumbered as entries 2 and 3, respectively, and before entry 2 as so renumbered, the following entry shall be inserted, namely:— "1. Angika."; (ii) after entry 3 as so renumbered, the following entry shall be inserted, namely:— "4. Bhojpuri."; and (iii) entries 3 to 22 shall be renumbered as entries 5 to 24, respectively.

STATEMENT OF OBJECTS AND REASONS Language is not only a medium of communication but also a sign of respect. Language also reflects on the history, culture, people, system of governance, ecology, politics, etc. 'Bhojpuri' language is also known as Bhozpuri, Bihari, Deswali and Khotla and is a member of the Bihari group of the Indo-Aryan branch of the Indo-European language family and is closely related to Magahi and Maithili languages. Bhojpuri language is spoken in many parts of north-central and eastern regions of this country. It is particularly spoken in the western part of the State of Bihar, north-western part of Jharkhand state and the Purvanchal region of Uttar Pradesh State. Bhojpuri language is spoken by over forty million people in the country. -

Dealing with Linguistic Divergences in English-Bhojpuri Machine Translation

Dealing with Linguistic Divergences in English-Bhojpuri Machine Translation Pitambar Behera*, Neha Maurya1 & Vandana Pandey2 *Centre for Linguistics & 12Dept. of Linguistics *Jawaharlal Nehru University & 12Banaras Hindu University New Delhi, Banaras India [pitambarbehera2, neha.mourya8, vandanapandey732]@gmail.com Abstract In Machine Translation, divergence is one of the major barriers which plays a deciding role in determining the efficiency of the system at hand. Translation divergences originate when there is structural discrepancies between the input and the output languages. It can be of various types based on the issues we are addressing to such as linguistic, cultural, communicative and so on. Owing to the fact that two languages owe their origin to different language families, linguistic divergences emerge. The present study attempts at categorizing different types of linguistic divergences: the lexical-semantic and syntactic. In addition, it also helps identify and resolve the divergent linguistic features between English as source language and Bhojpuri as target language pair. Dorr’s theoretical framework (1994, 1994a) has been followed in the classification and resolution procedure. Furthermore, so far as the methodology is concerned, we have adhered to the Dorr’s Lexical Conceptual Structure for the resolution of divergences. This research will prove to be beneficial for developing efficient MT systems if the mentioned factors are incorporated considering the inherent structural constraints between source and target languages. 1 Overview The terminology ‘divergence’ refers to the concept of structural or ‘parametric variation’ between a source language (SL) and a target language (TL) pair in Machine Translation (MT). In other words, it emerges when the decoded output content lacks ‘well-formedness’ because of the inherent linguistic constraints. -

Nepal Early Grade Reading Materials Assessment

NEPAL EARLY GRADE READING MATERIALS ASSESSMENT. A Report Submitted to United States Agency for International Development (USAID) Nepal, Maharajgunj, Kathmandu, Nepal. Submitted by Research Management Cell (RMC), Kathmandu Shiksha Campus (KSC), Ramshah Path, Kathmandu, Nepal. May 9, 2014.

Study Team: Core Team

| SN | Name of the Consultant | Position/Area of Expertise |
|---|---|---|
| 1 | Prof. Dr Prem Narayan Aryal | Team Leader and Early Childhood Development Expert |
| 2 | Prof. Dr Yogendra Prasad Yadava | Team Member and Linguist |
| 3 | Prof. Dr Ram Krishna Maharjan | Team Member and Educationist |
| 4 | Prof. Dr Hemang Raj Adhikari | Team Member and Nepali Language Subject Expert |
| 5 | Dr Sushan Acharya | Team Member and Gender, Child and Social Inclusion Expert |
| 6 | Mr Karnakhar Khatiwada | Team Member and Linguist |
| 7 | Mr Bhagwan Aryal | Team Member and Educationist |
| 8 | Mr Shatrughan Prasad Gupta | Project Manager and Educationist |

Advisory Input - Prof. Dr Basu Dev Kafle

Subject Experts (Evaluators)

| SN | Name of the Nepali Language Subject Expert | Language | Name of the Mother Tongue Language Subject Expert | Language |
|---|---|---|---|---|
| 1 | Prof. Dr Hemang Raj Adhikari | Nepali | Mr Surya Prasad Yadav | Maithili |
| 2 | Prof. Dr Madhav Bhattarai | Nepali | Mr Jeevan Kumar Maharjan | Nepal Bhasa |
| 3 | Prof. Dr Kedar Sharma | Nepali | Mr Padam Rai | Bantawa |
| 4 | Prof. Maheshwor Neupane | Nepali | Mr Purna Bahadur Gharti Magar | Athara Magarat |
| 5 | Prof. Dr Sanat Kumar Wasti | Nepali | Mr Jai Prakash Lal Srivastav | Awadhi |
| 6 | Dr Madhav Prasad Poudel | Nepali | Mr Khenpo Gyurme Tsultrim | Mugali |
| 7 | Mr Diwakar Dhungel | Nepali | Ms Bagdevi Rai | Chamling |
| 8 | Mr Ganesh Prasad Bhattarai | Nepali | Mr Krishna Raj Sarbahari | Tharu |
| 9 | Mr Hari Prasad Niraula | Nepali | Mr Rajesh Prasad Sah | Bajjika |
| 10 | Ms Rajni Dhimal | Nepali | Dr Lal-Shyãkarelu Rapacha | Sunuwar |

11 Prof. -

Home About Resources Bihar Rulers and Its History Bihar School of Yoga

7/2/2014 Bihar Matrimonial | Biharplus - Part 5 Home About Resources Bihar Rulers and Its History Bihar School of Yoga Celebs from Bihar Culture in the City Festivals of Bihar Chhath Puja 2012 Chhath Puja 2013 Chhath Puja Bihar 2011 Madhushravani festival of Mithilanchal Ganga Aarti Patna Gyan key baat History of Bihar Jobs in Bihar Languages of Bihar Angika language Bhojpuri language Magahi language Maithili language Vajjika – Bajjika language New Bihar Museum in Patna Patna City Bus Ride Route and Time People in the city Save Earth Search Listing का समझते हे बहार को Writers in Bihar Food & Entertainment Bihar Website Directory Career Guide http://www.biharplus.in/resources/bihar-matrimonial/page/5/ 1/31 7/2/2014 Bihar Matrimonial | Biharplus - Part 5 List Your business Bihar Talent Bihar Matrimonial Bihar Helpline Numbers News Bihar Events "एक ववाह ऐसा भी " 17th Junior National Sepaktakraw Championship Patna 2014 28-31st March 2014 19th varshik mahila udhyog mela 2014 at Sinha library patna 2nd Patna Literature Festival 2014 Aaj ka Mahabharat Bhojpuri Film Festival 2013 Bihar Diwas 2012 Bihar Diwas 2013 Bihar Diwas 2014 Bihar k Anna Bihar State Pavilion is Focus State in 31st IITF 2011 Bihar utsav 2011 – Patna and Delhi – 99th Bihar Foundation Day Blenders Pride Fashion Tour 2013 at Maurya Patna Brandvilla Health and Fitness Expo 2014 presented by Ruban Patliputra Hospital Cartoon Contest for Kids at Patna Zoo Dahi Handi Utsav in patna bihar on janmashtami day by brandvilla entertainment Destination Bihar Expo 2014 organised by BIA Bihar -

Covid19 Response Activities January

Antenna Foundation Nepal Covid19 Response Activities January - June 2020. Nepal's government imposed a lockdown on 23 March 2020, which has completely halted long-distance transportation services, international flights, and non-essential services in Nepal. People's lifestyles have changed abruptly, and concepts such as working from home and virtual meetings have become a new feature of personal and professional life. The Covid-19 lockdown has also affected AFN's ability to carry out its work; however, taking this situation as an opportunity, we have implemented the following activities in response to Covid-19. 1. Production of Nepal Chautari, a daily talk show. A media landscape survey conducted by ShareCast Initiative during the lockdown showed that radio listenership had increased. Radio is a trusted source of information. On the other hand, people are still deprived of information about Covid-19 safety and precautions. Having reviewed feedback from the FM partners' meeting and analysed the findings of the media survey, AFN decided to restart its popular daily talk show Nepal Chautari. Nepal Chautari resumed production and broadcast on May 31, 2020 and is now broadcast as a daily live show. It is a "live" radio talk show produced with the objective of raising public awareness and stakeholder accountability on contemporary issues prevalent in the nation in view of the Covid-19 pandemic. Nepal Chautari incorporates specialists' views and listeners' questions, answers and feedback. Audience members can put their comments, questions and feedback to the live talk show through a toll-free phone number, which encourages interaction between service providers and service seekers. -

Language Distinctiveness*

RAI data: Language distinctiveness*. Country profiles. *This document provides data production information for the RAI-Rokkan dataset. Last edited on October 7, 2020. Compiled by Gary Marks with research assistance by Noah Dasanaike. Citation: Liesbet Hooghe and Gary Marks (2016). Community, Scale and Regional Governance: A Postfunctionalist Theory of Governance, Vol. II. Oxford: OUP. Sarah Shair-Rosenfield, Arjan H. Schakel, Sara Niedzwiecki, Gary Marks, Liesbet Hooghe, Sandra Chapman-Osterkatz (2021). “Language difference and Regional Authority.” Regional and Federal Studies, Vol. 31. DOI: 10.1080/13597566.2020.1831476 Contents: Introduction; Albania; Argentina; Australia; Austria; Bahamas; Bangladesh -

List of Languages and the Countries Where Spoken

A

| Name of Language | Code | DfES Category Name | Country where spoken |
|---|---|---|---|
| Abakwariga | HAU | Hausa | Nigeria |
| Abyssinian | AMR | Amharic | Ethiopia |
| Accra | GAA | Ga | Ghana |
| Achanti | AKA | Akan (Twi/Fante) | Ghana |
| Achawa | YAO | Yao/Chiyao (East Africa) | Malawi, Mozambique, Tanzania, Zambia |
| Acholi | ACL | Acholi | Uganda |
| Acoli | ACL | Acholi | Uganda |
| Acooli | ACL | Acholi | Uganda |
| Acra | GAA | Ga | Ghana |
| Adal | AFA | Afar-Saho | Eritrea, Ethiopia |
| Adangme | ADA | Adangme | Ghana |
| Addo | EDO | Edo/Bini | Nigeria |
| Adiwasi | ORI | Oriya | India |
| Adsawa | YAO | Yao/Chiyao (East Africa) | Malawi, Mozambique, Tanzania, Zambia |
| Adsoa | YAO | Yao/Chiyao (East Africa) | Malawi, Mozambique, Tanzania, Zambia |
| Afan Oromo | ORM | Oromo | Ethiopia |
| Afar Af | AFA | Afar-Saho | Eritrea, Ethiopia |
| Afaraf | AFA | Afar-Saho | Eritrea, Ethiopia |
| Afar-Saho | AFA | Afar-Saho | Eritrea, Ethiopia |
| Af-Maxaad Tiri | SOM | Somali | Somalia |
| Afó | SID | Sidamo | Ethiopia |
| Afrikaans | AFK | Afrikaans | South Africa |
| Af-Soomaali | SOM | Somali | Somalia |
| Agar | DIN | Dinka/Jieng | Sudan |
| Agona | AKA | Akan (Twi/Fante) | Ghana |
| Ahafo | AKA | Akan (Twi/Fante) | Ghana |
| Aisorski | ASR | Assyrian/Aramaic | Iraq |
| Ajawa | YAO | Yao/Chiyao (East Africa) | Malawi, Mozambique, Tanzania, Zambia |
| Akan | AKA | Akan (Twi/Fante) | Ghana |
| Akoli | ACL | Acholi | Uganda |
| Akpese | KPE | Kpelle | Guinea, Liberia |
| Akuapem | AKA | Akan (Twi/Fante) | Ghana |
| Akuapim | AKA | Akan (Twi/Fante) | Ghana |
| Akwapi | AKA | Akan (Twi/Fante) | Ghana |
| Akyem Bosome | AKA | Akan (Twi/Fante) | Ghana |
| Albanian | ALB | Albanian/Shqip | Albania |
| Albannach Gaidhlig | GAL | Gaelic (Scotland) | Scotland |
| Alealum | MLM | Malayalam | India |
| Aloro | ALU | Alur | Democratic Republic of Congo |
| Alua | ALU | Alur | Democratic Republic of Congo |