Team-Fly® The SSCP® Prep Guide: Mastering the Seven Key Areas of System Security

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The First Americans the 1941 US Codebreaking Mission to Bletchley Park

United States Cryptologic History The First Americans The 1941 US Codebreaking Mission to Bletchley Park Special series | Volume 12 | 2016 Center for Cryptologic History David J. Sherman is Associate Director for Policy and Records at the National Security Agency. A graduate of Duke University, he holds a doctorate in Slavic Studies from Cornell University, where he taught for three years. He also is a graduate of the CAPSTONE General/Flag Officer Course at the National Defense University, the Intelligence Community Senior Leadership Program, and the Alexander S. Pushkin Institute of the Russian Language in Moscow. He has served as Associate Dean for Academic Programs at the National War College and while there taught courses on strategy, international relations, and intelligence. His other government assignments include ones as NSA’s representative to the Office of the Secretary of Defense, as Director for Intelligence Programs at the National Security Council, and on the staff of the National Economic Council. This publication presents a historical perspective for informational and educational purposes, is the result of independent research, and does not necessarily reflect a position of NSA/CSS or any other US government entity. This publication is distributed free by the National Security Agency. If you would like additional copies, please email [email protected] or write to: Center for Cryptologic History National Security Agency 9800 Savage Road, Suite 6886 Fort George G. Meade, MD 20755 Cover: (Top) Navy Department building, with Washington Monument in center distance, 1918 or 1919; (bottom) Bletchley Park mansion, headquarters of UK codebreaking, 1939 UNITED STATES CRYPTOLOGIC HISTORY The First Americans The 1941 US Codebreaking Mission to Bletchley Park David Sherman National Security Agency Center for Cryptologic History 2016 Second Printing Contents Foreword -

TivaWare Peripheral Driver Library User's Guide

TivaWare™ Peripheral Driver Library USER’S GUIDE SW-TM4C-DRL-UG-2.0 Copyright © 2006-2013 Texas Instruments Incorporated. All rights reserved. Tiva and TivaWare are trademarks of Texas Instruments. ARM and Thumb are registered trademarks and Cortex is a trademark of ARM Limited. Other names and brands may be claimed as the property of others. Please be aware that an important notice concerning availability, standard warranty, and use in critical applications of Texas Instruments semiconductor products and disclaimers thereto appears at the end of this document. Texas Instruments 108 Wild Basin, Suite 350 Austin, TX 78746 www.ti.com/tiva-c Revision Information This is version 2.0 of this document, last updated on August 29, 2013. Table of Contents: Copyright; Revision Information; 1 Introduction; 2 Programming Model; 2.1 Introduction; 2.2 Direct Register Access Model; 2.3 Software Driver Model; 2.4 Combining The Models; 3 Analog Comparator; 3.1 Introduction -

Seventy-Second Annual Report of the Association of Graduates of the United States Military Academy at West Point, New York, June

SEVENTY-SECOND ANNUAL REPORT of the Association of Graduates of the United States Military Academy at West Point, New York June 10, 1941 Printed by The Moore Printing Company, Inc. Newburgh, N. Y. Report of the 72nd Annual Meeting of the Association of Graduates, U. S. M. A. Held at West Point, N. Y., June 10, 1941 1. The meeting was called to order at 2:02 p. m. by McCoy '97, President of the Association. There were 225 present. 2. Invocation was rendered by the Reverend H. Fairfield Butt, III, Chaplain of the United States Military Academy. 3. The President presented Brigadier General Robert L. Eichelberger, '09, Superintendent, U. S. Military Academy, who addressed the Association (Appendix B). 4. It was moved and seconded that the reading of the report of the President be dispensed with, since that Report would later be published in its entirety in the 1941 Annual Report (Appendix A). The motion was passed. 5. It was moved and seconded that the reading of the Report of the Secretary be dispensed with, since that Report would later be published in its entirety in the 1941 Annual Report (Appendix C). The motion was passed. 6. It was moved and seconded that the reading of the Report of the Treasurer be dispensed with, since that Report would later be published in its entirety in the 1941 Annual Report (Appendix D). -

The Dawn of American Cryptology, 1900–1917

United States Cryptologic History The Dawn of American Cryptology, 1900–1917 Special Series | Volume 7 Center for Cryptologic History David Hatch is technical director of the Center for Cryptologic History (CCH) and is also the NSA Historian. He has worked in the CCH since 1990. From October 1988 to February 1990, he was a legislative staff officer in the NSA Legislative Affairs Office. Previously, he served as a Congressional Fellow. He earned a B.A. degree in East Asian languages and literature and an M.A. in East Asian studies, both from Indiana University at Bloomington. Dr. Hatch holds a Ph.D. in international relations from American University. This publication presents a historical perspective for informational and educational purposes, is the result of independent research, and does not necessarily reflect a position of NSA/CSS or any other US government entity. This publication is distributed free by the National Security Agency. If you would like additional copies, please email [email protected] or write to: Center for Cryptologic History National Security Agency 9800 Savage Road, Suite 6886 Fort George G. Meade, MD 20755 Cover: Before and during World War I, the United States Army maintained intercept sites along the Mexican border to monitor the Mexican Revolution. Many of the intercept sites consisted of radio-mounted trucks, known as Radio Tractor Units (RTUs). Here, the staff of RTU 33, commanded by Lieutenant Main, on left, pose for a photograph on the US-Mexican border (n.d.). United States Cryptologic History Special Series | Volume 7 The Dawn of American Cryptology, 1900–1917 David A. -

LONG BRANCH! John

Board Backs Money-Saving Plan SEE STORY BELOW Snow, Mild Light snow mixed with rain or sleet and mild today. Clearing tonight. Fair tomorrow. Details, 3D. Red Bank, Freehold, Long Branch FINAL EDITION Monmouth County's Home Newspaper for 90 Years VOL. 91, NO. 167 RED BANK, N. J., THURSDAY, FEBRUARY 20, 1969 40 PAGES 10 CENTS U.S., Israel Request Protection of Planes By ASSOCIATED PRESS Israel's hawks today held in abeyance their demands for retaliation for the latest Arab attack on an Israeli airliner. They awaited the outcome of calls from their government and that of the United States for international action to protect civil avi- ... of Science last night that Israelis must be restrained and let the diplomats fight their battles for the moment. But reliable sources said he warned Israel's Arab neighbors: "We regard all our neighboring states as responsible ... bound to any particular target." Dayan had just come from a top-secret ministerial security meeting at the home of Premier Levi Eshkol. "We'll let the Arabs stew for a while," said one lead Israeli commentator. "All their governments are cer- ... Lebanon denied any part in the Arab commando attack. "From the information announced about the operation," Lebanese Premier Rashid Karami said, "there can be no excuse to deduce that any responsibility for it falls on us." Karami's statement was is- ... sons on the plane were wounded and one guerrilla was killed. Carmel, speaking to Israel's Parliament yesterday, cited the publication in Beirut of a statement by the Popular Front for the Liberation of Palestine claiming responsibility for the Zurich attack. -

Sakura: a Flexible Coding for Tree Hashing

Sakura: a flexible coding for tree hashing Guido Bertoni (1), Joan Daemen (1), Michaël Peeters (2), and Gilles Van Assche (1). (1) STMicroelectronics (2) NXP Semiconductors Abstract. We propose a flexible, fairly general, coding for tree hash modes. The coding does not define a tree hash mode, but instead specifies a way to format the message blocks and chaining values into inputs to the underlying function for any topology, including sequential hashing. The main benefit is to avoid input clashes between different tree growing strategies, even before the hashing modes are defined, and to make the SHA-3 standard tree-hashing ready. Keywords: hash function, tree hashing, indifferentiability, SHA-3 1 Introduction A hashing mode can be seen as a recipe for computing digests over messages by means of a number of calls to an underlying function. This underlying function may be a fixed-input-length compression function, a permutation or even a hash function in its own right. We use the term inner function and symbol f for the underlying function and the term outer hash function and symbol F for the function obtained by applying the hashing mode to the inner function. The hashing mode splits the message into substrings that are assembled into inputs for the inner function, possibly combined with one or more chaining values and so-called frame bits. Such an input to f is called a node [6]. The chaining values are the results of calls to f for other nodes. Hashing modes serve two main purposes. The first is to build a variable-input-length hash function from a fixed-input-length inner function and the second is to build a tree hash function. -
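The notions of inner function, node, chaining value and frame bits in the excerpt above can be illustrated with a small two-level tree hash. This is only a sketch under stated assumptions: it is not the coding proposed in the paper, the frame bytes `LEAF` and `FINAL`, the function names and the block size are hypothetical choices made for illustration, SHA3-256 merely stands in for the inner function f, and the construction has not been vetted for security.

```python
import hashlib

# Hypothetical frame bytes (assumptions, not taken from the paper):
LEAF = b"\x00"   # appended to every leaf node
FINAL = b"\x01"  # appended to the final (root) node


def inner(node: bytes) -> bytes:
    """Inner function f; SHA3-256 is used here purely as a stand-in."""
    return hashlib.sha3_256(node).digest()


def tree_hash(message: bytes, block_size: int = 1024) -> bytes:
    """Outer hash function F: a two-level tree built on top of f."""
    # Split the message into substrings; an empty message yields one empty block.
    blocks = [message[i:i + block_size]
              for i in range(0, len(message), block_size)] or [b""]
    # Each leaf node is a message block plus a frame byte; f(node) is a chaining value.
    chaining_values = [inner(block + LEAF) for block in blocks]
    # The final node concatenates the chaining values and carries its own frame byte.
    return inner(b"".join(chaining_values) + FINAL)


if __name__ == "__main__":
    print(tree_hash(b"x" * 5000).hex())
```

The distinct frame bytes are what keep a leaf input from being confused with a final-node input, which is the kind of input clash the excerpt mentions. The leaf hashes are independent of one another, so they could be computed in parallel.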

Secure Volunteer Computing for Distributed Cryptanalysis

Nils Kopal Secure Volunteer Computing for Distributed Cryptanalysis ISBN 978-3-7376-0426-0 kassel university press “With magic, you can turn a frog into a prince. With science, you can turn a frog into a Ph.D. and you still have the frog you started with.” Terry Pratchett Abstract Volunteer computing offers researchers and developers the possibility to distribute their huge computational jobs to the computers of volunteers. So, most of the overall cost for computational power and maintenance is spread across the volunteers. This makes it possible to gain computing resources that otherwise only expensive grids, clusters, or supercomputers offer. Most volunteer computing solutions are based on a client-server model. The server manages the distribution of subjobs to the computers of volunteers, the clients, which in turn compute the subjobs and return the results to the server. The Berkeley Open Infrastructure for Network Computing (BOINC) is the most used middleware for volunteer computing. A drawback of any client-server architecture is the server being the single point of control and failure. To get rid of the single point of failure, we developed different distribution algorithms (epoch distribution algorithm, sliding-window distribution algorithm, and extended epoch distribution algorithm) based on unstructured peer-to-peer networks. These algorithms enable the researchers and developers to create volunteer computing networks without any central server. -

Keccak, More Than Just SHA3SUM

Keccak, More Than Just SHA3SUM Guido Bertoni (1), Joan Daemen (1), Michaël Peeters (2), Gilles Van Assche (1). (1) STMicroelectronics (2) NXP Semiconductors FOSDEM 2013, Brussels, February 2-3, 2013 Outline 1 How it all began 2 Introducing Keccak 3 More than just SHA3SUM 4 Inside Keccak 5 Keccak and the community How it all began Let’s talk about hash functions... ??? These are “hashes” of some sort, but they ain’t hash functions... Cryptographic hash functions $h : \{0,1\}^* \to \{0,1\}^n$ Input message Digest MD5: $n = 128$ (Ron Rivest, 1992) SHA-1: $n = 160$ (NSA, NIST, 1995) SHA-2: $n \in \{224, 256, 384, 512\}$ (NSA, NIST, 2001) Why should you care? You probably use them several times a day: website authentication, digital signature, home banking, secure internet connections, software integrity, version control software, … Breaking news in crypto 2004: SHA-0 broken (Joux et al.) 2004: MD5 broken (Wang et al.) 2005: practical attack on MD5 (Lenstra et al., and Klima) 2005: SHA-1 theoretically broken (Wang et al.) 2006: SHA-1 broken further (De Cannière and Rechberger) 2007: NIST calls for SHA-3 Who answered NIST’s call? Keccak Team to the rescue! The battlefield EDON-R BMW Sgàil LANE Grøstl ZK-Crypt Keccak Maraca NKS2D Hamsi MeshHash MD6 Waterfall StreamHash ECOH EnRUPT Twister Abacus MCSSHA3 WaMM Ponic -
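The digest lengths n quoted in the slides above can be checked with Python's standard `hashlib` module. This is a minimal sketch: the helper name `digest_bits` is an assumption made for illustration, and on some restricted builds (for example FIPS-only configurations) MD5 may be unavailable.

```python
import hashlib


def digest_bits(name: str) -> int:
    """Return the digest length n, in bits, of a fixed-output hash function."""
    return hashlib.new(name).digest_size * 8


if __name__ == "__main__":
    # Algorithm names as understood by hashlib; sha3_256 is included for comparison.
    for name in ("md5", "sha1", "sha224", "sha256", "sha384", "sha512", "sha3_256"):
        print(f"{name}: n = {digest_bits(name)}")
```

The input message may have any length, while the digest length is fixed by the choice of function, which is what the mapping from arbitrary bit strings to n-bit strings expresses.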

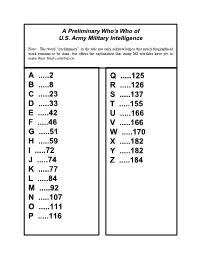

A Preliminary Who's Who of US Army Military Intelligence

A Preliminary Who’s Who of U.S. Army Military Intelligence Note: The word preliminary in the title not only acknowledges that much biographical work remains to be done, but offers the explanation that many MI worthies have yet to make their final contribution. A Aaron, Harold R. Lt. Gen. [Member of MI Hall of Fame. Aaron Plaza dedicated at Fort Huachuca on 2 July 1992.] U.S. Military Academy Class of June 1943. Distinguished service in special operations and intelligence assignments. Commander, 5th Special Forces Group, Republic of Vietnam; Deputy Chief of Staff, Intelligence, U.S. Army, Europe. Assistant Chief of Staff, Intelligence, Department of the Army; Deputy Director, Defense Intelligence Agency. Extract from Register of Graduates, U.S. Military Academy, 1980: Born in Indiana, 21 June 1921; Infantry; Company Commander, 259 Infantry, Theater Army Europe, 1944 to 1945 (two Bronze Star Medals-Combat Infantry Badge-Commendation Ribbon-Purple Heart); Command and General Staff College, 1953; MA Gtwn U, 1960; Office of Assistant Secretary of Defense, 1961 to 1963; National War College, 1964; PhD Georgetown Univ, 1964; Aide-de-Camp to CG, 8th Army, 1964 to 1965; Office of the Joint Chiefs of Staff, 1965 to 1967; (Legion of Merit); Commander, 1st Special Forces Group, 1967 -

The Cultural Contradictions of Cryptography: a History of Secret Codes in Modern America

The Cultural Contradictions of Cryptography: A History of Secret Codes in Modern America Charles Berret Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy under the Executive Committee of the Graduate School of Arts and Sciences Columbia University 2019 © 2018 Charles Berret All rights reserved Abstract The Cultural Contradictions of Cryptography Charles Berret This dissertation examines the origins of political and scientific commitments that currently frame cryptography, the study of secret codes, arguing that these commitments took shape over the course of the twentieth century. Looking back to the nineteenth century, cryptography was rarely practiced systematically, let alone scientifically, nor was it the contentious political subject it has become in the digital age. Beginning with the rise of computational cryptography in the first half of the twentieth century, this history identifies a quarter-century gap beginning in the late 1940s, when cryptography research was classified and tightly controlled in the US. Observing the reemergence of open research in cryptography in the early 1970s, a course of events that was directly opposed by many members of the US intelligence community, a wave of political scandals unrelated to cryptography during the Nixon years also made the secrecy surrounding cryptography appear untenable, weakening the official capacity to enforce this classification. Today, the subject of cryptography remains highly political and adversarial, with many proponents gripped by the conviction that widespread access to strong cryptography is necessary for a free society in the digital age, while opponents contend that strong cryptography in fact presents a danger to society and the rule of law. -

Codebreakers

Some of the things you will learn in THE CODEBREAKERS • How secret Japanese messages were decoded in Washington hours before Pearl Harbor. • How German codebreakers helped usher in the Russian Revolution. • How John F. Kennedy escaped capture in the Pacific because the Japanese failed to solve a simple cipher. • How codebreaking determined a presidential election, convicted an underworld syndicate head, won the battle of Midway, led to cruel Allied defeats in North Africa, and broke up a vast Nazi spy ring. • How one American became the world's most famous codebreaker, and another became the world's greatest. • How codes and codebreakers operate today within the secret agencies of the U.S. and Russia. • And incredibly much more. "For many evenings of gripping reading, no better choice can be made than this book." —Christian Science Monitor THE Codebreakers The Story of Secret Writing By DAVID KAHN (abridged by the author) A SIGNET BOOK from NEW AMERICAN LIBRARY TIMES MIRROR Copyright © 1967, 1973 by David Kahn All rights reserved. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording or by any information storage and retrieval system, without permission in writing from the publisher. For information address The Macmillan Company, 866 Third Avenue, New York, New York 10022. Library of Congress Catalog Card Number: 63-16109 Crown copyright is acknowledged for the following illustrations from Great Britain's Public Record Office: S.P. 53/18, no. 55, the Phelippes forgery, and P.R.O. 31/11/11, the Bergenroth reconstruction. -

Vernam, Mauborgne, and Friedman: the One-Time Pad and the Index of Coincidence

Vernam, Mauborgne, and Friedman: The One-Time Pad and the Index of Coincidence Steven M. Bellovin https://www.cs.columbia.edu/~smb CUCS-014-14 Abstract The conventional narrative for the invention of the AT&T one-time pad was related by David Kahn. Based on the evidence available in the AT&T patent files and from interviews and correspondence, he concluded that Gilbert Vernam came up with the need for randomness, while Joseph Mauborgne realized the need for a non-repeating key. Examination of other documents suggests a different narrative. It is most likely that Vernam came up with the need for non-repetition; Mauborgne, though, apparently contributed materially to the invention of the two-tape variant. Furthermore, there is reason to suspect that he suggested the need for randomness to Vernam. However, neither Mauborgne, Herbert Yardley, nor anyone at AT&T really understood the security advantages of the true one-time tape. Col. Parker Hitt may have; William Friedman definitely did. Finally, we show that Friedman’s attacks on the two-tape variant likely led to his invention of the index of coincidence, arguably the single most important publication in the history of cryptanalysis. 1 Introduction The one-time pad as we know it today is generally credited to Gilbert Vernam and Joseph O. Mauborgne [22]. (I omit any discussion of whether or not the earlier work by Miller had some influence [2]; it is not relevant to this analysis.) There were several essential components to the invention: • Online encryption, under control of a paper tape containing the key.