POLITECNICO DI TORINO Repository ISTITUZIONALE

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Download Legal Document

Case 1:10-cv-00436-RMC Document 49-3 Filed 08/09/13 Page 1 of 48 IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF COLUMBIA AMERICAN CIVIL LIBERTIES UNION, et al., Plaintiffs, v. CENTRAL INTELLIGENCE AGENCY, Defendant. Civil Action No. 1:10-cv-00436 RMC DECLARATION OF AMY E. POWELL I, Amy E. Powell, declare as follows: 1. I am a trial attorney at the United States Department of Justice, representing the Defendant in the above-captioned matter. I am competent to testify as to the matters set forth in this declaration. 2. Attached to this declaration as Exhibit A is a true and correct copy of a document entitled "Attorney General Eric Holder Speaks at Northwestern University School of Law," dated March 5, 2012, as retrieved on August 9, 2013 from http://www.justice.gov/iso/opa/ag/speeches/2012/ag-speech-1203051.html. 3. Attached to this declaration as Exhibit B is a true and correct copy of a Transcript of Remarks by John O. Brennan on April 30, 2012, as copied on August 9, 2013 from http://www.wilsoncenter.org/event/the-efficacy-and-ethics-us-counterterrorism-strategy/ with some formatting changes by the undersigned in order to enhance readability. 4. Attached to this declaration as Exhibit C is a true and correct copy of a letter to the Chairman of the Senate Judiciary Committee dated May 22, 2013, as retrieved on August 9, 2013 from http://www.justice.gov/slideshow/AG-letter-5-22-13.pdf. -

Basic Attributes of the ČSSD and ODS Brands in Election Campaigns, 2002-2013

MASARYK UNIVERSITY Faculty of Social Studies Department of Political Science Mgr. Miloš Gregor Základní atributy značek ČSSD a ODS 2002–2013 ve volebních kampaních (Basic Attributes of the ČSSD and ODS Brands in Election Campaigns, 2002–2013) Doctoral dissertation Supervisor: doc. PhDr. Stanislav Balík, Ph.D. Brno 2016 Declaration I declare that I wrote the submitted dissertation Základní atributy značek ČSSD a ODS 2002–2013 ve volebních kampaních independently and that in writing it I used only the sources listed in the bibliography. Part of chapter 2.1 is based on the text Klasické koncepty v politickém marketingu, which was published in the book Chytilek, R. et al. 2012. Teorie a metody politického marketingu, Brno: CDK. In chapter 5.3.4 I draw on the chapter Předvolební kampaně 2013: prohloubení trendů, nebo nástup nových?, which I wrote jointly with Mgr. et Mgr. Alena Macková. That chapter was published in the book Havlík, V. (Ed.) 2014. Volby do Poslanecké sněmovny 2013, Brno: Munipress. Parts of chapters 3.9.1, 4.3 and 6.6 are based on my text Where Have All the Pledges Gone: An Analysis of ČSSD and ODS Manifesto Promises since 2002, which will be published in Politologický časopis in 2017. The texts were linguistically edited and shortened for the purposes of this dissertation. The adopted texts make up approximately 5% of the submitted dissertation. Brno, 21 November 2016 Miloš Gregor Co-author's consent to the use of the results of joint work I consent to Miloš Gregor using the text Předvolební kampaně 2013: prohloubení trendů, nebo nástup nových?, which we wrote jointly and which was published in the book Havlík, V. -

AAUW IOWA CONNECTOR March 2011 An

AAUW IOWA CONNECTOR March 2011 An Electronic Bulletin for AAUW Leaders in Iowa You are invited to share this information with other branch leaders and members by forwarding it to them or providing hard copy. Sandra Keist Wilson, President AAUW IA Happy St. Pat’s Day to all! April Showers bring State Conference! April 29-30 is the Annual Meeting and “Grow Your Branch, Grow Yourself” Conference Dates. Clarion Inn, Cedar Falls Gallagher Bluedorn Bldg. on UNI Campus The Initiative Newsletter will be in the mail the end of the week with all the particulars. Be sure to read it from beginning to end. If you can’t wait, an on-line copy along with the registration form is on the website, www.aauwiowa.org. The conference program will give you hands-on experience and ideas on how to grow your branch and yourself using education, advocacy, and opportunity for community partners. Remember, working together gets more done than working alone. Come and be inspired for branch programming for 2011-12. Breaking through Barriers-Advocating for Change AAUW Convention 2011 Washington DC June 16-19 How exciting to be in DC. There are opportunities to attend a party at an embassy, visit the capital, participate in a service project, and enjoy an inspiring conference program. I hope many of you have an opportunity to attend. Some branches offer a conference scholarship to help cover some of the costs; members who attend return full of enriching experiences to share, ideas for programming, research to make use of, and issues for advocacy. Attendees grow within and show greater interest in leadership roles in their branch and state. -

Lecture Delivered by Laurie Oakes at Curtin University on September 25, 2014.

(Lecture delivered by Laurie Oakes at Curtin University on September 25, 2014.) Will they still need us? Will they still feed us? Political journalism in the digital age. Good evening. It’s a genuine privilege to be here to help celebrate 40 years of journalism at Curtin University. Journalism matters in our society. It’s fundamental to the operation of our democratic system. It’s a noble craft. Maybe more than that. I came across an interesting line in a Huffington Post article recently. It read: “Someone very sexy once told me journalism is a sexy profession.” I’d have to say I can’t quite see it myself, but maybe I’m wrong. If journalism WAS sexy it might help to answer a question that’s been puzzling me. Why are so many really bright young people still enthusiastic about studying journalism when career prospects these days are so uncertain? Why does including the word “journalism” in the title of a course apparently continue to guarantee bums on seats? I’m pleased that people still want to be journalists. I can’t imagine a better job. But it must be obvious to everyone by now that we’re producing many more journalism graduates than are ever likely to find jobs in the news business in its present state. A well-known American academic and commentator on media issues, Clay Shirky from New York University, has attacked in quite stark terms people who encourage false hope among young would-be journalists at a time when so much of the industry is battling for survival. -

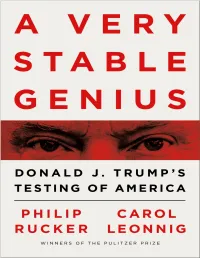

A Very Stable Genius at That!” Trump Invoked the “Stable Genius” Phrase at Least Four Additional Times

PENGUIN PRESS An imprint of Penguin Random House LLC penguinrandomhouse.com Copyright © 2020 by Philip Rucker and Carol Leonnig Penguin supports copyright. Copyright fuels creativity, encourages diverse voices, promotes free speech, and creates a vibrant culture. Thank you for buying an authorized edition of this book and for complying with copyright laws by not reproducing, scanning, or distributing any part of it in any form without permission. You are supporting writers and allowing Penguin to continue to publish books for every reader. Library of Congress Control Number: 2019952799 ISBN 9781984877499 (hardcover) ISBN 9781984877505 (ebook) Cover design by Darren Haggar Cover photograph: Pool / Getty Images To John, Elise, and Molly: you are my everything. To Naomi and Clara Rucker CONTENTS Title Page Copyright Dedication Authors’ Note Prologue PART ONE One. BUILDING BLOCKS Two. PARANOIA AND PANDEMONIUM Three. THE ROAD TO OBSTRUCTION Four. A FATEFUL FIRING Five. THE G-MAN COMETH PART TWO Six. SUITING UP FOR BATTLE Seven. IMPEDING JUSTICE Eight. A COVER-UP Nine. SHOCKING THE CONSCIENCE Ten. UNHINGED Eleven. WINGING IT PART THREE Twelve. SPYGATE Thirteen. BREAKDOWN Fourteen. ONE-MAN FIRING SQUAD Fifteen. CONGRATULATING PUTIN Sixteen. A CHILLING RAID PART FOUR Seventeen. HAND GRENADE DIPLOMACY Eighteen. THE RESISTANCE WITHIN Nineteen. SCARE-A-THON Twenty. AN ORNERY DIPLOMAT Twenty-one. GUT OVER BRAINS PART FIVE Twenty-two. AXIS OF ENABLERS Twenty-three. LOYALTY AND TRUTH Twenty-four. THE REPORT Twenty-five. THE SHOW GOES ON EPILOGUE Acknowledgments Notes Index About the Authors AUTHORS’ NOTE Reporting on Donald Trump’s presidency has been a dizzying journey. Stories fly by every hour, every day. -

The Obama Administration and the Press Leak Investigations and Surveillance in Post-9/11 America

The Obama Administration and the Press: Leak investigations and surveillance in post-9/11 America By Leonard Downie Jr. with reporting by Sara Rafsky A special report of the Committee to Protect Journalists U.S. President Barack Obama came into office pledging open government, but he has fallen short of his promise. Journalists and transparency advocates say the White House curbs routine disclosure of information and deploys its own media to evade scrutiny by the press. Aggressive prosecution of leakers of classified information and broad electronic surveillance programs deter government sources from speaking to journalists. Barack Obama leaves a press conference in the East Room of the White House August 9. (AFP/Saul Loeb) Published October 10, 2013 WASHINGTON, D.C. In the Obama administration’s Washington, government officials are increasingly afraid to talk to the press. Those suspected of discussing with reporters anything that the government has classified as secret are subject to investigation, including lie-detector tests and scrutiny of their telephone and e-mail records. An “Insider Threat Program” being implemented in every government department requires all federal employees to help prevent unauthorized disclosures of information by monitoring the behavior of their colleagues. Six government employees, plus two contractors including Edward Snowden, have been subjects of felony criminal prosecutions since 2009 under the 1917 Espionage Act, accused of leaking classified information to the press, compared with a total of three such prosecutions in all previous U.S. -

Executive Order -- Blocking Property of Certain Persons Contributing to the Situation in Ukraine | the White House

The White House Office of the Press Secretary For Immediate Release March 06, 2014 Executive Order -- Blocking Property of Certain Persons Contributing to the Situation in Ukraine EXECUTIVE ORDER - - - - - - - BLOCKING PROPERTY OF CERTAIN PERSONS CONTRIBUTING TO THE SITUATION IN UKRAINE By the authority vested in me as President by the Constitution and the laws of the United States of America, including the International Emergency Economic Powers Act (50 U.S.C. 1701 et seq.) (IEEPA), the National Emergencies Act (50 U.S.C. 1601 et seq.) (NEA), section 212(f) of the Immigration and Nationality Act of 1952 (8 U.S.C. 1182(f)), and section 301 of title 3, United States Code, I, BARACK OBAMA, President of the United States of America, find that the actions and policies of persons -- including persons who have asserted governmental authority in the Crimean region without the authorization of the Government of Ukraine -- that undermine democratic processes and institutions in Ukraine; threaten its -

Spygate Exposed

SPYGATE EXPOSED The Failed Conspiracy Against President Trump Svetlana Lokhova DEDICATION I dedicate this book to the loyal men and women of the security services who work tirelessly to protect us from terrorism and other threats. Your constant vigilance allows us, your fellow citizens, to live our lives in safety. And to every American citizen who, whether they know it or not, almost lost their birthright: government of the people, by the people, for the people, at the hands of a small group of men who sought to see such freedom perish from the earth. God Bless Us All. © Svetlana Lokhova No part of this book may be reproduced, stored in a retrieval system, or transmitted by any means without the written permission of the author. Svetlana Lokhova www.spygate-exposed.com Table of Contents Introduction; Chapter One: “Much Ado about Nothing”; Chapter Two: Meet the Spy; Chapter Three: Your Liberty under Threat; Chapter Four: Blowhard; Chapter Five: Dr. Ray S. Cline; Chapter Six: Foreign Affairs; Chapter Seven: The “Cambridge Club”; Chapter Eight: All’s Quiet; Chapter Nine: Motive; Chapter Ten: Means and Opportunity; Chapter Eleven: Follow the Money; Chapter Twelve: Treasonous Path; Chapter Thirteen: “Nothing Is Coincidence When It Comes to Halper”; Chapter Fourteen: Puzzles; Chapter Fifteen: Three Sheets to the Wind; Chapter Sixteen: Director Brennan; Chapter Seventeen: Poisonous Pens; Chapter Eighteen: Media Storm; Chapter Nineteen: President Trump Triumphant!; Conclusion: “I Am Not a Russian Spy”. What happened to the President of the United States was one of the greatest travesties in American history. -

New and Updated Content

March 21, 2014 Champions for Coverage: We’re only 10 days away from the end of the Marketplace Open Enrollment! Thank you for your tremendous work in ensuring that individuals and families in your community are informed about their health care options under the Health Insurance Marketplace. Your robust outreach efforts have contributed to the 5 million enrollment milestone we announced this week. We could not do this without your support. In addition to the blog link above, please read along for important information for consumers, updated materials, social marketing resources, and recent CMS announcements. Yes, we still want to hear from you! As always, please keep sharing your Marketplace success stories of education, outreach and/or enrollment in your communities by emailing us at [email protected]. Sharing promising practices from the field will help each organization further its education goals. We also welcome you to share anecdotes from individual consumers who have successfully signed up for coverage. If you are a Certified Application Counselor (CAC), please send your enrollment-specific questions to [email protected]. Please remember to include your CAC designation number in the email subject line. If you are not a Certified Application Counselor (CAC) but have enrollment-specific questions, please call the Marketplace Call Center at 1-800-318-2596 or go to HealthCare.gov to find additional information. Please note that consumers who need help reporting a change to the Marketplace can call the Call Center at any point. New and Updated Content Please see the two links below that discuss the exemptions from the Health Insurance Marketplace Fee. -

2011 Best Practices for Government Libraries E-Initiatives and E-Efforts: Expanding Our Horizons

2011 Best Practices for Government Libraries e-Initiatives and e-Efforts: Expanding our Horizons Compiled and edited by Marie Kaddell, M.L.S., M.S., M.B.A. Senior Information Professional Consultant LexisNexis BEST PRACTICES 2011 TABLE OF CONTENTS EMBRACING NEW AVENUES OF COMMUNICATION Blogging at the Largest Law Library in the World Christine Sellers, Legal Reference Specialist, and Andrew Weber, Legislative Information Systems Manager, Law Library of Congress Page 15 “Friended” by the Government? A Look at How Social Networking Tools Are Giving Americans Greater Access to Their Government. Kate Follen, MLS, President, Monroe Information Services Page 19 Podcasts Get Information Junkies their Fix Chris Vestal, Supervisory Patent Researcher with ASRC Management Services, U.S. Patent and Trademark Office and DC/SLA’s 2011 Communication Secretary Page 27 Getting the Most from Social Media from the Least Investment of Time and Energy Tammy Garrison, MLIS, Digitization Librarian at the Combined Arms Research Library at Fort Leavenworth, KS Page 30 Thinking Outside the Email Box: A New E-Newsletter for the Justice Libraries Kate Lanahan, Law Librarian, and Jennifer L. McMahan, Supervisory Librarian, U.S. Department of Justice Page 35 Bill’s Bulletin: Librarians and Court Staff Working Together to Develop an E-Resource Barbara Fritschel, U.S. Courts Library, Milwaukee, WI Page 37 Proletariat’s Speech: Foreign Language Learning with a Common Touch Janice P. Fridie, Law Librarian, U.S. Department of Justice Page 39 Social Media -

Julian Mather Is a World-Class Videographer Whose Only Camera Is a Smartphone

GET VIDEO SMART: USE YOUR SMARTPHONE TO MAKE SMART VIDEOS THAT BOOST BUSINESS. JULIAN MATHER. COULD YOU PRESENT A COMPELLING VIDEO RIGHT NOW IF YOU HAD TO? The expectation that businesses know how to present and make videos is upon us. By 2021, four out of five of your customers’ interactions with their phones will involve video. As we are already addicted to our phones - more people on the planet own a phone than a toothbrush - you need to be making videos or your business WILL become invisible. But wait. The way to make business videos has changed. You no longer have to outsource. Everything you need is in the palm of your hand... literally. This book will fast-track your business into the video age by showing how to use your SMARTphone - that pocket-sized TV studio sitting idle on everyone’s desk - to make SMART videos that will boost business, generate leads, improve confidence among your team and blow customer satisfaction through the roof. JULIAN MATHER IS A WORLD-CLASS VIDEOGRAPHER WHOSE ONLY CAMERA IS A SMARTPHONE. He spent 25 years behind the camera for ABC TV, National Geographic, Discovery, BBC and 10 years in front presenting 1000 online videos, racking up 30 million views and 140,000 subscribers. HIS JOURNEYS FROM BEHIND THE CAMERA TO IN FRONT OF IT, FROM PUBLIC SERVANT TO ENTREPRENEUR, FROM STUTTERER TO PROFESSIONAL SPEAKER UNIQUELY POSITION JULIAN TO GUIDE YOU ON YOUR JOURNEY TO GETTING VIDEO SMART. 'If anyone can show you how to use video to tell your story it's Julian' DEBORAH FLEMING. Exceed customer expectations. Make your business visible. Introduce new products. Speak to younger audiences. Improve confidence. Get more leads. -

Presidential Obstruction of Justice: the Case of Donald J. Trump

PRESIDENTIAL OBSTRUCTION OF JUSTICE: THE CASE OF DONALD J. TRUMP 2ND EDITION AUGUST 22, 2018 BARRY H. BERKE, NOAH BOOKBINDER, AND NORMAN L. EISEN* * Barry H. Berke is co-chair of the litigation department at Kramer Levin Naftalis & Frankel LLP (Kramer Levin) and a fellow of the American College of Trial Lawyers. He has represented public officials, professionals and other clients in matters involving all aspects of white-collar crime, including obstruction of justice. Noah Bookbinder is the Executive Director of Citizens for Responsibility and Ethics in Washington (CREW). Previously, Noah has served as Chief Counsel for Criminal Justice for the United States Senate Judiciary Committee and as a corruption prosecutor in the United States Department of Justice’s Public Integrity Section. Ambassador (ret.) Norman L. Eisen, a senior fellow at the Brookings Institution, was the chief White House ethics lawyer from 2009 to 2011 and before that, defended obstruction and other criminal cases for almost two decades in a D.C. law firm specializing in white-collar matters. He is the chair and co-founder of CREW. We are grateful to Sam Koch, Conor Shaw, Michelle Ben-David, Gabe Lezra, and Shaked Sivan for their invaluable assistance in preparing this report. The Brookings Institution is a nonprofit organization devoted to independent research and policy solutions. Its mission is to conduct high-quality, independent research and, based on that research, to provide innovative, practical recommendations for policymakers and the public. The conclusions and recommendations of any Brookings publication are solely those of its author(s), and do not reflect the views of the Institution, its management, or its other scholars.