Vision-Based Displacement Measurement Sensor Using Modified Taylor Approximation Approach

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Huishang Bank Corporation Limited* 徽 商 銀 行 股 份 有 限

Hong Kong Exchanges and Clearing Limited and The Stock Exchange of Hong Kong Limited take no responsibility for the contents of this announcement, make no representation as to its accuracy or completeness and expressly disclaim any liability whatsoever for any loss howsoever arising from or in reliance upon the whole or any part of the contents of this announcement. Huishang Bank Corporation Limited* 徽商銀行股份有限公司* (A joint stock company incorporated in the People’s Republic of China with limited liability) (Stock Code: 3698 and 4608 (Preference shares)) 2017 INTERIM RESULTS ANNOUNCEMENT The board of directors (the “Board”) of Huishang Bank Corporation Limited (the “Bank”) is pleased to announce the unaudited interim results of the Bank and its subsidiaries for the six months ended June 30, 2017. This announcement, containing the full text of the 2017 Interim Report of the Bank, complies with the relevant content requirements of the Rules Governing the Listing of Securities on The Stock Exchange of Hong Kong Limited in relation to preliminary announcements of interim results. The printed version of the Bank’s 2017 Interim Report will be delivered to the holders of H Shares of the Bank and available for viewing on the websites of Hong Kong Exchanges and Clearing Limited at www.hkexnews.hk and of the Bank at www.hsbank.com.cn in September 2017. By order of the Board Huishang Bank Corporation Limited* Li Hongming Chairman Hefei, Anhui Province, China August 25, 2017 As at the date of this announcement, the board of directors of the Bank comprises Li Hongming, Wu Xuemin and Ci Yaping as executive directors; Zhang Feifei, Zhu Jiusheng, Qian Li, Lu Hui, Zhao Zongren, Qiao Chuanfu and Gao Yang as non-executive directors; Au Ngai Daniel, Dai Genyou, Wang Shihao, Zhang Shenghuai and Zhu Hongjun as independent non-executive directors.

Table of Codes for Each Court of Each Level

Table of Codes for Each Court of Each Level Corresponding Type Chinese Court Region Court Name Administrative Name Code Code Area Supreme People’s Court 最高人民法院 最高法 Higher People's Court of 北京市高级人民 Beijing 京 110000 1 Beijing Municipality 法院 Municipality No. 1 Intermediate People's 北京市第一中级 京 01 2 Court of Beijing Municipality 人民法院 Shijingshan Shijingshan District People’s 北京市石景山区 京 0107 110107 District of Beijing 1 Court of Beijing Municipality 人民法院 Municipality Haidian District of Haidian District People’s 北京市海淀区人 京 0108 110108 Beijing 1 Court of Beijing Municipality 民法院 Municipality Mentougou Mentougou District People’s 北京市门头沟区 京 0109 110109 District of Beijing 1 Court of Beijing Municipality 人民法院 Municipality Changping Changping District People’s 北京市昌平区人 京 0114 110114 District of Beijing 1 Court of Beijing Municipality 民法院 Municipality Yanqing County People’s 延庆县人民法院 京 0229 110229 Yanqing County 1 Court No. 2 Intermediate People's 北京市第二中级 京 02 2 Court of Beijing Municipality 人民法院 Dongcheng Dongcheng District People’s 北京市东城区人 京 0101 110101 District of Beijing 1 Court of Beijing Municipality 民法院 Municipality Xicheng District Xicheng District People’s 北京市西城区人 京 0102 110102 of Beijing 1 Court of Beijing Municipality 民法院 Municipality Fengtai District of Fengtai District People’s 北京市丰台区人 京 0106 110106 Beijing 1 Court of Beijing Municipality 民法院 Municipality 1 Fangshan District Fangshan District People’s 北京市房山区人 京 0111 110111 of Beijing 1 Court of Beijing Municipality 民法院 Municipality Daxing District of Daxing District People’s 北京市大兴区人 京 0115 -

Facile Fabrication of Ultra-Light and Highly Resilient PU/RGO Foams for Microwave

Electronic Supplementary Material (ESI) for RSC Advances. This journal is © The Royal Society of Chemistry 2017 Supporting Information Facile fabrication of ultra-light and highly resilient PU/RGO foams for microwave absorption Chunmei Zhanga,+, Hua Lia,b,+,*, Zhangzhi Zhuoc, Roberto Dugnanid, Chongyang Suna , Yujie Chena,*, Hezhou Liua,b aState Key Laboratory of Metal Matrix Composites, School of Materials Science and Engineering, Shanghai Jiao Tong University, Dongchuan Road No. 800, Shanghai 200240, China. bCollaborative Innovation Center for Advanced Ship and deep-Sea Exploration, Shanghai Jiao Tong University. cWuHu State-owned Factory of Machining, Jiujiang District Wanli Village No. 99, WuHu 241000, China. dUniversity of Michigan-Shanghai Jiao Tong University Joint Institute. *Corresponding author: Hua Li (email: [email protected]) or Yujie Chen (email: [email protected]) +These authors contributed equally to this work. Figure S1 SEM images of PU/RGO foams with different loadings of RGO: (a) and (b) sample S- 3, (c) and (d) sample S-6, (e) and (f) sample S-9, (g) and (h) sample S-12, and (i) and (j) sample S-15 Figure S2 The photos of the PU foam and the prepared PU/RGO foams with different RGO loadings Figure S3 Schematic illustration of the dissipation routes of microwaves irradiated in the PU/RGO foams Figure S4 The variation curves of (a) SETotal, (b) SEA, and (c) SER of samples with different RGO loadings in the frequency range of 2 - 18 GHz The samples used for electromagnetic shielding performance measurements were made into toroidal-shaped specimens with an outer diameter of 7.0 mm and an inner diameter of 3.04 mm. -

CHINA VANKE CO., LTD.* 萬科企業股份有限公司 (A Joint Stock Company Incorporated in the People’s Republic of China with Limited Liability) (Stock Code: 2202)

Hong Kong Exchanges and Clearing Limited and The Stock Exchange of Hong Kong Limited take no responsibility for the contents of this announcement, make no representation as to its accuracy or completeness and expressly disclaim any liability whatsoever for any loss howsoever arising from or in reliance upon the whole or any part of the contents of this announcement. CHINA VANKE CO., LTD.* 萬科企業股份有限公司 (A joint stock company incorporated in the People’s Republic of China with limited liability) (Stock Code: 2202) 2019 ANNUAL RESULTS ANNOUNCEMENT The board of directors (the “Board”) of China Vanke Co., Ltd.* (the “Company”) is pleased to announce the audited results of the Company and its subsidiaries for the year ended 31 December 2019. This announcement, containing the full text of the 2019 Annual Report of the Company, complies with the relevant requirements of the Rules Governing the Listing of Securities on The Stock Exchange of Hong Kong Limited in relation to information to accompany preliminary announcement of annual results. Printed version of the Company’s 2019 Annual Report will be delivered to the H-Share Holders of the Company and available for viewing on the websites of The Stock Exchange of Hong Kong Limited (www.hkexnews.hk) and of the Company (www.vanke.com) in April 2020. Both the Chinese and English versions of this results announcement are available on the websites of the Company (www.vanke.com) and The Stock Exchange of Hong Kong Limited (www.hkexnews.hk). In the event of any discrepancies in interpretations between the English version and Chinese version, the Chinese version shall prevail, except for the financial report prepared in accordance with International Financial Reporting Standards, of which the English version shall prevail. -

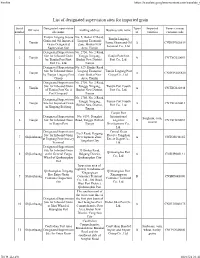

List of Designated Supervision Sites for Imported Grain

List of designated supervision sites for imported grain Serial Designated supervision Types Imported Venue (venue) Off zone mailing address Business unit name number site name of varieties customs code Tianjin Lingang Jiayue No. 5, Bohai 37 Road, Tianjin Lingang Grain and Oil Imported Lingang Economic 1 Tianjin Jiayue Grain and Oil A CNDGN02S619 Grain Designated Zone, Binhai New Terminal Co., Ltd. Supervision Site Area, Tianjin Designated Supervision No. 2750, No. 2 Road, Site for Inbound Grain Tanggu Xingang, Tianjin Port First 2 Tianjin A CNTXG020051 by Tianjin Port First Binhai New District, Port Co., Ltd. Port Co., Ltd. Tianjin Designated Supervision No. 529, Hunhe Road, Site for Inbound Grain Lingang Economic Tianjin Lingang Port 3 Tianjin A CNDGN02S620 by Tianjin Lingang Port Zone, Binhai New Group Co., Ltd. Group Area, Tianjin Designated Supervision No. 2750, No. 2 Road, Site for Inbound Grain Tanggu Xingang, Tianjin Port Fourth 4 Tianjin A CNTXG020448 of Tianjin Port No. 4 Binhai New District, Port Co., Ltd. Port Company Tianjin No. 2750, No. 2 Road, Designated Supervision Tanggu Xingang, Tianjin Port Fourth 5 Tianjin Site for Imported Grain A CNTXG020413 Binhai New District, Port Co., Ltd. in Xingang Beijiang Tianjin Tianjin Port Designated Supervision No. 6199, Donghai International Sorghum, corn, 6 Tianjin Site for Inbound Grain Road, Tanggu District, Logistics B CNTXG020051 sesame in Tianjin Port Tianjin Development Co., Ltd. Designated Supervision Central Grain No.9 Road, Haigang Site for Inbound Grain Reserve Tangshan 7 Shijiazhuang Development Zone, A CNTGS040165 at Jingtang Port Grocery Direct Depot Co., Tangshan City Terminal Ltd.

Anhui Intermodal Sustainable Transport Project - Resettlement Monitoring Report

Social Monitoring Report Project Number: 45021-002 April 2020 PRC: Anhui Intermodal Sustainable Transport Project - Resettlement Monitoring Report Prepared by Anhui Zhenhua Comprehensive Transport Research Institute of Anhui Communications Vocational and Technical College for the Anhui Provincial Government and the Asian Development Bank This social monitoring report is a document of the borrower. The views expressed herein do not necessarily represent those of ADB's Board of Directors, Management, or staff, and may be preliminary in nature. In preparing any country program or strategy, financing any project, or by making any designation of or reference to a particular territory or geographic area in this document, the Asian Development Bank does not intend to make any judgments as to the legal or other status of any territory or area. Anhui Communications Vocational & Technical College Anhui Intermodal Sustainable Transport Project Resettlement Monitoring & Evaluation Report (No. 10) Send to: Foreign Fund Project Management Office, Transport Department of Anhui Province; Project Resettlement Expert of ADB Monitoring Party: Anhui Communications Vocational & Technical College Duration of Monitoring: January 1 to June 30, 2019 Contents: 1 Land Acquisition, Demolition and Resettlement; 1.1 G206 Dongliu-Yaodu Section Construction Engineering; 1.1.1 Land Acquisition Demolition

Change Pattern and Driving Mechanism of Construction Land In

EARTH SCIENCES RESEARCH JOURNAL LAND USE Earth Sci. Res. J. Vol. 24, No. 2 (June, 2020): 215-223 Change pattern and driving mechanism of construction land in China’s undertaking industrial transfer demonstration area: Taking the Wanjiang City Belt along the Yangtze River as an Example Yuhong Cao1*, Meiyun Liu1, Yuandan Cao1, Chen Chen1, Dapeng Zhang2 1School of Environmental Science and Engineering, Anhui Normal University, Wuhu 241002, China 2School of Geography and Tourism, Anhui Normal University, Wuhu 241002, China * Corresponding author: [email protected] Keywords: expansion intensity; land use; hotspot analysis; the Wanjiang City Belt along the Yangtze River. ABSTRACT The construction land includes urban land, rural residential areas, and other construction lands. The Wanjiang City Belt along the Yangtze River is a vital demonstration area for undertaking industrial transfer in China. With the accumulation of factors relative to economic development, the construction land has increased sharply, and the regional ecological security pattern is facing new challenges. After collecting the image interpretation data of multi-period land use of the Wanjiang City Belt, this work studies the characteristics of construction land change patterns since 1995. The driving mechanism was also analyzed based on the GIS platform, land use transfer matrix, expansion intensity index, hotspot analysis, and mathematical statistics. The results showed that: (1) From 1995 to 2015, the urban land and other construction lands in the Wanjiang City Belt have increased, but the rural residential areas decreased in 2010-2015. The three types of lands had the most significant changes in 2005-2010, and the other construction land was particularly prominent.

World Bank-Financed Anhui Aged Care System Demonstration Project

EMP for World Bank-Financed Anhui Aged Care System Demonstration Project SFG3916 Zhongzi Huayu G. H. P. Z. J. Zi No. 1051 Public Disclosure Authorized World Bank-Financed Anhui Aged Care System Demonstration Project Environment and Social Management Plan (EMP) Commissioned by: Department of Civil Affairs of Anhui Province Prepared by: Beijing Zhongzi Huayu Environmental Protection Technology Co., Ltd. Prepared in: December 2017 Table of Contents: Preface; 1 Project overview; 1.1 Project background; 1.2 Construction contractor; 1.3 Project Objectives; 1.4 Project details; 2 Basis and standard

Wuhu Haoxuan Wood Plastic Composite Co., Ltd. Factory Address: Jiangbei Industrial Concentration Area, Shenxiang Town, Jiujiang District, Wuhu City, Anhui Province, China

Wuhu Haoxuan Wood Plastic Composite Co.,Ltd Factory add:Jiangbei industrial concentration area, Shenxiang town, Jiujiang district, wuhu city Anhui Province, China. Email:[email protected] Contact: Elaine Zhang Mob: 0086-15155301416 Hosung WPC Catalogue Feeling Wood decking PHOTO ITEM NR. FW148K25A Sliver Gray FW148K25A Teak FW148K25A Mocha FW148K25A Red Brown SECTION PHOTO ITEM NR. FW148K25N04 Black FW148K25N03 Silver grey FW148K25N02 Teak FW148K25N01 Red Brown SECTION PHOTO ITEM NR. FW148K25B Mocha FW148K25B Red Brown FW148K25B Chocolate FW148K25B Black SECTION PHOTO ITEM NR. FW148K25C Sliver Gray FW148K25C Teak FW148K25C Mocha FW148K25C Red Brown SECTION PHOTO ITEM NR. FW148K25D Sliver Gray FW148K25D Teak FW148K25D Mocha FW148K25D Red Brown SECTION Page 1 of 16 PHOTO ITEM NR. FW148K25D Black FW148K25A Teak Mixed FW148K25A Red Brown FW135K25A Teak-Black SECTION PHOTO ITEM NR. FW146Y25 Chocolate FW140S23D Teak FW140S23A Chocolate FW140S23D Red Brown SECTION PHOTO ITEM NR. FW142S25D FW140S10B Teak FW140S10B Red Brown FW140S10B Silver Grey SECTION PHOTO ITEM NR. FW190S15D Silver Grey FW142S25B Step Board HX140LK50 Step Board HX182S10 Ridge SECTION Feeling Wood Wall Cladding PHOTO ITEM NR. FW156W21B Black FW156W21B Chocolate FW156W21B Mocha FW156W21B Red Brown SECTION Page 2 of 16 PHOTO ITEM NR. FW156W21C Chocolate FW156W21C Silver Grey FW156W21D Black FW156W21D Red Brown SECTION PHOTO ITEM NR. FW162Q20 Mixed FW165Q15C Teak SECTION Normal Decking PHOTO ITEM NR. HX120K25 HX120Y25 HX135K25A HX135K25B SECTION PHOTO ITEM NR. HX135K25C HX140K25A HX140K25B HX140K25C SECTION PHOTO ITEM NR. HX140K25D HX140Y23 HX140Y25 HX140K30B SECTION Page 3 of 16 PHOTO ITEM NR. HX140K40A HX145K50 HX145K22 HX146K20A SECTION PHOTO ITEM NR. HX146K20B HX146K24A HX146K24B HX150K25A SECTION PHOTO ITEM NR. HX150K25B HX150K25C HX150K25D HX150K25E SECTION PHOTO ITEM NR. -

CHINA the Church of Almighty God: Prisoners Database (1663 Cases)

CHINA The Church of Almighty God: Prisoners Database (1663 cases) Prison term: 15 years HE Zhexun Date of birth: On 18th September 1963 Date and place of arrest: On 10th March 2009, in Xuchang City, Henan Province Charges: Disturbing social order and using a Xie Jiao organization to undermine law enforcement because of being an upper-level leader of The Church of Almighty God in mainland China, who was responsible for the overall work of the church Statement of the defendant: He disagreed with the decision and said what he believed in is not a Xie Jiao. Court decision: In February 2010, he was sentenced to 15 years in prison by the Zhongyuan District People’s Court of Zhengzhou City, Henan Province. Place of imprisonment: No. 1 Prison of Henan Province Other information: He was regarded by the Chinese authorities as a major criminal of the state and had long been on the wanted list. To arrest him, authorities offered 500,000 RMB as a reward to informers who gave tips leading to his arrest to police. He was arrested at the home of a Christian in Xuchang City, Henan Province. Based on the information from a Christian serving his sentence in the same prison, HE Zhexun was imprisoned in a separate area and not allowed to contact other prisoners. XIE Gao, ZOU Yuxiong, SONG Xinling and GAO Qinlin were arrested in succession alongside him and sentenced to prison terms ranging from 11 to 12 years. Source: https://goo.gl/aGkHBj Prison term: 14 years MENG Xiumei Age: Forty-one years old Date and place of arrest: On 14th August 2014, in Xinjiang Uyghur Autonomous Region Charges: Using a Xie Jiao organization to undermine law enforcement because of being a leader of The Church of Almighty God and organizing gatherings for Christians and the work of preaching the gospel in Ili prefecture Statement of the defendant: She claimed that her act did not constitute crimes. -

World Bank Document

Document of The World Bank FOR OFFICIAL USE ONLY Public Disclosure Authorized Report No: 45059-CN PROJECT APPRAISAL DOCUMENT ON A PROPOSED LOAN IN THE AMOUNT OF US$100 MILLION TO THE PEOPLE'S REPUBLIC OF CHINA FOR THE ANHUI MEDIUM CITIES URBAN TRANSPORT PROJECT April 14, 2010 China and Mongolia Sustainable Development Unit Sustainable Development Department East Asia and Pacific Region This document has a restricted distribution and may be used by recipients only in the performance of their official duties. Its contents may not otherwise be disclosed without World Bank authorization. CURRENCY EQUIVALENTS (Exchange Rate Effective June 2009) Currency Unit = RMB or Chinese Yuan US$1 = 6.8 RMB FISCAL YEAR January 1 – December 31 ABBREVIATIONS AND ACRONYMS APAO Anhui Provincial Audit Office MBD Model bidding documents APFB Anhui Provincial Finance Bureau MFB Municipal finance bureau APMO Anhui Project Management Office MV Motorized vehicles BRT Bus rapid transit NCB National competitive bidding CQS Consultant qualifications selection NGO Non-governmental organization DA Designated account NMV Non-motorized vehicles DO Development objective NPV Net present value EA Environmental assessment OP Operational policy EIA Environmental impact assessment PMO Project management office EIRR Economic internal rate of return PT Public transport EMP Environmental management plan QBS Quality-based selection FM Financial management QCBS Quality- and cost-based selection FSL Fixed-spread loan RAP Resettlement action plan IC Integrated corridor development SBD Standard bidding document ICB International competitive bidding SOE State-owned enterprise I&M Inspection and maintenance TS Traffic safety and management LA Local access and freight UNDB United Nations Development Business LG Leading group VSL Variable-spread loan Vice President: James W.

Anhui Intermodal Sustainable Transport Project

Social Monitoring Report Semi-Annual Report June 2016 PRC: Anhui Intermodal Sustainable Transport Project Prepared by Anhui Communications Vocational and Technical College for the People’s Republic of China and the Asian Development Bank. This social monitoring report is a document of the borrower. The views expressed herein do not necessarily represent those of ADB's Board of Directors, Management, or staff, and may be preliminary in nature. In preparing any country program or strategy, financing any project, or by making any designation of or reference to a particular territory or geographic area in this document, the Asian Development Bank does not intend to make any judgments as to the legal or other status of any territory or area. Asian Development Bank Loan External Resettlement Monitoring Report On Anhui Intermodal Sustainable Transport Development Project (No.4) Anhui Communications Vocational and Technical College June 2016 Contents: 1 Monitoring on Land Acquisition, House Demolition and resettlement of G206 from Dongliu to Yaodu Section; 2 Monitoring on LAR of S319 from Erba to Wuwei Section; 2.1 Progress of LAR of S319; 2.2 State-owned Land Acquisition of S319; 2.3 Compensation for Movement