I/O Scheduling in FreeBSD's CAM Subsystem

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

SAS Enters the Mainstream

SAS enters the mainstream. By the InfoStor staff. http://www.infostor.com/articles/article_display.cfm?Section=ARTCL&C=Newst&ARTICLE_ID=295373&KEYWORDS=Adaptec&p=23

Although adoption of Serial Attached SCSI (SAS) is still in the infancy stages, the next 12 months bode well for proponents of the relatively new disk drive/array interface. For example, in a recent InfoStor QuickVote reader poll, 27% of the respondents said SAS will account for the majority of their disk drive purchases over the next year, although Serial ATA (SATA) topped the list with 37% of the respondents, followed by Fibre Channel with 32%. Only 4% of the poll respondents cited the aging parallel SCSI interface (see figure). However, surveys of InfoStor’s readers are skewed by the fact that almost half of our readers are in the channel (primarily VARs and systems/storage integrators), and the channel moves faster than end users in terms of adopting (or at least kicking the tires on) new technologies such as serial interfaces.

To get a more accurate view of the pace of adoption of serial interfaces such as SAS, consider market research predictions from firms such as Gartner and International Data Corp. (IDC). Yet even in those firms’ predictions, SAS is coming on surprisingly strong, mostly at the expense of its parallel SCSI predecessor. For example, Gartner predicts SAS disk drives will account for 16.4% of all multi-user drive shipments this year and will garner almost 45% of the overall market in 2009 (see figure on p. 18).

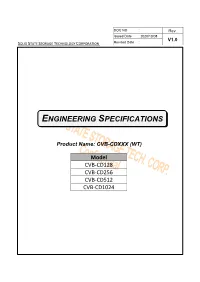

Engineering Specifications

SOLID STATE STORAGE TECHNOLOGY CORPORATION. ENGINEERING SPECIFICATIONS. Rev.: V1.0. Issued Date: 2020/10/08. Product Name: CVB-CDXXX (WT). Models: CVB-CD128, CVB-CD256, CVB-CD512, CVB-CD1024. Author: Ken Liao.

Version History: 0.1 Draft (2020/07/20); 1.0 First release (2020/10/08).

Copyright 2020 SOLID STATE STORAGE TECHNOLOGY CORPORATION. Disclaimer: The information in this document is subject to change without prior notice in order to improve reliability, design, and function and does not represent a commitment on the part of the manufacturer. In no event will the manufacturer be liable for direct, indirect, special, incidental, or consequential damages arising out of the use or inability to use the product or documentation, even if advised of the possibility of such damages. This document contains proprietary information protected by copyright. All rights are reserved. No part of this datasheet may be reproduced by any mechanical, electronic, or other means in any form without prior written permission of SOLID STATE STORAGE Technology Corporation.

Table of Contents: 1 Introduction; 1.1 Overview

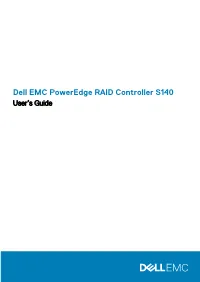

Dell EMC PowerEdge RAID Controller S140 User’s Guide

Dell EMC PowerEdge RAID Controller S140 User’s Guide. Notes, cautions, and warnings. NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death.

© 2018 - 2019 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. 2019 - 12 Rev. A09

Contents: 1 Overview; PERC S140 specifications; Supported operating systems; Supported PowerEdge systems; Supported physical disks; Management applications for the PERC S140

Datasheet (PDF)

SOLID STATE STORAGE TECHNOLOGY CORPORATION. ENGINEERING SPECIFICATIONS. Rev.: V1.0. Issued Date: 2020/10/07. Product Name: CVB-8DXXX-WT. Models: CVB-8D128-WT, CVB-8D256-WT, CVB-8D512-WT, CVB-8D1024-WT. Author: Ken Liao.

Version History: 0.1 Draft (2020/03/30); 1.0 First release (2020/10/07).

Copyright 2020 SOLID STATE STORAGE TECHNOLOGY CORPORATION. Disclaimer: The information in this document is subject to change without prior notice in order to improve reliability, design, and function and does not represent a commitment on the part of the manufacturer. In no event will the manufacturer be liable for direct, indirect, special, incidental, or consequential damages arising out of the use or inability to use the product or documentation, even if advised of the possibility of such damages. This document contains proprietary information protected by copyright. All rights are reserved. No part of this datasheet may be reproduced by any mechanical, electronic, or other means in any form without prior written permission of SOLID STATE STORAGE Technology Corporation.

Table of Contents: 1 Introduction; 1.1 Overview

3.5-Inch, SAS 15K Enterprise Hard Disk Drives MAX3036RC • MAX3073RC • MAX3147RC

Storage. High performance and high capacity for your mission critical storage applications. 3.5-Inch, SAS 15K Enterprise Hard Disk Drives MAX3036RC • MAX3073RC • MAX3147RC. State-of-the-art hard disk drives for your most demanding enterprise applications.

- 3.5-inch RoHS compliant enterprise hard disk drives offer 15K RPM spindle speed and feature storage capacities up to 147 GB (one gigabyte (GB) = one billion bytes; accessible capacity will be less and actual capacity depends on the operating environment and formatting)
- Ideal for applications requiring high performance and high bandwidth
- Native Command Queuing (NCQ), providing faster execution of operation commands
- Uses smaller connectors and cables than U320, a benefit acknowledged by the SCSI Trade Association (STA)
- Point-to-point connection allows for improved input-output per second (IOPS) in proportion to the number of drives connected

With more than 35 years of experience in hard disk drive technology, Fujitsu can offer state-of-the-art hard disk drives for your most demanding enterprise applications. Our latest SAS offering is another example, building on our market leadership in the exploding SAS market. The availability of this latest SAS offering solidifies Fujitsu as the SAS leader for all form factors in the enterprise market, reflecting a determination

3.5-Inch, SAS 15K RPM Series Hard Disk Drive Specifications (values listed in the order MAX3036RC / MAX3073RC / MAX3147RC where they differ):

- Storage capacity (formatted): 36.7 GB / 73.5 GB / 147.0 GB
- Disks: 1 / 2 / 4
- Heads (read/write): 2 / 4 / 8
- Bytes/sector: 512
- Seek time, track to track: Read: 0.2 ms (typ.) / Write: 0.4 ms (typ.)
- Seek time, average: Read: 3.3 ms (typ.) / Write: 3.8 ms (typ.)
- Seek time, full track: Read: 8.0 ms (typ.) / Write: 9.0 ms (typ.)
- Average latency time: 2.00 ms
- Rotational speed (RPM): 15,000
- Areal density: 59 Gbits/sq.

Run-Time Detection of Protocol Bugs in Storage I/O Device Drivers Domenico Cotroneo, Luigi De Simone, Roberto Natella

Abstract: Protocol violation bugs in storage device drivers are a critical threat for data integrity, since these bugs can silently corrupt the commands and data flowing between the OS and storage devices. Due to their nature, these bugs are notoriously difficult to find by traditional testing. In this paper, we propose a run-time monitoring approach for storage device drivers, in order to detect I/O protocol violations that would otherwise silently escalate in corruptions of users’ data. The monitoring approach detects violations of I/O protocols by automatically learning a reference model from failure-free execution traces. The approach focuses on selected portions of the storage controller interface, in order to achieve a good trade-off in terms of low performance overhead and high coverage and accuracy of failure detection.

necessarily lead to such symptoms. The lack of detection can lead to silent corruptions of users’ data, thus exacerbating the cost of software failures. In this paper, we propose a novel approach for detecting I/O protocol violations in storage device drivers, by monitoring at run-time the interactions between the driver and the hardware device controller. The purpose of the run-time monitor is to detect device driver failures in a timely manner. This solution is meant both to users and engineers of high-availability storage systems, including: administrators and end-users of the system, which need to get alarms about the onset of data corruptions in

PCI Express x4 Card > 2 x Internal M.2 Key B + 1 x Internal NVMe M.2

PCI Express x4 Card > 2 x internal M.2 Key B + 1 x internal NVMe M.2 Key M - Low Profile Form Factor

Description: This PCI Express card by Delock expands the PC by three M.2 slots. The card accepts up to two M.2 SATA modules in format 22110, 2280, 2260, 2242, 2230 and one M.2 PCIe module in format 22110, 2280, 2260, 2242, 2230. The SATA modules are connected to the motherboard via SATA cables.

Specification: Item no. 89394. EAN: 4043619893942. Country of origin: Taiwan, Republic of China. Package: Box.

- Connectors, internal: 2 x 67 pin M.2 key B slot; 1 x 67 pin M.2 key M slot; 1 x PCI Express x4, V3.0; 2 x SATA 6 Gb/s 7 pin receptacle; 1 x SATA 15 pin power plug
- Interface: SATA + PCIe
- Supports two M.2 modules in format 22110, 2280, 2260, 2242 and 2230 with key B or key B+M based on SATA and one M.2 module in format 22110, 2280, 2260, 2242 and 2230 with key M or key B+M based on PCIe
- Maximum height of the components on the module: 1.5 mm, application of double-sided assembled modules supported
- Data transfer rate up to 6 Gb/s
- Power supply via PCI Express interface or via SATA 15 pin power connector
- Supports Native Command Queuing (NCQ)
- Supports NVM Express (NVMe)

System requirements:

- Windows Vista/Vista-64/7/7-64/8.1/8.1-64/10/10-64, Linux Kernel 3.2.0
- PC with one free PCI Express x4 / x8 / x16 / x32 slot
- One free SATA 7 pin interface for each connected M.2 module based on SATA

Package content:

- PCI Express card
- Low profile bracket
- 3 x fixing screw

© 2021 by Delock.

Delock PCI Express x16 Card to 1 x M.2 Key B + 1 x NVMe M.2 Key M + 1 x mSATA - Low Profile Form Factor

Description: This PCI Express card by Delock expands the PC by two M.2 slots as well as an mSATA slot. One M.2 SATA module in format 2280, 2260, 2242, 2230 can be fitted to this card and connected via SATA cable to the motherboard. Furthermore, one M.2 NVMe / PCIe module in format 2280, 2260, 2242, 2230 can be connected. Additionally, one mSATA memory can be connected via SATA cable to the motherboard. All slots can be used separately or at the same time.

Specification: Item no. 90486. EAN: 4043619904860. Country of origin: China. Package: Retail Box.

- Connectors: 1 x 67 pin M.2 key B slot; 1 x 67 pin M.2 key M slot; 1 x mSATA slot (full size); 1 x PCI Express x16, V4.0; 2 x SATA 6 Gb/s 7 pin plug
- Interface: PCIe / SATA
- Supports one M.2 module in format 2280, 2260, 2242 and 2230 with key B or key B+M based on SATA and one M.2 module in format 2280, 2260, 2242 and 2230 with key M or key B+M based on PCIe
- Maximum height of the components on the module: 1.5 mm, application of double-sided assembled modules supported
- 3 x LED indicator
- Bootable
- Supports NVM Express (NVMe)
- Supports Native Command Queuing (NCQ)
- Supports S.M.A.R.T.
- Supports TRIM
- Supports DevSleep
- Hot Swap

System requirements:

- Linux Kernel 4.6.4 or above
- Windows 8.1/8.1-64/10/10-64
- PC with one free PCI Express x16 / x32 slot

Package content:

- PCI Express card
- Low profile bracket
- 2 x SATA cable, length ca.

SATA Multi Level Cell SSD for Use in Embedded Computing

Avnet Embedded. Support Around The Board™. Device Specification. Delkin Devices SSD-MLC. www.avnet-embedded.eu

SATA SSD Overview: The Delkin Palladium™ SATA SSDs provide large capacity and high speed performance while improving system responsiveness in the standard 2.5 and 1.8 inch form factors. Product dependability is guaranteed using leading edge flash controller designs, MLC NAND Flash, premium components and enhanced feature sets. The Palladium SSD product platform is targeted for SATA based client PCs, rugged mobile devices, medical devices, military, aerospace and a variety of embedded system OEM applications. MLC NAND flash has the benefit of being a more cost effective solution compared to SLC due to the denser storage method used. Compared to HDDs, SSDs have much faster random access, silent operation and lower power consumption. With no moving parts, SATA SSDs allow for faster start up, lower latency, consistent read performance, and a higher reliability by eliminating the risk of mechanical failures.

Features:

- Drop-in replacement for 2.5 & 1.8 inch SATA HDD
- Boot from Windows 98/2000/XP/Vista/7 and Linux
- Serial ATA Interface: SATA 3.0Gb/s
- Fully compatible with ATA-7; Standard PIO 0-4
- SMART, TRIM & Secure Erase Support
- NSA 9-12 Compliant
- Low power consumption
- Supports Native Command Queuing (NCQ)

Specifications:

- Capacity: 8GB to 256GB*
- Form Factor: 2.5” Type (100.0 x 70.0 x 9.5 min); 1.8” Type (78.0 x 54.0 x 5 min)
- Host Interface: Serial ATA interface of 3.0Gb/s. Fully complies with ATA-7 Specification

NOTICES: Product information, pricing and availability are subject to change at any time without notice.

Intel® SSD DC S3700 Product Specification

Intel® Solid-State Drive DC S3700 Product Specification

- Capacity: 2.5-inch: 100/200/400/800 GB; 1.8-inch: 200/400 GB
- Components: Intel® 25nm NAND Flash Memory; High Endurance Technology (HET) Multi-Level Cell (MLC)
- Form Factor: 2.5- and 1.8-inch
- Read and Write IOPS (Full LBA Range, Iometer* Queue Depth 32): Random 4 KB Reads: Up to 75,000 IOPS; Random 4 KB Writes: Up to 36,000 IOPS; Random 8 KB Reads: Up to 47,500 IOPS; Random 8 KB Writes: Up to 20,000 IOPS
- Bandwidth Performance: Sustained Sequential Read: Up to 500 MB/s; Sustained Sequential Write: Up to 460 MB/s
- Endurance: 10 drive writes per day for 5 years
- Latency (average sequential): Read: 50 µs (TYP); Write: 65 µs (TYP)
- Quality of Service: Read/Write: 500 µs (99.9%)
- Performance Consistency: Read/Write: Up to 90%/90% (99.9%)
- AES 256-bit Encryption
- Power Management: 2.5 inch: 5 V or 12 V SATA Supply Rail; 1.8 inch: 3.3 V SATA Supply Rail; SATA Interface Power Management; OS-aware hot plug/removal; Enhanced power-loss data protection
- Power: Active: Up to 6 W (TYP); Idle: 650 mW
- Weight: 2.5” 200, 400, 800 GB: 73.6 grams ± 2 grams; 2.5” 100 GB: 70 grams ± 2 grams; 1.8” 200, 400 GB: 49 grams ± 2 grams
- Temperature: Operating: 0 °C to 70 °C; Non-Operating: -55 °C to 95 °C; Temperature monitoring and logging; Thermal throttling
- Shock (operating and non-operating): 1,000 G/0.5 msec
- Vibration: Operating: 2.17 GRMS (5-700 Hz); Non-operating: 3.13 GRMS (5-800 Hz)
- Reliability: Uncorrectable Bit Error Rate (UBER): 1

Exploitation from Malicious PCI Express

EXPLOITATION FROM MALICIOUS PCI EXPRESS PERIPHERALS. Colin Lewis Rothwell, Churchill College, September 2017. This dissertation is submitted for the degree of Doctor of Philosophy.

This thesis is set in the serif Crimson and sans-serif Linux Biolinum, both open source. It was produced using the LuaTeX engine for LaTeX, and the memoir document class. As much as possible, I have tried to follow the rules given in Robert Bringhurst’s The Elements of Typographic Style, which is also my source for the first of the following quotes.

A true revelation, it seems to me, will emerge only from stubborn concentration on a solitary problem. I am not in league with inventors or adventurers, nor with travellers to exotic destinations. The surest – also the quickest – way to awake the sense of wonder in ourselves is to look intently, undeterred, at a single object. Suddenly, miraculously, it will reveal itself as something we have never seen before. (Cesare Pavese)

POLONIUS: What do you read, my lord? HAMLET: Words, words, words. (Shakespeare, Hamlet, Act 2, Scene 2)

The thesis of this dissertation is that, despite widespread belief in the security community, systems are still vulnerable to attacks from malicious peripherals delivered over the PCI Express (PCIe) protocol. Malicious peripherals can be plugged directly into internal PCIe slots, or connected via an external Thunderbolt connection. To prove this thesis, we designed and built a new PCIe attack platform. We discovered that a simple platform was insufficient to carry out complex attacks, so created the first PCIe attack platform that runs a full, conventional OS.
