To Bridge Neural Network Design and Real-World Performance: a Behaviour Study for Neural Networks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Accenture AI Inferencing in Action

POV POV PUT YOUR AI SOLUTION ON STEROIDS POV PUT YOUR AI SOLUTION ON STEROIDS POINT OF VIEW POV PUT YOUR AI SOLUTION ON STEROIDS POV MATCH GPU PERFORMANCE AT HALF THE COST FOR AI INFERENCE WORKLOADS Proven CPU-based solution from Accenture and Intel boosts the performance and lowers the cost of AI inferencing by enabling an easy-to-deploy, scalable, and cost-efficient architecture AI INFERENCING—THE NEXT CRITICAL STEP AFTER AI ALGORITHM TRAINING Artificial Intelligence (AI) solutions include three main functions—identifying and preparing data, training an artificial intelligence algorithm, and using the algorithm for inferring new outcomes. Each function requires different compute recourses and deployment architecture. The choices of infrastructure components and technologies significantly impact the performance and costs associated with deploying an end-to-end AI solution. Data scientists and machine learning (ML) engineers spend significant time devising the right architecture for all stages of the AI pipeline. MODEL DATA TRAINING AND SCORING AND PREPARATION OPTIMIZATION INFERENCE Once an AI computer/algorithm has been trained through traditional or deep learning techniques, it can deliver value by interpreting data (i.e., inferring). Through inference, an AI algorithm can analyze data to: • Differentiate between various items • Identify trends and patterns that can be leveraged during decision-making • Reveal opportunities and possible solutions • Recognize voices, faces, images, etc. POV PUT YOUR AI SOLUTION ON STEROIDS POV Revealing hidden As we look to the future, AI inference will become increasingly possibilities— important to businesses operating in all segments—from health care to financial services to aerospace. And as the reliance on AI inference continues to grow, so Accenture AIP, does the importance of choosing the right AI infrastructure to support it. -

GPU Developments 2018

GPU Developments 2018 2018 GPU Developments 2018 © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions. Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following. License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR. -

Persistent Memory for Artificial Intelligence

Persistent Memory for Artificial Intelligence Bill Gervasi Principal Systems Architect [email protected] Santa Clara, CA August 2018 1 Demand Outpacing Capacity In-Memory Computing Artificial Intelligence Machine Learning Deep Learning Memory Demand DRAM Capacity Santa Clara, CA August 2018 2 Driving New Capacity Models Non-volatile memories Industry successfully snuggling large memories to the processors… Memory Demand DRAM Capacity …but we can do oh! so much more Santa Clara, CA August 2018 3 My Three Talks at FMS NVDIMM Analysis Memory Class Storage Artificial Intelligence Santa Clara, CA August 2018 4 History of Architectures Let’s go back in time… Santa Clara, CA August 2018 5 Historical Trends in Computing Edge Co- Computing Processing Power Failure Santa Clara, CA August 2018 Data Loss 6 Some Moments in History Central Distributed Processing Processing Shared Processor Processor per user Dumb terminals Peer-to-peer networks Santa Clara, CA August 2018 7 Some Moments in History Central Distributed Processing Processing “Native Signal Processing” Hercules graphics Main CPU drivers Sound Blaster audio Cheap analog I/O Rockwell modem Ethernet DSP Tightly-coupled coprocessing Santa Clara, CA August 2018 8 The Lone Survivor… Integrated graphics Graphics add-in cards …survived the NSP war Santa Clara, CA August 2018 9 Some Moments in History Central Distributed Processing Processing Phone providers Phone apps provide controlled all local services data processing Edge computing reduces latency Santa Clara, CA August 2018 10 When the Playing -

AI Accelerator Latencies in Hybrid Vehicular Simulation

AI Accelerator Latencies in Hybrid Vehicular Simulation Jussi Hanhirova Matias Hyyppä Abstract Aalto University Aalto University We study the use of accelerators for vehicular AI (Artifi- Espoo, Finland Espoo, Finland cial Intelligence) applications. Managing the computation jussi.hanhirova@aalto.fi juho.hyyppa@aalto.fi is complex as vehicular AI applications call for high per- formance computations in a real-time distributed environ- ment, in which low and predictable latencies are essential. We have used the CARLA simulator together with machine learning based on CNNs (Convolutional Neural Networks) in our research. In this paper, we present the latency be- Anton Debner Vesa Hirvisalo havior with GPU acceleration for CNN processing. Our ex- Aalto University Aalto University perimentation is motivated by using the simulator to find the Espoo, Finland Espoo, Finland corner cases that are demanding for the accelerated CNN anton.debner@aalto.fi vesa.hirvisalo@aalto.fi processing. Author Keywords Computation acceleration; GPU; deep learning ACM Classification Keywords D.4.8 [Performance]: Simulation; I.2.9 [Robotics]: Autonomous vehicles; I.3.7 [Three-Dimensional Graphics and Realism]: Virtual reality Introduction In this paper, we address the usage of accelerators in ve- hicular AI (Artificial Intelligence) systems and in the simula- tors that are needed in the development of such systems. The recent development of AI system is enabling many new Convolutional Neural Net- applications including autonomous driving of motor vehi- software [5] together with deep learning based inference on works (CNN) are a specific cles on public roads. Many of such systems process sen- TensorFlow [6]. Our measurements show the basic viability class of neural networks that sor data related to environment perception in real-time, be- of the hybrid simulation approach, but they also underline are often used in deep form cause they trigger actions which have latency requirements. -

Unified Inference and Training at the Edge

Unified Inference and Training at the Edge By Linley Gwennap Principal Analyst October 2020 www.linleygroup.com Unified Inference and Training at the Edge By Linley Gwennap, Principal Analyst, The Linley Group As more edge devices add AI capabilities, some applications are becoming increasingly complex. Wearables and other IoT devices often have multiple sensors, requiring different neural networks for each sensor, or they may use a single complex network to combine all the input data, a tech- nique called sensor fusion. Others implement on-device training to customize the application. The GPX-10 processor can handle these advanced AI applications while keeping power to a minimum. Ambient Scientific sponsored this white paper, but the opinions and analysis are those of the author. Introduction As more IoT products implement AI inferencing on the device, some applications are becoming increasingly complex. Instead of just a single sensor, they may have several, such as a wearable device that has accelerometer, pulse rate, and temperature sensors. Each sensor may require a different neural network, or a single complex network can combine all the input data, a technique called sensor fusion. A microcontroller (e.g., Cortex-M) or DSP can handle a single sensor, but these simple cores are inefficient for more complex IoT applications. Training introduces additional complication to edge devices. Today’s neural networks are trained in the cloud using generic data from many users. This approach takes advan- tage of the massive compute power in cloud data centers, but it creates a one-size-fits-all AI model. The trained model can be distributed to many devices, but even if each device performs the inferencing, the model performs the same way for all users. -

Low-Power Ultra-Small Edge AI Accelerators for Image Recog- Nition with Convolution Neural Networks: Analysis and Future Directions

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 16 July 2021 doi:10.20944/preprints202107.0375.v1 Review Low-power Ultra-small Edge AI Accelerators for Image Recog- nition with Convolution Neural Networks: Analysis and Future Directions Weison Lin 1, *, Adewale Adetomi 1 and Tughrul Arslan 1 1 Institute for Integrated Micro and Nano Systems, University of Edinburgh, Edinburgh EH9 3FF, UK; [email protected]; [email protected] * Correspondence: [email protected] Abstract: Edge AI accelerators have been emerging as a solution for near customers’ applications in areas such as unmanned aerial vehicles (UAVs), image recognition sensors, wearable devices, ro- botics, and remote sensing satellites. These applications not only require meeting performance tar- gets but also meeting strict reliability and resilience constraints due to operations in harsh and hos- tile environments. Numerous research articles have been proposed, but not all of these include full specifications. Most of these tend to compare their architecture with other existing CPUs, GPUs, or other reference research. This implies that the performance results of the articles are not compre- hensive. Thus, this work lists the three key features in the specifications such as computation ability, power consumption, and the area size of prior art edge AI accelerators and the CGRA accelerators during the past few years to define and evaluate the low power ultra-small edge AI accelerators. We introduce the actual evaluation results showing the trend in edge AI accelerator design about key performance metrics to guide designers on the actual performance of existing edge AI acceler- ators’ capability and provide future design directions and trends for other applications with chal- lenging constraints. -

Survey and Benchmarking of Machine Learning Accelerators

1 Survey and Benchmarking of Machine Learning Accelerators Albert Reuther, Peter Michaleas, Michael Jones, Vijay Gadepally, Siddharth Samsi, and Jeremy Kepner MIT Lincoln Laboratory Supercomputing Center Lexington, MA, USA freuther,pmichaleas,michael.jones,vijayg,sid,[email protected] Abstract—Advances in multicore processors and accelerators components play a major role in the success or failure of an have opened the flood gates to greater exploration and application AI system. of machine learning techniques to a variety of applications. These advances, along with breakdowns of several trends including Moore’s Law, have prompted an explosion of processors and accelerators that promise even greater computational and ma- chine learning capabilities. These processors and accelerators are coming in many forms, from CPUs and GPUs to ASICs, FPGAs, and dataflow accelerators. This paper surveys the current state of these processors and accelerators that have been publicly announced with performance and power consumption numbers. The performance and power values are plotted on a scatter graph and a number of dimensions and observations from the trends on this plot are discussed and analyzed. For instance, there are interesting trends in the plot regarding power consumption, numerical precision, and inference versus training. We then select and benchmark two commercially- available low size, weight, and power (SWaP) accelerators as these processors are the most interesting for embedded and Fig. 1. Canonical AI architecture consists of sensors, data conditioning, mobile machine learning inference applications that are most algorithms, modern computing, robust AI, human-machine teaming, and users (missions). Each step is critical in developing end-to-end AI applications and applicable to the DoD and other SWaP constrained users. -

Efficient Management of Scratch-Pad Memories in Deep Learning

Efficient Management of Scratch-Pad Memories in Deep Learning Accelerators Subhankar Pal∗ Swagath Venkataramaniy Viji Srinivasany Kailash Gopalakrishnany ∗ y University of Michigan, Ann Arbor, MI IBM TJ Watson Research Center, Yorktown Heights, NY ∗ y [email protected] [email protected] fviji,[email protected] Abstract—A prevalent challenge for Deep Learning (DL) ac- TABLE I celerators is how they are programmed to sustain utilization PERFORMANCE IMPROVEMENT USING INTER-NODE SPM MANAGEMENT. Incep Incep Res Multi- without impacting end-user productivity. Little prior effort has Alex VGG Goog SSD Res Mobile Squee tion- tion- Net- Head Geo been devoted to the effective management of their on-chip Net 16 LeNet 300 NeXt NetV1 zeNet PTB v3 v4 50 Attn Mean Scratch-Pad Memory (SPM) across the DL operations of a 1 SPM 1.04 1.19 1.94 1.64 1.58 1.75 1.31 3.86 5.17 2.84 1.02 1.06 1.76 Deep Neural Network (DNN). This is especially critical due to 1-Step 1.04 1.03 1.01 1.10 1.11 1.33 1.18 1.40 2.84 1.57 1.01 1.02 1.24 trends in complex network topologies and the emergence of eager execution. This work demonstrates that there exists up to a speedups of 12 ConvNets, LSTM and Transformer DNNs [18], 5.2× performance gap in DL inference to be bridged using SPM management, on a set of image, object and language networks. [19], [21], [26]–[33] compared to the case when there is no We propose OnSRAM, a novel SPM management framework SPM management, i.e. -

Infiltration of AI/ML in Particle Physics Aspects of Data Handling

Infiltration of AI/ML in Particle Physics Aspects of data handling Snowmass Community Planning Meeting Oct 5-8, 2020 Jean-Roch Vlimant [email protected] @vlimant Outline I.Motivations for Machine Learning II.Path to the Future of AI in HEP AI/ML in HEP, J-R Vlimant, Snowmass CPM !2 Motivations for Using Machine Learning in High Energy Physics and elsewhere ... 8/27/20 AI/ML in HEP, J-R Vlimant, Snowmass CPM !3 Machine Learning in Industry https://www.nvidia.com/en-us/deep-learning-ai/ http://www.shivonzilis.com/machineintelligence ● Prominent field in industry nowadays ● Lots of data, lots of applications, lots of potential use cases, lots of money ➔ Knowing machine learning can open significantly career horizons !4 8/27/20 AI/ML in HEP, J-R Vlimant, Snowmass CPM Learning to Control Learning to Walk via Deep Reinforcement Learning https://arxiv.org/abs/1812.11103 Mastering the game of Go with deep neural networks and tree search, https://doi.org/10.1038/nature16961 Modern machine learning boosts control technologies. AI, gaming, robotic, self-driving vehicle, etc. AI/ML in HEP, J-R Vlimant, Snowmass CPM !5 Learning from Complexity Machine learning model can extract information from complex dataset. More classical algorithm counter part may take years of development. !6 8/27/20 AI/ML in HEP, J-R Vlimant, Snowmass CPM Learn Physics P. Komiske, E. Metodiev, J. Thaler, https://arxiv.org/abs/1810.05165 Machine Learning can help understand Physics. !7 8/27/20 AI/ML in HEP, J-R Vlimant, Snowmass CPM Use Physics A. -

Ai Accelerator Ecosystem: an Overview

AI ACCELERATOR ECOSYSTEM: AN OVERVIEW DAVID BURNETTE, DIRECTOR OF ENGINEERING, MENTOR, A SIEMENS BUSINESS HIGH-LEVEL SYNTHESIS WHITEPAPER www.mentor.com AI Accelerator Ecosystem: An Overview One of the fastest growing areas of hardware and software design is Artificial Intelligence (AI)/Machine Learning (ML), fueled by the demand for more autonomous systems like self-driving vehicles and voice recognition for personal assistants. Many of these algorithms rely on convolutional neural networks (CNNs) to implement deep learning systems. While the concept of convolution is relatively straightforward, the application of CNNs to the ML domain has yielded dozens of different neural network approaches. While these networks can be executed in software on CPUs/GPUs, the power requirements for these solutions make them impractical for most inferencing applications, the majority of which involve portable, low-power devices. To improve the power/performance, hardware teams are forming to create ML hardware acceleration blocks. However, the process of taking any one of these compute-intensive networks into hardware, especially energy-efficient hardware, is a time consuming process if the team employs a traditional RTL design flow. Consider all of these interdependent activities using a traditional flow: ■ Expressing the algorithm correctly in RTL. ■ Choosing the optimal bit-widths for kernel weights and local storage to meet the memory budget. ■ Designing the microarchitecture to have a low enough latency to be practical for the target application, while determining how the accelerator communicates across the system bus without killing the latency the team just fought for. ■ Verifying the algorithm early on and throughout the implementation process, especially in the context of the entire system. -

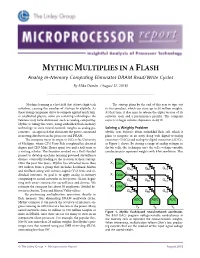

MYTHIC MULTIPLIES in a FLASH Analog In-Memory Computing Eliminates DRAM Read/Write Cycles

MYTHIC MULTIPLIES IN A FLASH Analog In-Memory Computing Eliminates DRAM Read/Write Cycles By Mike Demler (August 27, 2018) ................................................................................................................... Machine learning is a hot field that attracts high-tech The startup plans by the end of this year to tape out investors, causing the number of startups to explode. As its first product, which can store up to 50 million weights. these young companies strive to compete against much larg- At that time, it also aims to release the alpha version of its er established players, some are revisiting technologies the software tools and a performance profiler. The company veterans may have dismissed, such as analog computing. expects to begin volume shipments in 4Q19. Mythic is riding this wave, using embedded flash-memory technology to store neural-network weights as analog pa- Solving a Weighty Problem rameters—an approach that eliminates the power consumed Mythic uses Fujitsu’s 40nm embedded-flash cell, which it in moving data between the processor and DRAM. plans to integrate in an array along with digital-to-analog The company traces its origin to 2012 at the University converters (DACs) and analog-to-digital converters (ADCs), of Michigan, where CTO Dave Fick completed his doctoral as Figure 1 shows. By storing a range of analog voltages in degree and CEO Mike Henry spent two and a half years as the bit cells, the technique uses the cell’s voltage-variable a visiting scholar. The founders worked on a DoD-funded conductance to represent weights with 8-bit resolution. This project to develop machine-learning-powered surveillance drones, eventually leading to the creation of their startup. -

Synopsys Insight

Synopsys Insight DESIGNING A SMARTER FUTURE FROM SILICON TO SOFTWARE Subscribe Issue 2 | 2016 Smart, Secure Everything …from Silicon to Software EDA Tools Semiconductor IP Software Integrity Verify Software Bring-Up IP for the Era of FinFET and Software Is Everywhere — And on Your IC Design Before Smart Designs So Are the Vulnerabilities Sending It to the Fab From Silicon to Software: A Agile Development for How to Create a Testbench Quick Guide to Securing IoT Application Security Managers With Synopsys Verification IP Edge Devices and UVM in 5 Steps Addressing Physical Design Challenges in the Age of Customer Spotlight Additional Resources FinFET and Smarter Design Multi-Level Physical Hierarchy Movidius Keynotes IC Compiler Events Floorplanning vs. Two-Level II Technology Symposium With Floorplanning Vision of Smart, Connected Seminars Machines and Networks Webinars Synopsys Users Group (SNUG) Contact us: [email protected] Customer Spotlight Movidius Keynotes IC Compiler II Technology Symposium With Vision of Smart, Connected Machines and Networks Mike Santarini, Director of Corporate Communications Strategy, Synopsys “Is it a Chihuahua’s face or a blueberry muffin?” That is one of many problems that can be solved when deep neural networks are implemented close to the image sensors at the network’s edge, where SoCs with smart vision sensing can perform low-power image classification—a system envisioned by the high-tech industry now striving to bring higher intelligence to everything connected. In the keynote at the Synopsys IC Compiler II Technology Symposium (where Intel, Samsung, Broadcom, Mellanox and ecosystem partners ARM and TSMC also presented), Movidius’ vice president of marketing, Gary Brown, captivated the audience of 250 IC design experts by laying out Movidius’ vision of how a mix of innovative architectural design and advanced IC design tools (such as Synopsys’ IC Compiler II™) is enabling companies like Movidius to implement SoCs with greater algorithmic intelligence, advanced vision sensing, and deep learning techniques.