Input Technologies and Techniques

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Desktop Migration and Administration Guide

Red Hat Enterprise Linux 7 Desktop Migration and Administration Guide: GNOME 3 desktop migration planning, deployment, configuration, and administration in RHEL 7. Last Updated: 2021-05-05. Marie Doleželová, Red Hat Customer Content Services, [email protected]; Petr Kovář, Red Hat Customer Content Services, [email protected]; Jana Heves, Red Hat Customer Content Services. Legal Notice: Copyright © 2018 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

Chapter 2 3D User Interfaces: History and Roadmap

CHAPTER 2 3D User Interfaces: History and Roadmap. Three-dimensional UI design is not a traditional field of research with well-defined boundaries. Like human–computer interaction (HCI), it draws from many disciplines and has links to a wide variety of topics. In this chapter, we briefly describe the history of 3D UIs to set the stage for the rest of the book. We also present a 3D UI “roadmap” that positions the topics covered in this book relative to associated areas. After reading this chapter, you should have an understanding of the origins of 3D UIs and its relation to other fields, and you should know what types of information to expect from the remainder of this book. 2.1. History of 3D UIs. The graphical user interfaces (GUIs) used in today’s personal computers have an interesting history. Prior to 1980, almost all interaction with computers was based on typing complicated commands using a keyboard. The display was used almost exclusively for text, and when graphics were used, they were typically noninteractive. But around 1980, several technologies, such as the mouse, inexpensive raster graphics displays, and reasonably priced personal computer parts, were all mature enough to enable the first GUIs (such as the Xerox Star). With the advent of GUIs, UI design and HCI in general became a much more important research area, since the research affected everyone using computers. HCI is an interdisciplinary field that draws from existing knowledge in perception, cognition, linguistics, human factors, ethnography, graphic design, and other areas. -

Input for WinForms

ComponentOne Input for WinForms ComponentOne, a division of GrapeCity 201 South Highland Avenue, Third Floor Pittsburgh, PA 15206 USA Website: http://www.componentone.com Sales: [email protected] Telephone: 1.800.858.2739 or 1.412.681.4343 (Pittsburgh, PA USA Office) Trademarks The ComponentOne product name is a trademark and ComponentOne is a registered trademark of GrapeCity, Inc. All other trademarks used herein are the properties of their respective owners. Warranty ComponentOne warrants that the media on which the software is delivered is free from defects in material and workmanship, assuming normal use, for a period of 90 days from the date of purchase. If a defect occurs during this time, you may return the defective media to ComponentOne, along with a dated proof of purchase, and ComponentOne will replace it at no charge. After 90 days, you can obtain a replacement for the defective media by sending it and a check for $25 (to cover postage and handling) to ComponentOne. Except for the express warranty of the original media on which the software is delivered is set forth here, ComponentOne makes no other warranties, express or implied. Every attempt has been made to ensure that the information contained in this manual is correct as of the time it was written. ComponentOne is not responsible for any errors or omissions. ComponentOne’s liability is limited to the amount you paid for the product. ComponentOne is not liable for any special, consequential, or other damages for any reason. Copying and Distribution While you are welcome to make backup copies of the software for your own use and protection, you are not permitted to make copies for the use of anyone else. -

The Three-Dimensional User Interface

32 The Three-Dimensional User Interface Hou Wenjun Beijing University of Posts and Telecommunications China 1. Introduction This chapter introduced the three-dimensional user interface (3D UI). With the emergence of Virtual Environment (VE), augmented reality, pervasive computing, and other "desktop disengage" technology, 3D UI is constantly exploiting an important area. However, for most users, the 3D UI based on desktop is still a part that can not be ignored. This chapter interprets what is 3D UI, the importance of 3D UI and analyses some 3D UI application. At the same time, according to human-computer interaction strategy and research methods and conclusions of WIMP, it focus on desktop 3D UI, sums up some design principles of 3D UI. From the principle of spatial perception of people, spatial cognition, this chapter explained the depth clues and other theoretical knowledge, and introduced Hierarchical Semantic model of “UE”, Scenario-based User Behavior Model and Screen Layout for Information Minimization which can instruct the design and development of 3D UI. This chapter focuses on basic elements of 3D Interaction Behavior: Manipulation, Navigation, and System Control. It described in 3D UI, how to use manipulate the virtual objects effectively by using Manipulation which is the most fundamental task, how to reduce the user's cognitive load and enhance the user's space knowledge in use of exploration technology by using navigation, and how to issue an order and how to request the system for the implementation of a specific function and how to change the system status or change the interactive pattern by using System Control. -

What Is Interaction for Data Visualization? Evanthia Dimara, Charles Perin

What is Interaction for Data Visualization? Evanthia Dimara, Charles Perin. To cite this version: Evanthia Dimara, Charles Perin. What is Interaction for Data Visualization?. IEEE Transactions on Visualization and Computer Graphics, Institute of Electrical and Electronics Engineers, 2020, 26 (1), pp.119 - 129. 10.1109/TVCG.2019.2934283. hal-02197062. HAL Id: hal-02197062 https://hal.archives-ouvertes.fr/hal-02197062 Submitted on 30 Jul 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Abstract: Interaction is fundamental to data visualization, but what “interaction” means in the context of visualization is ambiguous and confusing. We argue that this confusion is due to a lack of consensual definition. To tackle this problem, we start by synthesizing an inclusive view of interaction in the visualization community – including insights from information visualization, visual analytics and scientific visualization, as well as the input of both senior and junior visualization researchers. Once this view takes shape, we look at how interaction is defined in the field of human-computer interaction (HCI). By extracting commonalities and differences between the views of interaction in visualization and in HCI, we synthesize a definition of interaction for visualization. -

A Review of User Interface Design for Interactive Machine Learning

A Review of User Interface Design for Interactive Machine Learning. JOHN J. DUDLEY, University of Cambridge, United Kingdom; PER OLA KRISTENSSON, University of Cambridge, United Kingdom. Interactive Machine Learning (IML) seeks to complement human perception and intelligence by tightly integrating these strengths with the computational power and speed of computers. The interactive process is designed to involve input from the user but does not require the background knowledge or experience that might be necessary to work with more traditional machine learning techniques. Under the IML process, non-experts can apply their domain knowledge and insight over otherwise unwieldy datasets to find patterns of interest or develop complex data driven applications. This process is co-adaptive in nature and relies on careful management of the interaction between human and machine. User interface design is fundamental to the success of this approach, yet there is a lack of consolidated principles on how such an interface should be implemented. This article presents a detailed review and characterisation of Interactive Machine Learning from an interactive systems perspective. We propose and describe a structural and behavioural model of a generalised IML system and identify solution principles for building effective interfaces for IML. Where possible, these emergent solution principles are contextualised by reference to the broader human-computer interaction literature. Finally, we identify strands of user interface research key to unlocking more efficient and productive non-expert interactive machine learning applications. CCS Concepts: • Human-centered computing → HCI theory, concepts and models; Additional Key Words and Phrases: Interactive machine learning, interface design. ACM Reference Format: John J. -

ActionScript 3.0 Cookbook

ActionScript 3.0 Cookbook By Joey Lott, Darron Schall, Keith Peters. Publisher: O'Reilly. Pub Date: October 01, 2006. ISBN: 0-596-52695-4. Pages: 592. Well before Ajax and Microsoft's Windows Presentation Foundation hit the scene, Macromedia offered the first method for building web pages with the responsiveness and functionality of desktop programs with its Flash-based "Rich Internet Applications". Now, new owner Adobe is taking Flash and its powerful capabilities beyond the Web and making it a full-fledged development environment. Rather than focus on theory, the ActionScript 3.0 Cookbook concentrates on the practical application of ActionScript, with more than 300 solutions you can use to solve a wide range of common coding dilemmas. You'll find recipes that show you how to: Detect the user's Flash Player version or their operating system; Build custom classes; Format dates and currency types; Work with strings; Build user interface components; Work with audio and video; Make remote procedure calls using Flash Remoting and web services; Load, send, and search XML data; And much, much more ... Each code recipe presents the Problem, Solution, and Discussion of how you can use it in other ways or personalize it for your own needs, and why it works. You can quickly locate the recipe that most closely matches your situation and get the solution without reading the whole book to understand the underlying code. Solutions progress from short recipes for small problems to more complex scripts for thornier riddles, and the discussions offer a deeper analysis for resolving similar issues in the future, along with possible design choices and ramifications. -

Interaction Design and Children

Foundations and Trends® in Human–Computer Interaction Vol. 1, No. 4 (2007) 277–392 © 2008 J. P. Hourcade DOI: 10.1561/1100000006 Interaction Design and Children Juan Pablo Hourcade University of Iowa, USA, [email protected] Abstract Children are increasingly using computer technologies as reflected in reports of computer use in schools in the United States. Given the greater exposure of children to these technologies, it is imperative that they be designed taking into account children’s abilities, interests, and developmental needs. This survey aims to contribute toward this goal through a review of research on children’s cognitive and motor development, safety issues related to technologies and design methodologies and principles. It also provides an overview of current research trends in the field of interaction design and children and identifies challenges for future research. To understand children’s developmental needs it is important to be aware of the factors that affect children’s intellectual development. This survey analyzes the relevance of constructivist, socio-cultural, and other modern theories with respect to the design of technologies for children. It also examines the significance of research on children’s cognitive development in terms of perception, memory, symbolic representation, problem solving, and language. Since interacting with technologies most often involves children’s hands this survey also reviews literature on children’s fine motor development including manipulation and reaching movements. Just as it is important to know how to aid children’s development it is also crucial to avoid harming development. This survey summarizes research on how technologies can negatively affect children’s physical, intellectual, social, emotional, and moral development. -

Windows PowerShell Best Practices

Windows PowerShell Best Practices. Expert recommendations, pragmatically applied. Automate system administration using Windows PowerShell best practices, and optimize your operational efficiency. With this practical guide, Windows PowerShell expert and instructor Ed Wilson delivers field-tested tips, real-world examples, and candid advice culled from administrators across a range of business and technical scenarios. If you’re an IT professional with Windows PowerShell experience, this book is ideal. Discover how to: • Use Windows PowerShell to automate Active Directory tasks • Explore available WMI classes and methods with CIM cmdlets • Identify and track scripting opportunities to avoid duplication • Use functions to encapsulate business logic and reuse code • Design your script’s best input method and output destination • Test scripts by checking their syntax and performance • Choose the most suitable method for running remote commands • Manage software services with Desired State Configuration. About the Author: Ed Wilson, MCSE, CISSP, is a well-known scripting expert and author of “Hey Scripting Guy!”, one of the most popular blogs on Microsoft TechNet. He’s written several books on Windows scripting for Microsoft Press, including Windows PowerShell 2.0 Best Practices and Windows PowerShell Scripting Guide. microsoft.com/mspress ISBN 978-0-7356-6649-8 U.S.A. $59.99 Canada $68.99 [Recommended] Operating Systems/Windows Server. Published with the authorization of Microsoft Corporation by: O’Reilly Media, Inc. -

PowerShell Notes for Professionals™

PowerShell Notes for Professionals™ 100+ pages of professional hints and tricks. GoalKicker.com Free Programming Books. Disclaimer: This is an unofficial free book created for educational purposes and is not affiliated with official PowerShell™ group(s) or company(s). All trademarks and registered trademarks are the property of their respective owners. Contents: About; Chapter 1: Getting started with PowerShell; Section 1.1: Allow scripts stored on your machine to run un-signed; Section 1.2: Aliases & Similar Functions; Section 1.3: The Pipeline - Using Output from a PowerShell cmdlet; Section 1.4: Calling .Net Library Methods; Section 1.5: Installation or Setup; Section 1.6: Commenting -

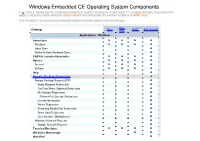

Windows Embedded CE Operating System Components

Windows Embedded CE Operating System Components Core Catalog Core C6G** Professional Plus Applications - End User ActiveSync File Sync Inbox Sync Pocket Outlook Database Sync CAB File Installer/Uninstaller Games Freecell Solitaire Help Remote Desktop Connection Remote Desktop Protocol (RDP) Audio Playback Redirection Cut/Copy/Paste Clipboard Redirection File Storage Redirection Filtered File Storage Redirection License Information Printer Redirection Serial and Parallel Port Redirection Smart Card Redirection User Interface Dialog Boxes Windows Network Projector Sample Network Projector Terminal Emulator Windows Messenger WordPad PRO version Applications and Services Development .NET Compact Framework 2.0 .NET Compact Framework 2.0 .NET Compact Framework 2.0 String Resources .Net Compact Framework 2.0 Localized String Resources String Resources Chinese(PRC) String Resources Chinese(Taiwan) String Resources French(France) String Resources German(Germany) String Resources Italian(Italy) String Resources Japanese(Japan) String Resources Korean(Korea) String Resources Portuguese(Brazil) String Resources Spanish(International Sort) .NET Compact Framework 2.0 - Headless .NET Compact Framework 2.0 String Resources - Headless .Net Compact Framework 2.0 Localized String Resources - Headless String Resources Chinese(PRC) – Headless String Resources Chinese(Taiwan) – Headless String Resources French(France) – Headless String Resources German(Germany) – Headless String Resources Italian(Italy) – Headless String Resources Japanese(Japan) -

Techniques and Architectures for 3D Interaction

Techniques and Architectures for 3D Interaction. About the cover: The cover depicts the embedding of 3D structures in both space and mind. At a quick glance or when viewed at an angle or curved page, the structures appear carefully organized. Closer inspection reveals that the shapes are all tilted at different angles. The background image was ray traced in the Blender 3D content creation suite, using subsurface scattering for the curved surface and ambient occlusion to emphasize structure. The final cover was typeset in Inkscape, with the BitStream Vera Serif font. Techniques and Architectures for 3D Interaction. Dissertation for the purpose of obtaining the degree of doctor at the Technische Universiteit Delft, by authority of the Rector Magnificus prof. dr. ir. J.T. Fokkema, chairman of the Board for Doctorates, to be defended publicly on Wednesday 2 September 2009 at 10:00 by Gerwin DE HAAN, computer science engineer, born in Rotterdam. This dissertation has been approved by the promotor: Prof. dr. ir. F.W. Jansen. Copromotor: Ir. F.H. Post. Composition of the doctoral committee: Rector Magnificus, chairman; Prof. dr. ir. F.W. Jansen, Technische Universiteit Delft, promotor; Ir. F.H. Post, Technische Universiteit Delft, copromotor; Prof. dr. I.E.J.R. Heynderickx, Technische Universiteit Delft; Prof. dr. A. van Deursen, Technische Universiteit Delft; Prof. dr. ir. P.J.M. van Oosterom, Technische Universiteit Delft; Prof. dr. ir. R. van Liere, Technische Universiteit Eindhoven; Prof. dr. B. Fröhlich, Bauhaus-Universität Weimar. This work was carried out in the ASCI graduate school. ASCI dissertation series number 180. Part of this research has been funded by the Dutch BSIK/BRICKS project (MSV2).