Overcoming Limitations of the Hololens for Use in Product Assembly

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

-

Desktop Migration and Administration Guide

Red Hat Enterprise Linux 7 Desktop Migration and Administration Guide GNOME 3 desktop migration planning, deployment, configuration, and administration in RHEL 7 Last Updated: 2021-05-05 Red Hat Enterprise Linux 7 Desktop Migration and Administration Guide GNOME 3 desktop migration planning, deployment, configuration, and administration in RHEL 7 Marie Doleželová Red Hat Customer Content Services [email protected] Petr Kovář Red Hat Customer Content Services [email protected] Jana Heves Red Hat Customer Content Services Legal Notice Copyright © 2018 Red Hat, Inc. This document is licensed by Red Hat under the Creative Commons Attribution-ShareAlike 3.0 Unported License. If you distribute this document, or a modified version of it, you must provide attribution to Red Hat, Inc. and provide a link to the original. If the document is modified, all Red Hat trademarks must be removed. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

Input for Winforms

ComponentOne Input for WinForms ComponentOne, a division of GrapeCity 201 South Highland Avenue, Third Floor Pittsburgh, PA 15206 USA Website: http://www.componentone.com Sales: [email protected] Telephone: 1.800.858.2739 or 1.412.681.4343 (Pittsburgh, PA USA Office) Trademarks The ComponentOne product name is a trademark and ComponentOne is a registered trademark of GrapeCity, Inc. All other trademarks used herein are the properties of their respective owners. Warranty ComponentOne warrants that the media on which the software is delivered is free from defects in material and workmanship, assuming normal use, for a period of 90 days from the date of purchase. If a defect occurs during this time, you may return the defective media to ComponentOne, along with a dated proof of purchase, and ComponentOne will replace it at no charge. After 90 days, you can obtain a replacement for the defective media by sending it and a check for $2 5 (to cover postage and handling) to ComponentOne. Except for the express warranty of the original media on which the software is delivered is set forth here, ComponentOne makes no other warranties, express or implied. Every attempt has been made to ensure that the information contained in this manual is correct as of the time it was written. ComponentOne is not responsible for any errors or omissions. ComponentOne’s liability is limited to the amount you paid for the product. ComponentOne is not liable for any special, consequential, or other damages for any reason. Copying and Distribution While you are welcome to make backup copies of the software for your own use and protection, you are not permitted to make copies for the use of anyone else. -

Actionscript 3.0 Cookbook.Pdf

ActionScript 3.0 Cookbook By Joey Lott, Darron Schall, Keith Peters ............................................... Publisher: O'Reilly Pub Date: October 01, 2006 ISBN: 0-596-52695-4 Pages: 592 Table of Contents | Index Well before Ajax and Microsoft's Windows Presentation Foundation hit the scene, Macromedia offered the first method for building web pages with the responsiveness and functionality of desktop programs with its Flash-based "Rich Internet Applications". Now, new owner Adobe is taking Flash and its powerful capabilities beyond the Web and making it a full-fledged development environment. Rather than focus on theory, the ActionScript 3.0 Cookbook concentrates on the practical application of ActionScript, with more than 300 solutions you can use to solve a wide range of common coding dilemmas. You'll find recipes that show you how to: Detect the user's Flash Player version or their operating system Build custom classes Format dates and currency types Work with strings Build user interface components Work with audio and video Make remote procedure calls using Flash Remoting and web services Load, send, and search XML data And much, much more ... Each code recipe presents the Problem, Solution, and Discussion of how you can use it in other ways or personalize it for your own needs, and why it works. You can quickly locate the recipe that most closely matches your situation and get the solution without reading the whole book to understand the underlying code. Solutions progress from short recipes for small problems to more complex scripts for thornier riddles, and the discussions offer a deeper analysis for resolving similar issues in the future, along with possible design choices and ramifications. -

Windows Powershell Best Practices Windows Powershell Best Practices

Windows PowerShell Best Practices Windows PowerShell Best Practices Expert recommendations, pragmatically applied Automate system administration using Windows PowerShell best practices—and optimize your operational efficiency. With this About the Author practical guide, Windows PowerShell expert and instructor Ed Ed Wilson, MCSE, CISSP, is a well-known Wilson delivers field-tested tips, real-world examples, and candid scripting expert and author of “Hey Windows Scripting Guy!”—one of the most popular advice culled from administrators across a range of business and blogs on Microsoft TechNet. He’s written technical scenarios. If you’re an IT professional with Windows several books on Windows scripting PowerShell experience, this book is ideal. for Microsoft Press, including Windows PowerShell 2.0 Best Practices and Windows PowerShell Scripting Guide. Discover how to: PowerShell • Use Windows PowerShell to automate Active Directory tasks • Explore available WMI classes and methods with CIM cmdlets • Identify and track scripting opportunities to avoid duplication • Use functions to encapsulate business logic and reuse code • Design your script’s best input method and output destination • Test scripts by checking their syntax and performance • Choose the most suitable method for running remote commands • Manage software services with Desired State Configuration Wilson BEST PRACTICES microsoft.com/mspress ISBN 978-0-7356-6649-8 U.S.A. $59.99 55999 Canada $68.99 [Recommended] 9 780735 666498 Operating Systems/Windows Server Celebrating 30 years! Ed Wilson 666498_Win_PowerShell_Best_Practices.indd 1 4/11/14 10:30 AM Windows PowerShell Best Practices Ed Wilson 666498_book.indb 1 12/20/13 10:50 AM Published with the authorization of Microsoft Corporation by: O’Reilly Media, Inc. -

Powershell Notes for Professionals ™

PowerShell Notes for Professionals ™ PowerShellNotes for Professionals 100+ pages of professional hints and tricks Disclaimer This is an unocial free book created for educational purposes and is GoalKicker.com not aliated with ocial PowerShell™ group(s) or company(s). Free Programming Books All trademarks and registered trademarks are the property of their respective owners Contents About ................................................................................................................................................................................... 1 Chapter 1: Getting started with PowerShell .................................................................................................... 2 Section 1.1: Allow scripts stored on your machine to run un-signed ........................................................................ 2 Section 1.2: Aliases & Similar Functions ....................................................................................................................... 2 Section 1.3: The Pipeline - Using Output from a PowerShell cmdlet ........................................................................ 3 Section 1.4: Calling .Net Library Methods .................................................................................................................... 4 Section 1.5: Installation or Setup .................................................................................................................................. 5 Section 1.6: Commenting .............................................................................................................................................. -

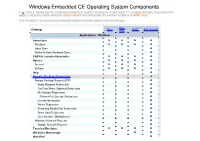

Windows Embedded CE Operating System Components

Windows Embedded CE Operating System Components Core Catalog Core C6G** Professional Plus Applications - End User ActiveSync File Sync Inbox Sync Pocket Outlook Database Sync CAB File Installer/Uninstaller Games Freecell Solitaire Help Remote Desktop Connection Remote Desktop Protocol (RDP) Audio Playback Redirection Cut/Copy/Paste Clipboard Redirection File Storage Redirection Filtered File Storage Redirection License Information Printer Redirection Serial and Parallel Port Redirection Smart Card Redirection User Interface Dialog Boxes Window s Netw ork Projector Sample Netw ork Projector Terminal Emulator Windows Messenger WordPad PRO version Applications and Services Development .NET Compact Framew ork 2.0 .NET Compact Framew ork 2.0 .NET Compact Framew ork 2.0 String Resources .Net Compact Framework 2.0 Localized String Resources String Resources Chinese(PRC) String Resources Chinese(Taiw an) String Resources French(France) String Resources German(Germany) String Resources Italian(Italy) String Resources Japanese(Japan) String Resources Korean(Korea) String Resources Portuguese(Brazil) String Resources Spanish(International Sort) .NET Compact Framew ork 2.0 - Headless .NET Compact Framew ork 2.0 String Resources - Headless .Net Compact Framework 2.0 Localized String Resources - Headless String Resources Chinese(PRC) – Headless String Resources Chinese(Taiw an) – Headless String Resources French(France) – Headless String Resources German(Germany) – Headless String Resources Italian(Italy) – Headless String Resources Japanese(Japan) -

Accessibility in Windows 8

Accessibility in Windows 8 Overview of accessibility in Windows 8, tutorials, and keyboard shortcuts Published by Microsoft Corporation, Trustworthy Computing One Microsoft Way Redmond, Washington 98052 Copyright 2012 Microsoft Corporation. All rights reserved. No part of the contents of this document may be reproduced or transmitted in any form or by any means without the written permission of the publisher. For permissions, visit www.microsoft.com. Microsoft and Windows are trademarks of Microsoft Corporation in the United States and/or other countries. Find further information on Microsoft Trademarks (http://www.microsoft.com/about/legal/en/us/IntellectualProperty/Trademarks/EN-US.aspx). Table of Contents Overview of Accessibility in Windows 8 .................................................................................................. 7 What’s new in Windows 8 accessibility ...................................................................................................................................7 Narrator and touch-enabled devices .................................................................................................................................................. 7 Magnifier and touch-enabled devices ............................................................................................................................................... 9 Ease of Access .............................................................................................................................................. 12 Make -

Javafx 2.0 Dr

1 <Insert Picture Here> JavaFX 2.0 Dr. Stefan Schneider Chief Technologist ISV Engineering The following is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle. 3 JavaFX 2.0 Agenda • Overview <Insert Picture Here> • Details • Architecture • Roadmap 4 What is JavaFX? 5 JavaFX is the evolution of the Java rich client platform, designed to address the needs of today’s and tomorrow’s customers. 6 Who is JavaFX for? 7 Target Developers • Current Java Developers • Former Java Developers • Web Developers • New Developers 8 What makes JavaFX special? 9 JavaFX defines itself as being a well designed, cross platform application development platform. It is fast. It is flexible. It delivers exceptional value to developers and end users. It is competitive. Most importantly, it leverages the massive existing Java eco system. Nobody else offers the complete package – exceptional developer experience, user experience, cross platform development and massive ecosystem. 10 TIOBE Index Popularity of a Language 11 The Java Eco System • Over 9 million Java developers • Java is taught in universities around the globe • Every major company uses Java 12 Value Proposition for Client Java • Leverage existing Java investment • Easier to find and train developers • Reduce risk by reducing complexity 13 Why is JavaFX important? 14 JavaFX encourages companies to use Java on the client and the server. -

A Chinese Mobile Phone Input Method Based on the Dynamic and Self-Study Language Model

A Chinese Mobile Phone Input Method Based on the Dynamic and Self-study Language Model Zhu Qiaoming, Li Peifeng, Gu Ping, Qian Peide School of Computer Science & Technology of Soochow University,Suzhou,215006 {qmzhu, pfli, pgu ,pdqian}@suda.edu.cn Abstract. This paper birefly introduces a Chinese digital input method named as CKCDIM (CKC Digital Input Method) and then applies it to the Symbian OS as an example, and it also proposes a framework of input method which adopted the Client/Server architecture for the handheld computers. To improve the performance of CKCDIM, this paper puts forward a dynamic and self-study language model which based on a general language model and user language model, and proposes two indexes which are the average number of pressed-keys (ANPK) and the hit rate of first characters (HRFC) to measure the performance of the input method. Meanwhile, this paper brings forward a modified Church-Gale smoothing method to reduce the size of general language model to meet the need of mobile phone. At last, the experiments prove that the dynamic and self-study language model is a steady model and can improve the performance of CKCDIM. Key Words: Chinese Digital Input Method, Architecture of Input Method, Dynamic and Self-study Language Model, HRFC, ANPK 1 Introduction With the developing of communication technology and the popularization of the mobile phone in China, the use of text message in mobile phone is growing rapidly. According to CCTV financial news report, the total number of Short Message Service use will grow from 300 billions in 2005 to 450 billions in 2006 in China. -

Silk4net User Guide Micro Focus 575 Anton Blvd., Suite 510 Costa Mesa, CA 92626

Silk Test 15.5 Silk4NET User Guide Micro Focus 575 Anton Blvd., Suite 510 Costa Mesa, CA 92626 Copyright © Micro Focus 2014. All rights reserved. Portions Copyright © 1992-2009 Borland Software Corporation (a Micro Focus company). MICRO FOCUS, the Micro Focus logo, and Micro Focus product names are trademarks or registered trademarks of Micro Focus IP Development Limited or its subsidiaries or affiliated companies in the United States, United Kingdom, and other countries. BORLAND, the Borland logo, and Borland product names are trademarks or registered trademarks of Borland Software Corporation or its subsidiaries or affiliated companies in the United States, United Kingdom, and other countries. All other marks are the property of their respective owners. 2014-06-26 ii Contents Licensing Information ........................................................................................7 Silk4NET ..............................................................................................................8 Silk Test Product Suite ........................................................................................................8 Product Notification Service ................................................................................................ 9 Contacting Micro Focus ...................................................................................................... 9 Information Needed by Micro Focus SupportLine .................................................. 10 What's New in Silk4NET ...................................................................................11 -

Ohiou1090939388.Pdf (573.32

A RE-CONFIGURABLE HARDWARE-IN-THE-LOOP FLIGHT SIMULATOR A thesis presented to the faculty of the Russ College of Engineering and Technology of Ohio University in partial fulfillment of the requirements for the degree Master of Science Eric Root June 2004 This Thesis Entitled A RE-CONFIGURABLE HARDWARE-IN-THE-LOOP FLIGHT SIMULATOR BY ERIC ROOT has been approved for the School of Electrical Engineering and Computer Science and the Russ College of Engineering and Technology by Maarten Uijt de Haag Assistant Professor of Electrical Engineering and Computer Science R. Dennis Irwin Dean, Russ College of Engineering and Technology ROOT, ERIC. M.S. June 2004. EECS A Re-Configurable Hardware-in-the-Loop Flight Simulator (70pp) Director of Thesis: Maarten Uijt de Haag This thesis describes the development of a fixed base flight simulator capable of hardware-in-the-loop testing of aviation electronics, or “avionics”. The system is composed of a pilot controlled 6 degree-of-freedom aircraft model, a flight display, and a hardware interface to an ARINC 429 avionics databus. Avionics can be connected to this databus for testing. These systems are combined in a modular fashion allowing easy re- configuration for use with various aircraft models, and testing of avionics using databuses other than ARINC 429. This modular design is achieved by using an aircraft model constructed in Matlab’s Simulink, and by relaying the model data to the display and ARINC 429 interface using the UDP network protocol. Approved: Maarten Uijt de Haag Assistant Professor of EECS Acknowledgments I would like to thank Dr. -

Red Hat Enterprise Linux 8 Using the Desktop Environment in RHEL 8

Red Hat Enterprise Linux 8 Using the desktop environment in RHEL 8 Configuring and customizing the GNOME 3 desktop environment on RHEL 8 Last Updated: 2021-09-10 Red Hat Enterprise Linux 8 Using the desktop environment in RHEL 8 Configuring and customizing the GNOME 3 desktop environment on RHEL 8 Legal Notice Copyright © 2021 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/ . In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. Node.js ® is an official trademark of Joyent.