Techniques Involved in Developing the Australian Climate Observations Reference Network – Surface Air Temperature (ACORN-SAT) Dataset

Recommended publications

Ships Observing Marine Climate a Catalogue of the Voluntary

WORLD METEOROLOGICAL ORGANIZATION INTERGOVERNMENTAL OCEANOGRAPHIC COMMISSION (OF UNESCO) MARINE METEOROLOGY AND RELATED OCEANOGRAPHIC ACTIVITIES REPORT NO. 25 SHIPS OBSERVING MARINE CLIMATE A CATALOGUE OF THE VOLUNTARY OBSERVING SHIPS PARTICIPATING IN THE VSOP-NA WMO/TD-No. 456 1991 NOTE The designations employed and the presentation of material in this publication do not imply the expression of any opinion whatsoever on the part of the Secretariats of the World Meteorological Organization and the Intergovernmental Oceanographic Commission concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries. Editorial note: This publication is an offset reproduction of a typescript submitted by the authors and has been produced without additional revision by the WMO and IOC Secretariats. Elizabeth C. Kent and Peter K. Taylor, James Rennell Centre for Ocean Circulation, Chilworth Research Park, Southampton, UK PREFACE Meteorological observations made onboard merchant vessels of the WMO voluntary observing ships (VOS) scheme, when transmitted to shore in real-time, are a substantial component of the Global Observing System of the World Weather Watch and are essential to the provision of marine meteorological services, as well as to meteorological analyses and forecasts generally. These observations are also recorded in ships' meteorological logbooks, for later exchange, archival and processing through the WMO Marine Climatological Summaries Scheme, and as such, they constitute an equally essential source of data for determining the climatology of the marine atmosphere and ocean surface, and for computing a variety of air-sea fluxes. -

Results from Public Consultation

1 Regional Plan (Update) 2016-2020 Results from Public Consultation 169 survey respondents: Which industry do you represent? Transport, logistics and warehousing 0.0%; Tourism, accommodation and food services 11.8%; Retail 2.4%; Public services 8.3%; Professional, scientific and technical services 14.8%; Other 5.9%; Not for profit 21.9%; Media and telecommunications 1.8%; Manufacturing 2.4%; Health care, aged care and social assistance 8.3%; Food growers and producers 4.1%; Financial and insurance services 3.6%; Electricity, gas, water and waste services 1.2%; Education and training 8.9%; Construction 0.6%; Arts 4.1%. Which local government area do you live in? Outside the region 3.0%; Coffs Harbour 35.5%; Bellingen 8.3%; Nambucca 13.6%; Kempsey 14.8%; Port Macquarie -… 18.9%; Greater Taree 5.9%. 2 Careers advice linked to traineeships and apprenticeships Issue: Youth unemployment is high and large numbers of young people leave the region after high school seeking career opportunities, however, skills gaps currently exist in many fields suited to traineeships and apprenticeships. Target outcome: To foster employment and retention of more young people by providing careers advice on local industries with employment potential, linked with more available traineeships and apprenticeships. Key points: - Our region’s youth unemployment rate is 50% higher than the NSW state average. - There is a decline in the number of apprenticeships being offered in the region. - The current school based traineeship process is overly complicated for students, teachers and employers. -

Response to Comments the Authors Thank the Reviewers for Their

Response to comments The authors thank the reviewers for their constructive comments, which provide the basis to improve the quality of the manuscript and dataset. We address all points in detail and reply to all comments below. We also updated SCDNA from V1 to V1.1 on Zenodo based on the reviewer’s comments. The modifications include adding a station source flag, adding original files for location-merged stations, and adding a quality control procedure based on the final SCDNA. SCDNA estimates are generally consistent between the two versions, with the total number of stations reduced from 27280 to 27276. Reviewer 1 General comment The manuscript presents and advertises a very interesting dataset of temperature and precipitation observations collected over several years in North America. The work is certainly well suited for the readership of ESSD and it is overall very important for the meteorological and climatological community. Furthermore, creation of quality controlled databases is an important contribution to the scientific community in the age of data science. I have a few points to consider before publication, which I recommend, listed below. 1. Measurement instruments: from my background, I am much closer to the instruments themselves (and their peculiarities and issues), as hardware tools. What I missed here was a description of the stations and their instruments. Questions like: which are the instruments deployed in the stations? How is precipitation measured (tipping buckets? buckets? Weighing gauges? Note for example that some instruments may have biases when measuring snowfall while others may not)? How is temperature measured? How is this different from station to station in your database? Response: We have added the descriptions of measurement instruments in both the manuscript and dataset documentation. -

Creating an Atmosphere for STEM Literacy in the Rural South Through

JOURNAL OF GEOSCIENCE EDUCATION 63, 105–115 (2015) Creating an Atmosphere for STEM Literacy in the Rural South Through Student-Collected Weather Data Lynn Clark,1,a Saswati Majumdar,1 Joydeep Bhattacharjee,2 and Anne Case Hanks3 ABSTRACT This paper is an examination of a teacher professional development program in northeast Louisiana, that provided 30 teachers and their students with the technology, skills, and content knowledge to collect data and explore weather trends. Data were collected from both continuous monitoring weather stations and simple school-based weather stations to better understand core disciplinary ideas connecting Life and Earth sciences. Using a curricular model that combines experiential and place-based educational approaches to create a rich and relevant atmosphere for STEM learning, the goal of the program was to empower teachers and their students to engage in ongoing data collection analysis that could contribute to greater understanding and ownership of the environment at the local and regional level. The program team used a mixed- methodological approach that examined implementation at the site level and student impact. Analysis of teacher and student surveys, teacher interviews and classroom observation data suggest that the level of implementation of the program related directly to the ways in which students were using the weather data to develop STEM literacy. In particular, making meaning out of the data by studying patterns, interpreting the numbers, and comparing with long-term data from other sites seemed to drive critical thinking and STEM literacy in those classrooms that fully implemented the program. Findings also suggest that the project has the potential to address the unique needs of traditionally underserved students in the rural south, most notably, those students in high-needs rural settings that rely on an agrarian economy. -

Premium Location Surcharge

Premium Location Surcharge The Premium Location Surcharge (PLS) is a levy applied on all rentals commencing at any Airport location throughout Australia. These charges are controlled by the Airport Authorities and are subject to change without notice.

LOCATION: PREMIUM LOCATION SURCHARGE
Adelaide Airport: 14% on all rental charges except fuel costs
Alice Springs Airport: 14.5% on time and kilometre charges
Armidale Airport: 9.5% on all rental charges except fuel costs
Avalon Airport: 12% on all rental charges except fuel costs
Ayers Rock Airport & City: 17.5% on time and kilometre charges
Ballina Airport: 11% on all rental charges except fuel costs
Bathurst Airport: 5% on all rental charges except fuel costs
Brisbane Airport: 14% on all rental charges except fuel costs
Broome Airport: 10% on time and kilometre charges
Bundaberg Airport: 10% on all rental charges except fuel costs
Cairns Airport: 14% on all rental charges except fuel costs
Canberra Airport: 18% on time and kilometre charges
Coffs Harbour Airport: 8% on all rental charges except fuel costs
Coolangatta Airport: 13.5% on all rental charges except fuel costs
Darwin Airport: 14.5% on time and kilometre charges
Emerald Airport: 10% on all rental charges except fuel costs
Geraldton Airport: 5% on all rental charges
Gladstone Airport: 10% on all rental charges except fuel costs
Grafton Airport: 10% on all rental charges except fuel costs
Hervey Bay Airport: 8.5% on all rental charges except fuel costs
Hobart Airport: 12% on all rental charges except fuel costs
Kalgoorlie Airport: 11.5% on all rental -

Danish Meteorological Institute Ministry of Transport

DANISH METEOROLOGICAL INSTITUTE MINISTRY OF TRANSPORT TECHNICAL REPORT 98-14 The Climate of The Faroe Islands - with Climatological Standard Normals, 1961-1990 John Cappelen and Ellen Vaarby Laursen COPENHAGEN 1998 Front cover picture Gásadalur located north west of Sørvágur on the western part of the island of Vágar. Heinanøv Fjeld, 813 m high can be seen in the north and Mykinesfjørdur in the west. The heliport is located to the right in the picture - near the river Dalsá. The photo was taken during a helicopter trip in May 1986. Photographer: Helge Faurby ISSN 0906-897X Contents 1. Introduction; 2. Weather and climate in the Faroe Islands; 3. Observations and methods (3.1. General methods; 3.2. Observations); 4. Station history and metadata; 5. Standard Normal Homogeneity Test (5.1. Background; 5.2. Testing for homogeneity); 6. Climatological normals -

Guide to Wave Analysis and Forecasting

WORLD METEOROLOGICAL ORGANIZATION GUIDE TO WAVE ANALYSIS AND FORECASTING 1998 (second edition) WMO-No. 702 Secretariat of the World Meteorological Organization – Geneva – Switzerland 1998 © 1998, World Meteorological Organization ISBN 92-63-12702-6 NOTE The designations employed and the presentation of material in this publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the World Meteorological Organization concerning the legal status of any country, territory, city or area, or of its authorities or concerning the delimitation of its frontiers or boundaries. CONTENTS FOREWORD; ACKNOWLEDGEMENTS; INTRODUCTION. Chapter 1 – AN INTRODUCTION TO OCEAN WAVES: 1.1 Introduction; 1.2 The simple linear wave; 1.3 Wave fields on the ocean. Chapter 2 – OCEAN SURFACE WINDS: 2.1 Introduction; 2.2 Sources of marine data; 2.3 Large-scale meteorological factors affecting ocean surface winds; 2.4 A marine boundary-layer parameterization; 2.5 Statistical methods. Chapter 3 – WAVE GENERATION AND DECAY: 3.1 Introduction; 3.2 Wind-wave growth; 3.3 Wave propagation; 3.4 Dissipation; 3.5 Non-linear interactions; 3.6 General notes on application. Chapter 4 – WAVE FORECASTING BY MANUAL METHODS: 4.1 Introduction; 4.2 Some empirical working procedures; 4.3 Computation of wind waves; 4.4 Computation of swell; 4.5 Manual computation of shallow-water effects. Chapter 5 – INTRODUCTION TO NUMERICAL WAVE MODELLING: 5.1 Introduction -

By: ESSA RAMADAN MOHAMMAD Superintendent of Stations Kuwait

By: ESSA RAMADAN MOHAMMAD, Superintendent of Stations, Kuwait Met. Department Geography and climate Kuwait consists mostly of desert, with little difference in elevation. It has nine islands, the largest of which is Bubiyan, which is linked to the mainland by a concrete bridge. Summers (April to October) are extremely hot and dry with temperatures exceeding 51 °C (124 °F) in Kuwait City several times during the hottest months of June, July and August. April and October are more moderate with temperatures over 40 °C uncommon. Winters (November through February) are cool with some precipitation and average temperatures around 13 °C (56 °F) with extremes from -2 °C to 27 °C. The spring season (March) is warm and pleasant with occasional thunderstorms. Surface coastal water temperatures range from 15 °C (59 °F) in February to 35 °C (95 °F) in August. The driest months are June through September, while the wettest are January through March. Thunderstorms and hailstorms are common in November, March and April when warm and moist Arabian Gulf air collides with cold air masses from Europe. One such thunderstorm in November 1997 dumped more than ten inches of rain on Kuwait. Kuwait Meteorology Department Meteorological Department: Forecasting Supervision; Climates Supervision; Stations & Upper Air Supervision; Communications Supervision; Maintenance Supervision. Stations and Upper Air Supervision: 1- Surface manned stations & AWOS; 2- Upper air stations & ozone. Kuwait Int. Airport Station In December 1962 one manned synoptic, climate and agro station started to report on a 24-hour basis and send data to WMO. Kuwait started to observe and report meteorological data in the early 1940s with the Kuwait British oil company, but most of the reports were very limited. -

National Report From

WORLD METEOROLOGICAL ORGANIZATION INTERGOVERNMENTAL OCEANOGRAPHIC COMMISSION (OF UNESCO) JCOMM SHIP OBSERVATIONS TEAM SECOND SESSION London, United Kingdom, 28 July – 1 August 2003 NATIONAL REPORTS OCA website only: http://www.wmo.ch/web/aom/marprog/Publications/publications.htm WMO/TD-No. 1170 2003 JCOMM Technical Report No. 20 CONTENTS Note: To go directly to a particular national report, click on the report in the "Contents". To return to "Contents", click on the return arrow ← in Word. Argentina; Australia; Canada; Croatia; France; Germany; Greece -

Avis Australia Commercial Vehicle Fleet and Location Guide

AVIS AUSTRALIA COMMERCIAL VEHICLES FLEET SHEET UTILITIES & 4WDS 4X2 SINGLE CAB UTE | A | MPAR 4X2 DUAL CAB UTE | L | MQMD 4X4 WAGON | E | FWND • Auto/Manual • Auto/Manual • Auto/Manual • ABS • ABS • ABS SPECIAL NOTES • Dual Airbags • Dual Airbags • Dual Airbags • Radio/CD • Radio/CD • Radio/CD The vehicles featured here should • Power Steering • Power Steering • Power Steering be used as a guide only. Dimensions, carrying capacities and accessories Tray: Tray: are nominal and vary from location 2.3m (L), 1.8m (W) 1.5m (L), 1.5m (W), 1.1m (wheelarch), tub/styleside to location. All vehicles and optional 4X4 SINGLE CAB UTE | B | MPBD 4X4 DUAL CAB UTE | D | MQND 4X4 DUAL CAB UTE CANOPY | Z | IQBN extras are subject to availability. • Auto/Manual • Auto/Manual • Auto/Manual For full details including prices, vehicle • ABS • ABS • ABS availability and options, please visit • Dual Airbags • Dual Airbags • Dual Airbags • Radio/CD • Radio/CD • Radio/CD www.avis.com.au, call 1800 141 000 • Power Steering • Power Steering • Power Steering or contact your nearest Avis location. Tray: Tray: Tray: 1.5m (L), 1.5m (W), 2.3m (L), 1.8m (W) 1.8m (L), 1.8m (W) 0.9m (H) lockable canopy VANS & BUSES DELIVERY VAN | C | IKAD 12 SEATER BUS | W | GVAD LARGE BUS | K | PVAD • Air Con • Air Con • Air Con • Cargo Barrier • Tow Bar • Tow Bar • Car Licence • Car Licence • LR Licence Specs: 5m3 2.9m (L), 1.5m (W), Specs: 12 People Specs: 1.1m (wheelarch) including Driver 20-25 People HITOP VAN | H | SKAD 4.2M MOVING VAN | F | FKAD 6.4M MOVING VAN | S | PKAD 7.3M VAN | V | PQMR • Air Con • Air Con • Air Con • Air Con • Power Steering • Ramp/Lift • Ramp/Lift • Ramp/Lift • Car Licence • Car Licence • MR Licence • MR Licence Specs: 3.7m (L), 1.75m (W), Specs: Specs: Specs: 19m3, 4.2m (L), 34m3, 6.4m (L), 42m3, 7.3m (L), 1.9m (H), between 2.1m (W), 2.1m (H), 2.3m (W), 2.3m (H), 2.4m (W), 2.4m (H), wheel arch 1.35m (L) up to 3 pallets up to 10 pallets up to 12 pallets *Minimum specs. -

AAA SA Meeting Minutes

MINUTES SOUTH AUSTRALIAN AAA DIVISION MEETING AND AGM Stamford Grand Adelaide, Moseley Square, Glenelg 25 & 26 August 2016 ATTENDEES PRESENT: Adam Branford (Mount Gambier Airport), Ian Fritsch (Mount Gambier Airport), George Gomez Moss (Jacobs), Alan Braggs (Jacobs), Cr Julie Low (Mayor, District Council of Lower Eyre Peninsula), Barrie Rogers (Airport Manager District Council of Lower Eyre Peninsula), Ken Stratton (Port Pirie Regional Council), Peter Francis (Aerodrome Design), Bill Chapman (Mildura Airport), Laura McColl (ADB Safegate), Shane Saal (Port Augusta City Council), Heidi Yates (District Council of Ceduna), Howard Aspey (Whyalla City Council), Damon Barrett (OTS), James Michie (District of Coober Pedy), Phil Van Poorten (District of Coober Pedy), Cliff Anderson (Fulton Hogan), David Blackwell (Adelaide Airport), Gerard Killick (Fulton Hogan), Oliver Georgelin (Smiths Detection), Martin Chlupac (Airport Lighting Specialists), Bridget Conroy (Rehbein Consulting), Ben Hargreaves (Rehbein Consulting), David West (Kangaroo Island Council), Andrew Boardman (Kangaroo Island Council), Phil Baker (Philbak Pty Ltd), Cr Scott Dornan (Action line marking), Allan Briggs (Briggs Communications), David Boots (Boral Asphalt), Eric Rossi (Boral Asphalt), Jim Parsons (Fulton Hogan), Nick Lane (AAA National), Leigh Robinson (Airport Equipment), Terry Buss (City of West Torrens), David Bendo (Downer Infrastructure), Erica Pasfield (Department of Planning, Transport and Infrastructure), Chris Van Laarhoven (BHP Billiton), Glen Crowhurst (BHS Billiton). -

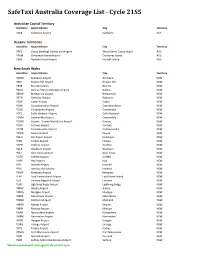

SafeTaxi Australia Coverage List - Cycle 21S5

SafeTaxi Australia Coverage List - Cycle 21S5

Columns: Identifier; Airport Name; City; Territory

Australian Capital Territory
YSCB; Canberra Airport; Canberra; ACT

Oceanic Territories
YPCC; Cocos (Keeling) Islands Intl Airport; West Island, Cocos Island; AUS
YPXM; Christmas Island Airport; Christmas Island; AUS
YSNF; Norfolk Island Airport; Norfolk Island; AUS

New South Wales
YARM; Armidale Airport; Armidale; NSW
YBHI; Broken Hill Airport; Broken Hill; NSW
YBKE; Bourke Airport; Bourke; NSW
YBNA; Ballina / Byron Gateway Airport; Ballina; NSW
YBRW; Brewarrina Airport; Brewarrina; NSW
YBTH; Bathurst Airport; Bathurst; NSW
YCBA; Cobar Airport; Cobar; NSW
YCBB; Coonabarabran Airport; Coonabarabran; NSW
YCDO; Condobolin Airport; Condobolin; NSW
YCFS; Coffs Harbour Airport; Coffs Harbour; NSW
YCNM; Coonamble Airport; Coonamble; NSW
YCOM; Cooma - Snowy Mountains Airport; Cooma; NSW
YCOR; Corowa Airport; Corowa; NSW
YCTM; Cootamundra Airport; Cootamundra; NSW
YCWR; Cowra Airport; Cowra; NSW
YDLQ; Deniliquin Airport; Deniliquin; NSW
YFBS; Forbes Airport; Forbes; NSW
YGFN; Grafton Airport; Grafton; NSW
YGLB; Goulburn Airport; Goulburn; NSW
YGLI; Glen Innes Airport; Glen Innes; NSW
YGTH; Griffith Airport; Griffith; NSW
YHAY; Hay Airport; Hay; NSW
YIVL; Inverell Airport; Inverell; NSW
YIVO; Ivanhoe Aerodrome; Ivanhoe; NSW
YKMP; Kempsey Airport; Kempsey; NSW
YLHI; Lord Howe Island Airport; Lord Howe Island; NSW
YLIS; Lismore Regional Airport; Lismore; NSW
YLRD; Lightning Ridge Airport; Lightning Ridge; NSW
YMAY; Albury Airport; Albury; NSW
YMDG; Mudgee Airport; Mudgee; NSW
YMER; Merimbula