COLOR- and PIECE-BLIND CHESS 1. Impressing Humans What Better

Recommended publications

1999/6 Layout

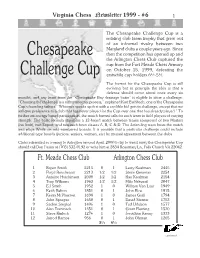

Virginia Chess Newsletter 1999 - #6 The Chesapeake Challenge Cup is a rotating club team trophy that grew out of an informal rivalry between two Maryland clubs a couple of years ago. Since then the competition has opened up, and the Arlington Chess Club captured the cup from the Fort Meade Chess Armory on October 15, 1999, defeating the erstwhile cup holders 6½-5½. The format for the Chesapeake Cup is still evolving, but in principle the idea is that a defense should occur about once every six months, and any team from the “Chesapeake Bay drainage basin” is eligible to issue a challenge. “Choosing the challenger is a rather informal process,” explained Kurt Eschbach, one of the Chesapeake Cup's founding fathers. “Whoever speaks up first with a credible bid gets to challenge, except that we will give preference to a club that has never played for the Cup over one that has already played.” To further encourage broad participation, the match format calls for each team to field players of varying strength. The basic formula stipulates a 12-board match between teams composed of two Masters (no limit), two Experts, and two each from classes A, B, C & D. The defending team hosts the match and plays White on odd-numbered boards. It is possible that a particular challenge could include additional types of boards (juniors, seniors, women, etc.) by mutual agreement between the clubs. Clubs interested in coming to Arlington around April, 2000 to try to wrest away the Chesapeake Cup should call Dan Fuson at (703) 532-0192 or write him at 2834 Rosemary Ln, Falls Church VA 22042.

Kotov LEADS INTERZONAL SOVIET PLAYERS an INVESTMENT in CHESS Position No

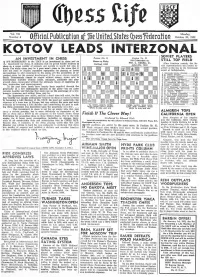

Vol. VII, Number 4 Official Publication of the United States Chess Federation Monday, October 20, 1952 KOTOV LEADS INTERZONAL, SOVIET PLAYERS STILL TOP FIELD After fourteen rounds, the Soviet representatives still crowd together at the top in the Interzonal event at Saltsjobaden. 1. Alexander Kotov (Russia) 12-1 [...] 6. Gideon Stahlberg (Sweden) 8½-5½ [...] Position No. 91 Position No. 92 Euwe vs. Flohr, Carlsbad, 1932 AN INVESTMENT IN CHESS LIFE MEMBERSHIP in the USCF is an investment in chess and an investment for chess. It indicates that its proud holder believes in chess as a cause worthy of support, not merely in words but also in deeds. For while chess may be a poor man's game in the sense that it does not need or require expensive equipment for playing or lavish surroundings to add enjoyment to the game, yet the promotion of organized chess for the general development of the game always requires funds. Tournaments cannot be staged without money, teams sent to international matches without funds, collegiate, scholastic and playground chess encouraged without the adequate means of supplying advice, instruction and encouragement. In the past these funds have largely been supplied through the generosity of a few enthusiastic patrons of the game-but no game

Interview by Susanne Schuricht with IEPE B.T. Rubingh

Interview by Susanne Schuricht with IEPE B.T. Rubingh, Berlin 2005 (long version / English) SU: How was chessboxing born? Where did the idea come from? IEPE: Chessboxing was born in 2002; I was looking for new ideas after finishing my work called "Miracle of Berlin". In Amsterdam I met Luis, a.k.a. "Luis the Lawyer". We were hanging out in a bar and talking, and we found out that both of us had started boxing a year earlier and both of us had been playing chess for many years. The first idea we had was: Let's have a boxing match. Then I came up with the chessboxing idea from the comic "Froid équateur" by ENKI BILAL, which is set in 2096 and sees inhabitants of a ravaged planet competing in various games, including chessboxing. SU: Had any of your projects been inspired by a comic before? IEPE: Yes, a lot of them. My father owned a nice comic collection. I always had some of the pictures in mind. The project "Jokers performances" (joker.iepe.net) was inspired by the comic strip "Face de lune" by Jodorowsky & Boucq. Another source of inspiration was an essay out of the book "Beyond The Brillo Box" written by the art critic Arthur C. Danto. SU: How does it work? Could you explain to us the rules of chessboxing? IEPE: In the first place we had no idea what it should be like and which rules the match should have. We had long discussions with members of the Dutch chess and boxing federations in order to develop the idea.

Altogether Now in My Decades of Playing Sub-Optimal Chess, I Have

Altogether now In my decades of playing sub-optimal chess, I have been given several pieces of advice about how best to play simultaneous chess. I have faced several grandmasters over the board in simuls and tried to adopt these tips, but with very little success. In fact, no success. One suggestion was to tactically muddy the waters. The theory is that if you play a closed positional game, the GM (or whoever is giving the simul) will easily overcome you in the end with their superior technique. On the other hand, although they are, of course, much better than you tactically, if they have 20-odd other boards to focus on, effectively playing their moves at a rate akin to blitz, there is a chance they might slip up and give you some winning chances when faced with a messy position. This is fine in theory and may work well for those players stronger than myself who are adept at tactics, but the record of my games shows a whopping nil points for me with this approach. In fact, I have found the opposite to be true. I am pleased to report that I have achieved a couple of draws from playing a completely blocked, stagnant position. The key here has been to try to make the games last sufficiently long so that in the end the GM kindly offers you a draw in a desperate attempt to get his last bus home. Although loathsome, this is when you want as many of those horrible creatures who play on when they are a rook and a bishop down with no compensation (or similar) to keep on playing against the GM.

2001 07 En Passant

En Passant, Journal of the Pittsburgh Chess Club July 2001 Vol 57 No 4 5604 Solway Street Suite 209 Pittsburgh PA 15217-1270 412-421-1881 Chess Journalists of America Award Best Club Bulletin, 2000 http://trfn.clpgh.org/orgs/pcc/ Club Telephone: 412-421-1881 Hours: Wednesday 1 - 10 PM; Saturday Noon - 10:30 PM Editor: Bobby Dudley, 107 Crosstree Road, Moon Township PA 15108-2607 412-262-2138 or 412-262-4079 Interim Editor: Thomas Martinak, 320 N Neville St Apt 22 “I swear, Bob, part of me wants to go to the World Series with you, but another part wants to analyze game 19 of the Spassky-Fischer match.” to PCC members. Re-entry: $15. 3-day schedule: Reg ends Fri 6:30pm, Rds Fri 7, Sat 12:30 - 5:30, Sun 10 - 3. 2-day schedule: Reg Sat 9:30-9:45am, 1st Round 10am, then merges with 3-day. Bye: 1-5, rds 4 & 5 must commit before rd 2. HR: $31-41, Univ. of Pittsburgh Main Towers dorms 412-648-1206. Info: 412-681-7590. Ent: Tom Martinak. July 17. PCC Executive Committee Meeting. Pittsburgh Chess Club. 6pm. July 17 - August 14. 10th Wild Card Open. 5-SS. Pittsburgh Chess Club. 2 sections: Championship. TL: 30/90, SD/60. EF: $28 postmarked by 7/9, $38 at site, $2 discount to PCC members. $$ (540 b/27): 140-100-90-80-70-60. Booster, open to U1600. TL: Game/60. EF: $14 postmarked by 7/9, $19 at site, $1 discount to PCC members. Trophies to 1st & 2nd, Ribbons to 3rd.

PNWCC FIDE Open – Olympiad Gold

https://www.pnwchesscenter.org Pacific Northwest Chess Center 12020 113th Ave NE #C-200, Kirkland, WA 98034 PNWCC FIDE Open – Olympiad Gold Jan 18-21, 2019 Description A 3-section, USCF and FIDE rated 7-round Swiss tournament with time control of 40/90, SD 30 with 30-second increment from move one, featuring two Chess Olympiad Champion team players from two generations and countries. Featured Players GM Bu, Xiangzhi • World’s currently 27th ranked chess player with FIDE Elo 2726 (“Super GM”) • 2018 43rd Chess Olympiad Champion (Team China, Batumi, Georgia) • 2017 Chess World Cup Round 4 (Eliminated World Champion GM Magnus Carlsen in Round 3) • 2015 World Team Chess Champion (Team China, Tsaghkadzor, Armenia) • 6th Youngest Chess Grand Master in human history (13 years, 10 months, 13 days) GM Tarjan, James • 2017 Beat former World Champion GM Vladimir Kramnik in Isle of Man Chess Tournament Round 3 • Played for Team USA at five straight Chess Olympiads from 1974-1982 • 1976 22nd Chess Olympiad Champion (Team USA, Haifa, Israel) • Competed in several US Championships during the 1970s and 1980s, with a best result of clear second in 1978 GM Bu, Xiangzhi Bio – Bu was born in 1985 in Qingdao, a famous seaside city in China, and started chess training at age 6, inspired by his compatriot GM Xie Jun’s Women’s World Champion victory over GM Maya Chiburdanidze in 1991. A few years later Bu easily won the Chinese junior championship and went on to achieve success in the international arena: he won 3rd place in the U12 World Youth Championship in 1997 and 1st place in the U14 World Youth Championship in 1998.

1 Forward Search, Pattern Recognition and Visualization in Expert Chess: A

Provided by Brunel University Research Archive Forward search, pattern recognition and visualization in expert chess: A reply to Chabris and Hearst (2003) Fernand Gobet School of Psychology University of Nottingham Address for correspondence: Fernand Gobet School of Psychology University of Nottingham Nottingham NG7 2RD United Kingdom + 44 (115) 951 5402 (phone) + 44 (115) 951 5324 (fax) After September 30th, 2003: Department of Human Sciences Brunel University Uxbridge Middlesex, UB8 3PH United Kingdom Abstract Chabris and Hearst (2003) produce new data on the question of the respective role of pattern recognition and forward search in expert behaviour. They argue that their data show that search is more important than claimed by Chase and Simon (1973). They also note that the role of mental imagery has been neglected in expertise research and propose that theories of expertise should integrate pattern recognition, search, and mental imagery. In this commentary, I show that their results are not clear-cut and can also be taken as supporting the predominant role of pattern recognition. Previous theories such as the chunking theory (Chase & Simon, 1973) and the template theory (Gobet & Simon, 1996a), as well as a computer model (SEARCH; Gobet, 1997) have already integrated mechanisms of pattern recognition, forward search and mental imagery. Methods for addressing the respective roles of pattern recognition and search are proposed. Keywords Decision making, expertise, imagery, pattern recognition, problem solving, search Introduction The respective roles of pattern recognition and search in expert problem solving have been an important topic of research in cognitive science.

Historical WASHINGTON CHESS LETTER Recaps

Historical WASHINGTON CHESS LETTER recaps (in the pages of Washington Chess Letter and Northwest Chess) by Russell Miller 1948-1988 at ten-year intervals December 1948 (WCL) From the Dec. 1958 WASHINGTON CHESS LETTER by R. R. Merk T. Patrick Corbett contributed to the December 1948 issue of WCL. As Omar said eight hundred years ago: “Tis all chequer board of nights and days Where Destiny with men for pieces plays Hither and thither moves and mates and slays And one by one, back in the closet lays!” The Northwest Washington 6 round Swiss tournament was announced for Jan 29th & 30th at Everett. The Seattle City tournament on Feb. 26th & 27th at Seattle Chess Club limited to residents of King County. The US Open Champion, Weaver Adams gave two interesting exhibitions at The Seattle Chess Club and a simultaneous exhibition at the Seattle Y.M.C.A. George Rehberg resigned his position as Feature Editor on the WCL and as secretary of the Kitsap Club. He was succeeded by Jack Nourse as secretary of the Kitsap Club. It was announced that Dick Allen would contribute a regular column to WCL beginning with the next issue. Whidbey Island team won a tight match from the Seattle Y.M.C.A. by a score of 6.5 to 3.5. Jack Finnegan won an all expense paid trip to the Rose Bowl game in Pasadena on New Year’s Day. He represented the Seattle P.I. as a special reporter. Jack at that time was quite prominent in Puget Sound chess circles.

October, 2007

YOUR COLORADO STATE CHESS ASSOCIATION’S COLORADO CHESS INFORMANT Oct 2007 Volume 34 Number 4 $3.00 On the web: http://www.colorado-chess.com “How to Play Chess Like an Animal”, written by Brian Wall and Anthea Carson Inside This Issue Reports (pg(s)): Colorado Open 3; Jackson’s Trip to Nationals 11; CSCA Membership Meeting Minutes 22; Front Range League Report 27. Crosstables: Colorado Open 3; Membership Meeting Open 10; Pike’s Peak Open 14; Pueblo Open 25; Boulder Invitational/Chess Festival 26. Games: The King (of chess) and I 6; Membership Meeting Open Games 12; Pike’s Peak Games 15; The $116.67 Endgame 20; Snow White 24. Departments: CSCA Info. 2; Humor 27; Club Directory 28; Colorado Tour Update 29; Tournament announcements 30. Features: “How to Play Chess Like an Animal” 8; Cutting Off the King 9; “How to Beat Granddad at Checkers” 11; Shipp’s Log 18. COLORADO STATE CHESS ASSOCIATION The COLORADO STATE CHESS ASSOCIATION, INC, is a Sec. 501 (C) (3) tax-exempt, non-profit educational corporation formed to promote chess in Colorado. Contributions are tax-deductible. Treasurer: Richard Buchanan 844B Prospect Place Manitou Springs, CO 80829 (719) 685-1984 Members at Large: Todd Bardwick (303) 770-6696 The Passed Pawn CO Chess Informant Editor Randy Reynolds Greetings Chess Friends, Another Colorado Open and CSCA membership meeting are in the history books, and

Yearbook.Indb

PETER ZHDANOV Yearbook of Chess Wisdom Cover designer Piotr Pielach Typesetting Piotr Pielach ‹www.i-press.pl› First edition 2015 by Chess Evolution Yearbook of Chess Wisdom Copyright © 2015 Chess Evolution All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without prior permission of the publisher. ISBN 978-83-937009-7-4 All sales or enquiries should be directed to Chess Evolution ul. Smutna 5a, 32-005 Niepolomice, Poland website: www.chess-evolution.com Printed in Poland by Drukarnia Pionier, 31–983 Krakow, ul. Igolomska 12 To my father Vladimir Zhdanov for teaching me how to play chess and to my mother Tamara Zhdanova for encouraging my passion for the game. PREFACE A critical-minded author always questions his own writing and tries to predict whether it will be illuminating and useful for the readers. What makes this book special? First of all, I have always had an inquisitive mind and an insatiable desire for accumulating, generating and sharing knowledge. This work is a product of having carefully read a few hundred remarkable chess books and a few thousand worthy non-chess volumes. However, it is by no means a mere compilation of ideas, facts and recommendations. Most of the eye-opening tips in this manuscript come from my reflections on discussions with some of the world’s best chess players and coaches. This is why the book is titled Yearbook of Chess Wisdom: it is composed of 366 self-sufficient columns, each of which is dedicated to a certain topic.

Dressed for Success How London Is Becoming the Chess Capital of the World

www.chess.co.uk Volume 76 No.2 May 2011 £3.95 UK $9.95 Canada Dressed for success: How London is becoming the chess capital of the world. Drunken Knights Vs Wood Green: The strongest ever English League match! Chess and Football. Tournament Reports: Amber (Blindfold + Rapid), 2011 European Championships, 4NCL. Contents Editorial: Malcolm Pein on the latest developments in chess 4. European Individual Championship, Aix les Bains: Vladimir Potkin headed a stellar field of GMs in Aix but the English competitors - professional and amateur - had their moments. 7. Problem Album: Colin Russ presents Werner Speckmann compositions 11. Melody Amber: The Amber light changed to red in Monte Carlo - Levon Aronian had the honour of the ‘last tango in Monte Carlo’. 12. 4NCL British Team League: Andrew Greet reports on the fourth weekend in Daventry, with all the contenders in their final pools. Some superb chess. 18. RAC Centenary Celebration: The Royal Automobile Club celebrated its 100th birthday in style, with ten GMs taking on 100 opponents in palatial Pall Mall. Chess Magazine is published monthly. Founding Editor: B.H. Wood, OBE. M.Sc † Editor: Jimmy Adams Acting Editor: John Saunders Executive Editor: Malcolm Pein Subscription Rates: United Kingdom: 1 year (12 issues) £44.95, 2 year (24 issues) £79.95, 3 year (36 issues) £109.95. Europe: 1 year (12 issues) £54.95, 2 year (24 issues) £99.95, 3 year (36 issues) £149.95. USA & Canada: 1 year (12 issues) $90, 2 year (24 issues) $170, 3 year (36 issues) $250. Rest of World (Airmail): 1 year (12 issues) £64.95, 2 year (24 issues) £119.95, 3 year (36 issues) £170.

Mar-Apr 2007

MARCH-APRIL 2007 IN THIS ISSUE MIDWEST CLASS ANNOTATIONS FROM IM ANGELO YOUNG THE COACH'S CORNER WITH VINCE HART FM ALBERT CHOW WEIGHS IN ON “TOILET GATE” THE ROAD WARRIOR AND MUCH MORE ILLINOIS CHESS BULLETIN Illinois Chess Bulletin Contents Page 2 Table of Contents e-ICB http://ilchess.org/e.htm ICA Supporters Life Patron Members Helen Warren James Warren Todd Barre Features Century Club Patron Members Michael Aaron Kevin Bachler Bill Brock Lawrence Cohen Vladimir Djordjevic Sometimes a Draw is OK ..... 6 William Dwyer In Memory of Victor George by Vince Hart Thomas Fineberg Thomas Friske Samuel Naylor IV James Novotny A Master’s Notes on “Toilet Gate” ... 12 Daniel Pradt Randall Ryner Frederick W Schmidt, Jr. Pradip Sethi Games from the ICCA Individual . ...... 8 Scott Silverman Bill Smythe Kurt W Stein Phillip Wong Gold Card Patron Members Todd Barre Clyde Blanke Jim Brontsos Departments Phil Bossaers Aaron Chen Chess-Now Ltd. David Cook Joseph Delay John Dueker Editor’s Desk .......................... 4 Fred Gruenberg David Heis Vincent Hart Steven Klink Richard Lang Mark Marovitch Games from IM Young ............. 10 Mark Nibbelin Alex Pehas Joseph Splinter Michael Sweig James Tanaka Robert Widing Road Warrior ..................................... 18 Patron Members ICA Calendar ...................................... 22 Bacil Alexy Adwar Dominic Amodei Roy Benedek Roger Birkeland Jack Bishop Foster L Boone, Jr. Dennis Bourgerie Robert J Carlton Mike Cronin Tom Duncan Brian Dupuis Charles Fenner Gregory Fischer Shizuko Fukuhara Fulk Alan Gasiecki David