Programmable Aperture Camera Using LCoS

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Depth of Field PDF Only

Depth of Field for Digital Images. Robin D. Myers, Better Light, Inc.

In the days before digital images, before the advent of roll film, photography was accomplished with photosensitive emulsions spread on glass plates. After processing and drying the glass negative, it was contact printed onto photosensitive paper to produce the final print. The size of the final print was the same size as the negative. During this period some of the foundational work into the science of photography was performed. One of the concepts developed was the circle of confusion.

Contact prints are usually small enough that they are normally viewed at a distance of approximately 250 millimeters (about 10 inches). At this distance the human eye can resolve a detail that occupies an angle of about 1 arc minute. The eye cannot see a difference between a blurred circle and a sharp-edged circle that just fills this small angle at this viewing distance. The diameter of this circle is called the circle of confusion. Converting the diameter of this circle into a size measurement, we get about 0.1 millimeters. If we assume a standard print size of 8 by 10 inches (about 200 mm by 250 mm) and divide this by the circle of confusion, then an 8x10 print would represent about 2000x2500 smallest discernible points. If these points are equated to their equivalent in digital pixels, then the resolution of an 8x10 print would be about 2000x2500 pixels, or about 250 pixels per inch (100 pixels per centimeter). The circle of confusion used for 4x5 film has traditionally been that of a contact print viewed at the standard 250 mm viewing distance.
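The arithmetic in this excerpt can be checked with a short sketch. The function names and default arguments below are illustrative assumptions, not taken from the source. Note that the exact geometric value for 1 arc minute at 250 mm comes out near 0.07 mm, which the excerpt rounds to "about 0.1 millimeters"; the point count uses the rounded figure, as the excerpt does.

```python
import math

def blur_circle_diameter_mm(viewing_distance_mm=250.0, resolution_arcmin=1.0):
    """Diameter of a circle that subtends the given angle at the viewing distance."""
    angle_rad = math.radians(resolution_arcmin / 60.0)
    return viewing_distance_mm * math.tan(angle_rad)

def discernible_points(print_size_mm, circle_of_confusion_mm):
    """Number of smallest discernible points along each side of a print."""
    return tuple(round(side / circle_of_confusion_mm) for side in print_size_mm)

# Exact geometric diameter for 1 arc minute at 250 mm (about 0.07 mm).
exact_mm = blur_circle_diameter_mm()

# The excerpt's rounded circle of confusion (0.1 mm) on a 200 mm x 250 mm print.
points = discernible_points((200.0, 250.0), 0.1)

# Pixels per inch along the 8-inch side, and the per-centimeter equivalent.
ppi = points[0] / 8
pixels_per_cm = ppi / 2.54

print(f"exact diameter: {exact_mm:.4f} mm")
print(f"points: {points[0]} x {points[1]}")
print(f"{ppi:.0f} pixels per inch, {pixels_per_cm:.1f} pixels per centimeter")
```

The per-centimeter figure comes out slightly under 100, consistent with the excerpt's "about 100 pixels per centimeter".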

Depth of Focus (DOF)

Erect Image: An image in which the orientations of left, right, top, bottom and moving directions are the same as those of a workpiece on the workstage.

Depth of Focus (DOF), unit: mm. Also known as ‘depth of field’, this is the distance (measured in the direction of the optical axis) between the two planes which define the limits of acceptable image sharpness when the microscope is focused on an object. As the numerical aperture (NA) increases, the depth of focus becomes shallower, as shown by the expression below:

$$\mathrm{DOF} = \frac{\lambda}{2\,(\mathrm{NA})^2}$$

λ = 0.55 µm is often used as the reference wavelength.

Example: For an M Plan Apo 100X lens (NA = 0.7), the depth of focus of this objective is

$$\frac{0.55\ \mu\mathrm{m}}{2 \times 0.7^2} \approx 0.6\ \mu\mathrm{m}$$

Field number (FN), real field of view, and monitor display magnification, unit: mm. The observation range of the sample surface is determined by the diameter of the eyepiece’s field stop. The value of this diameter in millimeters is called the field number (FN). In contrast, the real field of view is the range on the workpiece surface when actually magnified and observed with the objective lens. The real field of view can be calculated with the following formula:

(1) The range of the workpiece that can be observed with the microscope (diameter)

$$\text{Real field of view} = \frac{\text{FN of eyepiece}}{\ldots}$$

Bright-field Illumination and Dark-field Illumination: In brightfield illumination a full cone of light is focused by the objective on the specimen surface. This is the normal mode of viewing with an optical microscope. With darkfield illumination, the inner area of the light cone is blocked so that the surface is only illuminated by light from an oblique angle.
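As a quick check of the depth-of-focus expression in this excerpt, here is a minimal sketch. The function name and its default wavelength argument are assumptions for illustration; the formula, the 0.55 µm reference wavelength, and the NA = 0.7 example come from the excerpt.

```python
def depth_of_focus_um(numerical_aperture, wavelength_um=0.55):
    """Depth of focus in micrometers: wavelength / (2 * NA^2)."""
    if numerical_aperture <= 0:
        raise ValueError("numerical aperture must be positive")
    return wavelength_um / (2.0 * numerical_aperture ** 2)

# The excerpt's example objective: M Plan Apo 100X, NA = 0.7.
dof_100x = depth_of_focus_um(0.7)
print(f"DOF at NA 0.7: {dof_100x:.2f} um")

# A lower NA gives a deeper focus, as the excerpt states.
dof_low_na = depth_of_focus_um(0.28)
print(f"DOF at NA 0.28: {dof_low_na:.2f} um")
```

The NA = 0.7 case evaluates to roughly 0.56 µm, which the excerpt rounds to 0.6 µm. The second call uses an arbitrary lower NA, not one from the source, only to show the inverse-square trend.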

A Guide to Smartphone Astrophotography National Aeronautics and Space Administration

National Aeronautics and Space Administration. A Guide to Smartphone Astrophotography. Dr. Sten Odenwald, NASA Space Science Education Consortium, Goddard Space Flight Center, Greenbelt, Maryland.

Cover designs and editing by Abbey Interrante. Cover illustrations, front: Aurora (Elizabeth Macdonald), moon (Spencer Collins), star trails (Donald Noor), Orion nebula (Christian Harris), solar eclipse (Christopher Jones), Milky Way (Shun-Chia Yang), satellite streaks (Stanislav Kaniansky), sunspot (Michael Seeboerger-Weichselbaum), sun dogs (Billy Heather). Back: Milky Way (Gabriel Clark). Two front cover designs are provided with this book. To conserve toner, begin document printing with the second cover. This product is supported by NASA under cooperative agreement number NNH15ZDA004C.

Table of Contents: Introduction; How to use this book; 1.0 Light Pollution; 2.0 Cameras

Samyang T-S 24mm f/3.5 ED AS

GEAR ON TEST: Samyang T-S 24mm f/3.5 ED AS UMC. As the appeal of tilt-shift lenses continues to broaden, Samyang has unveiled a 24mm perspective-control optic in a range of popular fittings – and at a price that’s considerably lower than rivals from Nikon and Canon. Words: Terry Hope.

Traditionally, tilt-shift lenses have been seen as specialist products, aimed at architectural photographers wanting to correct converging verticals and product photographers seeking to maximise depth-of-field. The high price of such lenses reflects the low numbers sold, as well as the precision nature of their design and construction. But despite this, the tilt-shift lens seems to be undergoing something of a resurgence in popularity. This increasing appeal isn’t because photographers are shooting more architecture or box shots. It’s more down to the popularity of miniaturisation effects in landscapes, where such a tiny part of the frame is in focus that it appears as though you are looking at a scale model, rather than the real thing. It’s an effect that many hobbyist DSLRs, CSCs and compacts can generate digitally, and it’s straightforward to create in Photoshop too, but these digital recreations are just approximations. To get the real thing you’ll need to shoot with a tilt-shift lens. Up until now that would cost you over £1500, and when you consider the number of assignments you might shoot in a year using a tilt-shift lens, that’s not great value for money.

The optical quality of the lens is very good, and is testament to the reputation that Samyang is rapidly gaining in the optics market.

Things are set to change, though, because there’s a new kid on the block in the shape of the Samyang T-S 24mm f/3.5 ED AS UMC, which has a street price of less

Backlighting

Challenge #1 - Capture Light Bokeh

Have you seen those beautiful little circles of colour and light in the background of photos? It’s called Bokeh and it comes from the Japanese word “boke” meaning blur. It’s so pretty and not only does it make a gorgeous backdrop for portraits, but it can be the subject in its own right too! It’s also one of the most fun aspects of learning photography, being able to capture your own lovely bokeh! And that’s what our first challenge is all about, Capturing Light Bokeh! And remember - just as I said in this video, the purpose of these challenges is NOT to take perfect photos the first time you try something new… it’s about looking for light, trying new techniques, and exploring your creativity to help you build your skills and motivate and inspire you. So let’s have fun & I can’t wait to see your photos! Copyright 2017 - www.clicklovegrow.com

How to Achieve Light Bokeh

Light Bokeh is created when light reflects off, or through, a background, and is then captured with a wide open aperture of your lens. As light reflects differently off flat surfaces, you’re not likely to see this effect when using a plain wall, for example. Instead, you’ll see it when your background has a little texture, such as light reflecting off leaves in foliage of a garden, or wet grass… or when light is broken up when streaming through trees.

Looking for Light

One of the key take-aways for this challenge is to start paying attention to light & how you can capture it in photos… both to add interest and feeling to your portraits, and as a creative subject in its own right.

To Photographing the Planets, Stars, Nebulae, & Galaxies

Astrophotography Primer: Your FREE Guide to photographing the planets, stars, nebulae, & galaxies. Akira Fujii.

Everyone loves to look at pictures of the universe beyond our planet; Astronomy Picture of the Day (apod.nasa.gov) is one of the most popular websites ever. And many people have probably wondered what it would take to capture photos like that with their own cameras. The good news is that astrophotography can be incredibly easy and inexpensive. Even point-and-shoot cameras and cell phones can capture breathtaking skyscapes, as long as you pick appropriate subjects. On the other hand, astrophotography can also be incredibly demanding. Close-ups of tiny, faint nebulae, and galaxies require expensive equipment and lots of time, patience, and skill. Between those extremes, there’s a huge amount that you can do with a digital SLR or a simple webcam. The key to astrophotography is to have realistic expectations, and to pick subjects that are appropriate to your equipment, and vice versa.

To help you do that, we’ve collected four articles from the 2010 issue of SkyWatch, Sky & Telescope’s annual magazine. Every issue of SkyWatch includes a how-to guide to astrophotography and visual observing as well as a summary of the year’s best astronomical events. You can order the latest issue at SkyandTelescope.com/skywatch. In the last analysis, astrophotography is an art form. It requires the same skills as regular photography: visualization, planning, framing, experimentation, and a bit of luck.

Aperture Digital Photography Fundamentals

Aperture: Digital Photography Fundamentals

Apple Computer, Inc. © 2005 Apple Computer, Inc. All rights reserved. No part of this publication may be reproduced or transmitted for commercial purposes, such as selling copies of this publication or for providing paid for support services. Every effort has been made to ensure that the information in this manual is accurate. Apple is not responsible for printing or clerical errors. The Apple logo is a trademark of Apple Computer, Inc., registered in the U.S. and other countries. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute trademark infringement and unfair competition in violation of federal and state laws. Apple, the Apple logo, Apple Cinema Display and ColorSync are trademarks of Apple Computer, Inc., registered in the U.S. and other countries. Aperture is a trademark of Apple Computer, Inc.

Contents

Preface: An Introduction to Digital Photography Fundamentals

Chapter 1: How Digital Cameras Capture Images. Types of Digital Cameras; Digital Single-Lens Reflex (DSLR); Digital Rangefinder; Camera Components and Concepts; Lens; Understanding Lens Multiplication with DSLRs; Understanding Digital Zoom; Aperture; Understanding Lens Speed; Shutter; Using Reciprocity to Compose Your Image; Digital Image Sensor; Memory Card; External Flash; Understanding RAW, JPEG, and TIFF; RAW; Why Shoot RAW Files?; JPEG; TIFF; Shooting Tips; Reducing Camera Shake; Minimizing Red-Eye in Your Photos; Reducing Digital Noise

Chapter 2: How Digital Images Are Displayed. The Human Eye’s Subjective View of Color; Understanding How the Eye Sees Light and Color; Sources of Light; The Color Temperature of Light; How White Balance Establishes Color Temperature; Measuring the Intensity of Light; Bracketing the Exposure of an Image; Understanding How a Digital Image Is Displayed; Additive vs.

Shift and Tilt Bellows System

SHIFT & TILT BELLOWS SYSTEM. Instruction Manual as of: May 1995

ALL ARTWORK, PICTURES AND TEXTS ARE COVERED BY OUR COPYRIGHT. THEY MUST NOT BE COPIED FOR REPRODUCTION (E.G. ON CD-ROM DISKS OR INTERNET-SITES) OR USED IN THEIR ENTIRE FORM OR IN EXCERPTS WITHOUT OUR PREVIOUS WRITTEN AGREEMENT. IF YOU ARE DOWNLOADING PDF-FILES FROM OUR INTERNET HOMEPAGE FOR YOUR PERSONAL USE, MAKE SURE TO CHECK FOR UPDATED VERSIONS. WE CANNOT TAKE ANY LIABILITY WHATSOEVER FOR DOWNLOADED FILES, AS TECHNICAL DATA ARE SUBJECT TO CHANGE WITHOUT NOTICE.

[Figure: labeled diagram of the bellows system. Labels: lock for lens rotation, mark for lens rotation, lens rotation, scale for tilting, lock for tilting, scale for shifting, index pin, shifting, tilting, support rod clamp, scale for swinging, lock for focus, swinging, focus-knob, adapter for 15 mm support rods.]

1. Place scale in object plane
2. Align arrow with left edge of the frame
3. Magnification ratio and the corresponding exposure increase can be read off at the right edge of the frame

Note: the magnification scale refers to projection aperture 1:1.85 (width = 21 mm / 0.825").

[Scale: exposure increase in stops against magnification ratio, 4:1, 2:1, 1:1, 1:2, 1:3, 1:4, 1:5, 1:6, 1:7, 1:8; align with left edge of the frame.]

Shift & Tilt Bellows System

1. Safety Specifications

Warnings: Danger of injury or equipment damage possible!

General Safety Specifications: Due to the flexible bellows design, adjustments are possible which surpass the technical possibilities of the attached lens.

Introduction to Aperture in Digital Photography Helpful

9/7/2015. Introduction to Aperture in Digital Photography - Digital Photography School. A Post By: Darren Rowse

[Image: Tempest by Sheldon Evans on 500px]

Over the last couple of weeks I’ve been writing a series of posts on elements that digital photographers need to learn about in order to get out of Auto mode and learn how to manually set the exposure of their shots. I’ve largely focussed upon three elements of the ‘exposure triangle‘ – ISO, Shutter Speed and Aperture. I’ve previously written about the first two and today would like to turn our attention to Aperture.

Before I start with the explanations let me say this. If you can master aperture you put into your grasp real creative control over your camera. In my opinion – aperture is where a lot of the magic happens in photography and as we’ll see below, changes in it can mean the difference between one dimensional and multi dimensional shots.

What is Aperture?

Put most simply – Aperture is ‘the opening in the lens.’ When you hit the shutter release button of your camera a hole opens up that allows your camera’s image sensor to catch a glimpse of the scene you’re wanting to capture.

The F-Word in Optics

The F-word in Optics

The F-number was first found for fixing photographic exposure. But F/number is a far more flexible phenomenon for finding facts about optics. Douglas Goodman finds fertile field for fixes for frequent confusion for F/#.

The F-word of optics is the F-number,* also known as F/number, F/#, etc. The F-number is not a natural physical quantity, it is not defined and used consistently, and it is often used in ways that are both wrong and confusing. Moreover, there is no need to use the F-number, since what it purports to describe can be specified rigorously and unambiguously with direction cosines. The F-number is usually defined as follows:

$$\text{F-number} = \frac{\text{focal length}}{\text{entrance pupil diameter}} \tag{1}$$

A further identification is usually made with ray angle. Referring to Figure 1, a ray from an object at infinity that is parallel to the axis in object space intersects the front principal plane at height $y$ and presumably leaves the rear principal plane at the same height. If $f$ is the rear focal length, then the angle of the ray in image space, $\theta'$, is given by

$$\tan\theta' = \frac{y}{f} \tag{2}$$

In this equation, as in the others here, a sign convention for angles, heights, and magnifications is not rigorously enforced. Combining these equations gives:

$$\text{F-number} = \frac{1}{2\tan\theta'} \tag{3}$$

For an object not at infinity, by analogy, the F-number is often taken to be half of the inverse of the tangent of the marginal ray angle. However, Eq. 2 is wrong.
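To see how the conventional relations quoted in this excerpt fit together, here is a minimal numerical sketch. It assumes the entrance pupil diameter equals twice the marginal ray height y, which is the step needed to combine Eq. 1 and Eq. 2 into Eq. 3. The function names and the 50 mm / 25 mm example values are illustrative assumptions, not from the source. The excerpt goes on to state that Eq. 2 is wrong, so this only reproduces the textbook identification being criticized, not the author's corrected treatment.

```python
import math

def f_number_from_pupil(focal_length_mm, pupil_diameter_mm):
    """Eq. 1: focal length divided by entrance pupil diameter."""
    return focal_length_mm / pupil_diameter_mm

def f_number_from_ray_angle(theta_rad):
    """Eq. 3: one over twice the tangent of the image-space ray angle."""
    return 1.0 / (2.0 * math.tan(theta_rad))

# Hypothetical lens: 50 mm focal length, 25 mm entrance pupil diameter.
focal_length_mm = 50.0
pupil_diameter_mm = 25.0

# Assumed: the marginal ray height y is half the pupil diameter.
ray_height_mm = pupil_diameter_mm / 2.0

# Eq. 2 as quoted: tan(theta') = y / f.
theta = math.atan(ray_height_mm / focal_length_mm)

n_pupil = f_number_from_pupil(focal_length_mm, pupil_diameter_mm)
n_angle = f_number_from_ray_angle(theta)
print(f"Eq. 1: F/{n_pupil:.2f}, Eq. 3: F/{n_angle:.2f}")
```

Both routes give the same value here by construction, since Eq. 3 is just Eq. 1 rewritten with Eq. 2.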

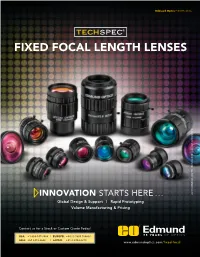

TECHSPEC® Fixed Focal Length Lenses

Edmund Optics® BROCHURE: FIXED FOCAL LENGTH LENSES. Copyright 2017 Edmund Optics, Inc. All rights reserved. 7/17

INNOVATION STARTS HERE. Global Design & Support | Rapid Prototyping | Volume Manufacturing & Pricing. Contact us for a Stock or Custom Quote Today! USA: +1-856-547-3488 | EUROPE: +44 (0) 1904 788600 | ASIA: +65 6273 6644 | JAPAN: +81-3-3944-6210. www.edmundoptics.com/fixed-focal

UC SERIES FIXED FOCAL LENGTH LENSES

• Ultra-Compact (UC) Form Factor
• 4K Resolution Designed for Small Pixels (≤2.2µm)
• Optimized for 1/2.5" Sensors and Supports up to 1/1.8"

Our ultra-compact TECHSPEC® UC Series Fixed Focal Length Lenses are designed to optimize performance, cost, and size without sacrificing quality or feel. Designed for pixels that are ≤2.2μm, these lenses provide high levels of resolution (>200 lp/mm) across the sensor and are compatible with all standard C-Mount cameras. TECHSPEC® UC Series Fixed Focal Length Lenses feature focus and iris adjustments, as well as recessed set screws, and are manufactured for use at typical machine vision working distances. While they are optimized for 1/2.5" sensors, many focal lengths will work on sensors up to 1/1.8". The TECHSPEC® UC Series lenses are an outstanding option for use on all smaller format camera sensors, along with both short and long working distance applications, making them ideal for inspection, factory automation, biomedical devices, and a broad range of other applications.

[Figure: dimensioned lens drawing. Labels: Focus Adjustment, Iris Locking Screw, Iris Adjustment, Focus Locking Screw, C-Mount.]

Dimensions (units: mm)

| Focal Length | A | B | C | D | Filter Thread |
| --- | --- | --- | --- | --- | --- |
| 4mm | 40 | 40.6 | 30 | 2.8 | M62.0 x 0.75 with required filter adapter #33-308 |
| 6mm | 36 | 40.9 | 30 | 3.2 | M34.0 x 0.5 |

Digital Cameras Lenses Lenses Lenses

Digital Cameras: Lenses, Aperture, Shutter Speed, Holding a Camera Steady, Shooting Modes, ISO

Lenses
• Light focused by the lens

Lenses
• Positive lens
  – Converging lens
  – Focuses image
• Negative lens
  – Diverging lens
  – Spreads image out
• Actual camera lens
  – Collection of lenses, called “elements”

Lenses
What is the difference in perspective between these two pictures?

Lenses
• Field of view depends on focal length of lens. For a simple lens the focal length is the distance from the center of the lens to the sensor.
• Wide Angle
  – Wide field of view
  – Most of subject reaches sensor
  – Expansive photo
• Telephoto
  – Narrow field of view
  – Small portion of subject reaches sensor
  – Magnified

Lenses
Most digital cameras have zoom lenses (wide angle to telephoto). Optical zoom is good. Digital zoom is not.

Zoom Lenses
• Simplified diagram below

Telephoto
Both of these pictures were taken with a telephoto lens. What features of telephoto shots do they illustrate?

Telephoto
• Compresses and flattens the image
• Isolates the subject

Wide Angle
Both of these pictures were shot with a wide angle lens. What features of wide angle shots do they illustrate?

Wide Angle
1. Expansive view
2. Easier to keep everything in focus
Perspective distortion

Focal Length and Lens Type
• 35 mm camera
  – Normal lens: focal length = 50 to 55 mm
  – Wide angle lens: 35 mm or smaller
  – Telephoto: 65 mm lens or larger
• Digital camera
  – Smaller sensor
  – Lenses more like telephoto

Digital Multiplier
• Digital multiplier converts focal length of digital camera

Aperture
• The diaphragm