A Guide to Smartphone Astrophotography National Aeronautics and Space Administration

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Improve Your Night Photography

IMPROVE YOUR NIGHT PHOTOGRAPHY By Jim Harmer SMASHWORDS EDITION * * * * * Improve Your Night Photography Copyright © 2010 Jim Harmer. All rights reserved THE SALES FROM THIS BOOK HELP TO SUPPORT THE AUTHOR AND HIS FAMILY. PLEASE CONSIDER GIVING THIS BOOK A 5-STAR REVIEW ON THE EBOOK STORE FROM WHICH IT WAS PURCHASED. * * * * * All rights reserved. Without limiting the rights under copyright reserved above, no part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form, or by any means (electronic, mechanical, photocopying, recording, or otherwise) without the prior written permission of both the copyright owner and the above publisher of this book. This is a work of non-fiction, but all examples of persons contained herein are fictional. Persons, places, brands, media, and incidents are either the product of the author's imagination or are used fictitiously. The trademarked and/or copyrighted status and trademark and/or copyright owners of various products referenced in this work of fiction, which have been used without permission, is acknowledged. The publication/use of these trademarks and/or copyrights isn’t authorized, associated, or sponsored by the owners. The copyright notice and legal disclaimer at the end of this work is fully incorporated herein. Smashwords Edition License Notes This ebook is licensed for your personal enjoyment only. This ebook may not be re-sold or given away to other people. If you would like to share this book with another person, please purchase an additional copy for each person you share it with. If you're reading this book and did not purchase it, or it was not purchased for your use only, then you should return to Smashwords.com and purchase your own copy. -

Noise and ISO CS 178, Spring 2014

Noise and ISO, CS 178, Spring 2014. Marc Levoy, Computer Science Department, Stanford University. © Marc Levoy. Outline ✦ examples of camera sensor noise • don’t confuse it with JPEG compression artifacts ✦ probability, mean, variance, signal-to-noise ratio (SNR) ✦ laundry list of noise sources • photon shot noise, dark current, hot pixels, fixed pattern noise, read noise ✦ SNR (again), dynamic range (DR), bits per pixel ✦ ISO ✦ denoising • by aligning and averaging multiple shots • by image processing (will be covered in a later lecture). Nokia N95 cell phone at dusk • 8×8 blocks are JPEG compression • unwanted sinusoidal patterns within each block are JPEG’s attempt to compress noisy pixels. Canon 5D II at dusk • ISO 6400 • f/4.0 • 1/13 sec • RAW w/o denoising. Canon 5D II at dusk • ISO 6400 • f/4.0 • 1/13 sec. Photon shot noise ✦ the number of photons arriving during an exposure varies from exposure to exposure and from pixel to pixel, even if the scene is completely uniform ✦ this number is governed by the Poisson distribution. Poisson distribution ✦ expresses the probability that a certain number of events will occur during an interval of time ✦ applicable to events that occur • with a known average rate, and • independently of the time since the last event ✦ if on average λ events occur in an interval of time, the probability p that k events occur instead is $p(k;\lambda) = \frac{\lambda^{k} e^{-\lambda}}{k!}$ (probability density function). Mean and variance ✦ the mean of a probability density function p(x) is $\mu = \int x\,p(x)\,dx$ ✦ the variance of a probability density function p(x) is $\sigma^{2} = \int (x-\mu)^{2}\,p(x)\,dx$ ✦ the mean and variance of the Poisson distribution are $\mu = \lambda$ and $\sigma^{2} = \lambda$ ✦ the standard deviation is $\sigma = \sqrt{\lambda}$. Deviation grows slower than the average.
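The Poisson relations in the excerpt above can be checked numerically. The following is a minimal Python sketch, not part of the lecture: the function names, the pixel count, and the use of Knuth's multiplication method for sampling are all assumptions made for illustration. It simulates photon counts on a uniform patch and compares the sample mean and variance with λ, so that the signal-to-noise ratio (mean divided by standard deviation) should come out near √λ.

```python
import math
import random


def poisson_pmf(k, lam):
    """Probability of exactly k events when lam events occur on average."""
    return lam ** k * math.exp(-lam) / math.factorial(k)


def sample_poisson(lam, rng):
    """Draw one Poisson sample with Knuth's multiplication method.

    Suitable for moderate lam only (exp(-lam) must not underflow).
    """
    limit = math.exp(-lam)
    k = 0
    p = 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def shot_noise_stats(lam, n_pixels=20000, seed=0):
    """Simulate photon counts for n_pixels pixels of a uniform scene.

    Returns (sample mean, sample variance, SNR = mean / std deviation).
    """
    rng = random.Random(seed)
    counts = [sample_poisson(lam, rng) for _ in range(n_pixels)]
    mean = sum(counts) / n_pixels
    var = sum((c - mean) ** 2 for c in counts) / n_pixels
    return mean, var, mean / math.sqrt(var)


if __name__ == "__main__":
    for lam in (4.0, 25.0, 100.0):
        mean, var, snr = shot_noise_stats(lam)
        print(f"lambda={lam:6.1f} mean={mean:7.2f} var={var:7.2f} "
              f"SNR={snr:5.2f} sqrt(lambda)={math.sqrt(lam):5.2f}")
```

Because the standard deviation grows only as √λ, quadrupling the average photon count should roughly double the SNR in this simulation.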

News, Information, Rumors, Opinions, Etc

http://www.physics.miami.edu/~chris/srchmrk_nws.html ● Miami-Dade/Broward/Palm Beach News ❍ Miami Herald Online Sun Sentinel Palm Beach Post ❍ Miami ABC, Ch 10 Miami NBC, Ch 6 ❍ Miami CBS, Ch 4 Miami Fox, WSVN Ch 7 ● Local Government, Schools, Universities, Culture ❍ Miami Dade County Government ❍ Village of Pinecrest ❍ Miami Dade County Public Schools ❍ ❍ University of Miami UM Arts & Sciences ❍ e-Veritas Univ of Miami Faculty and Staff "news" ❍ The Hurricane online University of Miami Student Newspaper ❍ Tropic Culture Miami ❍ Culture Shock Miami ● Local Traffic ❍ Traffic Conditions in Miami from SmartTraveler. ❍ Traffic Conditions in Florida from FHP. ❍ Traffic Conditions in Miami from MSN Autos. ❍ Yahoo Traffic for Miami. ❍ Road/Highway Construction in Florida from Florida DOT. ❍ WSVN's (Fox, local Channel 7) live Traffic conditions in Miami via RealPlayer. News, Information, Rumors, Opinions, Etc. ● Science News ❍ EurekAlert! ❍ New York Times Science/Health ❍ BBC Science/Technology ❍ Popular Science ❍ Space.com ❍ CNN Space ❍ ABC News Science/Technology ❍ CBS News Sci/Tech ❍ LA Times Science ❍ Scientific American ❍ Science News ❍ MIT Technology Review ❍ New Scientist ❍ Physorg.com ❍ PhysicsToday.org ❍ Sky and Telescope News ❍ ENN - Environmental News Network ● Technology/Computer News/Rumors/Opinions ❍ Google Tech/Sci News or Yahoo Tech News or Google Top Stories ❍ ArsTechnica Wired ❍ SlashDot Digg DoggDot.us ❍ reddit digglicious.com Technorati ❍ del.ic.ious furl.net ❍ New York Times Technology San Jose Mercury News Technology Washington -

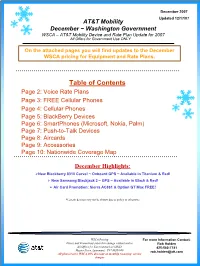

No Slide Title

December 2007 Updated 12/17/07 AT&T Mobility December ~ Washington Government WSCA – AT&T Mobility Device and Rate Plan Update for 2007 All Offers for Government Use ONLY On the attached pages you will find updates to the December WSCA pricing for Equipment and Rate Plans. Table of Contents Page 2: Voice Rate Plans Page 3: FREE Cellular Phones Page 4: Cellular Phones Page 5: BlackBerry Devices Page 6: SmartPhones (Microsoft, Nokia, Palm) Page 7: Push-to-Talk Devices Page 8: Aircards Page 9: Accessories Page 10: Nationwide Coverage Map December Highlights: ¾New Blackberry 8310 Curve! ~ Onboard GPS ~ Available in Titanium & Red! ¾ New Samsung Blackjack 2 – GPS ~ Available in Black & Red! ¾ Air Card Promotion: Sierra AC881 & Option GT Max FREE! *Certain devices may not be shown due to policy or otherwise WSCA Pricing. For more Information Contact: Prices and Promotions subject to change without notice Rob Holden All Offers for Government Use ONLY 425-580-7741 Master Price Agreement: T07-MST-069 [email protected] All plans receive WSCA 20% discount on monthly recurring service charges December 2007 December 2007 Updated 12/17/07 AT&T Mobility Oregon Government WSCA All plans receive 20% additional discount off of monthly recurring charges! AT&T Mobility Calling Plans REGIONAL Plan NATION Plans (Free Roaming and Long Distance Nationwide) Monthly Fee $9.99 (Rate Code ODNBRDS11) $39.99 $59.99 $79.99 $99.99 $149.99 Included mins 0 450 900 1,350 2,000 4,000 5000 N & W, Unlimited Nights & Weekends, Unlimited Mobile to 1000 Mobile to Mobile Unlim -

Estimation and Correction of the Distortion in Forensic Image Due to Rotation of the Photo Camera

Master Thesis Electrical Engineering with emphasis on Signal Processing February 2018 Estimation and Correction of the Distortion in Forensic Image due to Rotation of the Photo Camera Sathwika Bavikadi Venkata Bharath Botta Department of Applied Signal Processing Blekinge Institute of Technology SE–371 79 Karlskrona, Sweden This thesis is submitted to the Department of Applied Signal Processing at Blekinge Institute of Technology in partial fulfillment of the requirements for the degree of Master of Science in Electrical Engineering with Emphasis on Signal Processing. Contact Information: Author(s): Sathwika Bavikadi E-mail: [email protected] Venkata Bharath Botta E-mail: [email protected] Supervisor: Irina Gertsovich University Examiner: Dr. Sven Johansson Department of Applied Signal Processing Internet: www.bth.se Blekinge Institute of Technology Phone: +46 455 38 50 00 SE–371 79 Karlskrona, Sweden Fax: +46 455 38 50 57 Abstract Images, unlike text, represent an effective and natural communication medium for humans, due to their immediacy and the ease of understanding the image content. Shape recognition and pattern recognition are among the most important tasks in image processing. Crime scene photographs should always be in focus and a ruler should always be present; this allows investigators to resize the image and accurately reconstruct the scene. Therefore, the camera must be on a grounded platform such as a tripod. Rotation of the camera around the camera center introduces distortion in the image, which must be minimized.

Ira Sprague Bowen Papers, 1940-1973

http://oac.cdlib.org/findaid/ark:/13030/tf2p300278 No online items Inventory of the Ira Sprague Bowen Papers, 1940-1973 Processed by Ronald S. Brashear; machine-readable finding aid created by Gabriela A. Montoya Manuscripts Department The Huntington Library 1151 Oxford Road San Marino, California 91108 Phone: (626) 405-2203 Fax: (626) 449-5720 Email: [email protected] URL: http://www.huntington.org/huntingtonlibrary.aspx?id=554 © 1998 The Huntington Library. All rights reserved. Observatories of the Carnegie Institution of Washington Collection Descriptive Summary Title: Ira Sprague Bowen Papers, Date (inclusive): 1940-1973 Creator: Bowen, Ira Sprague Extent: Approximately 29,000 pieces in 88 boxes Repository: The Huntington Library San Marino, California 91108 Language: English. Provenance Placed on permanent deposit in the Huntington Library by the Observatories of the Carnegie Institution of Washington Collection. This was done in 1989 as part of a letter of agreement (dated November 5, 1987) between the Huntington and the Carnegie Observatories. The papers have yet to be officially accessioned. Cataloging of the papers was completed in 1989 prior to their transfer to the Huntington.

BLUETOOTH SHUTTERBOSS User Manual THANK YOU for CHOOSING VELLO

BLUETOOTH SHUTTERBOSS User Manual THANK YOU FOR CHOOSING VELLO The Vello Bluetooth ShutterBoss Advanced Intervalometer represents the new generation of wireless triggering. Utilizing the power of Bluetooth technology, the Bluetooth ShutterBoss empowers the user of an Apple® iPhone®, iPad®, iPad mini™, or iPod touch® to wirelessly trigger their camera’s shutter. This makes the Bluetooth ShutterBoss ideal for eliminating vibrations during macro, close-up, and long exposure photography, as well as for taking images of hard to approach subjects, such as wildlife. The integrated intervalometer and 10 setting schedules allow you to trigger up to 9,999 shots during a period of almost a full day – 23 hours, 59 minutes, and 59 seconds. Capable of activating shutter exposures in multiple firing modes, the Bluetooth ShutterBoss is the future of wireless camera controls. FEATURES • Wireless Bluetooth communication with Apple iPhone, iPad, or iPod touch • Advanced intervalometer with up to 10 scheduling modes • Free app on the App Store℠ • Multiple shooting modes • Compact and easy to use • Ideal for advanced intervalometer photography, macro, close-up, and long exposures PRECAUTIONS • Please read and follow these instructions and keep this manual in a safe place. • Do not attempt to disassemble or perform any unauthorized modification. • Do not handle with wet hands or immerse in or expose to water or rain. Failure to observe this precaution could result in fire or electric shock. • Keep out of the reach of children. • Observe caution when handling batteries. Batteries may leak or explode if improperly handled. Use only the batteries listed in this manual. Make certain to align batteries with correct

Depth of Field PDF Only

Depth of Field for Digital Images Robin D. Myers Better Light, Inc. In the days before digital images, before the advent of roll film, photography was accomplished with photosensitive emulsions spread on glass plates. After processing and drying the glass negative, it was contact printed onto photosensitive paper to produce the final print. The size of the final print was the same size as the negative. During this period some of the foundational work into the science of photography was performed. One of the concepts developed was the circle of confusion. Contact prints are usually small enough that they are normally viewed at a distance of approximately 250 millimeters (about 10 inches). At this distance the human eye can resolve a detail that occupies an angle of about 1 arc minute. The eye cannot see a difference between a blurred circle and a sharp edged circle that just fills this small angle at this viewing distance. The diameter of this circle is called the circle of confusion. Converting the diameter of this circle into a size measurement, we get about 0.1 millimeters. If we assume a standard print size of 8 by 10 inches (about 200 mm by 250 mm) and divide this by the circle of confusion then an 8x10 print would represent about 2000x2500 smallest discernible points. If these points are equated to their equivalence in digital pixels, then the resolution of a 8x10 print would be about 2000x2500 pixels or about 250 pixels per inch (100 pixels per centimeter). The circle of confusion used for 4x5 film has traditionally been that of a contact print viewed at the standard 250 mm viewing distance. -
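The arithmetic in the depth-of-field excerpt above can be reproduced in a few lines. This is a small Python sketch under stated assumptions: the function names are hypothetical, and the 250 mm viewing distance, 1 arc minute acuity, and 0.1 mm rounded circle of confusion are the figures quoted in the excerpt. The exact small-angle result comes out somewhat below 0.1 mm, which the excerpt rounds up.

```python
import math


def circle_of_confusion_mm(viewing_distance_mm=250.0, acuity_arcmin=1.0):
    """Diameter of a circle subtending acuity_arcmin at the viewing distance."""
    angle_rad = math.radians(acuity_arcmin / 60.0)
    return 2.0 * viewing_distance_mm * math.tan(angle_rad / 2.0)


def discernible_points(width_mm, height_mm, coc_mm):
    """Number of smallest discernible points across a print of the given size."""
    return round(width_mm / coc_mm), round(height_mm / coc_mm)


if __name__ == "__main__":
    exact = circle_of_confusion_mm()
    print(f"exact circle of confusion: {exact:.4f} mm (excerpt rounds to 0.1 mm)")

    # 8 x 10 inch print, taken as about 200 mm x 250 mm, with the rounded value.
    across, down = discernible_points(200.0, 250.0, 0.1)
    print(f"points: {across} x {down}")
    print(f"pixels per inch: {down / 10:.0f}")
```

Using the unrounded diameter instead of 0.1 mm would give a higher point count, so the 250 pixels per inch figure is best read as a convenient approximation.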

Down and Dirty Camera Tricks Even for Your CELL PHONE

Down and Dirty Camera Tricks Even for Your CELL PHONE Educational Seminar GCSAA Presenter - John R. Johnson Creating Images That Count The often quoted Yogi said, “When you come to a Fork in the Road – Take it!” What he meant was . “When you have a Decision in Life – Make it.” Let’s Make Good Decisions with Our Cameras I Communicate With Images You’ve known me for Years. Good Photography Is How You Can Communicate This one is from the Media Moses Pointe – WA Which Is Better? Same Course, 100 yards away – Shot by a Pro Moses Pointe – WA Ten Tricks That Work . On Big Cameras Too. Note image to left is cell phone shot of me shooting in NM See, Even Pros Use Cell Phones So Let’s Get Started. I Have My EYE On YOU Photography Must-Haves Light Exposure Composition This is a Cell Phone Photograph #1 - Spectacular Light = Spectacular Photography Colbert Hills - KS Light from the side or slightly behind. Cell phones require tons of light, so be sure it is BRIGHT. Sunsets can’t hurt either. #2 – Make it Interesting Change your angle, go higher, go lower, look for the unusual. Resist the temptation to just stand and shoot. This is a Cell Phone Photograph Mt. Rainier Coming Home #2 – Make it Interesting Same trip, but I shot it from Space just before the Shuttle re-entry . OK, just kidding, but this is a real shot, on a flight so experiment and expand your vision. This is a Cell Phone Photograph #3 – Get Closer In This Case Lower Too Typically, the lens is wide angle, so things are too small, when you try to enlarge, they get blurry, so get closer to start. -

Making Your Own Astronomical Camera by Susan Kern and Don Mccarthy

www.astrosociety.org/uitc No. 50 - Spring 2000 © 2000, Astronomical Society of the Pacific, 390 Ashton Avenue, San Francisco, CA 94112. Making Your Own Astronomical Camera by Susan Kern and Don McCarthy An Education in Optics Dissect & Modify the Camera Loading the Film Turning the Camera Skyward Tracking the Sky Astronomy Camp for All Ages For More Information People are fascinated by the night sky. By patiently watching, one can observe many astronomical and atmospheric phenomena, yet the permanent recording of such phenomena usually belongs to serious amateur astronomers using moderately expensive, 35-mm cameras and to scientists using modern telescopic equipment. At the University of Arizona's Astronomy Camps, we dissect, modify, and reload disposed "One- Time Use" cameras to allow students to study engineering principles and to photograph the night sky. Elementary school students from Silverwood School in Washington state work with their modified One-Time Use cameras during Astronomy Camp. Photo courtesy of the authors. Today's disposable cameras are a marvel of technology, wonderfully suited to a variety of educational activities. Discarded plastic cameras are free from camera stores. Students from junior high through graduate school can benefit from analyzing the cameras' optics, mechanisms, electronics, light sources, manufacturing techniques, and economics. Some of these educational features were recently described by Gene Byrd and Mark Graham in their article in the Physics Teacher, "Camera and Telescope Free-for-All!" (1999, vol. 37, p. 547). Here we elaborate on the cameras' optical properties and show how to modify and reload one for astrophotography. An Education in Optics The "One-Time Use" cameras contain at least six interesting optical components. -

Night Sky Monitoring Program for the Southeast Utah Group, 2003

NIGHT SKY United States Department of MONITORING Interior National Park Service PROGRAM Southeast Utah Group SOUTHEAST UTAH GROUP Resource Management Division Arches National Park Canyonlands National Park Moab Hovenweep National Monument Utah 84532 Natural Bridges National Monument General Technical Report SEUG-001-2003 2003 January 2004 Charles Schelz / SEUG Biologist Angie Richman / SEUG Physical Science Technician EXECUTIVE SUMMARY NIGHT SKY MONITORING PROGRAM Southeast Utah Group Arches and Canyonlands National Parks Hovenweep and Natural Bridges National Monuments Project Funded by the National Park Service and Canyonlands Natural History Association This report lays the foundation for a Night Sky Monitoring Program in all the park units of the Southeast Utah Group (SEUG). Long-term monitoring of night sky light levels at the units of the SEUG of the National Park Service (NPS) was initiated in 2001 and has evolved steadily through 2003. The Resource Management Division of the Southeast Utah Group (SEUG) performs all night sky monitoring and is based at NPS headquarters in Moab, Utah. All protocols are established in conjunction with the NPS National Night Sky Monitoring Team. This SEUG Night Sky Monitoring Program report contains a detailed description of the methodology and results of night sky monitoring at the four park units of the SEUG. This report also proposes a three pronged resource protection approach to night sky light pollution issues in the SEUG and the surrounding region. Phase 1 is the establishment of methodologies and permanent night sky monitoring locations in each unit of the SEUG. Phase 2 will be a Light Pollution Management Plan for the park units of the SEUG. -

Technology, Media, and Telecommunications Predictions

Technology, Media, and Telecommunications Predictions 2019 Deloitte’s Technology, Media, and Telecommunications (TMT) group brings together one of the world’s largest pools of industry experts—respected for helping companies of all shapes and sizes thrive in a digital world. Deloitte’s TMT specialists can help companies take advantage of the ever- changing industry through a broad array of services designed to meet companies wherever they are, across the value chain and around the globe. Contact the authors for more information or read more on Deloitte.com. Technology, Media, and Telecommunications Predictions 2019 Contents Foreword | 2 5G: The new network arrives | 4 Artificial intelligence: From expert-only to everywhere | 14 Smart speakers: Growth at a discount | 24 Does TV sports have a future? Bet on it | 36 On your marks, get set, game! eSports and the shape of media in 2019 | 50 Radio: Revenue, reach, and resilience | 60 3D printing growth accelerates again | 70 China, by design: World-leading connectivity nurtures new digital business models | 78 China inside: Chinese semiconductors will power artificial intelligence | 86 Quantum computers: The next supercomputers, but not the next laptops | 96 Deloitte Australia key contacts | 108 1 Technology, Media, and Telecommunications Predictions 2019 Foreword Dear reader, Welcome to Deloitte Global’s Technology, Media, and Telecommunications Predictions for 2019. The theme this year is one of continuity—as evolution rather than stasis. Predictions has been published since 2001. Back in 2009 and 2010, we wrote about the launch of exciting new fourth-generation wireless networks called 4G (aka LTE). A decade later, we’re now making predic- tions about 5G networks that will be launching this year.