The F-Word in Optics


Recommended publications


Breaking Down the “Cosine Fourth Power Law”

By Ronian Siew, inopticalsolutions.com

Why are the corners of the field of view in the image captured by a camera lens usually darker than the center? For one thing, camera lenses by design often introduce “vignetting” into the image, which is the deliberate clipping of rays at the corners of the field of view in order to cut away excessive lens aberrations. But it is also known that corner areas in an image can get dark even without vignetting, due in part to the so-called “cosine fourth power law.” [1]

According to this “law,” when a lens projects the image of a uniform source onto a screen, in the absence of vignetting, the illumination flux density (i.e., the optical power per unit area) across the screen from the center to the edge varies according to the fourth power of the cosine of the angle between the optic axis and the oblique ray striking the screen. Actually, optical designers know this “law” does not apply generally to all lens conditions. [2-10] Fundamental principles of optical radiative flux transfer in lens systems allow one to tune the illumination distribution across the image by varying lens design characteristics. In this article, we take a tour into the fascinating physics governing the illumination of images in lens systems.

Relative Illumination In Lens Systems

In lens design, one characterizes the illumination distribution across the screen where the image resides in terms of a quantity known as the lens’ relative illumination: the ratio of the irradiance (i.e., the power per unit area) at any off-axis position of the image to the irradiance at the center of the image.
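The falloff described by the “cosine fourth power law” can be tabulated with a few lines of Python. This is only a sketch of the idealized “law” as stated in the excerpt (no vignetting, uniform source); the function name `cos4_relative_illumination` is a hypothetical helper chosen for illustration, and, as the excerpt notes, real lenses need not follow this curve.

```python
import math


def cos4_relative_illumination(field_angle_deg: float) -> float:
    """Relative illumination predicted by the idealized cos^4 falloff.

    field_angle_deg is the angle between the optic axis and the oblique
    ray striking the screen, in degrees. The result is the off-axis
    irradiance divided by the on-axis irradiance.
    """
    theta = math.radians(field_angle_deg)
    return math.cos(theta) ** 4


# Tabulate the predicted falloff for a few field angles.
for angle in (0, 10, 20, 30, 40):
    print(f"{angle:>2} deg: {cos4_relative_illumination(angle):.3f}")
```

Under this idealization the corner of a lens with a 30 degree half field would receive a little over half the on-axis irradiance.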

Geometrical Irradiance Changes in a Symmetric Optical System

Dmitry Reshidko and Jose Sasian, “Geometrical irradiance changes in a symmetric optical system,” Opt. Eng. 56(1), 015104 (January 2017), doi: 10.1117/1.OE.56.1.015104.
University of Arizona, College of Optical Sciences, 1630 East University Boulevard, Tucson 85721, United States

Abstract. The concept of the aberration function is extended to define two functions that describe the light irradiance distribution at the exit pupil plane and at the image plane of an axially symmetric optical system. Similar to the wavefront aberration function, the irradiance function is expanded as a polynomial, where individual terms represent basic irradiance distribution patterns. Conservation of flux in optical imaging systems is used to derive the specific relation between the irradiance coefficients and wavefront aberration coefficients. It is shown that the coefficients of the irradiance functions can be expressed in terms of wavefront aberration coefficients and first-order system quantities. The theoretical results (these are irradiance coefficient formulas) are in agreement with real ray tracing. © 2017 Society of Photo-Optical Instrumentation Engineers (SPIE) [DOI: 10.1117/1.OE.56.1.015104]

Keywords: aberration theory; wavefront aberrations; irradiance.
Paper 161674 received Oct. 26, 2016; accepted for publication Dec. 20, 2016; published online Jan. 23, 2017.

1 Introduction
... the normalized field $\vec{H}$ and aperture $\vec{\rho}$ vectors.

Panoramas Shoot with the Camera Positioned Vertically As This Will Give the Photo Merging Software More Wriggle-Room in Merging the Images

Panoramas

What is a Panorama?
A panoramic photo covers a larger field of view than a “normal” photograph. In general, if the aspect ratio is 2 to 1 or greater, it is classified as a panoramic photo. This sample is about 3 times wider than tall, an aspect ratio of 3 to 1. A panorama is not limited to horizontal shots; vertical images are also an option.

How is a Panorama Made?
Panoramic photos are created by taking a series of overlapping photos and merging them together using software.

Why Not Just Crop a Photo?
• Making a panorama by cropping deletes a lot of data from the image.
• That’s not a problem if you are just going to view it in a small format or at a low resolution.
• However, if you want to print the image in a large format, the loss of data will limit the size and quality that can be made.

Get a Really Wide Angle Lens?
• A wide-angle lens still may not be wide enough to capture the whole scene in a single shot. Sometimes you just can’t get back far enough.
• Photos taken with a wide-angle lens can exhibit undesirable lens distortion.
• Lens cost: an autofocus 14mm f/2.8 lens can set you back $1,800 plus.

What Lens to Use?
• A standard lens works very well for taking panoramic photos.
• You get minimal lens distortion, resulting in more realistic panoramic photos.
• Choose a lens, or a focal length on a zoom lens, of between 35mm and 80mm.

Introduction to CODE V: Optics

Introduction to CODE V Training: Day 1
“Optics 101”, Digital Camera Design Study, User Interface and Customization

3280 East Foothill Boulevard, Pasadena, California 91107 USA. (626) 795-9101, Fax (626) 795-0184, e-mail: [email protected], World Wide Web: http://www.opticalres.com
Copyright © 2009 Optical Research Associates

Section 1: Optics 101 (on a Budget)

Goals and “Not Goals”
• Goals:
  - Brief overview of basic imaging concepts
  - Introduce some lingo of lens designers
  - Provide resources for quick reference or further study
• Not Goals:
  - Derivation of equations
  - Explain all there is to know about optical design
  - Explain how CODE V works

Sign Conventions
• Distances: positive to right (t > 0; otherwise t < 0)
• Curvatures: positive if center of curvature lies to right of vertex (c = 1/r > 0; otherwise c = 1/r < 0)
• Angles: positive measured counterclockwise (θ > 0; otherwise θ < 0)
• Heights: positive above the axis

Light from Physics 102
• Light travels in straight lines (homogeneous media)
• Snell’s Law: n sin θ = n’ sin θ’
• Paraxial approximation:
  - Small angles: sin θ ~ tan θ ~ θ; and cos θ ~ 1
  - Optical surfaces represented by tangent plane at vertex
    • Ignore sag in computing ray height
    • Thickness is always center thickness
  - Power of a spherical refracting surface: 1/f = φ = (n’-n)*c
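The relations on the “Light from Physics 102” slide can be checked numerically. The sketch below is not CODE V code; it is a standalone Python illustration with hypothetical function names, comparing exact Snell refraction against the paraxial small-angle form and evaluating the surface power φ = (n’-n)*c.

```python
import math


def snell_refraction_angle(n1: float, n2: float, theta1_rad: float) -> float:
    """Exact refraction angle from n1*sin(theta1) = n2*sin(theta2)."""
    s = n1 * math.sin(theta1_rad) / n2
    if abs(s) > 1.0:
        raise ValueError("total internal reflection: no refracted ray")
    return math.asin(s)


def paraxial_refraction_angle(n1: float, n2: float, theta1_rad: float) -> float:
    """Small-angle form of Snell's law, using sin(theta) ~ theta."""
    return n1 * theta1_rad / n2


def surface_power(n1: float, n2: float, curvature: float) -> float:
    """Power of a spherical refracting surface, phi = (n2 - n1) * c."""
    return (n2 - n1) * curvature


# Compare exact and paraxial refraction going from air into glass (n = 1.5).
for deg in (1, 5, 20):
    theta = math.radians(deg)
    exact = snell_refraction_angle(1.0, 1.5, theta)
    approx = paraxial_refraction_angle(1.0, 1.5, theta)
    print(f"{deg:>2} deg: exact {math.degrees(exact):.4f}, paraxial {math.degrees(approx):.4f}")

# A surface with r = 50 mm (c = 1/50 per mm) between air and n = 1.5 glass.
print(f"power = {surface_power(1.0, 1.5, 1 / 50):.4f} per mm")
```

The two angles agree closely at small incidence and drift apart as the angle grows, which is the regime where the paraxial approximation stops being adequate.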

Chapter 3 (Aberrations)

Chapter 3 Aberrations

3.1 Introduction

In Chap. 2 we discussed the image-forming characteristics of optical systems, but we limited our consideration to an infinitesimal threadlike region about the optical axis called the paraxial region. In this chapter we will consider, in general terms, the behavior of lenses with finite apertures and fields of view. It has been pointed out that well-corrected optical systems behave nearly according to the rules of paraxial imagery given in Chap. 2. This is another way of stating that a lens without aberrations forms an image of the size and in the location given by the equations for the paraxial or first-order region. We shall measure the aberrations by the amount by which rays miss the paraxial image point.

It can be seen that aberrations may be determined by calculating the location of the paraxial image of an object point and then tracing a large number of rays (by the exact trigonometrical ray-tracing equations of Chap. 10) to determine the amounts by which the rays depart from the paraxial image point. Stated this baldly, the mathematical determination of the aberrations of a lens which covered any reasonable field at a real aperture would seem a formidable task, involving an almost infinite amount of labor. However, by classifying the various types of image faults and by understanding the behavior of each type, the work of determining the aberrations of a lens system can be simplified greatly, since only a few rays need be traced to evaluate each aberration; thus the problem assumes more manageable proportions.
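The idea of measuring an aberration as the amount by which an exactly traced ray misses the paraxial image point can be illustrated on the simplest possible case. The sketch below is not the ray-tracing procedure of the book's Chap. 10; it assumes a single convex spherical surface between air and glass, an axial object at infinity, and rays parallel to the axis. All function names are hypothetical.

```python
import math


def exact_axial_crossing(h: float, radius: float, n_glass: float) -> float:
    """Distance from the surface vertex at which a ray crosses the axis.

    The ray travels parallel to the axis at height h (0 < h < radius) in
    air and is refracted exactly (Snell's law) at a convex spherical
    surface of the given radius into glass of index n_glass.
    """
    i = math.asin(h / radius)                    # angle of incidence
    i_prime = math.asin(math.sin(i) / n_glass)   # angle of refraction
    sag = radius - math.sqrt(radius**2 - h**2)   # surface depth at height h
    return sag + h / math.tan(i - i_prime)


def paraxial_focus(radius: float, n_glass: float) -> float:
    """Paraxial image distance for an object at infinity: n' * r / (n' - 1)."""
    return n_glass * radius / (n_glass - 1.0)


def longitudinal_aberration(h: float, radius: float, n_glass: float) -> float:
    """Amount by which the exact ray misses the paraxial focus along the axis."""
    return exact_axial_crossing(h, radius, n_glass) - paraxial_focus(radius, n_glass)


for h in (1.0, 5.0, 10.0):
    print(f"h = {h:4.1f} mm: miss = {longitudinal_aberration(h, 50.0, 1.5):+.4f} mm")
```

Rays farther from the axis cross it progressively short of the paraxial focus, so even this one-surface case shows why only a few well-chosen rays are needed to characterize the aberration.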

Section 10 Vignetting

OPTI-502 Optical Design and Instrumentation I, © Copyright 2019 John E. Greivenkamp

Section 10 Vignetting

Vignetting
The stop determines the size of the bundle of rays that propagates through the system for an on-axis object. As the object height increases, one of the other apertures in the system (such as a lens clear aperture) may limit part or all of the bundle of rays. This is known as vignetting.
[Figure: on-axis ray bundle passing the aperture and the stop; off-axis ray bundle with vignetted rays at the aperture.]

Ray Bundle – On-Axis
The ray bundle for an on-axis object is a rotationally-symmetric spindle made up of sections of right circular cones. Each cone section is defined by the pupil and the object or image point in that optical space. The individual cone sections match up at the surfaces and elements. At any z, the cross section of the bundle is circular, and the radius of the bundle is the marginal ray value. The ray bundle is centered on the optical axis.

Ray Bundle – Off Axis
For an off-axis object point, the ray bundle skews, and is comprised of sections of skew circular cones which are still defined by the pupil and object or image point in that optical space.

502-13 Magnifiers and Telescopes

OPTI-502 Optical Design and Instrumentation I, © Copyright 2019 John E. Greivenkamp

Section 13 Magnifiers and Telescopes

Visual Magnification
All optical systems that are used with the eye are characterized by a visual magnification or a visual magnifying power. While the details of the definitions of this quantity differ from instrument to instrument and for different applications, the underlying principle remains the same: How much bigger does an object appear to be when viewed through the instrument? The size change is measured as the change in angular subtense of the image produced by the instrument compared to the angular subtense of the object. The angular subtense of the object is measured when the object is placed at the optimum viewing condition.

Magnifiers
As an object is brought closer to the eye, the size of the image on the retina increases and the object appears larger. The largest image magnification possible with the unaided eye occurs when the object is placed at the near point of the eye, by convention 250 mm or 10 in from the eye. A magnifier is a single lens that provides an enlarged erect virtual image of a nearby object for visual observation. The object must be placed inside the front focal point of the magnifier.
[Figure: magnifier geometry, with labels f, h, u_M, F, z, s.]

The magnifying power MP is defined as (stop at the eye):

$$MP = \frac{\text{Angular size of the image (with lens)}}{\text{Angular size of the object at the near point}} = \frac{u_M}{u_U}, \qquad d_{NP} = 250\ \text{mm}$$

Magnifiers – Magnifying Power
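The magnifying power definition above can be evaluated for one limiting case. The sketch below assumes the object sits at the front focal point of the magnifier (so the image is at infinity and its angular size is h/f) and uses small-angle approximations; neither assumption comes from the excerpt, and the function name is hypothetical.

```python
NEAR_POINT_MM = 250.0  # conventional near point of the eye, d_NP


def magnifying_power(focal_length_mm: float, object_height_mm: float = 1.0) -> float:
    """MP = u_M / u_U for an object placed at the magnifier's front focal point.

    u_U is the angular size of the object seen unaided at the near point;
    u_M is the angular size of the image seen through the lens.
    Small angles are assumed, so each angle is approximated by its tangent.
    """
    u_unaided = object_height_mm / NEAR_POINT_MM
    u_magnifier = object_height_mm / focal_length_mm
    return u_magnifier / u_unaided


for f in (25.0, 50.0, 100.0):
    print(f"f = {f:5.1f} mm -> MP = {magnifying_power(f):.1f}")
```

The object height cancels, so under these assumptions the result depends only on the ratio of the near-point distance to the focal length.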

Depth of Field PDF Only

Depth of Field for Digital Images
Robin D. Myers, Better Light, Inc.

In the days before digital images, before the advent of roll film, photography was accomplished with photosensitive emulsions spread on glass plates. After processing and drying the glass negative, it was contact printed onto photosensitive paper to produce the final print. The size of the final print was the same size as the negative. During this period some of the foundational work into the science of photography was performed. One of the concepts developed was the circle of confusion.

Contact prints are usually small enough that they are normally viewed at a distance of approximately 250 millimeters (about 10 inches). At this distance the human eye can resolve a detail that occupies an angle of about 1 arc minute. The eye cannot see a difference between a blurred circle and a sharp-edged circle that just fills this small angle at this viewing distance. The diameter of this circle is called the circle of confusion. Converting the diameter of this circle into a size measurement, we get about 0.1 millimeters.

If we assume a standard print size of 8 by 10 inches (about 200 mm by 250 mm) and divide this by the circle of confusion, then an 8x10 print would represent about 2000x2500 smallest discernible points. If these points are equated to their equivalent in digital pixels, then the resolution of an 8x10 print would be about 2000x2500 pixels, or about 250 pixels per inch (100 pixels per centimeter). The circle of confusion used for 4x5 film has traditionally been that of a contact print viewed at the standard 250 mm viewing distance.
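The arithmetic in this excerpt can be reproduced in a few lines. The sketch below uses hypothetical function names; the 1 arc minute acuity, 250 mm viewing distance, and the rounded 0.1 mm circle of confusion are the figures quoted in the text. Note that the unrounded geometric value comes out somewhat below 0.1 mm, so the quoted pixel counts rest on the rounded figure.

```python
import math


def circle_of_confusion_mm(viewing_distance_mm: float = 250.0,
                           acuity_arcmin: float = 1.0) -> float:
    """Diameter subtending the given visual angle at the viewing distance."""
    angle_rad = math.radians(acuity_arcmin / 60.0)
    return viewing_distance_mm * math.tan(angle_rad)


def discernible_points(print_size_mm: float, coc_mm: float) -> int:
    """Number of circle-of-confusion diameters that fit along one print edge."""
    return round(print_size_mm / coc_mm)


geometric_coc = circle_of_confusion_mm()
print(f"geometric circle of confusion: {geometric_coc:.4f} mm")

rounded_coc = 0.1  # the rounded value used in the text
print(discernible_points(200, rounded_coc), "x", discernible_points(250, rounded_coc))
print(f"{25.4 / rounded_coc:.0f} points per inch")
```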

Depth of Focus (DOF)

Erect Image
An image in which the orientations of left, right, top, bottom and moving directions are the same as those of a workpiece on the workstage.

Depth of Focus (DOF) unit: mm
Also known as ‘depth of field’, this is the distance (measured in the direction of the optical axis) between the two planes which define the limits of acceptable image sharpness when the microscope is focused on an object. As the numerical aperture (NA) increases, the depth of focus becomes shallower, as shown by the expression below:

$$\mathrm{DOF} = \frac{\lambda}{2 \cdot (\mathrm{NA})^2}$$

λ = 0.55 µm is often used as the reference wavelength.

Example: For an M Plan Apo 100X lens (NA = 0.7), the depth of focus of this objective is

$$\frac{0.55\ \mu\mathrm{m}}{2 \times 0.7^2} = 0.6\ \mu\mathrm{m}$$

Field number (FN), real field of view, and monitor display magnification unit: mm
The observation range of the sample surface is determined by the diameter of the eyepiece’s field stop. The value of this diameter in millimeters is called the field number (FN). In contrast, the real field of view is the range on the workpiece surface when actually magnified and observed with the objective lens. The real field of view can be calculated with the following formula:
(1) The range of the workpiece that can be observed with the microscope (diameter)
Real field of view = FN of eyepiece / ...

Bright-field Illumination and Dark-field Illumination
In brightfield illumination a full cone of light is focused by the objective on the specimen surface. This is the normal mode of viewing with an optical microscope. With darkfield illumination, the inner area of the light cone is blocked so that the surface is only illuminated by light from an oblique angle.
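The depth of focus expression, DOF = λ / (2·NA²), is easy to evaluate for other objectives. The sketch below uses a hypothetical function name and defaults to the 0.55 µm reference wavelength mentioned in the excerpt; the NA values other than 0.7 are illustrative inputs, not figures from the source.

```python
def depth_of_focus_um(numerical_aperture: float, wavelength_um: float = 0.55) -> float:
    """Depth of focus in micrometers from DOF = wavelength / (2 * NA^2)."""
    return wavelength_um / (2.0 * numerical_aperture ** 2)


# The excerpt's example: NA = 0.7 at the 0.55 um reference wavelength.
print(f"NA 0.70: {depth_of_focus_um(0.7):.2f} um")

# Illustrative lower-NA values show how quickly the depth of focus grows.
for na in (0.14, 0.28, 0.42):
    print(f"NA {na:.2f}: {depth_of_focus_um(na):.2f} um")
```

Because NA enters squared, halving the numerical aperture makes the depth of focus four times deeper.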

Ground-Based Photographic Monitoring

United States Department of Agriculture, Forest Service, Pacific Northwest Research Station
General Technical Report PNW-GTR-503, May 2001

Ground-Based Photographic Monitoring
Frederick C. Hall

Author
Frederick C. Hall is senior plant ecologist, U.S. Department of Agriculture, Forest Service, Pacific Northwest Region, Natural Resources, P.O. Box 3623, Portland, Oregon 97208-3623. Paper prepared in cooperation with the Pacific Northwest Region.

Abstract
Hall, Frederick C. 2001. Ground-based photographic monitoring. Gen. Tech. Rep. PNW-GTR-503. Portland, OR: U.S. Department of Agriculture, Forest Service, Pacific Northwest Research Station. 340 p.

Land management professionals (foresters, wildlife biologists, range managers, and land managers such as ranchers and forest land owners) often have need to evaluate their management activities. Photographic monitoring is a fast, simple, and effective way to determine if changes made to an area have been successful. Ground-based photo monitoring means using photographs taken at a specific site to monitor conditions or change. It may be divided into two systems: (1) comparison photos, whereby a photograph is used to compare a known condition with field conditions to estimate some parameter of the field condition; and (2) repeat photographs, whereby several pictures are taken of the same tract of ground over time to detect change. Comparison systems deal with fuel loading, herbage utilization, and public reaction to scenery. Repeat photography is discussed in relation to landscape, remote, and site-specific systems. Critical attributes of repeat photography are (1) maps to find the sampling location and of the photo monitoring layout; (2) documentation of the monitoring system to include purpose, camera and film, weather, season, sampling technique, and equipment; and (3) precise replication of photographs.

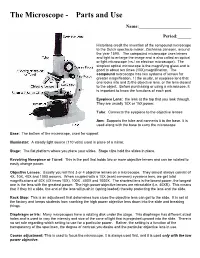

The Microscope Parts And

The Microscope Parts and Use

Historians credit the invention of the compound microscope to the Dutch spectacle maker Zacharias Janssen, around the year 1590. The compound microscope uses lenses and light to enlarge the image and is also called an optical or light microscope (vs. an electron microscope). The simplest optical microscope is the magnifying glass, which is good to about ten times (10X) magnification. The compound microscope has two systems of lenses for greater magnification: 1) the ocular, or eyepiece lens, that one looks into and 2) the objective lens, or the lens closest to the object. Before purchasing or using a microscope, it is important to know the functions of each part.

Eyepiece Lens: The lens at the top that you look through. It is usually 10X or 15X power.
Tube: Connects the eyepiece to the objective lenses.
Arm: Supports the tube and connects it to the base. It is used along with the base to carry the microscope.
Base: The bottom of the microscope, used for support.
Illuminator: A steady light source (110 volts) used in place of a mirror.
Stage: The flat platform where you place your slides. Stage clips hold the slides in place.
Revolving Nosepiece or Turret: The part that holds two or more objective lenses and can be rotated to easily change power.
Objective Lenses: Usually you will find 3 or 4 objective lenses on a microscope. They almost always consist of 4X, 10X, 40X and 100X powers. When coupled with a 10X (most common) eyepiece lens, we get total magnifications of 40X (4X times 10X), 100X, 400X and 1000X.
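The total magnifications listed for the objective lenses follow from multiplying the objective power by the eyepiece power. A minimal Python sketch, with a hypothetical function name, reproduces the figures for both eyepiece powers mentioned above:

```python
def total_magnification(objective_power: int, eyepiece_power: int = 10) -> int:
    """Total magnification of a compound microscope: objective times eyepiece."""
    return objective_power * eyepiece_power


OBJECTIVES = (4, 10, 40, 100)

# With the common 10X eyepiece.
print([total_magnification(p) for p in OBJECTIVES])

# With a 15X eyepiece, the other power mentioned in the text.
print([total_magnification(p, eyepiece_power=15) for p in OBJECTIVES])
```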

How Do the Lenses in a Microscope Work?

How do the lenses in a microscope work?

Compound Light Microscope: A compound light microscope uses light to transmit an image to your eye. “Compound” refers to the microscope having more than one lens. “Microscope” is the combination of two words: “micro,” meaning small, and “scope,” meaning view. Early microscopes, like Leeuwenhoek's, were called simple because they only had one lens. Simple scopes work like magnifying glasses that you have seen and/or used. These early microscopes were limited in the amount of magnification they could achieve, no matter how they were constructed. The creation of the compound microscope by the Janssens helped to advance the field of microbiology light years ahead of where it had been only a few years earlier. The Janssens added a second lens to magnify the image of the primary (or first) lens. Simple light microscopes of the past could magnify an object to 266X, as in the case of Leeuwenhoek's microscope. Modern compound light microscopes, under optimal conditions, can magnify an object from 1000X to 2000X (times) the specimen's original diameter.

"The Compound Light Microscope." The Compound Light Microscope. Web. 16 Feb. 2017. http://www.cas.miamioh.edu/mbi-ws/microscopes/compoundscope.html
Text is available under the Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license.

Now we will describe how a microscope works in somewhat more detail. The first lens of a microscope is the one closest to the object being examined and, for this reason, is called the objective.