Fast Fourier Color Constancy

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Rethinking Color Cameras

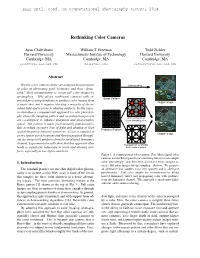

Rethinking Color Cameras Ayan Chakrabarti William T. Freeman Todd Zickler Harvard University Massachusetts Institute of Technology Harvard University Cambridge, MA Cambridge, MA Cambridge, MA [email protected] [email protected] [email protected] Abstract Digital color cameras make sub-sampled measurements of color at alternating pixel locations, and then “demo- saick” these measurements to create full color images by up-sampling. This allows traditional cameras with re- stricted processing hardware to produce color images from a single shot, but it requires blocking a majority of the in- cident light and is prone to aliasing artifacts. In this paper, we introduce a computational approach to color photogra- phy, where the sampling pattern and reconstruction process are co-designed to enhance sharpness and photographic speed. The pattern is made predominantly panchromatic, thus avoiding excessive loss of light and aliasing of high spatial-frequency intensity variations. Color is sampled at a very sparse set of locations and then propagated through- out the image with guidance from the un-aliased luminance channel. Experimental results show that this approach often leads to significant reductions in noise and aliasing arti- facts, especially in low-light conditions. Figure 1. A computational color camera. Top: Most digital color cameras use the Bayer pattern (or something like it) to sub-sample 1. Introduction color alternatingly; and then they demosaick these samples to create full-color images by up-sampling. Bottom: We propose The standard practice for one-shot digital color photog- an alternative that samples color very sparsely and is otherwise raphy is to include a color filter array in front of the sensor panchromatic. -

Spatial Filtering, Color Constancy, and the Color-Changing Dress Department of Psychology, American University, Erica L

Journal of Vision (2017) 17(3):7, 1–20 1 Spatial filtering, color constancy, and the color-changing dress Department of Psychology, American University, Erica L. Dixon Washington, DC, USA Department of Psychology and Department of Computer Arthur G. Shapiro Science, American University, Washington, DC, USA The color-changing dress is a 2015 Internet phenomenon divide among responders (as well as disagreement from in which the colors in a picture of a dress are reported as widely followed celebrity commentators) fueled a rapid blue-black by some observers and white-gold by others. spread of the photo across many online news outlets, The standard explanation is that observers make and the topic trended worldwide on Twitter under the different inferences about the lighting (is the dress in hashtag #theDress. The huge debate on the Internet shadow or bright yellow light?); based on these also sparked debate in the vision science community inferences, observers make a best guess about the about the implications of the stimulus with regard to reflectance of the dress. The assumption underlying this individual differences in color perception, which in turn explanation is that reflectance is the key to color led to a special issue of the Journal of Vision, for which constancy because reflectance alone remains invariant this article is written. under changes in lighting conditions. Here, we The predominant explanations in both scientific demonstrate an alternative type of invariance across journals (Gegenfurtner, Bloj, & Toscani, 2015; Lafer- illumination conditions: An object that appears to vary in Sousa, Hermann, & Conway, 2015; Winkler, Spill- color under blue, white, or yellow illumination does not change color in the high spatial frequency region. -

Color Constancy and Contextual Effects on Color Appearance

Chapter 6 Color Constancy and Contextual Effects on Color Appearance Maria Olkkonen and Vebjørn Ekroll Abstract Color is a useful cue to object properties such as object identity and state (e.g., edibility), and color information supports important communicative functions. Although the perceived color of objects is related to their physical surface properties, this relationship is not straightforward. The ambiguity in perceived color arises because the light entering the eyes contains information about both surface reflectance and prevailing illumination. The challenge of color constancy is to estimate surface reflectance from this mixed signal. In addition to illumination, the spatial context of an object may also affect its color appearance. In this chapter, we discuss how viewing context affects color percepts. We highlight some important results from previous research, and move on to discuss what could help us make further progress in the field. Some promising avenues for future research include using individual differences to help in theory development, and integrating more naturalistic scenes and tasks along with model comparison into color constancy and color appearance research. Keywords Color perception • Color constancy • Color appearance • Context • Psychophysics • Individual differences 6.1 Introduction Color is a useful cue to object properties such as object identity and state (e.g., edibility), and color information supports important communicative functions [1]. Although the perceived color of objects is related to their physical surface properties, M. Olkkonen, M.A. (Psych), Dr. rer. nat. (*) Department of Psychology, Science Laboratories, Durham University, South Road, Durham DH1 3LE, UK Institute of Behavioural Sciences, University of Helsinki, Siltavuorenpenger 1A, 00014 Helsinki, Finland e-mail: [email protected]; maria.olkkonen@helsinki.fi V. -

38 Color Constancy

38 Color Constancy DAVID H. BRAINARD AND ANA RADONJIC Vision is useful because it informs us about the physical provide a self-contained introduction, to highlight environment. In the case of colm~ two distinct functions some more recent results, and to outline what we see are generally emphasized (e.g., Jacobs, 1981; Mollon, as important challenges for the field. We focus on key 1989). First, color helps to segment objects from each concepts and avoid much in the way of technical devel other and the background. A canonical task for this opment. Other recent treatments complement the one function is locating fruit in foliage (Regan et al., 2001; provided here and provide a level of technical detail Sumner & Mollon, 2000). Second, color provides infm' beyond that introduced in this chapter (Brainard, 2009; mation about object properties (e.g., fresh fish versus Brainard & Maloney, 2011; Foster, 2011; Gilchrist, 2006; old fish; figure 38.1). Using color for this second func Kingdom, 2008; Shevell & Kingdom, 2008; Smithson, tion is enabled to the extent that perceived object color 2005; Stockman & Brainard, 2010). correlates well with object reflectance properties. Achieving such correlation is a nontrivial requirement, MEASURING CONSTANCY however, because the spectrum of the light reflected from an object confounds variation in object surface The study of constancy requires methods for measuring reflectance with variation in the illumination (figure it. The classic approach to such measurement is to 38.2; Brainard, Wandell, & Chichilnisky, 1993; Hurl assess the extent to which the color appearance of bert, 1998; Maloney, 1999). In particular, the spectrum objects varies as they are viewed under different illumi of the reflected light is the wavelength-by-wavelength nations. -

Color Blindness Fovea

Color Theory • Chapter 3b Perceiving Color • Color Constancy • Chapter 3 Perceiving Color Theory Color (so far) • Lens • Iris • Rods • Cones x3 • Fovea • Optic Nerve • Iodopsin & Rhodopsin • Chapter 3 Color Theory Perceiving Color (today) • Tri-Chromatic theory of color vision • Color Afterimage • Opponent Theory of color vision • Color Constancy • Monet’s series • Color Blindness Fovea • A tiny (1mm) area on the retina is covered with a dense collection of cones. • This region is where we see color distinctions best. Seeing Color • Still no proven model of how color perception works. • Cones contain light-sensitive pigments called Iodopsin. • Iodopsin changes when light hits it -- it is photo- sensitive. Light is effectively converted/translated into a nerve impulse…a signal to the brain. Trichromatic Theory & Opponent Theory • 19th c. Trichromatic Theory first proposed the idea of three types of cones. • Current theory -- Opponent Theory -- is that there are three types of iodopsin -- one that senses (or “sees”) red light, one that sees green, and one blue-violet. Each is “blind” to its complement. • We then combine the information from all three to perceive color. • We have mostly red- and green- sensitive cones – few blue-sensitive ones. • Diagram of suspected “wiring” of cones to ganglion Cones to Color (nerve) cells. • Light primaries are “read” individually, then results are combined. Cones to Color • Diagram of suspected trichromatic “wiring” of cones to ganglion (nerve) cells. • Light primaries are “read” individually, then results are combined. • http://www.handprint.com/HP /WCL/color1.html Opponent Processing • “As the diagram shows, the opponent processing pits the responses from one type of cone against the others. -

Chapter 7: Perceiving Color

Chapter 7: Perceiving Color -The physical dimensions of color -The psychological dimensions of color appearance (hue, saturation, brightness) -The relationship between the psychological and physical dimensions of color (Trichromacy Color opponency) - Other influences on color perception (color constancy, top-down effects) Sir Isaac Newton Newton’s Prism Experiment (1704) White light is composed of multiple colors Light Monochromatic light : one wavelength (like a laser) Physical parameters for monochromatic light 1. Wavelength 2. Intensity Heterochromatic light : many wavelengths (normal light sources) For heterochromatic light The spectral composition gives the intensity at each wavelength Monochromatic light Heterochromatic light Spectral composition of two common (heterochromatic) illuminants The spectral components of light entering the eye is the product of the illuminant and the surface reflectance of objects. Reflectance of some common surfaces and pigments 350 400 450 500 550 600 650 700 750 800 Surface reflectance of some common objects 350 400 450 500 550 600 650 700 750 800 Spectral composition of light entering the eye after being reflected from a surface = Spectral composition of the Reflectance of the surface illuminant X Consider a ripe orange illuminated with a bright monochromatic blue (420 nm) light. What color will the banana appear to be? a. bright orange b. dark/dim orange Blue (monochromatic) c. black d. dark/dim blue Relative amount of light of amount Relative Wavelength Spectral composition of light entering the -

Color Appearance Models Second Edition

Color Appearance Models Second Edition Mark D. Fairchild Munsell Color Science Laboratory Rochester Institute of Technology, USA Color Appearance Models Wiley–IS&T Series in Imaging Science and Technology Series Editor: Michael A. Kriss Formerly of the Eastman Kodak Research Laboratories and the University of Rochester The Reproduction of Colour (6th Edition) R. W. G. Hunt Color Appearance Models (2nd Edition) Mark D. Fairchild Published in Association with the Society for Imaging Science and Technology Color Appearance Models Second Edition Mark D. Fairchild Munsell Color Science Laboratory Rochester Institute of Technology, USA Copyright © 2005 John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England Telephone (+44) 1243 779777 This book was previously publisher by Pearson Education, Inc Email (for orders and customer service enquiries): [email protected] Visit our Home Page on www.wileyeurope.com or www.wiley.com All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except under the terms of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London W1T 4LP, UK, without the permission in writing of the Publisher. Requests to the Publisher should be addressed to the Permissions Department, John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England, or emailed to [email protected], or faxed to (+44) 1243 770571. This publication is designed to offer Authors the opportunity to publish accurate and authoritative information in regard to the subject matter covered. -

Naming Versus Matching in Color Constancy

Perception & Psychophysics 1991,50 (6), 591-602 Naming versus matching in color constancy JIMMY M. TROOST and CHARLES M. M. DE WEERT Nijmegen Institute for Cognition Research and Information Technology, University ofNijmegen Nijmegen, The Netherlands In this paper, a replication of the color-constancy study of Arend and Reeves (1986) is reported. and an alternative method is presented that can be used for the study of higher order aspects of color constancy, such as memory, familiarity, and perceptual organization. Besides a simulta neous presentation of standard and test illuminants, we also carried out an experiment in which the illuminants were presented successively. The results were similar to Arend and Reeves's; however, in the object-matching condition of the successive experiment, we found an over estimation, instead of an underestimation, of the illuminant component. Because the results of matching experiments are difficult to interpret, mainly due to their sensitivity to instruction effects, we introduced another type of color-constancy task. In this task, subjects simply named the color of a simulated patch. It was found that, by applying such a task, a reliable measure of the degree of identification of object color can be obtained. Color constancy is the phenomenon that the color ap Sensory explanations of color constancy are mechanis pearance of objects is invariant, notwithstanding varia tic; informational cues to the illuminant are not taken into tions in illumination. In real-life situations, variations in account. It is implicitly assumed that the visual system illumination occur very often. For example, the spectral does not even notice differences in illumination. -

Evolution, Development and Function of Vertebrate Cone Oil Droplets

REVIEW published: 08 December 2017 doi: 10.3389/fncir.2017.00097 Evolution, Development and Function of Vertebrate Cone Oil Droplets Matthew B. Toomey* and Joseph C. Corbo* Department of Pathology and Immunology, Washington University School of Medicine, St. Louis, MO, United States To distinguish colors, the nervous system must compare the activity of distinct subtypes of photoreceptors that are maximally sensitive to different portions of the light spectrum. In vertebrates, a variety of adaptations have arisen to refine the spectral sensitivity of cone photoreceptors and improve color vision. In this review article, we focus on one such adaptation, the oil droplet, a unique optical organelle found within the inner segment of cone photoreceptors of a diverse array of vertebrate species, from fish to mammals. These droplets, which consist of neutral lipids and carotenoid pigments, are interposed in the path of light through the photoreceptor and modify the intensity and spectrum of light reaching the photosensitive outer segment. In the course of evolution, the optical function of oil droplets has been fine-tuned through changes in carotenoid content. Species active in dim light reduce or eliminate carotenoids to enhance sensitivity, whereas species active in bright light precisely modulate carotenoid double bond conjugation and concentration among cone subtypes to optimize color discrimination and color constancy. Cone oil droplets have sparked the curiosity of vision scientists for more than a century. Accordingly, we begin by briefly reviewing the history of research on oil droplets. We then discuss what is known about the developmental origins Edited by: of oil droplets. Next, we describe recent advances in understanding the function of oil Vilaiwan M. -

Color Vision Theory; Vision, Low-Level Are Opposite to Each Other, Would Cancel

Color Vision (e.g., spatial frequency, orientation, motion, depth) which the stimulus differs perceptually from a purely within a local cortical region. With respect to color achromatic (i.e., white, gray, black) axis. The third vision per se, the primary processing involves separ- dimension is brightness or lightness. That our per- ating color and luminance information, and further ceptual space is three-dimensional reflects the basic separating changes due to the illuminant from those trichromacy of vision. due to visual objects, by lateral interactions over large A normal observer can describe the hue of any light regions. (disregarding surface characteristics) by using one or To separate luminance and color information, the more of only four color names (red, yellow, green, and outputs of Pc cells are combined in two different ways. blue). These so-called unique hues form two opponent When their outputs are summed in one way, the pairs, red–green and blue–yellow. Red and green luminance components to their responses sum and the normally cannot be seen in the same place at the same color components cancel. Summed in a different time; if unique red and unique green lights are added in combination, the color components sum and the appropriate proportions, the colors cancel and one luminance components cancel. Consider a striate sees a neutral gray. Orange can be seen as a mixture of cortex cell that combines inputs from one or more red and yellow, and purple as a mixture of red and j j Lo and Mo cells in a region. The cortical cell would blue, but there is no color seen as a red–green mixture respond to luminance variations but not to color (or as a blue–yellow mixture). -

Chapter 3 Color Constancy

Chapter 3 Color Constancy In the previous chapter I gave an introduction to fundamental theories of color vision. These theories referred to stimuli that were viewed in isolation. A cen- tral issue within this framework was the assignment of primary color codes to isolated light stimuli. Hitherto we discussed the color appearance of such stimuli only marginally. In this chapter we will extend the examined stimulus to com- plex spatial patterns that contain more than one light. We will see that the color appearance of light stimuli in this context is not determined by corresponding primary color codes. Rather, the color appearance of a given light depends on temporal and spatial characteristics of the stimulus. We can study the influence of the context on the color appearance of a stimulus when we present two differ- ent lights either sequentially or spatially (as a center-surround configuration) to the observer. The corresponding phenomena are called successive contrast and simultaneous contrast. In this chapter we will focus on a related phenomenon that refers to color appearance of fixed objects under varying lighting conditions. This phenomenon is known as color constancy. Loosely speaking, color constancy is described as the ability of visual systems to assign stable color appearance to a fixed object under changing illuminant conditions. 3.1 The Problem of Color Constancy 3.1.1 Introduction Probably the most important function of human color vision is object recognition. In this sense, the color appearance of an object should persist with changes of the illumination. We can experience this phenomenon every day but we rarely notice it. -

Modeling Human Color Perception Under Extended Luminance Levels

Modeling Human Color Perception under Extended Luminance Levels Min H. Kim Tim Weyrich Jan Kautz University College London 100 100 400 90 90 360 80 80 320 70 70 280 60 60 240 50 50 200 40 40 160 30 30 120 20 20 80 10 10 40 HDR Input Lightness (J) 0 Colorfulness (M) 0 Hue (H) 0 Appearance Reproduction Figure 1: We take an high-dynamic-range image and predict perceptual lightness, colorfulness, and hue attributes. Based on these attributes, we can reproduce the perceptual appearance of the original image on different media, such as a low-dynamic-range display. Abstract experiments [Luo et al. 1993] were carried out with luminances mainly under about 690 cd/m2 — except for only four color sam- Display technology is advancing quickly with peak luminance ples between 1000 and 1280 cd/m2. Perceptual color attributes of increasing significantly, enabling high-dynamic-range displays. recent color appearance models [CIE 1998; Moroney et al. 2002] However, perceptual color appearance under extended luminance are mainly derived from this data set and, as a result, are geared levels has not been studied, mainly due to the unavailability of psy- towards low luminance levels. However, with the recent advent of chophysical data. Therefore, we conduct a psychophysical study in high-dynamic-range (HDR) images and very bright displays [Seet- order to acquire appearance data for many different luminance lev- 2 zen et al. 2004], a generalized color appearance model is much els (up to 16,860 cd/m ) covering most of the dynamic range of the needed.