Modeling Human Color Perception Under Extended Luminance Levels

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space. A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model.

In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept. Most people have heard that, when working with paints, a wide range of colors can be created from the primary colors red, blue, and yellow. Those colors then define a color space. We can specify the amount of red as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space in which every possible color has a unique position.

Urban Land Grab Or Fair Urbanization?

Urban land grab or fair urbanization? Compulsory land acquisition and sustainable livelihoods in Hue, Vietnam

Dutch title: Stedelijke landroof of eerlijke verstedelijking? Landonteigening en duurzaam levensonderhoud in Hue, Vietnam (with a summary in Dutch)

Vietnamese title: Chiếm đoạt đất đai đô thị hay đô thị hoá công bằng? Thu hồi đất đai cưỡng chế và sinh kế bền vững ở Huế, Việt Nam (with a summary in Vietnamese)

Thesis submitted for the degree of Doctor at Utrecht University, by authority of the Rector Magnificus, Prof. dr. G.J. van der Zwaan, in accordance with the decision of the Board for the Conferral of Doctoral Degrees, to be defended in public on Monday 21 December 2015 at 12:45 p.m. by Nguyen Quang Phuc, born on 10 December 1980 in Thua Thien Hue, Vietnam.

Promotor: Prof. dr. E.B. Zoomers. Copromotor: Dr. A.C.M. van Westen.

This thesis was accomplished with financial support from Vietnam International Education Development (VIED), Ministry of Education and Training, and the LANDac programme (the IS Academy on Land Governance for Equitable and Sustainable Development).

ISBN 978-94-6301-026-9. Uitgeverij Eburon, Postbus 2867, 2601 CW Delft. Tel.: 015-2131484. www.eburon.nl

Cover design and pictures: Nguyen Quang Phuc. Cartography and design figures: Nguyen Quang Phuc.

© 2015 Nguyen Quang Phuc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission in writing from the proprietor.

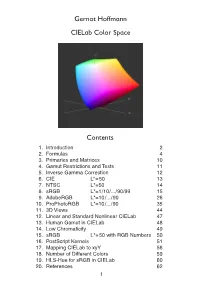

Cielab Color Space

Gernot Hoffmann, CIELab Color Space

Contents

1. Introduction
2. Formulas
3. Primaries and Matrices
4. Gamut Restrictions and Tests
5. Inverse Gamma Correction
6. CIE L*=50
7. NTSC L*=50
8. sRGB L*=1/10/.../90/99
9. AdobeRGB L*=10/.../90
10. ProPhotoRGB L*=10/.../90
11. 3D Views
12. Linear and Standard Nonlinear CIELab
13. Human Gamut in CIELab
14. Low Chromaticity
15. sRGB L*=50 with RGB Numbers
16. PostScript Kernels
17. Mapping CIELab to xyY
18. Number of Different Colors
19. HLS-Hue for sRGB in CIELab
20. References

1.1 Introduction

CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X, Y, Z. CIE xyY, the horseshoe diagram, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*, a*, b*.

The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, shown in the original for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces.

The CIELab color space was intended to give equal perceptual differences for equal changes in the coordinates L*, a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
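The three relationships named in this excerpt (the xy projection of XYZ, the nonlinear XYZ to L*a*b* transform, and deltaE as a Euclidean distance) can be sketched in a few lines of Python. This is a minimal illustration, not code from Hoffmann's document: it uses the standard CIE 1976 formulas, and the D65 reference white and the function names are assumptions chosen for the example.

```python
import math

# Assumption: D65 reference white (2-degree observer), scaled so that Y = 100.
D65_WHITE = (95.047, 100.0, 108.883)


def xyz_to_xy(X, Y, Z):
    """Project XYZ onto the xy chromaticity plane (luminance is discarded)."""
    total = X + Y + Z
    return X / total, Y / total


def _f(t):
    """CIE 1976 nonlinearity: cube root, with a linear segment near black."""
    delta = 6 / 29
    if t > delta ** 3:
        return t ** (1 / 3)
    return t / (3 * delta ** 2) + 4 / 29


def xyz_to_lab(X, Y, Z, white=D65_WHITE):
    """Nonlinear transform of XYZ into (L*, a*, b*) relative to a white point."""
    Xn, Yn, Zn = white
    fx, fy, fz = _f(X / Xn), _f(Y / Yn), _f(Z / Zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def delta_e76(lab1, lab2):
    """Color difference as the Euclidean distance between two Lab triples."""
    return math.dist(lab1, lab2)


if __name__ == "__main__":
    print(xyz_to_lab(*D65_WHITE))                 # the white point itself
    print(delta_e76((50, 0, 0), (50, 3, 4)))      # a 3-4-5 triangle in the a*b* plane
```

Note that `math.dist` requires Python 3.8 or later. Other white points (the excerpt says they are defined individually per color space) can be passed through the `white` parameter.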

Applying CIECAM02 for Mobile Display Viewing Conditions

YungKyung Park*, ChangJun Li*, M. R. Luo*, Youngshin Kwak**, Du-Sik Park**, and Changyeong Kim**; *University of Leeds, Colour Imaging Lab, UK; **Samsung Advanced Institute of Technology, Yongin, South Korea

Abstract

Small displays are widely used for mobile phones, PDAs and portable DVD players. They are small enough to be carried around and are viewed under various surround conditions. An experiment was carried out to accumulate colour appearance data on a 2 inch mobile phone display, a 4 inch PDA display and a 7 inch LCD display using the magnitude estimation method. It was divided into 12 experimental phases according to four surround conditions (dark, dim, average, and bright). The visual results in terms of lightness, colourfulness, brightness and hue from different phases were used to test and refine the CIE colour appearance model, CIECAM02 [1]. The refined model is based on continuous functions to calculate different surround parameters for mobile displays. There was a large improvement in model performance, especially for the bright surround condition.

Figure 1. The colour gamut of the three displays studied (axes: u', v').

Introduction

Many previous colour appearance studies were carried out using household TV or PC displays viewed under rather restricted viewing conditions. In practice, the colour appearance of mobile displays is affected by a variety of viewing conditions. First of all, the display size is much smaller than that of other displays, as it is built to be carried around easily.

Prediction of Munsell Appearance Scales Using Various Color-Appearance Models

David R. Wyble,* Mark D. Fairchild. Munsell Color Science Laboratory, Rochester Institute of Technology, 54 Lomb Memorial Dr., Rochester, New York 14623-5605. Received 1 April 1999; accepted 10 July 1999.

Abstract: The chromaticities of the Munsell Renotation Dataset were applied to eight color-appearance models. Models used were: CIELAB, Hunt, Nayatani, RLAB, LLAB, CIECAM97s, ZLAB, and IPT. Models were used to predict three appearance correlates of lightness, chroma, and hue. Model output of these appearance correlates was evaluated for uniformity, in light of the constant perceptual nature of the Munsell Renotation data. Some background is provided on the experimental derivation of the Renotation Data, including the specific tasks performed by observers to evaluate a sample hue leaf for chroma uniformity. No particular model excelled at all metrics. In general, as might be expected, models derived from the Munsell System per-

[...] predict the color of the objects accurately in these examples, a color-appearance model is required. Modern color-appearance models should, therefore, be able to account for changes in illumination, surround, observer state of adaptation, and, in some cases, media changes. This definition is slightly relaxed for the purposes of this article, so simpler models such as CIELAB can be included in the analysis.

This study compares several modern color-appearance models with respect to their ability to predict uniformly the dimensions (appearance scales) of the Munsell Renotation Data [1], hereafter referred to as the Munsell data. Input to all models is the chromaticities of the Munsell data, and is more fully described below.

ROCK-COLOR CHART with Genuine Munsell® Color Chips

Geological ROCK-COLOR CHART with genuine Munsell® color chips. 2009 Year Revised | 2009 Production Date put into use

This publication is a revision of the previously published Geological Society of America (GSA) Rock-Color Chart prepared by the Rock-Color Chart Committee (representing the U.S. Geological Survey, GSA, the American Association of Petroleum Geologists, the Society of Economic Geologists, and the Association of American State Geologists). Colors within this chart are the same as those used in previous GSA editions, and the chart was produced in cooperation with GSA.

Produced by: 4300 44th Street • Grand Rapids, MI 49512 • Tel: 877-888-1720 • munsell.com

The Rock-Color Chart

The pages within this book are cleanable and can be exposed to the standard environmental conditions that are met in the field. This does not mean that the book will withstand every environmental condition it is exposed to in the field. When cleaning the colored pages, do not use cleaning agents or materials that are coarse in nature. These could either damage the surface of the color chips or cause the color chips to start to delaminate from the pages. When specifying rock color, remember to replace the rock color chart book on a regular basis so that the colors being specified are consistent from one individual to another. We recommend that you mark the date when you started to use the book.

Ph.D. Thesis Abstract Index

COLOR REPRODUCTION OF FACIAL PATTERN AND ENDOSCOPIC IMAGE BASED ON COLOR APPEARANCE MODELS

Francisco Hideki Imai, Graduate School of Science and Technology, Chiba University, Japan. December 1996. Dedicated to my parents.

A dissertation submitted to the Graduate School of Science and Technology of Chiba University in partial fulfillment of the requirements for the degree of Doctor of Philosophy by Francisco Hideki Imai, December 1996.

Declaration: This is to certify that this work has been done by me and it has not been submitted elsewhere for the award of any degree or diploma. Signature of the student: Francisco Hideki Imai. Countersigned: Yoichi Miyake, Professor.

The undersigned have examined the dissertation entitled "Color Reproduction of Facial Pattern and Endoscopic Image Based on Color Appearance Models" presented by Francisco Hideki Imai, a candidate for the degree of Doctor of Philosophy, and hereby certify that it is worthy of acceptance. Advisor: Yoichi Miyake, Professor. Examining committee: Yoshizumi Yasuda, Professor; Toshio Honda, Professor; Hirohisa Yaguchi, Professor; Atsushi Imiya, Associate Professor.

Abstract

In recent years, many imaging systems have been developed, and it has become increasingly important to exchange image data through computer networks. It is therefore necessary to reproduce color independently on each imaging device. In studies of device-independent color reproduction, colorimetric color reproduction has been used; that is, a color with the same chromaticity or tristimulus values is reproduced. However, even if the tristimulus values are the same, color appearance is not always the same under different viewing conditions.

Chapter 6 COLOR and COLOR VISION

You need to learn the concepts and formulae highlighted in red. The rest of the text is for your intellectual enjoyment, but is not a requirement for homework or exams.

Color. White light is a mixture of lights of different wavelengths. If you break white light from the sun into its components, by using a prism or a diffraction grating, you see a sequence of colors that vary continuously from red to violet. The prism separates the different colors because the index of refraction n is slightly different for each wavelength, that is, for each color. This phenomenon is called dispersion.

When white light illuminates a prism, the colors of the spectrum are separated and refracted at the first as well as the second prism surface encountered. They are deflected towards the normal on the first refraction and away from the normal on the second. If the prism is made of crown glass, the index of refraction for violet rays is n(400 nm) = 1.59, while for red rays it is n(700 nm) = 1.58. From Snell's law, the greater n, the more the rays are deflected; therefore violet rays are deflected more than red rays.

The infinity of colors you see in the real spectrum (top panel of the original figure) are called spectral colors. The second panel is a simplified version of the spectrum, with abrupt and completely artificial separations between colors. As a figure of speech, however, we do identify quite a broad range of wavelengths as red, another as orange, and so on.
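As a rough numerical check of the dispersion argument, Snell's law (n_air · sin θ1 = n_glass · sin θ2) can be applied at the first prism surface with the two indices quoted in the excerpt. The 45° angle of incidence, the value n_air = 1.0 and the function name are assumptions made only for this sketch.

```python
import math


def refraction_angle_deg(incidence_deg, n_glass, n_air=1.0):
    """Angle of the refracted ray (from the normal) for light entering glass."""
    sin_refracted = n_air * math.sin(math.radians(incidence_deg)) / n_glass
    return math.degrees(math.asin(sin_refracted))


# Indices quoted in the excerpt above.
N_VIOLET = 1.59   # 400 nm
N_RED = 1.58      # 700 nm
INCIDENCE_DEG = 45.0  # assumed angle of incidence, for illustration only

violet = refraction_angle_deg(INCIDENCE_DEG, N_VIOLET)
red = refraction_angle_deg(INCIDENCE_DEG, N_RED)

if __name__ == "__main__":
    print(f"violet: refracted at {violet:.2f} deg, bent by {INCIDENCE_DEG - violet:.2f} deg")
    print(f"red:    refracted at {red:.2f} deg, bent by {INCIDENCE_DEG - red:.2f} deg")
```

The violet ray ends up closer to the normal than the red ray, so it is bent by a slightly larger angle, which matches the statement that a greater n means a greater deflection.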

Computational Color Harmony Based on Coloroid System

Computational Aesthetics in Graphics, Visualization and Imaging (2005). L. Neumann, M. Sbert, B. Gooch, W. Purgathofer (Editors).

Computational Color Harmony based on Coloroid System

László Neumann†, Antal Nemcsics‡, and Attila Neumann§

† Grup de Gràfics de Girona, Universitat de Girona, and Institució Catalana de Recerca i Estudis Avançats, ICREA, Barcelona, Spain
‡ Budapest University of Technology and Economics, Hungary
§ Institute of Computer Graphics and Algorithms, Vienna University of Technology, Austria

Figure 1: (a) visualization of the overall appearance of a dichromatic color set with the 'caleidoscope' option of the Color Plan Designer software and (b) interactive color selection of a dichromatic color set in multi-layer mode, applying a rotated regular grid.

Abstract

This paper presents experimentally based rules and methods for the creation of harmonic color sets. First, dichromatic rules are presented which concern the harmony relationships of two hues. For an arbitrarily given hue pair, we define the just harmonic saturation values, resulting in minimally harmonic color pairs. These values express the fuzzy border between harmony and disharmony regions using a single scalar. Second, the value of harmony is defined corresponding to the contrast of lightness, i.e. the difference of perceptual lightness values. Third, we formulate the harmony value of the saturation contrast, depending on hue and lightness. The results of these investigations form a basis for a unified, coherent dichromatic harmony formula as well as for analysis of polychromatic color harmony. The color harmony rules introduced are based on Coloroid, which is one of the 5-6 main color-order systems and, furthermore, an aesthetically uniform continuous color space.

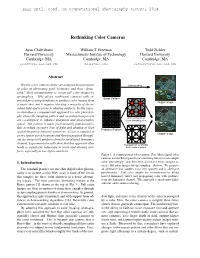

Rethinking Color Cameras

Ayan Chakrabarti (Harvard University, Cambridge, MA), William T. Freeman (Massachusetts Institute of Technology, Cambridge, MA), Todd Zickler (Harvard University, Cambridge, MA)

Abstract

Digital color cameras make sub-sampled measurements of color at alternating pixel locations, and then "demosaick" these measurements to create full color images by up-sampling. This allows traditional cameras with restricted processing hardware to produce color images from a single shot, but it requires blocking a majority of the incident light and is prone to aliasing artifacts. In this paper, we introduce a computational approach to color photography, where the sampling pattern and reconstruction process are co-designed to enhance sharpness and photographic speed. The pattern is made predominantly panchromatic, thus avoiding excessive loss of light and aliasing of high spatial-frequency intensity variations. Color is sampled at a very sparse set of locations and then propagated throughout the image with guidance from the un-aliased luminance channel. Experimental results show that this approach often leads to significant reductions in noise and aliasing artifacts, especially in low-light conditions.

Figure 1. A computational color camera. Top: Most digital color cameras use the Bayer pattern (or something like it) to sub-sample color alternatingly; and then they demosaick these samples to create full-color images by up-sampling. Bottom: We propose an alternative that samples color very sparsely and is otherwise panchromatic.

1. Introduction

The standard practice for one-shot digital color photography is to include a color filter array in front of the sensor
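To make "sub-sampled measurements of color at alternating pixel locations" concrete, the sketch below simulates conventional Bayer sampling, the baseline the excerpt contrasts against, not the paper's proposed panchromatic pattern. The RGGB tile layout, the nested-list image representation and the function names are assumptions for illustration.

```python
def bayer_channel(row, col):
    """Channel index (0 = R, 1 = G, 2 = B) kept at a pixel of an RGGB tile."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def bayer_mosaic(image):
    """Keep one color sample per pixel; `image` is rows of (R, G, B) tuples."""
    return [
        [pixel[bayer_channel(r, c)] for c, pixel in enumerate(row)]
        for r, row in enumerate(image)
    ]


if __name__ == "__main__":
    # A uniform 2x2 test image: every pixel has R=1, G=2, B=3.
    flat = [[(1, 2, 3)] * 2 for _ in range(2)]
    print(bayer_mosaic(flat))  # one R, two G and one B sample survive
```

Each pixel retains only one of its three color values, which is why a demosaicking (up-sampling) step is needed afterwards and why, as the abstract notes, most of the incident light is blocked by the filters.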

Illumination and Distance

PHYS 1400: Physical Science Laboratory Manual

ILLUMINATION AND DISTANCE

INTRODUCTION

How bright is that light? You know, from experience, that a 100 W light bulb is brighter than a 60 W bulb. The wattage measures the energy used by the bulb, which depends on the bulb, not on where the person observing it is located. But you also know that how bright the light looks does depend on how far away it is. That 100 W bulb is still emitting the same amount of energy every second, but if you are farther away from it, the energy is spread out over a greater area. You receive less energy, and perceive the light as less bright. But because the light energy is spread out over an area, it's not a linear relationship. When you double the distance, the energy is spread out over four times as much area. If you triple the distance, the area is nine times as great, meaning that you receive only 1/9 (or 11%) as much energy from the light source.

Figure caption: Twice the distance, ¼ as bright. Triple the distance? 11% as bright.

To quantify the amount of light, we will use units called lux. The idea is simple: energy emitted per second (watts), spread out over an area (square meters). However, a lux is not a W/m²! A lux is a lumen per m². So, what is a lumen? Technically, it's one candela emitted uniformly across a solid angle of 1 steradian. That's not helping, is it? Examine the figure above. The source emits light (energy) in all directions simultaneously.
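The inverse-square relationship described above can be checked with a short calculation. This is a minimal sketch for an idealized point source; the function name and the choice to express distance as a multiple of a reference distance are assumptions made for the example.

```python
def relative_illuminance(distance_factor):
    """Fraction of the original illuminance after scaling the distance.

    `distance_factor` is the new distance divided by the reference distance,
    so 2 means "twice as far away". Assumes an ideal point source.
    """
    if distance_factor <= 0:
        raise ValueError("distance_factor must be positive")
    return 1.0 / distance_factor ** 2


if __name__ == "__main__":
    for factor in (1, 2, 3):
        share = relative_illuminance(factor)
        print(f"{factor}x the distance: {share:.3f} of the light ({share:.0%})")
```

Doubling the distance gives one quarter of the light and tripling it gives one ninth, about 11%, in agreement with the figure caption.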

Spatial Filtering, Color Constancy, and the Color-Changing Dress

Journal of Vision (2017) 17(3):7, 1–20

Erica L. Dixon, Department of Psychology, American University, Washington, DC, USA
Arthur G. Shapiro, Department of Psychology and Department of Computer Science, American University, Washington, DC, USA

The color-changing dress is a 2015 Internet phenomenon in which the colors in a picture of a dress are reported as blue-black by some observers and white-gold by others. The standard explanation is that observers make different inferences about the lighting (is the dress in shadow or bright yellow light?); based on these inferences, observers make a best guess about the reflectance of the dress. The assumption underlying this explanation is that reflectance is the key to color constancy because reflectance alone remains invariant under changes in lighting conditions. Here, we demonstrate an alternative type of invariance across illumination conditions: An object that appears to vary in color under blue, white, or yellow illumination does not change color in the high spatial frequency region.

[...] divide among responders (as well as disagreement from widely followed celebrity commentators) fueled a rapid spread of the photo across many online news outlets, and the topic trended worldwide on Twitter under the hashtag #theDress. The huge debate on the Internet also sparked debate in the vision science community about the implications of the stimulus with regard to individual differences in color perception, which in turn led to a special issue of the Journal of Vision, for which this article is written.

The predominant explanations in both scientific journals (Gegenfurtner, Bloj, & Toscani, 2015; Lafer-Sousa, Hermann, & Conway, 2015; Winkler, Spill-