Ph.D. Thesis Abstract

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


ROCK-COLOR CHART with Genuine Munsell® Color Chips

Geological Rock-Color Chart with genuine Munsell® color chips. 2009 Year Revised | 2009 Production Date | Date put into use. This publication is a revision of the previously published Geological Society of America (GSA) Rock-Color Chart prepared by the Rock-Color Chart Committee (representing the U.S. Geological Survey, GSA, the American Association of Petroleum Geologists, the Society of Economic Geologists, and the Association of American State Geologists). Colors within this chart are the same as those used in previous GSA editions, and the chart was produced in cooperation with GSA. Produced by: 4300 44th Street • Grand Rapids, MI 49512 • Tel: 877-888-1720 • munsell.com

The Rock-Color Chart. The pages within this book are cleanable and can be exposed to the standard environmental conditions met in the field. This does not mean that the book can withstand every environmental condition it is exposed to in the field. When cleaning the colored pages, do not use a cleaning agent or materials that are coarse in nature. These materials could either damage the surface of the color chips or cause the color chips to start to delaminate from the pages. When specifying rock color, remember to replace the rock color chart book on a regular basis so that the colors being specified are consistent from one individual to another. We recommend that you mark the date when you started to use the book.

Medtronic Brand Color Chart

Medtronic Brand Color Chart. Medtronic Visual Identity System: Color Ratios. Navy Blue Medtronic Blue Cobalt Blue Charcoal Blue Gray Dark Gray Yellow Light Orange Gray Orange Medium Blue Sky Blue Light Blue Light Gray Pale Gray White Purple Green Turquoise. Primary Blue Color Palette 70%; Primary Neutral Color Palette 20%; Accent Color Palette 10%.

The Medtronic Brand Color Palette was created for use in all material. Please use the CMYK, RGB, HEX, and LAB values as often as possible. If you are not able to use the LAB values, you can use the Pantone equivalents, but be aware the color output will vary from the other four color breakdowns. If you need a spot color, the preference is for you to use the LAB values.

Primary Blue Color Palette

| Color | C / M / Y / K | R / G / B | L / A / B | Web/HEX | Pantone |
|---|---|---|---|---|---|
| Navy Blue | 100 / 94 / 47 / 43 | 0 / 30 / 70 | 15 / 2 / -20 | #001E46 | 533 C |
| Medtronic Blue | 99 / 74 / 17 / 4 | 0 / 75 / 135 | 31 / -2 / -40 | #004B87 | 2154 C |
| Cobalt Blue | 81 / 35 / 0 / 0 | 0 / 133 / 202 | 52 / -12 / -45 | #0085CA | 2382 C |
| Medium Blue | 73 / 12 / 0 / 0 | 0 / 169 / 224 | 64 / -23 / -39 | #00A9E0 | 2191 C |
| Sky Blue | 55 / 4 / 4 / 0 | 113 / 197 / 232 | 75 / -20 / -26 | #71C5E8 | 297 C |
| Light Blue | 29 / 5 / 5 / 0 | 185 / 217 / 235 | 85 / -9 / -13 | #B9D9EB | 290 C |

Primary Neutral Color Palette

| Color | C / M / Y / K | R / G / B | L / A / B | Web/HEX | Pantone |
|---|---|---|---|---|---|
| Charcoal Gray | 0 / 0 / 0 / … | 83 / 86 / … | 36 / 0 / … | #53565a | Cool Gray … |
| Blue Gray | 68 / 40 / 28 / … | 91 / 127 / … | 51 / -9 / … | #5B7F95 | 5415 … |
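The Web/HEX and RGB columns in the chart carry the same information in two notations, so one can be checked against the other. The following is a minimal sketch, not part of the Medtronic guidelines; the function name `hex_to_rgb` is a hypothetical helper introduced only for illustration.

```python
def hex_to_rgb(hex_code: str) -> tuple[int, int, int]:
    """Convert a Web/HEX color such as '#001E46' to an (R, G, B) tuple."""
    digits = hex_code.lstrip("#")
    if len(digits) != 6:
        raise ValueError(f"expected 6 hex digits, got {hex_code!r}")
    # Each pair of hex digits encodes one 8-bit channel.
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


# Values taken from the chart: Navy Blue and Medtronic Blue.
print(hex_to_rgb("#001E46"))  # (0, 30, 70)
print(hex_to_rgb("#004B87"))  # (0, 75, 135)
```

Both results agree with the R, G, and B values listed for those two colors.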

Digital Color Workflows and the HP DreamColor LP2480zx Professional Display

Digital Color Workflows and the HP DreamColor LP2480zx Professional Display. Improving accuracy and predictability in color processing at the designer’s desk can increase productivity and improve quality of digital color projects in animation, game development, film/video post production, broadcast, product design, graphic arts and photography. Contents: Introduction; Managing color; The property of color; The RGB color set; The CMYK color set; Color spaces; Gamuts; The digital workflow; Color profiles

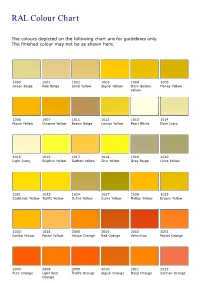

RAL Colour Chart

RAL Colour Chart. The colours depicted on the following chart are for guidelines only. The finished colour may not be as shown here. 1000 Green Beige, 1001 Pale Beige, 1002 Sand Yellow, 1003 Signal Yellow, 1004 Dark Golden Yellow, 1005 Honey Yellow, 1006 Maize Yellow, 1007 Chrome Yellow, 1011 Brown Beige, 1012 Lemon Yellow, 1013 Pearl White, 1014 Dark Ivory, 1015 Light Ivory, 1016 Sulphur Yellow, 1017 Saffron Yellow, 1018 Zinc Yellow, 1019 Grey Beige, 1020 Olive Yellow, 1021 Cadmium Yellow, 1023 Traffic Yellow, 1024 Ochre Yellow, 1027 Curry Yellow, 1028 Melon Yellow, 1032 Broom Yellow, 1033 Dahlia Yellow, 1034 Pastel Yellow, 2000 Yellow Orange, 2001 Red Orange, 2002 Vermillion, 2003 Pastel Orange, 2004 Pure Orange, 2008 Light Red Orange, 2009 Traffic Orange, 2010 Signal Orange, 2011 Deep Orange, 2012 Salmon Orange, 3000 Flame Red, 3001 Signal Red, 3002 Carmine Red, 3003 Ruby Red, 3004 Purple Red, 3005 Wine Red, 3007 Black Red, 3009 Oxide Red, 3011 Brown Red, 3012 Beige Red, 3013 Tomato Red, 3014 Antique Pink, 3015 Light Pink, 3016 Coral Red, 3017 Rose, 3018 Strawberry Red, 3020 Traffic Red, 3022 Dark Salmon Red, 3027 Raspberry Red, 3031 Orient Red, 4001 Red Lilac, 4002 Red Violet, 4003 Heather Violet, 4004 Claret Violet, 4005 Blue Lilac, 4006 Traffic Purple, 4007 Purple Violet, 4008 Signal Violet, 4009 Pastel Violet, 4010 Telemagenta, 5000 Violet Blue, 5001 Green Blue, 5002 Ultramarine Blue, 5003 Dark Sapphire Blue, 5004 Black Blue, 5005 Signal Blue, 5007 Brilliant Blue, 5008 Grey Blue, 5009 Light Azure Blue, 5010 Gentian Blue, 5011 Steel Blue, 5012 Light Blue, 5013 5014 5015 5017 5018 5019 Dark Cobalt Blue

RAL COLOR CHART ***** This Chart Is to Be Used As a Guide Only. Colors May Appear Slightly Different ***** Green Beige Purple V

RAL COLOR CHART. This chart is to be used as a guide only. Colors may appear slightly different.

| RAL | Name | RAL | Name | RAL | Name |
|---|---|---|---|---|---|
| 1000 | Green Beige | 4007 | Purple Violet | 7008 | Khaki Grey |
| 1001 | Beige | 4008 | Signal Violet | 7009 | Green Grey |
| 1002 | Sand Yellow | 4009 | Pastel Violet | 7010 | Tarpaulin Grey |
| 1003 | Signal Yellow | 5000 | Violet Blue | 7011 | Iron Grey |
| 1004 | Golden Yellow | 5001 | Green Blue | 7012 | Basalt Grey |
| 1005 | Honey Yellow | 5002 | Ultramarine Blue | 7013 | Brown Grey |
| 1006 | Maize Yellow | 5003 | Sapphire Blue | 7015 | Slate Grey |
| 1007 | Chrome Yellow | 5004 | Black Blue | 7016 | Anthracite Grey |
| 1011 | Brown Beige | 5005 | Signal Blue | 7021 | Black Grey |
| 1012 | Lemon Yellow | 5007 | Brilliant Blue | 7022 | Umbra Grey |
| 1013 | Oyster White | 5008 | Grey Blue | 7023 | Concrete Grey |
| 1014 | Ivory | 5009 | Azure Blue | 7024 | Graphite Grey |
| 1015 | Light Ivory | 5010 | Gentian Blue | 7026 | Granite Grey |
| 1016 | Sulfur Yellow | 5011 | Steel Blue | 7030 | Stone Grey |
| 1017 | Saffron Yellow | 5012 | Light Blue | 7031 | Blue Grey |
| 1018 | Zinc Yellow | 5013 | Cobalt Blue | 7032 | Pebble Grey |
| 1019 | Grey Beige | 5014 | Pigeon Blue | 7033 | Cement Grey |
| 1020 | Olive Yellow | 5015 | Sky Blue | 7034 | Yellow Grey |
| 1021 | Rape Yellow | 5017 | Traffic Blue | 7035 | Light Grey |
| 1023 | Traffic Yellow | 5018 | Turquoise Blue | 7036 | Platinum Grey |
| 1024 | Ochre Yellow | 5019 | Capri Blue | 7037 | Dusty Grey |
| 1027 | Curry | 5020 | Ocean Blue | 7038 | Agate Grey |
| 1028 | Melon Yellow | 5021 | Water Blue | 7039 | Quartz Grey |

340 K2017D.Pdf

Contents: Introduction; Model Color; Liquid Gold; Model Air; Metal Color; Panzer Aces; Game Color; Game Air; Color Cases; Weathering Effects; Pigments; Model Wash; Surface Primer; Diorama Effects; Premium RC Color; Auxiliaries; Brushes; Paint Stand; Accessories; Publications; Displays; Health & Safety. Made in Spain.

All colors in our catalogue conform to ASTM D-4236 standards and EEC regulation 67/548/CEE, and do not require health labelling. Due to the printing process, the colors reproduced in this catalogue are to be considered as approximate only, and may not correspond exactly to the originals.

Pictures courtesy of: Avatars of War, Juanjo Baron, José Brito, Murat Özgül, Chema Cabrero, Freebooterminiatures, Angel Giraldez, Raúl Garcia La Torre, Jaime Ortiz, Plastic Soldier Company Ltd, Euromodelismo, Robert Karlsson “Rogland”, Scratchmod.

Introduction. The Vallejo Company was established in 1965, in New Jersey, U.S.A. In the first years the company specialized in the manufacture of Film Color, waterbased acrylic colors for animated films (cartoons).

Gamut Mapping Algorithm Using Lightness Mapping and Multiple Anchor Points for Linear Tone and Maximum Chroma Reproduction

JOURNAL OF IMAGING SCIENCE AND TECHNOLOGY® • Volume 45, Number 3, May/June 2001. Feature Article. Gamut Mapping Algorithm Using Lightness Mapping and Multiple Anchor Points for Linear Tone and Maximum Chroma Reproduction. Chae-Soo Lee, Yang-Woo Park, Seok-Je Cho,* and Yeong-Ho Ha†. Department of Software Engineering, Kyungwoon University, Kyungbuk, Korea; * Department of Control and Instrumentation Engineering, Korea Maritime University, Yeongdo-ku Pusan, Korea; † School of Electronic and Electrical Engineering, Kyungpook National University, Taegu, Korea.

This article proposes a new gamut-mapping algorithm (GMA) that utilizes both lightness mapping and multiple anchor points. The proposed lightness mapping minimizes the lightness difference of the maximum chroma between two gamuts and produces the linear tone in bright and dark regions. In the chroma mapping, a separate mapping method that utilizes multiple anchor points with constant slopes plus a fixed anchor point is proposed to maintain the maximum chroma and produce a uniform tonal dynamic range. As a result, the proposed algorithm is able to reproduce high quality images using low-cost color devices. Journal of Imaging Science and Technology 45: 209–223 (2001)

Introduction. Some practical output systems are only capable of producing a limited range of colors. The range of producible colors on a device is referred to as its gamut. Often, an image will contain colors that are outside the gamut … of the reproduction’s. Therefore, if the lightness values of the maximum chroma in the two gamuts are not located at the center of the lightness axis of the two media, the parametric GMA will produce a different color change in the bright and dark regions.

Computational RYB Color Model and Its Applications

IIEEJ Transactions on Image Electronics and Visual Computing Vol.5 No.2 (2017), Special Issue on Application-Based Image Processing Technologies. Computational RYB Color Model and its Applications. Junichi SUGITA† (Member), Tokiichiro TAKAHASHI†† (Member). †Tokyo Healthcare University, ††Tokyo Denki University/UEI Research.

Summary: The red-yellow-blue (RYB) color model is a subtractive model based on pigment color mixing and is widely used in art education. In the RYB color model, red, yellow, and blue are defined as the primary colors. In this study, we apply this model to computers by formulating a conversion between the red-green-blue (RGB) and RYB color spaces. In addition, we present a class of compositing methods in the RYB color space. Moreover, we prescribe the appropriate uses of these compositing methods in different situations. By using RYB color compositing, paint-like compositing can be easily achieved. We also verified the effectiveness of our proposed method by using several experiments and demonstrated its application on the basis of RYB color compositing.

Keywords: RYB, RGB, CMY(K), color model, color space, color compositing

1. Introduction. Most people have had the experience of creating an arbitrary color by mixing different color pigments on a palette or a canvas. The red-yellow-blue (RYB) color model proposed … human perception system and computer displays, most computer applications use the red-green-blue (RGB) color model 3); however, this model is not comprehensible for many people who are not trained in the RGB color model because of its use of additive color mixing. As shown in Fig.

Ral Colour Chart.Pdf

RAL Classic Colour Chart. The colours depicted on the following chart are for guidance only. The displayed colour will depend on your printer, monitor and browser and pearl or metallic colours cannot be shown adequately. The finished colour, therefore, may not be as shown here. Colour-chart courtesy of RAL. RAL 1000 Green beige, RAL 1001 Beige, RAL 1002 Sand yellow, RAL 1003 Signal yellow, RAL 1004 Golden yellow, RAL 1005 Honey yellow, RAL 1006 Maize yellow, RAL 1007 Daffodil yellow, RAL 1011 Brown beige, RAL 1012 Lemon yellow, RAL 1013 Oyster white, RAL 1014 Ivory, RAL 1015 Light ivory, RAL 1016 Sulphur yellow, RAL 1017 Saffron yellow, RAL 1018 Zinc yellow, RAL 1019 Grey beige, RAL 1020 Olive yellow, RAL 1021 Rape yellow, RAL 1023 Traffic yellow, RAL 1024 Ochre yellow, RAL 1026 Luminous yellow, RAL 1027 Curry, RAL 1028 Melon yellow, RAL 1032 Broom yellow, RAL 1033 Dahlia yellow, RAL 1034 Pastel yellow, RAL 1035 Pearl beige, RAL 1036 Pearl gold, RAL 1037 Sun yellow, RAL 2000 Yellow orange, RAL 2001 Red orange, RAL 2002 Vermilion, RAL 2003 Pastel orange, RAL 2004 Pure orange, RAL 2005 Luminous orange, RAL 2007 Luminous bright orange, RAL 2008 Bright red orange, RAL 2009 Traffic orange, RAL 2010 Signal orange, RAL 2011 Deep orange, RAL 2012 Salmon orange, RAL 2013 Pearl orange, RAL 3000 Flame red, RAL 3001 Signal red, RAL 3002 Carmine red, RAL 3003 Ruby red, RAL 3004 Purple red, RAL 3005 Wine red, RAL 3007 Black red, RAL 3009 Oxide red, RAL 3011 Brown red, RAL 3012 Beige red, RAL 3013 Tomato red, RAL 3014 Antique pink, RAL 3015 Light pink, RAL 3016 Coral red, RAL 3017 Rose, RAL 3018 Strawberry red, RAL 3020 Traffic red

Color Chart Colorchart

Color Chart AMERICANA ACRYLICS Snow (Titanium) White White Wash Cool White Warm White Light Buttermilk Buttermilk Oyster Beige Antique White Desert Sand Bleached Sand Eggshell Pink Chiffon Baby Blush Cotton Candy Electric Pink Poodleskirt Pink Baby Pink Petal Pink Bubblegum Pink Carousel Pink Royal Fuchsia Wild Berry Peony Pink Boysenberry Pink Dragon Fruit Joyful Pink Razzle Berry Berry Cobbler French Mauve Vintage Pink Terra Coral Blush Pink Coral Scarlet Watermelon Slice Cadmium Red Red Alert Cinnamon Drop True Red Calico Red Cherry Red Tuscan Red Berry Red Santa Red Brilliant Red Primary Red Country Red Tomato Red Naphthol Red Oxblood Burgundy Wine Heritage Brick Alizarin Crimson Deep Burgundy Napa Red Rookwood Red Antique Maroon Mulberry Cranberry Wine Natural Buff Sugared Peach White Peach Warm Beige Coral Cloud Cactus Flower Melon Coral Blush Bright Salmon Peaches 'n Cream Coral Shell Tangerine Bright Orange Jack-O'-Lantern Orange Spiced Pumpkin Tangelo Orange Orange Flame Canyon Orange Warm Sunset Cadmium Orange Dried Clay Persimmon Burnt Orange Georgia Clay Banana Cream Sand Pineapple Sunny Day Lemon Yellow Summer Squash Bright Yellow Cadmium Yellow Yellow Light Golden Yellow Primary Yellow Saffron Yellow Moon Yellow Marigold Golden Straw Yellow Ochre Camel True Ochre Antique Gold Antique Gold Deep Citron Green Margarita Chartreuse Yellow Olive Green Yellow Green Matcha Green Wasabi Green Celery Shoot Antique Green Light Sage Light Lime Pistachio Mint Irish Moss Sweet Mint Sage Mint Mint Julep Green Jadeite Glass Green Tree Jade -

Color Look-Up Table Design for Gamut Mapping and Color Space Conversion

DPP2003: IS&T's International Conference on Digital Production Printing and Industrial Applications. Color Look-Up Table Design for Gamut Mapping and Color Space Conversion. Yun-Tae Kim,* Yang-Ho Cho,* Cheol-Hee Lee,** and Yeong-Ho Ha*. *School of Electrical Eng. and Computer Sci., Kyungpook National Univ., Korea; **Department of Computer Engineering, Kyungwoon Univ., Korea.

Abstract. This paper proposes a method for designing a color look-up table (CLUT) that can simultaneously process gamut mapping and color space conversion without any complex computation. After constructing a LUT composed of the scanner and printer gamuts, fictive sample points are uniformly selected in CIELAB space and CMY values computed using gamut mapping. The scanner input RGB image is then converted into CIELAB using a regression function and the scanner RGB values converted into CMY values using the proposed CLUT that includes gamut mapping without any additional gamut mapping process. In experiments, the proposed method produced a similar color difference to the direct computation method, yet a reduced computational complexity when compared with discrete gamut mapping and color space conversion.

A 3×6 matrix is used to consider the complexity of the system implementation.

$$
\begin{aligned}
L^{(c)} &= \alpha_0 R + \alpha_1 G + \alpha_2 B + \alpha_3 RG + \alpha_4 GB + \alpha_5 BR \\
a^{(c)} &= \beta_0 R + \beta_1 G + \beta_2 B + \beta_3 RG + \beta_4 GB + \beta_5 BR \\
b^{(c)} &= \gamma_0 R + \gamma_1 G + \gamma_2 B + \gamma_3 RG + \gamma_4 GB + \gamma_5 BR
\end{aligned}
\qquad (1)
$$

where $\alpha_j$, $\beta_j$, $\gamma_j$ are the coefficients of the polynomial regression. After the 3×6 matrix is constructed using these coefficients, the arbitrary RGB input is converted into CIELAB values using a matrix computation.
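The matrix computation described for Eq. (1) can be sketched as follows. This is only an illustration under stated assumptions: the function names `poly_terms` and `rgb_to_lab` are hypothetical, and the coefficient values are made-up placeholders, not coefficients from the paper. Fitting the coefficients by regression is not shown.

```python
def poly_terms(r: float, g: float, b: float) -> list[float]:
    """Build the six regression terms of Eq. (1): R, G, B, RG, GB, BR."""
    return [r, g, b, r * g, g * b, b * r]


def rgb_to_lab(matrix: list[list[float]], rgb: tuple[float, float, float]) -> tuple[float, ...]:
    """Apply a 3x6 coefficient matrix (rows: alpha, beta, gamma) to one RGB input."""
    if len(matrix) != 3 or any(len(row) != 6 for row in matrix):
        raise ValueError("expected a 3x6 coefficient matrix")
    terms = poly_terms(*rgb)
    # Each output channel is the dot product of one coefficient row with the terms.
    return tuple(sum(c * t for c, t in zip(row, terms)) for row in matrix)


# Placeholder coefficients for illustration only (NOT fitted values).
example_matrix = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],  # alpha_0 .. alpha_5 -> L
    [0.0, 1.0, 0.0, 0.0, 0.0, 0.0],  # beta_0  .. beta_5  -> a
    [0.0, 0.0, 0.0, 0.0, 0.0, 1.0],  # gamma_0 .. gamma_5 -> b
]

print(rgb_to_lab(example_matrix, (1.0, 2.0, 3.0)))  # (70.0, 2.0, 3.0)
```

In practice the eighteen coefficients would come from a least-squares fit against measured RGB and CIELAB pairs; the sketch covers only the conversion step that follows the fit.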

Securedge™ and Metal Flat Sheet Color Chart

EXPERIENCE THE CARLISLE DIFFERENCE. SecurEdge™ and Metal Flat Sheet Color Chart.

Colors: Stone White, Bone White, Regal White, Sandstone, Almond, Sierra Tan, Buckskin, Medium Bronze, Dark Bronze, Antique Bronze, Midnight Bronze, Aged Bronze, Mansard Brown, Black, Cityscape, Slate Gray, Granite (L), Musket Gray, Charcoal, Iron Ore, Hemlock Green, Patina Green, Forest Green, Hartford Green, Burgundy, Colonial Red, Terra Cotta, Cardinal Red, Teal, Military Blue, Pacific Blue, Interstate Blue, Award Blue, Silver (P), Zinc (P), Weathered Zinc (P), Champagne (P), Copper Penny (P), Aged Copper (P), Drexlume™ (M).

P = PREMIUM; M = MILL FINISH; L = LOW GLOSS COLOR

Legend: = Standard Product; P = CRRC Approved Finishes; Cool Roof Product Options. Columns: SR, SRI, 24 ga x 20", 24 ga x 48", 22 ga x 48", 26 ga x 20", 26 ga x 27.5", 26 ga x 48", 0.032 x 20", 0.032 x 48", 0.040 x 48", 0.050 x 48", 0.063 x 48", Rated.

Standard Colors

| Color | SR | SRI | Mark |
|---|---|---|---|
| Aged Bronze | 0.29 | 29 | P |
| Almond | 0.53 | 62 | P |
| Antique Bronze | 0.29 | 28 | |
| Black | 0.20 | 17 | |
| Bone White | 0.67 | 81 | P |
| Buckskin | 0.38 | 41 | |
| Burgundy | 0.24 | 23 | |
| Charcoal | 0.27 | 27 | P |
| Cityscape | 0.44 | 49 | P |
| Colonial Red | 0.32 | 34 | P |
| Dark Bronze | 0.26 | 24 | P |
| Forest Green | 0.10 | 6 | |
| Hartford Green | 0.29 | 29 | |
| Hemlock Green | 0.29 | 29 | P |
| Interstate Blue | 0.13 | 8 | |
| Iron Ore | 0.27 | 26 | |
| Mansard Brown | 0.29 | 29 | P |
| Medium Bronze | 0.26 | 26 | P |
| Midnight Bronze | 0.06 | 0 | |
| Military Blue | 0.29 | 29 | P |
| Musket Gray | 0.31 | 32 | P |
| Pacific Blue | 0.25 | 24 | P |
| Patina Green | 0.33 | 34 | P |
| Regal White | 0.60 | 78 | |
| Sandstone | 0.49 | 56 | P |
| Sierra Tan | 0.36 | 39 | P |
| Slate Gray | 0.37 | 40 | P |
| Stone White | 0.64 | 77 | P |
| Teal | 0.26 | 25 | P |
| Terra Cotta | 0.36 | 39 | P |

Low Gloss Colors

| Color | SR | SRI | Mark |
|---|---|---|---|
| Aspen Bronze | 0.26 | 26 | P |
| Autumn Red | 0.32 | 34 | P |
| Chestnut Brown | 0.29 | 29 | P |
| Classic Bronze | 0.29 | | |