Performance Analysis of Application-Specific Multicore Systems on Chip

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Embedded Systeme 11

Embedded Systeme (B 13908), an electronics magazine for chip, board and system design. www.systeme-online.de. Issue 11/00 (November). DM 14, ÖS 110, SFR 14. Cover topics: Optimized software development: adopting the telecom strategy. HA systems for telecom: 99.999 percent availability. Real-time control systems: deterministic in the ns range. Visit us at Electronica, Hall A3, Stand 228.

Editorial: Embedded (R)Evolution. In the studies on the theory of descent that he carried out in the 19th century, Charles Darwin recognized that nature develops by evolution. Revolutionary leaps in development are rare in nature. The parallels with technical progress are unmistakable. Technology also develops by evolution, meaning that new products, systems and processes are mostly improved variants of their predecessors. Even technical "revolutions" such as the invention of planar technology, the microprocessor and the Internet are, on closer inspection, further developments, although ones that have "revolutionary" effects on industry and open up entirely new opportunities and markets. The companies involved must adapt to these new conditions, much as species do in nature.

[...] software, real-time operating systems, and the further development of Windows CE, Embedded NT and Embedded Linux are described. The Elektronik-Focus is being expanded into a second main topic and now contains industry-specific topics on embedded systems from all market segments. The sections "Markt" (market), now with the regular subsection "Forschung & Technologie" (research and technology), "Titelstory" (cover story) and "Schwerpunkt" (main topic) remain in place. You, as readers of our magazine, are now being asked: tell us your opinion. A questionnaire is enclosed with this issue and the next one. If you no longer find the questionnaire in the issues, you can also conveniently take part in the reader survey, which includes a prize draw, online (http://www.service-awi.de/leserumfrage). -

Microprocessor Training

Microprocessors and Microcontrollers. © 1999 Altera Corporation. Altera Confidential.

Agenda: New Products - MicroController products (1 hour). Microprocessor Systems; The Embedded Microprocessor Market; Altera Solutions; System Design Considerations; Uncovering Sales Opportunities.

Embedding microprocessors inside programmable logic opens the door to a multi-billion dollar market. Altera has solutions for this market today.

Microprocessor Systems. Processor Terminology. Microprocessor: The implementation of a computer's central processor unit functions on a single LSI device. Microcontroller: A self-contained system with a microprocessor, memory and peripherals on a single chip. "Just add software." Examples: Microprocessor: Motorola PowerPC 600. Microcontroller: Motorola 68HC16.

Two Types of Processors.

Computational: Programmable by the end-user to accomplish a wide range of applications. Runs an operating system. Program exists on mass storage or network. Tend to be microprocessors and more expensive (ASP $193). Examples: Work Station

Embedded: Performs a fixed set of functions that define the product. User may configure but not reprogram. May or may not use an operating system. Program usually exists in ROM or Flash. Tend to be microcontrollers and less expensive (ASP $12). -

Table of Contents

Table of Contents. Experiment #0: PC Hardware and Operating System; Experiment #1: Introduction to Debug and Turbo Debugger; Experiment #2: Addressing modes and data transfer instructions; Experiment #3: Arithmetic instructions; Experiment #4: Shift and rotate instructions; Experiment #5: Using BIOS Services and DOS functions Part 1: Text-based Graphics; Experiment #6: Using BIOS Services and DOS functions Part 2: Pixel-based Graphics; Experiment #7: Introduction to Flight86 Microprocessor Trainer and Application Board; Experiment #8: Flight86 Application I, Traffic Lights; Experiment #9: Flight86 Application II, Motor Control; Experiment #10: Introduction to the 8051 Microcontroller; Appendix A: 8051 Instruction Set; Appendix B: 8051 Special Function Registers; Appendix C: An Introduction to WinLV.

Experiment #0: PC Hardware and Operating Systems. Objective: The -

PSD4000 Series PSD4135G2 Flash In-System-Programmable Peripherals for 16-Bit Mcus

PSD4000 Series PSD4135G2 Flash In-System-Programmable Peripherals for 16-Bit MCUs. November, 2000. Preliminary Information. 47280 Kato Road, Fremont, California 94538. Tel: 510-656-5400, Fax: 510-657-8495, 800-832-6974. Web Site: http://www.psdst.com. E-mail: [email protected].

Table of Contents: Introduction; In-System Programming (ISP) JTAG; In-Application re-Programming (IAP); Key Features; PSD4000 Family; Block Diagram; Architectural Overview -

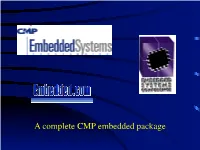

1999 Embedded Systems Programming Subscriber Study

A complete CMP embedded package. 1999 Embedded Systems Programming Subscriber Study (conducted by Wilson Research Group).

Mailed out: 1,500. Returned undeliverable: 45. Base: 1,455. Returned unusable: 17. Returned usable: 410. Total returned: 427. Total preliminary report response rate: 29.3%.

Survey Coverage (160+ questions, market & mind share): Programming Languages & Host Operating Systems; MCUs/Embedded MPUs; DSPs; Memories; Software Protocols/Stacks; Web Products/Tools; In-Circuit Emulators; Logic Analyzers; Oscilloscopes; Device programmers; RTOSes/Kernels; Compilers; Software Debuggers; Software Configuration Management Tools; Single Board Computers; Intellectual Property; FPGAs/CPLDs; HW/SW Co-Design; Embedded Systems Work Environments.

Programming Language Trends (1997-1999 ESP Subscriber Studies; base: 409 in 1997, 345 in 1998, 410 in 1999):

| Language | 1997 | 1998 | 1999 |
|---|---|---|---|
| C | 80.7% | 81.4% | 79.0% |
| Assembly | 70.4% | 70.1% | 61.0% |
| C++ | 35.9% | 39.4% | 46.6% |
| Visual Basic | 13.0% | 16.2% | 14.4% |
| Pascal | 4.2% | 2.6% | 2.0% |
| Ada | 6.4% | 4.9% | 6.1% |
| Java | 6.1% | 7.0% | 9.3% |
| HDL / VHDL | 6.1% | 5.2% | 6.6% |
| Basic | 12.5% | 9.3% | 8.5% |
| Forth | 3.4% | 2.3% | 2.2% |
| eC++ | - | - | 0.7% |

Have you used an object-oriented methodology for your embedded designs in the last 12 months? Yes: 47.3%. Are you considering an object-oriented methodology in the next 12 months? Yes: 69.0%. (Base: 410, 1999 ESP Subscriber Study.)

Which of the following object-oriented programming methodologies have you used for your embedded designs? OMT/UML 44.8%; Booch 31.4%; Shlaer-Mellor 16.5%; SDL 5.7%; ROOM 2.1%; S/ART 1.0%. Base: -

Mqeminue" 4.925

Bonus: 8086 Microprocessor Family Pinout Reference. August 1993, $2.95 (Canada $3.95). THE PRACTICAL MAGAZINE FOR PERSONAL COMPUTERS & MICROCONTROLLERS. Cover topics: New Win & PCB Software; Add Sound Effects To Your Projects; "Key" Prevents Software/Hardware Piracy; How To Copy Internal BASIC-52 Into EPROM; Windows Compatibility And INI Files; Build A Quick And Dirty Data Interface. www.americanradiohistory.com

68HC11 Controller & Languages, $99. Program it in C or Basic or FORTH or Assembly. Low Cost Development Package: Controller + Languages + Manuals on disk included! Package Price Breakthrough! The NMIT-0020 Single Board Computer is perfect for dedicated controller with convenient interfaces for LCD displays and keypad. Intelligent LCD's up to 2 lines by 80 characters and matrix keypads up to 4x5 can be used. The processor is the popular F68HC11 with many features, including SCI and SPI serial channels, 8-bit 8-ch. A/D, 20 available I/O lines, Watch Dog Timer, 1/2K EEPROM and Max-FORTH w/Floating Point Package embedded in 12K internal ROM. -

PCI32 Nios Target Nios Ethernet Development Kit (NEDK) Microtronix Linux Development Kit (LDK) Nios 2.0 Competitive Landscape

Excalibur-Nios Embedded Software Processor Core. Enter a New Realm of Technology… ©2001.

Agenda: Quartus II Limited Edition; PCI32 Nios Target; Nios Ethernet Development Kit (NEDK); Microtronix Linux Development Kit (LDK); Nios 2.0; Competitive Landscape.

Quartus II Limited Edition. Provides device compilation for Nios Development Kit customers. Feature set based on Quartus II Web Edition, with some extra features to support Nios: TCL scripting; LeonardoSpectrum synthesis for Nios core; development kit-specific devices: EP20K200EFC484 (used in NDK), EP20K200EBC652, EP20K100EQC240. See https://go.altera.com/extranet2001/products/literature/mb_2001/mb_411.doc for QII Web edition details. Provided to all Nios customers, starting in September: shipped to all current Nios customers; shipping today with all new Excalibur Nios Development Kits.

Quartus II LE Key Points. Current Quartus II subscribers do not need to install QII LE. Obtaining License Files: Altera sends an email that includes a new license file for QII LE to all current Nios customers (Quartus II v1.1 enabled through October 31, 2001; Quartus II Limited Edition enabled to eternity). The QII LE shipment also includes explicit instructions how to obtain a license online. NOTE: LogicLock is not enabled in QII LE; may affect customers with high performance needs. "Where -

FD-V15N6.Pdf

SILICON COMPOSERS INC. FAST Forth Native-Language Embedded Computers.

Harris RTX 2000 16-bit Forth Chip: 8 or 10 MHz operation and 15 MIPS speed. 1-cycle 16 x 16 = 32-bit multiply. 1-cycle [?]-prioritized interrupts. Two 256-word stack memories. [?]-channel I/O bus & 3 timer/counters.

SC32 32-bit Forth Microprocessor: 8 or 10 MHz operation and 15 MIPS speed. 1-clock cycle instruction execution. Contiguous 16 GB data and 2 GB code space. Stack depths limited only by available memory. Bus request/bus grant lines with on-chip tristate.

SC/FOX PCS (Parallel Coprocessor System): RTX 2000 industrial PGA CPU; 8 & 10 MHz. System speed options: 8 or 10 MHz. 32 KB to 1 MB 0-wait-state static RAM. Full-length PC/XT/AT plug-in ([?]-layer) board.

SC/FOX SBC32 (Single Board Computer32): 32-bit SC32 industrial grade Forth PGA CPU. System speed options: 8 or 10 MHz. 42 KB to 512 KB 0-wait-state static RAM. 100mm x 160mm Eurocard size ([?]-layer) board.

SC/FOX VME SBC (Single Board Computer): RTX 2000 industrial PGA CPU; 8, 10, 12 MHz. Bus Master, System Controller, or Bus Slave. Up to 640 KB 0-wait-state static RAM. 233mm x 160mm 6U size ([?]-layer) board.

SC/FOX PCS32 (Parallel Coprocessor Sys): 32-bit SC32 industrial grade Forth PGA CPU. System speed options: 8 or 10 MHz. 64 KB to 1 MB 0-wait-state static RAM. Full-length PC/XT/AT plug-in ([?]-layer) board.

SC/FOX SBC (Single Board Computer).

SC/FOX CUB (Single Board Computer): RTX 2000 PLCC or 2001A PLCC chip. -

Circuit Cellar, Inc. Binary Technology, Inc

CIRCUIT CELLAR INK, THE COMPUTER APPLICATIONS JOURNAL.

Weaving the Web. I believe it was Charlotte the spider who first published on a web (remember Charlotte's Web?). By weaving words for all to see, a tiny creature communicates to the world. Such is the case with the World Wide Web. The Web gives even the smallest of individuals the capability to publish something for the world to see without the expense of printing and distribution. The growth of the Web over the past year has been remarkable. Only a year ago, the first version of Netscape was released and immediately rocketed to the top of the popularity charts. During that time, I've received dozens of messages asking when we're going to put up a Circuit Cellar Web page. I'm happy to announce that we've done just that. As with most Web pages, this one still needs work and will be continually evolving. Currently available is subscription information, an ftp link to many of the magazine-related files from the most recent issues, our author's guide, next year's upcoming themes and deadlines, results from this year's Design Contest (over a month before you'll see them in print), and more.

Masthead. Founder/Editorial Director: Steve Ciarcia. Editor-in-Chief: Ken Davidson. Senior Technical Editor: Janice Marinelli. Technical Editor: Carole Boster. Engineering Staff: Jeff Bachiochi & Ed Nisley. West Coast Editor: Tom Cantrell. Contributing Editors: Rick Lehrbaum, Russ Reiss. New Products Editor: Harv Weiner. Publisher: Daniel Rodrigues. Publisher's Assistant: Sue Hodge. Circulation Manager: Rose Mansella. Circulation Assistant: Barbara Maleski. Circulation Consultant: Gregory Spitzfaden. Business Manager: Jeannette Walters. Advertising Coordinator: Dan Gorsky. CIRCUIT CELLAR INK, THE COMPUTER APPLICATIONS JOURNAL (ISSN 0696-6965) is published monthly by Circuit Cellar Incorporated, 4 Park Street, -

The Design and Implementation of an I/O Controller for the 386EX Evaluation Board By

The Design and Implementation of an I/O Controller for the 386EX Evaluation Board, by Marcus-Alan Gilbert. Submitted to the Department of Electrical Engineering and Computer Science in Partial Fulfillment of the Requirements for the Degree of Master of Engineering in Electrical Engineering and Computer Science at the Massachusetts Institute of Technology, May 1995. Copyright © 1995, Marcus-Alan Gilbert. All rights reserved. The author hereby grants to M.I.T. permission to reproduce and to distribute copies of this thesis document in whole or in part, and to grant others the right to do so. Author: Department of Electrical Engineering and Computer Science, May 19, 1995. Certified by: Richard D. Thornton, Thesis Supervisor. Accepted by: F.R. Morgenthaler, Chairman, Department Committee on Graduate Theses.

Submitted to the Department of Electrical Engineering and Computer Science, May 18, 1995. ABSTRACT. This report describes an extension of the development system for MIT's Microprocessor Design Lab, course 6.115. The current development system for 6.115 is the 386EX evaluation board. The current development system has been extended by having the 68HC11 microcontroller control peripheral devices. The original intention was to develop such a system around the PowerPC line of processors, however the availability of the 386EX evaluation board, and its adoption into 6.115 led to its being the choice for the extension. The results of this initial development work are the creation of a microprocessor based system that is capable of processing data directly from an analog to digital converter under 68HC11's control. -

Embedded Bluetooth™ Protocol Stack

programming micros, Spring 2002. From Idea to Target. Editorial: Why it's normally not a good idea to pull your own teeth! Tomas Wolf, President.

Most of us wouldn't even dream of trying to pull our own teeth when we have a toothache. And how many of us have done the plumbing in our [...]

Why then is it that many high-ranking managers and really smart engineers develop their own software development tools? It can hardly be because they have a lot of time to kill, nor can it be that money isn't important to them. I don't have the real answer to this question, but I see it happening repeatedly in our market…in big companies with considerable resources and mature development processes as well as in smaller ones with less resources and less mature processes. They're everywhere…companies that believe they need to develop their own dental practice or plumbing business to boot!

[...] an impressive 28%. We managed to complete numerous development projects for our customers, which always resulted in the delivery of either a standardized software development tool or a customized solution for special needs. Perhaps you didn't know that IAR can build the tools you need. We can produce a very cost efficient solution for you by drawing on our long experience, together with a standard product portfolio that lends itself to a virtually unlimited number of combinations. Our motto "From idea to target in half the time" isn't just a promise we continue to fulfill for countless embedded systems developers. -

Real-Time Scheduling Analysis

DOT/FAA/AR-05/27. Real-Time Scheduling Analysis. Office of Aviation Research and Development, Washington, D.C. 20591. November 2005. Final Report. This document is available to the U.S. public through the National Technical Information Service (NTIS), Springfield, Virginia 22161. U.S. Department of Transportation, Federal Aviation Administration.

NOTICE. This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of information exchange. The United States Government assumes no liability for the contents or use thereof. The United States Government does not endorse products or manufacturers. Trade or manufacturer's names appear herein solely because they are considered essential to the objective of this report. This document does not constitute FAA certification policy. Consult your local FAA aircraft certification office as to its use. This report is available at the Federal Aviation Administration William J. Hughes Technical Center's Full-Text Technical Reports page: actlibrary.tc.faa.gov in Adobe Acrobat portable document format (PDF).

Technical Report Documentation Page. 1. Report No.: DOT/FAA/AR-05/27. 2. Government Accession No. 3. Recipient's Catalog No. 4. Title and Subtitle: REAL-TIME SCHEDULING ANALYSIS. 5. Report Date: November 2005. 6. Performing Organization Code. 7. Author(s): Joseph Leung and Hairong Zhao. 8. Performing Organization Report No. 9. Performing Organization Name and Address: Department of Computer Science, New Jersey Institute of Technology, Newark, NJ 07102. 10. Work Unit No. (TRAIS). 11. Contract or Grant No.: NJIT/WOW 01/C/AW/NJIT Amendment 2. 12. Sponsoring Agency Name and Address. 13. Type of Report and Period Covered. U.S.