V9fb: a Remote Framebuffer Infrastructure for Linux

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

UG1046 Ultrafast Embedded Design Methodology Guide

UltraFast Embedded Design Methodology Guide UG1046 (v2.3) April 20, 2018 Revision History The following table shows the revision history for this document. Date Version Revision 04/20/2018 2.3 • Added a note in the Overview section of Chapter 5. • Replaced BFM terminology with VIP across the user guide. 07/27/2017 2.2 • Vivado IDE updates and minor editorial changes. 04/22/2015 2.1 • Added Embedded Design Methodology Checklist. • Added Accessing Documentation and Training. 03/26/2015 2.0 • Added SDSoC Environment. • Added Related Design Hubs. 10/20/2014 1.1 • Removed outdated information. •In System Level Considerations, added information to the following sections: ° Performance ° Clocking and Reset 10/08/2014 1.0 Initial Release of document. UltraFast Embedded Design Methodology Guide Send Feedback 2 UG1046 (v2.3) April 20, 2018 www.xilinx.com Table of Contents Chapter 1: Introduction Embedded Design Methodology Checklist. 9 Accessing Documentation and Training . 10 Chapter 2: System Level Considerations Performance. 13 Power Consumption . 18 Clocking and Reset. 36 Interrupts . 41 Embedded Device Security . 45 Profiling and Partitioning . 51 Chapter 3: Hardware Design Considerations Configuration and Boot Devices . 63 Memory Interfaces . 69 Peripherals . 76 Designing IP Blocks . 94 Hardware Performance Considerations . 102 Dataflow . 108 PL Clocking Methodology . 112 ACP and Cache Coherency. 116 PL High-Performance Port Access. 120 System Management Hardware Assistance. 124 Managing Hardware Reconfiguration . 127 GPs and Direct PL Access from APU . 133 Chapter 4: Software Design Considerations Processor Configuration . 137 OS and RTOS Choices . 142 Libraries and Middleware . 152 Boot Loaders . 156 Software Development Tools . 162 UltraFast Embedded Design Methodology GuideSend Feedback 3 UG1046 (v2.3) April 20, 2018 www.xilinx.com Chapter 5: Hardware Design Flow Overview . -

Indexed Color

Indexed Color A browser may support only a certain number of specific colors, creating a palette from which to choose Figure 3.11 The Netscape color palette 1 QUIZ How many bits are needed to represent this palette? Show your work. 2 How to digitize a picture • Sample it → Represent it as a collection of individual dots called pixels • Quantize it → Represent each pixel as one of 224 possible colors (TrueColor) Resolution = The # of pixels used to represent a picture 3 Digitized Images and Graphics Whole picture Figure 3.12 A digitized picture composed of many individual pixels 4 Digitized Images and Graphics Magnified portion of the picture See the pixels? Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800 Figure 3.12 A digitized picture composed of many individual pixels 5 QUIZ: Images A low-res image has 200 rows and 300 columns of pixels. • What is the resolution? • If the pixels are represented in True-Color, what is the size of the file? • Same question in High-Color 6 Two types of image formats • Raster Graphics = Storage on a pixel-by-pixel basis • Vector Graphics = Storage in vector (i.e. mathematical) form 7 Raster Graphics GIF format • Each image is made up of only 256 colors (indexed color – similar to palette!) • But they can be a different 256 for each image! • Supports animation! Example • Optimal for line art PNG format (“ping” = Portable Network Graphics) Like GIF but achieves greater compression with wider range of color depth No animations 8 Bitmap format Contains the pixel color -

OM-Cube Project

OM-Cube project V. Hiribarren, N. Marchand, N. Talfer [email protected] - [email protected] - [email protected] Abstract. The OM-Cube project is composed of several components like a minimal operating system, a multi- media player, a LCD display and an infra-red controller. They should be chosen to fit the hardware of an em- bedded system. Several other similar projects can provide information on the software that can be chosen. This paper aims to examine the different available tools to build the OM-Multimedia machine. The main purpose is to explore different ways to build an embedded system that fits the hardware and fulfills the project. 1 A Minimal Operating System The operating system is the core of the embedded system, and therefore should be chosen with care. Because of its popu- larity, a Linux based system seems the best choice, but other open systems exist and should be considered. After having elected a system, all unnecessary components may be removed to get a minimal operating system. 1.1 A Linux Operating System Using a Linux kernel has several advantages. As it’s a popular kernel, many drivers and documentation are available. Linux is an open source kernel; therefore it enables anyone to modify its sources and to recompile it. Using Linux in an embedded system requires adapting the kernel to the hardware and to the system needs. A simple method for building a Linux embed- ded system is to create a partition on a development host and to mount it on a temporary mount point. This partition is filled as one goes along and then, the final distribution is put on the target host [Fich02] [LFS]. -

A New Steganographic Method for Palette-Based Images

IS&T's 1999 PICS Conference A New Steganographic Method for Palette-Based Images Jiri Fridrich Center for Intelligent Systems, SUNY Binghamton, Binghamton, New York Abstract messages. The gaps in human visual and audio systems can be used for information hiding. In the case of images, the In this paper, we present a new steganographic technique human eye is relatively insensitive to high frequencies. This for embedding messages in palette-based images, such as fact has been utilized in many steganographic algorithms, GIF files. The new technique embeds one message bit into which modify the least significant bits of gray levels in one pixel (its pointer to the palette). The pixels for message digital images or digital sound tracks. Additional bits of embedding are chosen randomly using a pseudo-random information can also be inserted into coefficients of image number generator seeded with a secret key. For each pixel transforms, such as discrete cosine transform, Fourier at which one message bit is to be embedded, the palette is transform, etc. Transform techniques are typically more searched for closest colors. The closest color with the same robust with respect to common image processing operations parity as the message bit is then used instead of the original and lossy compression. color. This has the advantage that both the overall change The steganographer’s job is to make the secretly hidden due to message embedding and the maximal change in information difficult to detect given the complete colors of pixels is smaller than in methods that perturb the knowledge of the algorithm used to embed the information least significant bit of indices to a luminance-sorted palette, except the secret embedding key.* This so called such as EZ Stego.1 Indeed, numerical experiments indicate Kerckhoff’s principle is the golden rule of cryptography and that the new technique introduces approximately four times is often accepted for steganography as well. -

Understanding Image Formats and When to Use Them

Understanding Image Formats And When to Use Them Are you familiar with the extensions after your images? There are so many image formats that it’s so easy to get confused! File extensions like .jpeg, .bmp, .gif, and more can be seen after an image’s file name. Most of us disregard it, thinking there is no significance regarding these image formats. These are all different and not cross‐ compatible. These image formats have their own pros and cons. They were created for specific, yet different purposes. What’s the difference, and when is each format appropriate to use? Every graphic you see online is an image file. Most everything you see printed on paper, plastic or a t‐shirt came from an image file. These files come in a variety of formats, and each is optimized for a specific use. Using the right type for the right job means your design will come out picture perfect and just how you intended. The wrong format could mean a bad print or a poor web image, a giant download or a missing graphic in an email Most image files fit into one of two general categories—raster files and vector files—and each category has its own specific uses. This breakdown isn’t perfect. For example, certain formats can actually contain elements of both types. But this is a good place to start when thinking about which format to use for your projects. Raster Images Raster images are made up of a set grid of dots called pixels where each pixel is assigned a color. -

Linux Kernal II 9.1 Architecture

Page 1 of 7 Linux Kernal II 9.1 Architecture: The Linux kernel is a Unix-like operating system kernel used by a variety of operating systems based on it, which are usually in the form of Linux distributions. The Linux kernel is a prominent example of free and open source software. Programming language The Linux kernel is written in the version of the C programming language supported by GCC (which has introduced a number of extensions and changes to standard C), together with a number of short sections of code written in the assembly language (in GCC's "AT&T-style" syntax) of the target architecture. Because of the extensions to C it supports, GCC was for a long time the only compiler capable of correctly building the Linux kernel. Compiler compatibility GCC is the default compiler for the Linux kernel source. In 2004, Intel claimed to have modified the kernel so that its C compiler also was capable of compiling it. There was another such reported success in 2009 with a modified 2.6.22 version of the kernel. Since 2010, effort has been underway to build the Linux kernel with Clang, an alternative compiler for the C language; as of 12 April 2014, the official kernel could almost be compiled by Clang. The project dedicated to this effort is named LLVMLinxu after the LLVM compiler infrastructure upon which Clang is built. LLVMLinux does not aim to fork either the Linux kernel or the LLVM, therefore it is a meta-project composed of patches that are eventually submitted to the upstream projects. -

Institutionen För Datavetenskap Department of Computer and Information Science

Institutionen för datavetenskap Department of Computer and Information Science Final thesis Simulation of Set-top box Components on an X86 Architecture by Implementing a Hardware Abstraction Layer by Faruk Emre Sahin Muhammad Salman Khan LITH-IDA-EX—10/050--SE 2010-12-25 Linköpings universitet Linköpings universitet SE-581 83 Linköping, Sweden 581 83 Linköping Linköping University Department of Computer and Information Science Final Thesis Simulation of Set-top box Components on an X86 Architecture by Implementing a Hardware Abstraction Layer by Faruk Emre Sahin Muhammad Salman Khan LITH-IDA-EX—10/050—SE 2010-12-25 Supervisors: Fredrik Hallenberg, Tomas Taleus R&D at Motorola (Linköping) Examiner: Prof. Dr. Christoph Kessler Dept. Of Computer and Information Science at Linköpings universitet Abstract The KreaTV Application Development Kit (ADK) product of Motorola en- ables application developers to create high level applications and browser plugins for the IPSTB system. As a result, customers will reduce develop- ment time, cost and supplier dependency. The main goal of this thesis was to port this platform to a standard Linux PC to make it easy to trace the bugs and debug the code. This work has been done by implementing a hardware abstraction layer(HAL)for Linux Operating System. HAL encapsulates the hardware dependent code and HAL APIs provide an abstraction of underlying architecture to the oper- ating system and to application software. So, the embedded platform can be emulated on a standard Linux PC by implementing a HAL for it. We have successfully built the basic building blocks of HAL with some performance degradation. -

Hdcp Support in Optee

HDCP SUPPORT IN OPTEE PRODUCT PRESENTATION Linaro Multimedia Working Group MICR ADVANCED TECHNOLOGIES • https://www.linaro.org/ SEPTEMBER 2019 Agenda • Quick introduction to HDCP • Secure Video Path overview • Current HDCP control in Linux • Proposal to control HDCP in OPTEE • Questions HDCP OVERVIEW 3 HDCP : High bandwidth Digital Content Protection • A digital copy protection developed by Intel™ to prevent copying of digital and audio video content. Before sending data, the source device shall check the destination device is authorized to received it. If so, the source device encrypts the data, only the destination device can decrypt. - data encryption - prevent non-licensed devices from receiving content • Android and Linux NXP bsp manage HDCP at Linux Level, through libDRM. So nothing prevent a user to disable HDCP protection while secure content is under playback. It is a security holes in the Secure Video Path. • HDCP support currently under development for wayland/Weston: https://gitlab.freedesktop.org/wayland/weston/merge_requests/48 • No Open Source solution exists to manage HDCP in secure mode. • HDCP versions: ▪ HDCP 1.X: Hacked: Master key published (leak/reverse engineering) ▪ HDCP 2.0: Hacked before release ▪ HDCP 2.1: Hacked before release ▪ HDCP 2.2: Not yet hacked 4 ▪ HDCP 2.3: Not yet hacked HDCP control state Machine Content with HDCP protection mandatory no yes Local display Local display yes no yes no Video displayed Video displayed without HDCP Digital Display without HDCP Digital Display encryption encryption yes no yes no It means we have analog display Video displayed Video displayed HDCP supported without HDCP HDCP supported without HDCP encryption encryption yes no yes no Video displayed Video displayed without Widevine/PlayReady To Video not displayed Application to decide if HDCP check current HDCP version Application to display a Warning 5 HDCP encryption message HDCP Unauthorized, encryption to be used >= expected HDCP version Content Disabled.' Error. -

Openbricks Embedded Linux Framework - User Manual I

OpenBricks Embedded Linux Framework - User Manual i OpenBricks Embedded Linux Framework - User Manual OpenBricks Embedded Linux Framework - User Manual ii Contents 1 OpenBricks Introduction 1 1.1 What is it ?......................................................1 1.2 Who is it for ?.....................................................1 1.3 Which hardware is supported ?............................................1 1.4 What does the software offer ?............................................1 1.5 Who’s using it ?....................................................1 2 List of supported features 2 2.1 Key Features.....................................................2 2.2 Applicative Toolkits..................................................2 2.3 Graphic Extensions..................................................2 2.4 Video Extensions...................................................3 2.5 Audio Extensions...................................................3 2.6 Media Players.....................................................3 2.7 Key Audio/Video Profiles...............................................3 2.8 Networking Features.................................................3 2.9 Supported Filesystems................................................4 2.10 Toolchain Features..................................................4 3 OpenBricks Supported Platforms 5 3.1 Supported Hardware Architectures..........................................5 3.2 Available Platforms..................................................5 3.3 Certified Platforms..................................................7 -

A Simplified Graphics System Based on Direct Rendering Manager System

J. lnf. Commun. Converg. Eng. 16(2): 125-129, Jun. 2018 Regular paper A Simplified Graphics System Based on Direct Rendering Manager System Nakhoon Baek* , Member, KIICE School of Computer Science and Engineering, Kyungpook National University, Daegu 41566, Korea Abstract In the field of computer graphics, rendering speed is one of the most important factors. Contemporary rendering is performed using 3D graphics systems with windowing system support. Since typical graphics systems, including OpenGL and the DirectX library, focus on the variety of graphics rendering features, the rendering process itself consists of many complicated operations. In contrast, early computer systems used direct manipulation of computer graphics hardware, and achieved simple and efficient graphics handling operations. We suggest an alternative method of accelerated 2D and 3D graphics output, based on directly accessing modern GPU hardware using the direct rendering manager (DRM) system. On the basis of this DRM support, we exchange the graphics instructions and graphics data directly, and achieve better performance than full 3D graphics systems. We present a prototype system for providing a set of simple 2D and 3D graphics primitives. Experimental results and their screen shots are included. Index Terms: Direct rendering manager, Efficient handling, Graphics acceleration, Light-weight implementation, Prototype system I. INTRODUCTION Rendering speed is one of the most important factors for 3D graphics application programs. Typical present-day graph- After graphics output devices became publicly available, a ics programs need to be able to handle very large quantities large number of graphics applications were developed for a of graphics data. The larger the data size, and the more sen- broad spectrum of uses including computer animations, com- sitive to the rendering speed, the better the speed-up that can puter games, user experiences, and human-computer inter- be achieved, even for minor aspects of the graphics pipeline. -

Amiga Graphics Reference Card 2Nd Edition

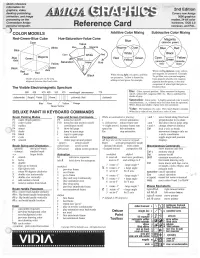

Quick reference information for graphics, video, 2nd Edition desktop publishing, Covers new Amiga animation, and image 3000 graphics processing on the modes, 24-bit color Commodore Amiga hardware, DOS 2.0 personal computer. Reference Card overscan, and PAL. ) COLOR MODELS Additive Color Mixing Subtractive Color Mixing Red-Green-Blue Cube Hue-Saturation-Value Cone Cyan Value White Blue Blue] Axis Yellow Black ~reen Axis When mixi'hgpigments, cyan, yellow, Red When mixing light, red, green, and blue and magenta are primaries. Example: are primaries. Yell ow is formed by To get blue, mix cyan and magenta. Shades of gray are on the long Cyan pigment absorbs red, magenta adding red and green, for example. diagonal between black and white. pigment absorbs green, so the only component of white light that gets re The Visible Electromagnetic Spectrum flected is blue. 380 420 470 495 535 575 wavelength (nanometers) 770 Hue: Color; spectral position. Often measured in degrees; red=0°, yellow=60°, magenta=300°, etc. Hue is undefined for shades of gray. (ultraviolet) Purple Violet yellowish Red (infrared) Saturation: Color purity. A highly-saturated color is nearly Blue Orange monochromatic, i.e., contains only one color from the spectrum. White, black, and shades of gray have zero saturation. Value: The darkness of a color. How much black it contain's. DELUXE PAINT Ill KEYBOARD COMMANDS White has a value of one, black has a value of zero. Brush Painting Modes Page and Screen Commands While an animation is playing: ; ana, move brush along fixed axis -

IT Acronyms.Docx

List of computing and IT abbreviations /.—Slashdot 1GL—First-Generation Programming Language 1NF—First Normal Form 10B2—10BASE-2 10B5—10BASE-5 10B-F—10BASE-F 10B-FB—10BASE-FB 10B-FL—10BASE-FL 10B-FP—10BASE-FP 10B-T—10BASE-T 100B-FX—100BASE-FX 100B-T—100BASE-T 100B-TX—100BASE-TX 100BVG—100BASE-VG 286—Intel 80286 processor 2B1Q—2 Binary 1 Quaternary 2GL—Second-Generation Programming Language 2NF—Second Normal Form 3GL—Third-Generation Programming Language 3NF—Third Normal Form 386—Intel 80386 processor 1 486—Intel 80486 processor 4B5BLF—4 Byte 5 Byte Local Fiber 4GL—Fourth-Generation Programming Language 4NF—Fourth Normal Form 5GL—Fifth-Generation Programming Language 5NF—Fifth Normal Form 6NF—Sixth Normal Form 8B10BLF—8 Byte 10 Byte Local Fiber A AAT—Average Access Time AA—Anti-Aliasing AAA—Authentication Authorization, Accounting AABB—Axis Aligned Bounding Box AAC—Advanced Audio Coding AAL—ATM Adaptation Layer AALC—ATM Adaptation Layer Connection AARP—AppleTalk Address Resolution Protocol ABCL—Actor-Based Concurrent Language ABI—Application Binary Interface ABM—Asynchronous Balanced Mode ABR—Area Border Router ABR—Auto Baud-Rate detection ABR—Available Bitrate 2 ABR—Average Bitrate AC—Acoustic Coupler AC—Alternating Current ACD—Automatic Call Distributor ACE—Advanced Computing Environment ACF NCP—Advanced Communications Function—Network Control Program ACID—Atomicity Consistency Isolation Durability ACK—ACKnowledgement ACK—Amsterdam Compiler Kit ACL—Access Control List ACL—Active Current