AN5072: Introduction to Embedded Graphics – Application Note

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


GLSL 4.50 Spec

The OpenGL® Shading Language Language Version: 4.50 Document Revision: 7 09-May-2017 Editor: John Kessenich, Google Version 1.1 Authors: John Kessenich, Dave Baldwin, Randi Rost Copyright (c) 2008-2017 The Khronos Group Inc. All Rights Reserved. This specification is protected by copyright laws and contains material proprietary to the Khronos Group, Inc. It or any components may not be reproduced, republished, distributed, transmitted, displayed, broadcast, or otherwise exploited in any manner without the express prior written permission of Khronos Group. You may use this specification for implementing the functionality therein, without altering or removing any trademark, copyright or other notice from the specification, but the receipt or possession of this specification does not convey any rights to reproduce, disclose, or distribute its contents, or to manufacture, use, or sell anything that it may describe, in whole or in part. Khronos Group grants express permission to any current Promoter, Contributor or Adopter member of Khronos to copy and redistribute UNMODIFIED versions of this specification in any fashion, provided that NO CHARGE is made for the specification and the latest available update of the specification for any version of the API is used whenever possible. Such distributed specification may be reformatted AS LONG AS the contents of the specification are not changed in any way. The specification may be incorporated into a product that is sold as long as such product includes significant independent work developed by the seller. A link to the current version of this specification on the Khronos Group website should be included whenever possible with specification distributions. -

PowerVR SGX Series5XT IP Core Family

PowerVR SGX Series5XT IP Core Family The PowerVR™ SGX Series5XT Graphics Processing Unit (GPU) IP core family is a series of highly efficient graphics acceleration IP cores that meet the multimedia requirements of the next generation of consumer, communications and computing applications. PowerVR SGX Series5XT architecture is fully scalable for a wide range of area and performance requirements, enabling it to target markets from low cost feature-rich mobile multimedia products to very high performance consoles and computing devices. The family incorporates the second-generation Universal Scalable Shader Engine (USSE2™), with a feature set that exceeds the requirements of OpenGL 2.0 and Microsoft Shader Model 3, enabling 2D, 3D and general purpose (GP-GPU) processing in a single core.

Features
• Most comprehensive IP core family and roadmap in the industry
• USSE2 delivers twice the peak floating point and instruction throughput of Series5 USSE
• YUV and colour space accelerators for improved performance
• Upgraded PowerVR Series5XT shader-driven tile-based deferred rendering (TBDR) architecture
• Multi-processor options enable scalability to higher performance
• Support for all industry standard mobile and desktop graphics APIs and operating systems
• Fully backwards compatible with PowerVR MBX and SGX Series5

PowerVR SGX Family
• Series5XT: SGX543MP1-16, SGX544MP1-16, SGX554MP1-16
• Series5: SGX520, SGX530, SGX531, SGX535, SGX540, SGX545

Benefits
• Extensive product line supports all area/performance requirements

Multi-standard API and OS OpenGL -

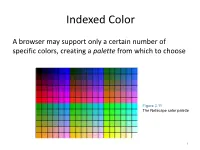

Indexed Color

Indexed Color A browser may support only a certain number of specific colors, creating a palette from which to choose. Figure 3.11 The Netscape color palette. QUIZ: How many bits are needed to represent this palette? Show your work. How to digitize a picture • Sample it → Represent it as a collection of individual dots called pixels • Quantize it → Represent each pixel as one of 2^24 possible colors (TrueColor). Resolution = The # of pixels used to represent a picture. Digitized Images and Graphics: Whole picture. Figure 3.12 A digitized picture composed of many individual pixels. Digitized Images and Graphics: Magnified portion of the picture. See the pixels? Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800. QUIZ: Images. A low-res image has 200 rows and 300 columns of pixels. • What is the resolution? • If the pixels are represented in True-Color, what is the size of the file? • Same question in High-Color. Two types of image formats • Raster Graphics = Storage on a pixel-by-pixel basis • Vector Graphics = Storage in vector (i.e. mathematical) form. Raster Graphics: GIF format • Each image is made up of only 256 colors (indexed color – similar to palette!) • But they can be a different 256 for each image! • Supports animation! Example • Optimal for line art. PNG format (“ping” = Portable Network Graphics): Like GIF but achieves greater compression with wider range of color depth. No animations. Bitmap format: Contains the pixel color -
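The quiz questions in this excerpt come down to two small calculations, sketched here in Python. The numbers are assumptions, not values from the slides: the Netscape palette is taken to have 216 entries (the commonly cited web-safe count), True-Color is taken as 24 bits per pixel and High-Color as 16 bits per pixel, and the file size counts raw pixel data only, with no header or compression. The function names are illustrative.

```python
def bits_per_index(palette_size: int) -> int:
    """Smallest number of bits that can give every palette entry its own index."""
    if palette_size < 1:
        raise ValueError("palette must contain at least one color")
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 2, without float rounding.
    return max(1, (palette_size - 1).bit_length())


def raw_image_bytes(rows: int, cols: int, bits_per_pixel: int) -> int:
    """Size of the uncompressed pixel data in bytes, rounded up to a whole byte."""
    total_bits = rows * cols * bits_per_pixel
    return (total_bits + 7) // 8


if __name__ == "__main__":
    # Assumed palette size: 216 entries.
    print("bits per palette index:", bits_per_index(216))
    # The quiz image: 200 rows by 300 columns.
    print("pixels:", 200 * 300)
    print("True-Color bytes (24 bpp, assumed):", raw_image_bytes(200, 300, 24))
    print("High-Color bytes (16 bpp, assumed):", raw_image_bytes(200, 300, 16))
```

Under these assumptions a 216-entry palette needs 8 bits per index, and the 200 × 300 image holds 60,000 pixels, which is 180,000 bytes at 24 bits per pixel and 120,000 bytes at 16 bits per pixel.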

In re Graphics Processing Units Antitrust Litigation: Third Consolidated and Amended Class Action Complaint

Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 1 of 34

BOIES, SCHILLER & FLEXNER LLP, WILLIAM A. ISAACSON (pro hac vice), 5301 Wisconsin Ave. NW, Suite 800, Washington, D.C. 20015. Telephone: (202) 237-2727. Facsimile: (202) 237-6131. Email: [email protected]

BOIES, SCHILLER & FLEXNER LLP, JOHN F. COVE, JR. (CA Bar No. 212213), DAVID W. SHAPIRO (CA Bar No. 219265), KEVIN J. BARRY (CA Bar No. 229748), 1999 Harrison St., Suite 900, Oakland, CA 94612. Telephone: (510) 874-1000. Facsimile: (510) 874-1460. Email: [email protected] [email protected] [email protected]

BOIES, SCHILLER & FLEXNER LLP, PHILIP J. IOVIENO (pro hac vice), ANNE M. NARDACCI (pro hac vice), 10 North Pearl Street, 4th Floor, Albany, NY 12207. Telephone: (518) 434-0600. Facsimile: (518) 434-0665. Email: [email protected] [email protected]

Attorneys for Plaintiff Jordan Walker. Interim Class Counsel for Direct Purchaser Plaintiffs.

UNITED STATES DISTRICT COURT, NORTHERN DISTRICT OF CALIFORNIA. IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION. Case No.: M:07-CV-01826-WHA. MDL No. 1826. This Document Relates to: ALL DIRECT PURCHASER ACTIONS. THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1. JURY TRIAL DEMANDED.

THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT BY DIRECT PURCHASERS M:07-CV-01826-WHA

Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows: NATURE OF THE CASE 1. -

Driver Riva Tnt2 64

Driver riva tnt2 64 click here to download The following products are supported by the drivers: TNT2 TNT2 Pro TNT2 Ultra TNT2 Model 64 (M64) TNT2 Model 64 (M64) Pro Vanta Vanta LT GeForce. The NVIDIA TNT2™ was the first chipset to offer a bit frame buffer for better quality visuals at higher resolutions, bit color for TNT2 M64 Memory Speed. NVIDIA no longer provides hardware or software support for the NVIDIA Riva TNT GPU. The last Forceware unified display driver which. version now. NVIDIA RIVA TNT2 Model 64/Model 64 Pro is the first family of high performance. Drivers > Video & Graphic Cards. Feedback. NVIDIA RIVA TNT2 Model 64/Model 64 Pro: The first chipset to offer a bit frame buffer for better quality visuals Subcategory, Video Drivers. Update your computer's drivers using DriverMax, the free driver update tool - Display Adapters - NVIDIA - NVIDIA RIVA TNT2 Model 64/Model 64 Pro Computer. (In Windows 7 RC1 there was the build in TNT2 drivers). http://kemovitra. www.doorway.ru Use the links on this page to download the latest version of NVIDIA RIVA TNT2 Model 64/Model 64 Pro (Microsoft Corporation) drivers. All drivers available for. NVIDIA RIVA TNT2 Model 64/Model 64 Pro - Driver Download. Updating your drivers with Driver Alert can help your computer in a number of ways. From adding. Nvidia RIVA TNT2 M64 specs and specifications. Price comparisons for the Nvidia RIVA TNT2 M64 and also where to download RIVA TNT2 M64 drivers. Windows 7 and Windows Vista both fail to recognize the Nvidia Riva TNT2 ( Model64/Model 64 Pro) which means you are restricted to a low. -

(GPU) Computing

Graphics Processing Unit (GPU) computing This section describes the graphics processing unit (GPU) computing feature of OptiSystem. Note: The GPU computing feature is only configurable with OptiSystem Version 11 (or higher). What is GPU computing? GPU computing or GPGPU takes advantage of a computer’s graphics processing card to augment the speed of general purpose scientific and engineering computing tasks. Compute Unified Device Architecture (CUDA) implementation for OptiSystem: NVIDIA revolutionized the GPGPU and accelerated computing when it introduced a new parallel computing architecture: Compute Unified Device Architecture (CUDA). CUDA is both a hardware and software architecture for issuing and managing computations within the GPU, thus allowing it to operate as a generic data-parallel computing device. CUDA allows the programmer to take advantage of the parallel computing power of an NVIDIA graphics card to perform general purpose computations. OptiSystem CUDA implementation: The OptiSystem model for GPU computing involves using a central processing unit (CPU) and GPU together in a heterogeneous co-processing computing model. The sequential part of the application runs on the CPU and the computationally-intensive part is accelerated by the GPU. In the OptiSystem GPU programming model, the application has been modified to map the compute-intensive kernels to the GPU. The remainder of the application remains within the CPU. CUDA parallel computing architecture: The NVIDIA CUDA parallel computing architecture is enabled on GeForce®, Quadro®, and Tesla™ products. Whereas GeForce and Quadro are designed for consumer graphics and professional visualization respectively, the Tesla product family is designed ground-up for parallel computing and offers exclusive computing features, and is the recommended choice for the OptiSystem GPU. -

OpenGL ES / OpenVG / OpenCL / WebGL / etc.

KNU-3DC : Education and Training Plan 24 July 2013 Hwanyong LEE, Ph.D. Principal Engineer & Industry Cooperation Prof., KNU 3DC [email protected] KNU-3DC Introduction • Kyungpook National Univ. 3D Convergence Technology Center . Korea Government Funded Org. 23 staffs (10 Ph.D) . Research / Supporting Industry . Training and Education • Training and Education . Dassault Training Center . KIKS(Korea Institute of Khronos Study) • Constructing New Center Building . HUGE ! 7 Floors ! (2014E) 2 KNU-3DC KIKS • KIKS(Korea Institute of Khronos Study) . Leading Role in Korea for Training and Education of Khronos Standard – Collaboration with Khronos Group . Open Lecture + Develop Coursework for • OpenGL / OpenGL ES / OpenVG / OpenCL / WebGL / etc. • Opening / Sponsoring Workshop and Forum . Participating Khronos Activities • Contributor Member (Plan, now Processing) • Active participation of Khronos WG . Other Standard Activities • Make Khronos Standard into Korea National Standard. (WebGL) • W3C, ISO/IEC JTC1, IEEE 3333.X . Research and Consulting for Industry and Academy 3 KIKS Course • KIKS Course will be categorized into . Basic / Advanced / Packaged . Special Course - For instance Overview / Optimization / Consulting • Developing Courseware (for Khronos API) . OpenGL ES Basic & Advanced . OpenGL Basic & Advanced . OpenVG . OpenCL Basic & Advanced . WebGL . Etc. – new standards (Red - Started / Orange – Start at 4Q2013 / Dark Blue – Start at 2014) 4 Different View of Courses University Computer Game Image Parallel View Graphics Develop Processing … Processing JavaScript Khronos Canvas View … iPhone Android Company Web-App Parallel App App Application Develop View Develop Develop … Develop Course Development – Packaging Example • Android Application with OpenGL ES . General Android API’s – JNI, Java etc. OpenGL ES • iPhone App. Development with OpenGL ES . General iPhone APP API – cocoa, Objective-C, etc. -

A New Steganographic Method for Palette-Based Images

IS&T's 1999 PICS Conference. A New Steganographic Method for Palette-Based Images. Jiri Fridrich, Center for Intelligent Systems, SUNY Binghamton, Binghamton, New York.

Abstract: In this paper, we present a new steganographic technique for embedding messages in palette-based images, such as GIF files. The new technique embeds one message bit into one pixel (its pointer to the palette). The pixels for message embedding are chosen randomly using a pseudo-random number generator seeded with a secret key. For each pixel at which one message bit is to be embedded, the palette is searched for closest colors. The closest color with the same parity as the message bit is then used instead of the original color. This has the advantage that both the overall change due to message embedding and the maximal change in colors of pixels is smaller than in methods that perturb the least significant bit of indices to a luminance-sorted palette, such as EZ Stego.1 Indeed, numerical experiments indicate that the new technique introduces approximately four times …

… messages. The gaps in human visual and audio systems can be used for information hiding. In the case of images, the human eye is relatively insensitive to high frequencies. This fact has been utilized in many steganographic algorithms, which modify the least significant bits of gray levels in digital images or digital sound tracks. Additional bits of information can also be inserted into coefficients of image transforms, such as discrete cosine transform, Fourier transform, etc. Transform techniques are typically more robust with respect to common image processing operations and lossy compression. The steganographer’s job is to make the secretly hidden information difficult to detect given the complete knowledge of the algorithm used to embed the information except the secret embedding key.* This so called Kerckhoff’s principle is the golden rule of cryptography and is often accepted for steganography as well. -
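The embedding steps described in this abstract can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the paper's implementation. The excerpt does not define the parity of a color, so the sketch takes it to be (R + G + B) mod 2. It also measures closeness by squared Euclidean distance in RGB and uses Python's `random.Random` in place of the unspecified keyed generator. All function names are hypothetical.

```python
import random


def parity(color):
    """Assumed parity of an RGB color: (R + G + B) mod 2."""
    r, g, b = color
    return (r + g + b) % 2


def distance_sq(a, b):
    """Squared Euclidean distance between two RGB triples (assumed metric)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def embed_bit(palette, index, bit):
    """Return the palette index closest to palette[index] whose parity equals bit."""
    original = palette[index]
    candidates = [i for i, c in enumerate(palette) if parity(c) == bit]
    if not candidates:
        raise ValueError("palette has no color with the required parity")
    # If the original color already has the right parity, its distance is 0.
    return min(candidates, key=lambda i: distance_sq(palette[i], original))


def embed(palette, pixels, bits, key):
    """Embed one bit per pixel at key-selected positions; returns new pixel indices."""
    rng = random.Random(key)  # stand-in for the secret-key PRNG
    positions = rng.sample(range(len(pixels)), len(bits))
    stego = list(pixels)
    for pos, bit in zip(positions, bits):
        stego[pos] = embed_bit(palette, stego[pos], bit)
    return stego


def extract(palette, pixels, n_bits, key):
    """Recover the bits by re-deriving the positions and reading color parities."""
    rng = random.Random(key)
    positions = rng.sample(range(len(pixels)), n_bits)
    return [parity(palette[pixels[p]]) for p in positions]


if __name__ == "__main__":
    palette = [(0, 0, 0), (1, 0, 0), (10, 10, 10), (200, 200, 201)]
    pixels = [0, 1, 2, 3, 0, 1, 2, 3]
    message = [1, 0, 1, 1, 0]
    stego = embed(palette, pixels, message, key=42)
    print(extract(palette, stego, len(message), key=42) == message)
```

The receiver needs only the key and the message length, because the same seed reproduces the same pixel positions and the parity of each stored color yields the bit.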

Understanding Image Formats and When to Use Them

Understanding Image Formats And When to Use Them. Are you familiar with the extensions after your images? There are so many image formats that it’s easy to get confused! File extensions like .jpeg, .bmp, .gif, and more can be seen after an image’s file name. Most of us disregard them, thinking these image formats have no significance. They are all different and not cross-compatible. These image formats have their own pros and cons. They were created for specific, yet different purposes. What’s the difference, and when is each format appropriate to use? Every graphic you see online is an image file. Most everything you see printed on paper, plastic or a t-shirt came from an image file. These files come in a variety of formats, and each is optimized for a specific use. Using the right type for the right job means your design will come out picture perfect and just how you intended. The wrong format could mean a bad print or a poor web image, a giant download or a missing graphic in an email. Most image files fit into one of two general categories—raster files and vector files—and each category has its own specific uses. This breakdown isn’t perfect. For example, certain formats can actually contain elements of both types. But this is a good place to start when thinking about which format to use for your projects. Raster Images: Raster images are made up of a set grid of dots called pixels where each pixel is assigned a color. -

A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis

Iowa State University Capstones, Theses and Dissertations, Creative Components, Summer 2019. A configurable general purpose graphics processing unit for power, performance, and area analysis. Garrett Lies. Follow this and additional works at: https://lib.dr.iastate.edu/creativecomponents Part of the Digital Circuits Commons. Recommended Citation: Lies, Garrett, "A configurable general purpose graphics processing unit for power, performance, and area analysis" (2019). Creative Components. 329. https://lib.dr.iastate.edu/creativecomponents/329 This Creative Component is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Creative Components by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis by Garrett Joseph Lies. A Creative Component submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE. Major: Computer Engineering (Very-Large Scale Integration). Program of Study Committee: Joseph Zambreno, Major Professor. The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this dissertation/thesis. The Graduate College will ensure this dissertation/thesis is globally accessible and will not permit alterations after a degree is conferred. Iowa State University, Ames, Iowa, 2019. Copyright © Garrett Joseph Lies, 2019. All rights reserved. TABLE OF CONTENTS: LIST OF TABLES, LIST OF FIGURES, ACKNOWLEDGMENTS, ABSTRACT, CHAPTER 1. -

OpenVG 1.1 API Quick Reference Card - Page 1

OpenVG 1.1 API Quick Reference Card - Page 1. OpenVG® is an API for hardware-accelerated two-dimensional vector and raster graphics. It provides a device-independent and vendor-neutral interface for sophisticated 2D graphical applications, while allowing device manufacturers to provide hardware acceleration where appropriate.
• [n.n.n] refers to sections and tables in the OpenVG 1.1 API specification available at www.khronos.org/openvg/
• Default values are shown in blue.

Errors [4.1]: Error codes and their numerical values are defined by the VGErrorCode enumeration and can be obtained with the function: VGErrorCode vgGetError(void). The possible values are as follows:
• VG_NO_ERROR 0
• VG_BAD_HANDLE_ERROR 0x1000
• VG_ILLEGAL_ARGUMENT_ERROR 0x1001
• VG_OUT_OF_MEMORY_ERROR 0x1002
• VG_PATH_CAPABILITY_ERROR 0x1003
• VG_UNSUPPORTED_IMAGE_FORMAT_ERROR 0x1004
• VG_UNSUPPORTED_PATH_FORMAT_ERROR 0x1005
• VG_IMAGE_IN_USE_ERROR 0x1006
• VG_NO_CONTEXT_ERROR 0x1007

Data Types & Number Representations. Primitive Data Types [3.2] (openvg.h type, khronos_type.h type, range):
• VGbyte, khronos_int8_t, [-128, 127]
• VGubyte, khronos_uint8_t, [0, 255]
• VGshort, khronos_int16_t, [-32768, 32767]

Colors [3.4]: Colors in OpenVG other than those stored in image pixels are represented as non-premultiplied sRGBA color values. Image pixel color and alpha values lie in the range [0,1] unless otherwise noted. The sRGB color space defines values $R'_{sRGB}$, $G'_{sRGB}$, $B'_{sRGB}$ in terms of the linear lRGB primaries.
• Convert from lRGB to sRGB (gamma mapping) (1): $R'_{sRGB} = \gamma(R)$, $G'_{sRGB} = \gamma(G)$
• Convert from sRGB to lRGB (inverse gamma mapping) (2): $R = \gamma^{-1}(R'_{sRGB})$, $G = \gamma^{-1}(G'_{sRGB})$

Color Space Definitions: The linear lRGB color space is defined in terms of the standard CIE XYZ color space, following ITU Rec. -
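The excerpt names the gamma mapping γ and its inverse but breaks off before defining them. As a hedged sketch, the Python below uses the commonly published sRGB transfer function from IEC 61966-2-1 (linear segment with slope 12.92, exponent 2.4). Those constants are an assumption here, and the ones printed in the OpenVG specification may differ slightly. The function names are illustrative and not part of the OpenVG API.

```python
def gamma(x: float) -> float:
    """Map a linear lRGB channel value in [0, 1] to sRGB (assumed IEC 61966-2-1 curve)."""
    if x <= 0.0031308:
        return 12.92 * x
    return 1.055 * x ** (1.0 / 2.4) - 0.055


def gamma_inv(x: float) -> float:
    """Map an sRGB channel value in [0, 1] back to linear lRGB (inverse of gamma)."""
    if x <= 0.04045:
        return x / 12.92
    return ((x + 0.055) / 1.055) ** 2.4


def lrgb_to_srgb(rgb):
    """Apply the gamma mapping to each channel independently."""
    return tuple(gamma(c) for c in rgb)


def srgb_to_lrgb(rgb):
    """Apply the inverse gamma mapping to each channel independently."""
    return tuple(gamma_inv(c) for c in rgb)


if __name__ == "__main__":
    linear = (0.0, 0.2, 1.0)
    encoded = lrgb_to_srgb(linear)
    print(encoded)
    print(srgb_to_lrgb(encoded))
```

The mapping is applied per channel to R, G and B, and alpha is left out because the excerpt defines the conversion for the color channels only.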

Hardware Implementation of a Tessellation Accelerator for the OpenVG Standard

IEICE Electronics Express, Vol.7, No.6, 440–446. Hardware implementation of a tessellation accelerator for the OpenVG standard. Seung Hun Kim, Yunho Oh, Karam Park, and Won Woo Ro a). School of Electrical and Electronic Engineering, Yonsei University, 134 Shinchon-Dong, Seodaemun-Gu, SEOUL, 120–749, KOREA. a) [email protected] Abstract: The OpenVG standard has been introduced as an efficient vector graphics API for embedded systems. There have been several OpenVG implementations that are based on the software rendering of image. However, the software rendering needs more execution time and power consumption than hardware accelerated rendering. For the efficient hardware implementation, we merge eight pipeline stages in the original specification to four pipeline stages. The first hardware acceleration stage is the tessellation part which is one of the pipeline stages that calculates the edge of vector graphics. In this paper, we provide an efficient hardware design for the tessellation stage and claim this would eventually reduce the execution time and hardware complexity. Keywords: OpenVG, vector graphics, tessellation, hardware accelerator. Classification: Electron devices, circuits, and systems. References [1] K. Pulli, “New APIs for mobile graphics,” Proc. SPIE - The International Society for Optical Engineering, vol. 6074, pp. 1–13, 2006. [2] Khronos Group Inc., “OpenVG specification Version 1.0.1” [Online] http://www.khronos.org/openvg/ [3] S.-Y. Lee, S. Kim, J. Chung, and B.-U. Choi, “Salable Vector Graphics (OpenVG) for Creating Animation Image in Embedded Systems,” Lecture Notes in Computer Science, vol. 4693, pp. 99–108, 2007. [4] G. He, B. Bai, Z. Pan, and X.