Fellowship Program

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Mala Radhakrishnan an Interview by Mindy Levine

http://www.nesacs.org 90th Anniversary Issue of The NUCLEUS, November 2011, Vol. XC, No. 3. Monthly Meeting: 2011 James Flack Norris Award to Prof. Peter Mahaffy; Meeting at Astra-Zeneca, Waltham. Mala Radhakrishnan: An Interview by Mindy Levine. ACS Governance: A Summary from the Fall ACS Meeting. Arno Heyn Award: 2011 Award to Harvey C. Steiner. The Nucleus, November 2011. The Northeastern Section of the American Chemical Society, Inc. Office: Anna Singer, 12 Corcoran Road, Burlington, MA 01803 (Voice or FAX) 781-272-1966. e-mail: secretary(at)nesacs.org NESACS Homepage: http://www.NESACS.org Contents: Mala Radhakrishnan, An interview by Mindy Levine (4); Monthly Meeting, 2011 James Flack Norris Award to Prof. Peter Mahaffy, Meeting at Astra-Zeneca, Waltham (5); ACS Awards to NESACS Members, To be presented at the 243rd ACS National Meeting, San Diego, CA, March 27, 2012 (6); Report from Denver, Revamping the MCAT Exams. By Morton Z. Hoffman (6); Historical Notes (7). Officers 2011 Chair: Patrick M. Gordon, 1 Brae Circle, Woburn, MA 01801 [email protected] Chair-Elect: Ruth Tanner, Olney Hall 415B, University of Mass Lowell, Lowell, MA 01854 Ruth_Tanner(at)uml.edu 978-934-3662 Immediate Past Chair: John McKew John.McKew(at)gmail.com Secretary: Virginia C. -

Audio Production Techniques (206) Unit 1

Audio Production Techniques (206) Unit 1: Characteristics of Audio Medium. Digital audio is technology that can be used to record, store, generate, manipulate, and reproduce sound using audio signals that have been encoded in digital form. Following significant advances in digital audio technology during the 1970s, it gradually replaced analog audio technology in many areas of sound production, sound recording (tape systems were replaced with digital recording systems), sound engineering and telecommunications in the 1990s and 2000s. A microphone converts sound (a singer's voice or the sound of an instrument playing) to an analog electrical signal, then an analog-to-digital converter (ADC), typically using pulse-code modulation, converts the analog signal into a digital signal. This digital signal can then be recorded, edited and modified using digital audio tools. When the sound engineer wishes to listen to the recording on headphones or loudspeakers (or when a consumer wishes to listen to a digital sound file of a song), a digital-to-analog converter performs the reverse process, converting a digital signal back into an analog signal, which analog circuits amplify and send to a loudspeaker. Digital audio systems may include compression, storage, processing and transmission components. Conversion to a digital format allows convenient manipulation, storage, transmission and retrieval of an audio signal. Unlike analog audio, in which making copies of a recording leads to degradation of the signal quality, when using digital audio, an infinite number of copies can be made without any degradation of signal quality.
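The conversion chain described above (analog signal, pulse-code modulation, digital copy, conversion back to analog) can be illustrated with a minimal Python sketch. It is not taken from the course material: the function names `pcm_encode`, `pcm_decode` and `sine_wave`, the 8-bit depth, the 8000 Hz sample rate and the 440 Hz test tone are all assumptions chosen for illustration, and a real converter involves filtering and hardware details that are left out here.

```python
import math


def sine_wave(freq_hz, sample_rate_hz, n_samples):
    """Stand-in for the microphone's analog signal: a sampled sine tone in [-1, 1]."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]


def pcm_encode(samples, bits=16):
    """Quantize values in [-1.0, 1.0] to signed integer PCM codes (the ADC step)."""
    max_code = 2 ** (bits - 1) - 1
    codes = []
    for x in samples:
        x = max(-1.0, min(1.0, x))          # clip anything outside full scale
        codes.append(round(x * max_code))   # nearest integer code
    return codes


def pcm_decode(codes, bits=16):
    """Map integer PCM codes back to values in [-1.0, 1.0] (the DAC step)."""
    max_code = 2 ** (bits - 1) - 1
    return [c / max_code for c in codes]


# Hypothetical example values: a 440 Hz tone sampled at 8000 Hz, 8-bit depth.
analog = sine_wave(440.0, 8000, 64)
digital = pcm_encode(analog, bits=8)

# Copying the digital codes any number of times reproduces them exactly.
copy_of_copy = list(list(digital))

# Decoding recovers the signal up to the quantization step of the chosen depth.
restored = pcm_decode(digital, bits=8)
max_error = max(abs(a - r) for a, r in zip(analog, restored))
```

The only loss in this sketch happens once, at quantization; the copies themselves are bit-identical, which is the property the passage contrasts with analog duplication.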
Development and expansion of radio network in India: FM broadcasting began on 23 July 1977 in Chennai, then Madras, and was expanded during the 1990s, nearly 50 years after it mushroomed in the US.[1] In the mid-nineties, when India first experimented with private FM broadcasts, the small tourist destination of Goa was the fifth place in this country of one billion where private players got FM slots. -

Australian Biochemist the Magazine of the Australian Society for Biochemistry and Molecular Biology Inc

ISSN 1443-0193 Australian Biochemist The Magazine of the Australian Society for Biochemistry and Molecular Biology Inc. Volume 47 AUGUST 2016 No.2 SHOWCASE ON RESEARCH Protein Misfolding and Proteostasis THIS ISSUE INCLUDES Showcase on Research Regular Departments A Short History of Amyloid SDS (Students) Page Molecular Chaperones: The Cutting Edge Guardians of the Proteome Off the Beaten Track When Proteostasis Goes Bad: Intellectual Property Protein Aggregation in the Cell Our Sustaining Members Extracellular Chaperones and Forthcoming Meetings Proteostasis Directory INSIDE ComBio2016 International Speaker Profiles Vol 47 No 2 August 2016 AUSTRALIAN BIOCHEMIST Page 1 ‘OSE’ Fill-in Puzzle We have another competition for the readers of the Australian Biochemist. All correct entries received by the Editor (email [email protected]) before 3 October 2016 will enter the draw to receive a gift voucher. With thanks to Rebecca Lew. The purpOSE is to choOSE from thOSE words listed and transpOSE them into the grid. So, clOSE your door, repOSE in a chair, and diagnOSE the answers – you don’t want to lOSE! 6 letters 8 letters ALDOSE FRUCTOSE FUCOSE FURANOSE HEXOSE PYRANOSE KETOSE RIBOSE 9 letters XYLOSE CELLULOSE GALACTOSE 7 letters RAFFINOSE AMYLOSE TREHALOSE GLUCOSE LACTOSE 11 letters MALTOSE DEOXYRIBOSE PENTOSE Australian Biochemist – Editor Chu Kong Liew, Editorial Officer Liana Friedman © 2016 Australian Society for Biochemistry and Molecular Biology Inc. All rights reserved. Page 2 AUSTRALIAN BIOCHEMIST Vol 47 No 2 August 2016 SHOWCASE ON RESEARCH EDITORIAL Molecular Origami: the Importance of Managing Protein Folding In my humble opinion, the most important biological transcription, RNA processing and transport, translation, molecule is the protein. -

Postmaster & the Merton Record 2020

Postmaster & The Merton Record 2020. Merton College, Oxford OX1 4JD. Telephone +44 (0)1865 276310. www.merton.ox.ac.uk. Edited by Emily Bruce, Philippa Logan, Milos Martinov, Professor Irene Tracey (1985). Front cover image: Wick Willett and Emma Ball (both 2017) in Fellows' Garden, Michaelmas 2019. Photograph by John Cairns. Additional images (unless credited): 4: © Ian Wallman; 13: Maria Salaru (St Antony’s, 2011); 22: Elina Cotterill; 24, 60, 128, 236: © John Cairns; 40: Jessica Voicu (St Anne's, 2015); 44: © William Campbell-Gibson; 58, 117, 118, 120, 130: Huw James. Contents. College News: From the Warden (4); JCR News (8); MCR News (10); Merton Sport (12): Women’s Rowing, Men’s Rowing, Football, Squash, Hockey, Rugby, Sports Overview, Blues & Haigh Ties; Clubs & Societies (24): History Society, Roger Bacon Society, Neave Society, Christian Union, Bodley Club, Mathematics Society, Quiz Society, Art Society, Music Society, Poetry Society, Halsbury Society, 1980 Society, Tinbergen Society, Chalcenterics; Interdisciplinary Groups (40): Ockham Lectures, History of the Book -

The Eagle 2020

The Eagle 2020 Photo: Emma Dellar, Lead Clinical Nurse, living on-site during the lockdown Credit: (2017) VOLUME 102 THE EAGLE 2020 Published in the United Kingdom in 2020 by St John’s College, Cambridge First published in the United Kingdom in 1858 by St John’s College, Cambridge Cover photo credit: Jo Tynan Designed by Out of the Bleu (07759 919440; www.outofthebleu.co.uk) Printed by CDP (01517 247000; www.cdp.co.uk) The Eagle is published annually by St John’s College, Cambridge, and is provided free of charge to members of the College and other interested parties. Photo: Komorebi Credit: Paul Everest Contents. Welcome: Contributors (6); Editorial (7); Message from the Vice-Master (8). Articles: Research at the Centre for Misfolding Diseases (14); A word for Wordsworth (18); Dyslexia, poetry, rhythm and the brain (21); Portrait of a Lady (24); The Cambridge Carthaginians (27); Innovation and entrepreneurship at St John’s (31); The academic -

1991 Edition / Bdition 1991

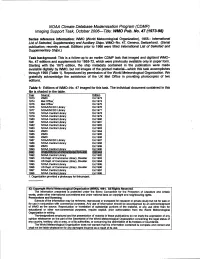

NOAA Climate Database Modernization Program (CDMP) Imaging Support Task, October 200[?]. Title: WMO Pub. No. 47 (1973-98). Series reference information: WMO (World Meteorological Organization), 1955-: International List of Selected, Supplementary and Auxiliary Ships. WMO-No. 47, Geneva, Switzerland. (Serial publication; recently annual. Editions prior to 1966 were titled International List of Selected and Supplementary Ships.) Task background: This is a follow-up to an earlier CDMP task that imaged and digitized WMO-No. 47 editions and supplements for 1955-72, which were previously available only in paper form. Starting with the 1973 edition, the ship metadata contained in the publication were made available digitally by WMO, but not images of the printed material, which this task accomplishes through 1998 (Table 1). Reproduced by permission of the World Meteorological Organization. We gratefully acknowledge the assistance of the UK Met Office in providing photocopies of two editions.
Table 1: Editions of WMO-No. 47 imaged for this task. The individual document contained in this file is shaded in the table.
Year  Source  Edition
1973  WMO  Ed. 1973
1974  Met Office  Ed. 1974
1975  Met Office  Ed. 1975
1976  NOAA/NCDC Library  Ed. 1976
1977  NOAA/NCDC Library  Ed. 1977
1978  NOAA Central Library  Ed. 1978
1979  NOAA Central Library  Ed. 1979
1980  NOAA Central Library  Ed. 1980
1981  NOAA Central Library  Ed. 1981
1982  NOAA Central Library  Ed. 1982
1983  NOAA Central Library  Ed. 1983
1984  WMO  Ed. 1984
1985  WMO  Ed. 1985
1986  WMO  Ed. 1986
1987  NOAA/NCDC Library  Ed. 1987
1988  NOAA Central Library  Ed. 1988
1989  WMO  Ed. 1989
1990  NOAA Central Library  Ed. 1990
1991  [shaded row; text illegible]
1992  NOAA Central Library  Ed. -

Communicating Biochemistry: Meetings and Events

© The Authors. Volume compilation © 2011 Portland Press Limited Chapter 3 Communicating Biochemistry: Meetings and Events Ian Dransfield and Brian Beechey Scientific conferences organized by the Biochemical Society represent a key facet of activity throughout the Society’s history and remain central to the present mission of promoting the advancement of molecular biosciences. Importantly, scientific conferences are an important means of communicating research findings, establishing collaborations and, critically, a means of cementing the community of biochemical scientists together. However, in the past 25 years, we have seen major changes to the way in which science is communicated and also in the way that scientists interact and establish collaborations. For example, the ability to show videos, “fly through” molecular structures or show time-lapse or real-time movies of molecular events within cells has had a very positive impact on conveying difficult concepts in presentations. However, increased pressures on researchers to obtain/maintain funding can mean that there is a general reluctance to present novel, unpublished data. In addition, the development of email and electronic access to scientific journals has dramatically altered the potential for communication and accessibility of information, perhaps reducing the necessity of attending meetings to make new contacts and to hear exciting new science. The Biochemical Society has responded to these challenges by progressive development of the meetings format to better match the -

Meeting 150 6-May-2010

British Biophysical Society – Building Better Science http://www.britishbiophysics.org.uk/ Registered Charity No. 25474 Minutes of the 150th Committee Meeting of the British Biophysical Society held on Thursday 6th May 2010 at Imperial College, Chemistry Dept, room 234 MINUTES Present: Anthony Watts (Chair), John Seddon, Mike Ferenczi, Guy Grant, Ehmke Pohl, Gordon Roberts, Julea Butt, Liz Hounsell, Jeremy Craven, Mark Wallace, Paul O’Shea, Dave Klenerman, Matthew Hicks, Dave Sheehan. In Attendance: Althea Hartley-Forbes 1. Apologies for Absence Apologies were received from, Rob Cooke, David Hornby, Mark Szczelkun, Sabine Flitsch, Chris Cooper, Mark Leake. 2. Minutes of the Previous Meeting Tony Watts opened the meeting explaining he will be taking over as chair from Liz Hounsell. The Minutes of the 149th meeting were approved. Amendments were made as follows: Minute 149.1: Paul O’Shea not noted in apologies for absence. Chairman’s Report Mark Leake is the 2010 BBS Young Investigator Award winner. He will give a talk at the BBS anniversary conference in July, where the Award will be presented. BBS Newsletter – Tony thanked Matthew for all his hard work. Society of Biology launch – This was held at Fishmonger’s Hall, and Liz and John attended. Paul Nurse and David Attenborough gave the opening talks. The BBS Committee are yet to confirm whether to continue BBS membership. Jeremy confirmed that the 2010 subscription of £420 has been paid. 3. Matters Arising: No new matters. 4. Chairman’s Report (Liz Hounsell / Tony Watts) Liz gave a summary of her last Chairman’s report. Julea Butt will be attending Faraday Discussion 148 in Nottingham in early July. -

Radio Bygones Indexes

INDEX MUSEUM PIECES Broadcast Receivers 78 C2-C4 Radio Bygones, Issues Nos 73-78 Command Sets 73 C1-C4 ARTICLES & FEATURES Crystal Sets from Bill Journeaux’s Collection 74 C4 K. P. Barnsdale’s ZC-1 77 C2 AERONAUTICAL ISSUE PAGE Keith Bentley Collection 75 C2-C4 The Command Set by Trevor Sanderson Michael O’Beirne’s MI TF1417 77 C4 Part 1 73 4 National Wireless Museum, Isle of Wight 74 C3 Part 2 74 28 Replica Lancaster at Pitstone Green Museum 76 C1-C4 Letter 75 32 Russian Volna-K 74 C1-C2 Firing up a WWII Night Fighter Radar AI Mk.4 Tony Thompson’s Ekco PB505 77 C3 by Norman Groom 76 6 NEWS & EVENTS AMATEUR AirWaves (On the Air Ltd) 76 2 Amateur Radio in the 1920s 73 27 Amberley Working Museum 74 2 Maintaining the HRO by Gerald Stancey 76 27 Antique Radio Classified 75 3 77 3 BOOKS 78 3 Tickling the Crystal 75 15 ARI Surplus Team 73 3 Classic Book Review by Richard Q. Marris BBC History Lives! (Website) 76 2 Modern Practical Radio and Television 76 10 BVWS and 405-Alive Merge! 74 3 CHiDE Conservation 74 3 CIRCUITRY Club Antique Radio Magazine 73 2 Invention of the Superhet by Ian Poole 76 22 HMS Collingwood Museum 74 3 Mallory ‘Inductuner’ by Michael O’Beirne 76 28 Confucius He Say Loudly! 74 2 Duxford Radio Society 78 3 CLANDESTINE Eddystone User Group Lighthouse 74 3 Clandestine Radio in the Pacific by Peter Lankshear 73 16 77 3 Spying Mystery by Ben Nock 74 18 78 3 Letter 75 32 Felix Crystalised (BVWS) 77 2 Talking to Mosquitoes by Brian Cannon 77 10 Hallo Hallo 75 3 Letter 78 38 77 3 Jackson Capacitors 76 2 COMMENT Medium Wave Circle -

Novel Data Transmission Wireless Based Technology for Frontier Applications R

Novel Data Transmission wireless based technology for Frontier Applications R. Brenner With recent material from colleagues at Dept. of Physics and Astronomy, Uppsala University and Physikalisches Institut, University of Heidelberg Richard Brenner – Uppsala University 1/(54) INFIERI, Paris July 22, 2014 OUTLINE Motivation (personal context) Short historical background Wireless technology with mm-waves Application in trackers (HEP) Application in non-HEP detectors Summary and outlook (MY) MOTIVATION FOR WIRELESS DATA TRANSFER IN PARTICLE PHYSICS Topology Physics events propagate from the collision point radially outwards in - CMS ATLAS Physics events are triggered in RoI that are conical Example: CMS Crystal Calorimeter is tiled to match regions radial from the interaction point in and Event topology The first trigger decision in the LHC detectors is done within 3 μs Fast signal transfer Fast extraction of trigger/physics objects Efficient to partition detector in topological regions (Region-of-Interest) Combination of objects from several sub-detectors Silicon tracking detectors Readout ALICE Axial tracker readout resulting in long paths, Long latency etc. CMS Silicon tracking detectors are built for convenience with an axial central part (Barrel) with disks in forward-backward direction. Several drawbacks: Short radiation length because of massive services in region between Barrel and Disks Long data path Not segmented in ROI Pile-up and data rates at HL-LHC current ~2018 ~2023 H → ττ → μμνν The only sub-detector currently not used for fast trigger are the tracking detectors. -

NOBEL MOLECULAR Frontiers

NOBEL WORKSHOP & MOLECULAR FRONTIERS SYMPOSIUM Nobel Workshop & Molecular Frontiers Symposium organized by: An Amazing Week at Chalmers May 4th-8th 2015 RunAn Conference Hall, Chalmers University of Technology Chalmersplatsen 1, Gothenburg, Sweden Welcome The Nobel Workshop and Molecular Frontiers Symposium in Gothenburg are spanning over widely distant horizons of the molecular paradigm. From addressing intriguing questions of life itself, how it once began and how molecules like cogwheels work together in the complex machinery of the cell – to various practical applications of molecules in novel materials and in energy research; from how biology is exploiting its molecules for driving the various processes of life, to how insight into the fundamentals of photophysics and photochemistry of molecules may give us clues about solar energy and tools by which we may tame it for the benefit of all of us, and our environment. Science is sometimes artificially divided into “fundamental” and “applied” but these terms are irrelevant because research is judged to be groundbreaking by the consequences it may have. Any groundbreaking fundamental result has sooner or later consequences in applications, and the limits are often only drawn by our imagination. Science is very much a matter of communication: we not only learn from each other (facts, ideas and concepts), we also need interactions for inspiration and as testing ground for our ideas. A successful scientific communication (publication or lecture) always requires -

How Hong Kong People Use Hong Kong Disneyland

Lingnan University Digital Commons @ Lingnan University Theses & Dissertations Department of Cultural Studies 2007 Remade in Hong Kong : how Hong Kong people use Hong Kong Disneyland Wing Yee, Kimburley CHOI Follow this and additional works at: https://commons.ln.edu.hk/cs_etd Part of the Race, Ethnicity and Post-Colonial Studies Commons, and the Sociology of Culture Commons Recommended Citation Choi, W. Y. K. (2007). Remade in Hong Kong: How Hong Kong people use Hong Kong Disneyland (Doctor's thesis, Lingnan University, Hong Kong). Retrieved from http://dx.doi.org/10.14793/cs_etd.6 This Thesis is brought to you for free and open access by the Department of Cultural Studies at Digital Commons @ Lingnan University. It has been accepted for inclusion in Theses & Dissertations by an authorized administrator of Digital Commons @ Lingnan University. Terms of Use The copyright of this thesis is owned by its author. Any reproduction, adaptation, distribution or dissemination of this thesis without express authorization is strictly prohibited. All rights reserved. REMADE IN HONG KONG HOW HONG KONG PEOPLE USE HONG KONG DISNEYLAND CHOI WING YEE KIMBURLEY PHD LINGNAN UNIVERSITY 2007 REMADE IN HONG KONG HOW HONG KONG PEOPLE USE HONG KONG DISNEYLAND by CHOI Wing Yee Kimburley A thesis submitted in partial fulfillment of the requirements for the Degree of Doctor of Philosophy in Arts (Cultural Studies) Lingnan University 2007 ABSTRACT Remade in Hong Kong How Hong Kong People Use Hong Kong Disneyland by CHOI Wing Yee Kimburley Doctor of Philosophy Recent studies of globalization provide contrasting views of the cultural and sociopolitical effects of such major corporations as Disney as they invest transnationally and circulate their offerings around the world.