Beyond QWERTY: Augmenting Touch Screen Keyboards with Multi-Touch Gestures for Non-Alphanumeric Input

Recommended publications

The Backspace

spumante e rosato* (choose 2) per la tavola glera NV lunetta prosecco, veneto straw, baked apple, peach 11 43 farm greens :: giardiniera, red onion, pecorino romano lambrusco NV medici ermete, emilia romagna black currant, violet, graphite 10 39 lamb & pork meatballs :: stewed tomato, bread crumb, asiago, mint NV l’onesta, emilia romagna fresh strawberry, violet, orange zest 11 43 baked ricotta :: lemon, poached tomato, olive oil * lambrusco whipped lardo bruschetta :: herbs, citrus segment * aglianico 2015 feudi di san gregorio ‘ros‘aura’, irpinia, campania fresh strawberry, rose petal 12 47 shishito peppers :: melon, basil roasted corn :: ricotta, pesto bianco bombino bianco 2016 poderi dal nespoli “pagadebit”, romagna gooseberry, apple, hawthorn blossom 11 43 antipasti (choose 3) pinot grigio 2015 ronchi di pietro, friuli hazelnut, white peach, limestone 48 sweet potato :: cippolini onion, mushroom, rosemary friulano 2014 villa chiopris, friuli grave straw, white flowers, almond 10 39 braised greens :: golden raisins, pecan, red pepper falanghina 2014 terredora, campagna lemon, quince, pear 13 51 :: tomato, basil, balsamic house-made mozzarella chardonnay 2014 tenute del cabreo ‘la pietra’, chianti classico peach preserves, salted popcorn, vanilla 85 roasted beets :: celery, lemon zest, pepperoncini moscato 2015 oddera cascina di fiori, asti tangerine, peach, sage 12 47 the backspace marinated olives :: lemon, rosemary, oregano green beans :: pancetta, garlic, lemon family-style menu rosso $20 per guest pizze (choose 2) pergola rosso -

Orthographies in Grammar Books

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 30 July 2018 doi:10.20944/preprints201807.0565.v1 Tomislav Stojanov, [email protected], [email protected] Institute of Croatian Language and Linguistic Republike Austrije 16, 10.000 Zagreb, Croatia Orthographies in Grammar Books – Antiquity and Humanism Summary This paper researches the as yet unstudied topic of orthographic content in antique, medieval, and Renaissance grammar books in European languages, as part of a wider research of the origin of orthographic standards in European languages. As a central place for teachings about language, grammar books contained orthographic instructions from the very beginning, and such practice continued also in later periods. Understanding the function, content, and orthographic forms in the past provides for a better description of the nature of the orthographic standard in the present. The evolution of grammatographic practice clearly shows the continuity of development of orthographic content from a constituent of grammar studies through the littera unit gradually to an independent unit, then into annexed orthographic sections, and later into separate orthographic manuals. 5 antique, 22 Latin, and 17 vernacular grammars were analyzed, describing 19 European languages. The research methodology is based on distinguishing orthographic content in the narrower sense (grapheme to meaning) from the broader sense (grapheme to phoneme). In this way, the function of orthographic description was established separately from the study of spelling. As for the traditional description of orthographic content in the broader sense in old grammar books, it is shown that orthographic content can also be studied within the grammatographic framework of a specific period, similar to the description of morphology or syntax. -

1st Rule: FANBOYS and Compound Sentences FANBOYS Is a Mnemonic Device, Which Stands for the Coordinating Conjunctions: For, And, Nor, But, Or, Yet, and So

1st Rule: FANBOYS and Compound Sentences FANBOYS is a mnemonic device that stands for the coordinating conjunctions: For, And, Nor, But, Or, Yet, and So. These words, when used to connect two independent clauses (two complete thoughts), must be preceded by a comma. A sentence is a complete thought, consisting of a Subject and a Verb. Coordinating conjunctions should not be confused with conjunctive adverbs, such as However, Therefore, and Moreover, or with subordinating conjunctions, such as Because. 2nd Rule: Introductory Bits When using an introductory word, phrase, or clause to begin a sentence, it is important to place a comma between the introduction and the main sentence. The introduction is not a complete thought on its own; it simply introduces the main clause. This lets the reader know that the “meat” of the sentence is what follows the comma. You should be able to remove the part that comes before the comma and still have a complete thought. Examples: Generally, John is opposed to overt acts of affection. However, Lucy inspires him to be kind. If the hamster is launched fifty feet, there is too much pressure in the cannon. Although the previous sentence has little to do with John and Lucy, it is a good example of how to use commas with introductory clauses. 3rd Rule: Separating Items in a Series Commas belong between each item in a list. However, in a series of three items, the comma between the second item and the conjunction (generally and or or) is optional, whereas in a list of four items, the comma is necessary. -

Programmer Guide: Advanced Data Formatting (ADF)

Advanced Data Formatting (ADF) Programmer Guide, 72E-69680-07, Revision A, June 2019. No part of this publication may be reproduced or used in any form, or by any electrical or mechanical means, without permission in writing from Zebra. This includes electronic or mechanical means, such as photocopying, recording, or information storage and retrieval systems. The material in this manual is subject to change without notice. The software is provided strictly on an “as is” basis. All software, including firmware, furnished to the user is on a licensed basis. Zebra grants to the user a non-transferable and non-exclusive license to use each software or firmware program delivered hereunder (licensed program). Except as noted below, such license may not be assigned, sublicensed, or otherwise transferred by the user without prior written consent of Zebra. No right to copy a licensed program in whole or in part is granted, except as permitted under copyright law. The user shall not modify, merge, or incorporate any form or portion of a licensed program with other program material, create a derivative work from a licensed program, or use a licensed program in a network without written permission from Zebra. The user agrees to maintain Zebra’s copyright notice on the licensed programs delivered hereunder, and to include the same on any authorized copies it makes, in whole or in part. The user agrees not to decompile, disassemble, decode, or reverse engineer any licensed program delivered to the user or any portion thereof. -

KEYBOARD SHORTCUTS (Windows)

KEYBOARD SHORTCUTS (Windows) Note: For Mac users, please substitute the Command key for the Ctrl key. This substitution will work for the majority of commands.
General Commands, Navigation: Windows key + D: Desktop to foreground; Context menu: Right click; Alt + underlined letter: Menu drop down, Action selection; Alt + Tab: Toggle between open applications; Alt, F + X or Alt + F4: Exit application; Alt, Spacebar + X: Maximize window; Alt, Spacebar + N: Minimize window; Ctrl + W: Closes window; F2: Renames a selected file or folder.
Open Programs: To open programs from START menu: Create a program shortcut and drop it into START menu. To open programs/files on Desktop: Select first letter, and then press Enter to open.
Dialog Boxes: Enter: Selects highlighted button; Tab: Selects next button; Arrow keys: Selects next (>) or previous button (<); Shift + Tab: Selects previous button.
Microsoft Word, Formatting: Ctrl + P: Print; Ctrl + S: Save; Ctrl + Z: Undo; Ctrl + Y: Redo; Ctrl + B: Make text bold; Ctrl + I: Italicize; Ctrl + U: Underline; Ctrl + C: Copy; Ctrl + V: Paste; Ctrl + X: Copy + delete; Shift + F3: Change case of letters; Ctrl + Shift + >: Increase font size; Ctrl + Shift + <: Decrease font size.
Highlight Text: Shift + Arrow keys: Selects one letter at a time; Shift + Ctrl + Arrow keys: Selects one word at a time; Shift + End or Home: Selects lines of text.
Change or resize the font: Ctrl + Shift + >: Increase the font size; Ctrl + Shift + < -

How to Enter Foreign Language Characters on Computers

How to Enter Foreign Language Characters on Computers Introduction Current word processors and operating systems provide a large number of methods for writing special characters such as accented letters used in foreign languages. Unfortunately, it is not always obvious just how to enter such characters. Moreover, even when one knows a method of typing an accented letter, there may be a much simpler method for doing the same thing. This note may help you find the most convenient method for typing such characters. The choice of method will largely depend on how frequently you have to type in foreign languages. 1 The “ALT Key” Method This is the most common method of entering special characters. It always works, regardless of what program you are using. On both PCs and Macs, you can write foreign characters in any application by combining the ALT key (the key next to the space bar) with some alphabetic characters (on the Mac) or numbers (on PCs), provided you type numbers on the numeric keypad, rather than using the numbers at the top of the keyboard. To do that, of course, also requires your NumLock Key to be turned on, which it normally will be. For example, on the Mac, ALT + n generates “ñ”. On the PC, ALT + (number pad) 164 or ALT + (number pad) 0241 generates “ñ”. A list of three- and four-digit PC codes for some common foreign languages appears at the end of this note. 2 The “Insert Symbol” Method Most menus in word processors and other applications offer access to a window displaying all the printable characters in a particular character set. -
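
The PC example in this excerpt (164 and 0241 both producing “ñ”) can be checked with a short Python sketch. The mapping rule used here is an assumption that the excerpt does not state: codes typed without a leading zero are looked up in the OEM code page (taken to be 437, as on a US English system), and codes with a leading zero in Windows-1252. The function name `alt_code_to_char` is hypothetical.

```python
def alt_code_to_char(code: str) -> str:
    """Return the character a Windows ALT + numeric-keypad code would produce.

    Assumption (not from the excerpt): a leading zero selects Windows-1252,
    otherwise the OEM code page 437 is used.
    """
    value = int(code)
    if not 0 <= value <= 255:
        raise ValueError("ALT codes in this sketch must be between 0 and 255")
    encoding = "cp1252" if code.startswith("0") else "cp437"
    # Decode the single byte in the chosen code page.
    return bytes([value]).decode(encoding)


if __name__ == "__main__":
    for code in ("164", "0241"):
        print(f"ALT + {code} -> {alt_code_to_char(code)}")
```

The sketch only covers printable letters such as “ñ”. A few byte values are undefined in Python's `cp1252` codec and raise `UnicodeDecodeError`, and codes below 32 decode to control characters rather than the symbols Windows displays.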

Chapter 1 - Using the Command-Line Interface

CHAPTER 1 Using the Command-Line Interface (Cisco Wireless LAN Controller Command Reference, OL-19843-02) The command-line interface (CLI) is a line-oriented user interface that provides commands for configuring, managing, and monitoring the Cisco wireless LAN controller. This chapter contains the following topics: • CLI Command Keyboard Shortcuts • Using the Interactive Help Feature
CLI Command Keyboard Shortcuts Table 1-1 lists CLI keyboard shortcuts to help you enter and edit command lines on the controller.

Table 1-1 CLI Command Keyboard Shortcuts

| Action | Description | Keyboard Shortcut |
| --- | --- | --- |
| Change | The word at the cursor to lowercase. | Esc l |
| Change | The word at the cursor to uppercase. | Esc u |
| Delete | A character to the left of the cursor. | Ctrl-h, Delete, or Backspace |
| Delete | All characters from the cursor to the beginning of the line. | Ctrl-u |
| Delete | All characters from the cursor to the end of the line. | Ctrl-k |
| Delete | All characters from the cursor to the end of the word. | Esc d |
| Delete | The word to the left of the cursor. | Ctrl-w or Esc Backspace |
| Display MORE output | Exit from MORE output. | q, Q, or Ctrl-C |
| Display MORE output | Next additional screen. The default is one screen. To display more than one screen, enter a number before pressing the Spacebar key. | Spacebar |
| Display MORE output | Next line. The default is one line. To display more than one line, enter the number before pressing the Enter key. | Enter |
| Enter an Enter or Return key character. | | Ctrl-m |
| Expand the command or abbreviation. - | | |

International Language Environments Guide

International Language Environments Guide Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 806–6642–10 May, 2002 Copyright 2002 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, AnswerBook, AnswerBook2, Java, XView, ToolTalk, Solstice AdminTools, SunVideo and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. SunOS, Solaris, X11, SPARC, UNIX, PostScript, OpenWindows, AnswerBook, SunExpress, SPARCprinter, JumpStart, Xlib The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. -

List of Approved Special Characters

List of Approved Special Characters The following list represents the Graduate Division's approved character list for display of dissertation titles in the Hooding Booklet. Please note these characters will not display when your dissertation is published on ProQuest's site. To insert a special character, hold the ALT key on your keyboard and enter the corresponding code. This is only for entering a special character in your title or your name. The abstract section has different requirements. See abstract for more details.

| Special Character | Alt+ | Description |
| --- | --- | --- |
| (space) | 0032 | Space |
| ! | 0033 | Exclamation mark |
| " | 0034 | Double quotes (or speech marks) |
| # | 0035 | Number |
| $ | 0036 | Dollar |
| % | 0037 | Percent sign |
| & | 0038 | Ampersand |
| ' | 0039 | Single quote |
| ( | 0040 | Open parenthesis (or open bracket) |
| ) | 0041 | Close parenthesis (or close bracket) |
| * | 0042 | Asterisk |
| + | 0043 | Plus |
| , | 0044 | Comma |
| - | 0045 | Hyphen |
| . | 0046 | Period, dot or full stop |
| / | 0047 | Slash or divide |
| 0 | 0048 | Zero |
| 1 | 0049 | One |
| 2 | 0050 | Two |
| 3 | 0051 | Three |
| 4 | 0052 | Four |
| 5 | 0053 | Five |
| 6 | 0054 | Six |
| 7 | 0055 | Seven |
| 8 | 0056 | Eight |
| 9 | 0057 | Nine |
| : | 0058 | Colon |
| ; | 0059 | Semicolon |
| < | 0060 | Less than (or open angled bracket) |
| = | 0061 | Equals |
| > | 0062 | Greater than (or close angled bracket) |
| ? | 0063 | Question mark |
| @ | 0064 | At symbol |
| A | 0065 | Uppercase A |
| B | 0066 | Uppercase B |
| C | 0067 | Uppercase C |
| D | 0068 | Uppercase D |
| E | 0069 | Uppercase E |
| F | 0070 | Uppercase F |
| G | 0071 | Uppercase G |
| H | 0072 | Uppercase H |
| I | 0073 | Uppercase I |
| J | 0074 | Uppercase J |
| K | 0075 | Uppercase K |
| L | 0076 | Uppercase L |
| M | 0077 | Uppercase M |
| N | 0078 | Uppercase N |
| O | 0079 | Uppercase O |
| P | 0080 | Uppercase - |

Top Ten Tips for Effective Punctuation in Legal Writing

TIPS FOR EFFECTIVE PUNCTUATION IN LEGAL WRITING* © 2005 The Writing Center at GULC. All Rights Reserved. Punctuation can be either your friend or your enemy. A typical reader will seldom notice good punctuation (though some readers do appreciate truly excellent punctuation). However, problematic punctuation will stand out to your reader and ultimately damage your credibility as a writer. The tips below are intended to help you reap the benefits of sophisticated punctuation while avoiding common pitfalls. But remember, if a sentence presents a particularly thorny punctuation problem, you may want to consider rephrasing for greater clarity. This handout addresses the following topics:
THE COMMA (,)
PUNCTUATING QUOTATIONS
THE ELLIPSIS (. . .)
THE APOSTROPHE (’)
THE HYPHEN (-)
THE DASH (—)
THE SEMICOLON (;) -

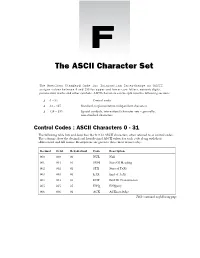

The ASCII Character Set

The ASCII Character Set The American Standard Code for Information Interchange or ASCII assigns values between 0 and 255 for upper and lower case letters, numeric digits, punctuation marks and other symbols. ASCII characters can be split into the following sections:
❑ 0 – 31 Control codes
❑ 32 – 127 Standard, implementation-independent characters
❑ 128 – 255 Special symbols, international character sets – generally, non-standard characters.
Control Codes: ASCII Characters 0 - 31 The following table lists and describes the first 32 ASCII characters, often referred to as control codes. The columns show the decimal, octal, and hexadecimal ASCII values for each code along with their abbreviated and full names. Descriptions are given to those most in use today.

| Decimal | Octal | Hexadecimal | Code | Description |
| --- | --- | --- | --- | --- |
| 000 | 000 | 00 | NUL | Null |
| 001 | 001 | 01 | SOH | Start Of Heading |
| 002 | 002 | 02 | STX | Start of TeXt |
| 003 | 003 | 03 | ETX | End of TeXt |
| 004 | 004 | 04 | EOT | End Of Transmission |
| 005 | 005 | 05 | ENQ | ENQuiry |
| 006 | 006 | 06 | ACK | ACKnowledge |
| 007 | 007 | 07 | BEL | BELl. Caused teletype machines to ring a bell. Causes a beep in many common terminals and terminal emulation programs. |
| 008 | 010 | 08 | BS | BackSpace. Moves the cursor backwards (left) one space. |
| 009 | 011 | 09 | HT | Horizontal Tab. Moves the cursor right to the next tab stop. The spacing of tab stops is dependent on the output device, but is often either 8 or 10 characters wide. |
| 010 | 012 | 0A | LF | Line Feed. Moves the cursor to a new line. On Unix systems, moves to a new line AND all the way to the left. - |
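
The decimal, octal, and hexadecimal columns in this excerpt are three spellings of the same number, which a few lines of Python can reproduce. This is a minimal sketch: the dictionary holds only the codes visible in the excerpt, and the names `CONTROL_CODES` and `ascii_row` are hypothetical.

```python
# Abbreviation and name for the control codes that appear in the excerpt.
CONTROL_CODES = {
    0: ("NUL", "Null"),
    1: ("SOH", "Start Of Heading"),
    2: ("STX", "Start of TeXt"),
    3: ("ETX", "End of TeXt"),
    4: ("EOT", "End Of Transmission"),
    5: ("ENQ", "ENQuiry"),
    6: ("ACK", "ACKnowledge"),
    7: ("BEL", "BELl"),
    8: ("BS", "BackSpace"),
    9: ("HT", "Horizontal Tab"),
    10: ("LF", "Line Feed"),
}


def ascii_row(code: int) -> str:
    """Format one table row: 3-digit decimal, 3-digit octal, 2-digit hex."""
    abbreviation, name = CONTROL_CODES[code]
    return f"{code:03d} {code:03o} {code:02X} {abbreviation} {name}"


if __name__ == "__main__":
    for code in sorted(CONTROL_CODES):
        print(ascii_row(code))
```

For example, `ascii_row(8)` gives `008 010 08 BS BackSpace`, matching the BackSpace row of the table.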

Character Input/Output for Multics J

TO: MSPM Distribution FROM: J. H. Saltzer SUBJ: BC.2.00 DATE: 04/14/67 The introduction to character I/O, BC.2.00, has been updated and revised to include suggestions which have come from many sources. The primary change is that the order of erase editing and escape processing is reversed. MULTICS SYSTEM-PROGRAMMERS' MANUAL SECTION BC.2.00 PAGE 1 Published: 04/14/67 (Supersedes: BC.2.00, 01/02/66) Identification Introduction: Character Input/Output for Multics J. H. Saltzer, F. J. Corbató, J. F. Ossanna Purpose The following subsections of this section set forth standards and conventions for character input, output, and storage within Multics. The subsections are divided as follows: BC.2.00 Introduction: Character Input/Output for Multics. BC.2.01 ASCII character set. BC.2.02 The canonical form. BC.2.03 Erase and Kill conventions. BC.2.04 Escape conventions. BC.2.05 Requirements for DIM specifications Character Set and Escapes All character input and output on Multics is done in terms of a single standard character set, the revised ASCII set. All of the graphics of the ASCII set are acceptable in the interface between the Multics I/O system and the user. Therefore, one character (the left slant) has been reserved by the I/O system as an escape character to allow input and output of missing characters on devices which do not have the full ASCII graphic complement. (To minimize the visual impact of escape sequences for commonly used characters, certain overstrike combinations are also reserved to represent these characters.) Certain of the ASCII controls (e.g., "New Line" or "Backspace") are given specific meanings by the I/O system and are functionally performed wherever possible on output, or else they are printed with a graphic escape.