Black Hat and DEF CON

Recommended publications

Porting Darwin to the MV88F6281: ARMing the SnowLeopard

Porting Darwin to the MV88F6281: ARMing the SnowLeopard. Tristan Schaap (1269011). Apple Inc., Platform Technologies Group. Delft University of Technology, Dept. of Computer Science. Committee: Ir. B.R. Sodoyer, Dr. K. van der Meer.

Contents: Preface; Introduction; Summary; Building a new platform; Booting iBoot; Building the kernelcache; Booting the kernel; THUMBs down; Conclusion; Future Work; Glossary; References; Appendix A; Appendix B (Skills, Process, Reflection); Appendix C (Plan of Approach).

Preface. Due to the innovative nature of this project, I have had to limit the detail in which I describe my work. This means that this report will be lacking in such things as product-specific and classified information. I would like to thank a few people who made it possible for me to successfully complete my internship at the Platform Technologies Group at Apple. First off, the people who made this internship possible: John Kelley, Ben Byer and my manager John Wright. Mike Smith, Tom Duffy and Anthony Yvanovich, for helping me through the rough patches of this project. And the entirety of Core OS, for making my stay an unforgettable experience.

Introduction. About the Platform Technologies Group. As it was described by a manager: "We do the plumbing, if we do our jobs right, you never see it." The Platform Technologies Group, a subdivision of the Core OS department, works on the embedded platforms that Apple maintains. Here, platforms are brought up, and the embedded kernel and lower-level support for the platforms are maintained. -

Linux on the Road

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.22 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser 2011-12-12 Revision History Revision 3.22 2011-12-12 Revised by: wh The address of the opensuse-mobile mailing list has been added, a section power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to OpenSync and Funambol synchronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made. Revision 3.21 2005-11-14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005-11-14 Revised by: wh Some typos have been fixed. Revision 3.19 2005-11-14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005-10-10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005-09-28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005-08-28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

Why We Should Consider the Plurality of Hacker and Maker Cultures 2017

Repositorium für die Medienwissenschaft. Sebastian Kubitschko; Annika Richterich; Karin Wenz: "There Simply Is No Unified Hacker Movement." Why We Should Consider the Plurality of Hacker and Maker Cultures. 2017. https://doi.org/10.25969/mediarep/1115. Published version, journal article.

Suggested citation: Kubitschko, Sebastian; Richterich, Annika; Wenz, Karin: "There Simply Is No Unified Hacker Movement." Why We Should Consider the Plurality of Hacker and Maker Cultures. In: Digital Culture & Society, vol. 3 (2017), no. 1, pp. 185–195. DOI: https://doi.org/10.25969/mediarep/1115. Initial publication here: https://doi.org/10.14361/dcs-2017-0112

Terms of use: This document is made available under a Creative Commons Attribution - Non Commercial - No Derivatives 4.0 License. For more information see: https://creativecommons.org/licenses/by-nc-nd/4.0

"There Simply Is No Unified Hacker Movement." Why We Should Consider the Plurality of Hacker and Maker Cultures. Sebastian Kubitschko in Conversation with Annika Richterich and Karin Wenz. Sebastian Kubitschko is a postdoctoral researcher at the Centre for Media, Communication and Information Research (ZeMKI) at the University of Bremen in Germany. His main research fields are political communication, social movements and civil society organisations. In order to address the relevance of new forms of techno-political civic engagement, he has conducted qualitative, empirical research on one of the world's oldest and largest hacker organisations, the Chaos Computer Club (CCC). -

Oracle Solaris 11.4 Security Target, V1.3

Oracle Solaris 11.4 Security Target, Version 1.3, February 2021. Document prepared by www.lightshipsec.com

Document History

| Version | Date | Author | Description |
|---|---|---|---|
| 1.0 | 09 Nov 2020 | G Nickel | Update TOE version |
| 1.1 | 19 Nov 2020 | G Nickel | Update IDR version |
| 1.2 | 25 Jan 2021 | L Turner | Update TLS and SSH. |
| 1.3 | 8 Feb 2021 | L Turner | Finalize for certification. |

Table of Contents

- 1 Introduction
  - 1.1 Overview
  - 1.2 Identification
  - 1.3 Conformance Claims
  - 1.4 Terminology
- 2 TOE Description
  - 2.1 Type
  - 2.2 Usage
  - 2.3 Logical Scope -

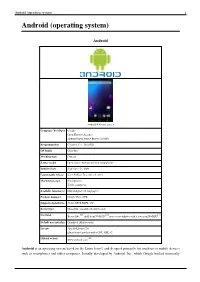

Android (Operating System)

Android (operating system)

Android 4.4 home screen

- Company / developer: Google, Open Handset Alliance, Android Open Source Project (AOSP)
- Programmed in: C (core), C++, Java (UI)
- OS family: Unix-like
- Working state: Current
- Source model: Open source with proprietary components
- Initial release: September 23, 2008
- Latest stable release: 4.4.2 KitKat / December 9, 2013
- Marketing target: Smartphones, tablet computers
- Available language(s): Multi-lingual (46 languages)
- Package manager: Google Play, APK
- Supported platforms: 32-bit ARM, MIPS, x86
- Kernel type: Monolithic (modified Linux kernel) [1] [2] [3]
- Userland: Bionic libc, shell from NetBSD, native core utilities with a few from NetBSD
- Default user interface: Graphical (Multi-touch)
- License: Apache License 2.0; Linux kernel patches under GNU GPL v2 [4]
- Official website: www.android.com

Android is an operating system based on the Linux kernel, and designed primarily for touchscreen mobile devices such as smartphones and tablet computers. Initially developed by Android, Inc., which Google backed financially and later bought in 2005, Android was unveiled in 2007 along with the founding of the Open Handset Alliance: a consortium of hardware, software, and telecommunication companies devoted to advancing open standards for mobile devices. The first publicly available smartphone running Android, the HTC Dream, was released on October 22, 2008. The user interface of Android is based on direct manipulation, using touch inputs that loosely correspond to real-world actions, like swiping, tapping, pinching and reverse pinching to manipulate on-screen objects. Internal hardware such as accelerometers, gyroscopes and proximity sensors are used by some applications to respond to additional user actions, for example adjusting the screen from portrait to landscape depending on how the device is oriented. -

The DragonFlyBSD Operating System

The DragonFlyBSD Operating System. Jeffrey M. Hsu, Member, FreeBSD and DragonFlyBSD.

Abstract: The DragonFlyBSD operating system is a fork of the highly successful FreeBSD operating system. Its goals are to maintain the high quality and performance of the FreeBSD 4 branch, while exploiting new concepts to further improve performance and stability. In this paper, we discuss the motivation for a new BSD operating system, new concepts being explored in the BSD context, the software infrastructure put in place to explore these concepts, and their application to the network subsystem in particular.

Index Terms: Message passing, Multiprocessing, Network operating systems, Protocols, System software.

I. INTRODUCTION. The DragonFlyBSD operating system is a fork of the highly successful FreeBSD operating system. Its goals are to maintain the high quality and performance of the FreeBSD 4 branch, while exploring new concepts to further improve [...]

[...] directories with slightly over 8 million lines of code, 2 million of which are in the kernel. The project has a number of resources available to the public, including an on-line CVS repository with mirror sites, accessible through the web as well as the cvsup service, mailing list forums, and a bug submission system.

III. MOTIVATION. A. Technical Goals. The DragonFlyBSD operating system has several long-range technical goals that it hopes to accomplish within the next few years. The first goal is to add lightweight threads to the BSD kernel. These threads are lightweight in the sense that, while user processes have an associated thread and a process context, kernel processes are pure threads with no process context. The threading model makes several guarantees with respect to scheduling to ensure high performance and simplify reasoning about concurrency. -

Cybersecurity Forum Für Datensicherheit, Datenschutz Und Datenethik 23

3rd Annual Conference CYBERSECURITY: Forum for Data Security, Data Protection and Data Ethics. 23 April 2020, Frankfurt am Main. #cyberffm

GERMANY, DIGITAL, SECURE: BSI. In recent weeks and months the "Emotet" malware has once again painfully shown us what the consequences can be when we enjoy the benefits of digitalization without ensuring the information security that is indispensable to it. City administrations, public authorities, hospitals and universities were paralyzed, and companies had to suspend operations temporarily. The consequences are tangible for every one of us: jobs are at risk, goods and services can no longer be offered and sold, and hospitals have to turn patients away. City administrations are no longer able to work and close their citizens' offices. Citizens could not apply for ID cards or driving licences, register cars, or order bulky-waste collection. Even weddings had to be postponed. And what would the situation look like if we actually lived in a fully digitalized world? The BSI examines in which fields of application of digitalization risks could arise, and how we can make these risks calculable and manageable. Our strength is to analyze information security topics in a pooled, expert manner and, on the basis of that joint analysis, concrete offerings for different target groups -

Ethical Hacking

Ethical Hacking. Alana Maurushat. University of Ottawa Press, 2019.

The University of Ottawa Press (UOP) is proud to be the oldest of the francophone university presses in Canada and the only bilingual university publisher in North America. Since 1936, UOP has been "enriching intellectual and cultural discourse" by producing peer-reviewed and award-winning books in the humanities and social sciences, in French or in English.

Library and Archives Canada Cataloguing in Publication. Title: Ethical hacking / Alana Maurushat. Names: Maurushat, Alana, author. Description: Includes bibliographical references. Identifiers: Canadiana (print) 20190087447 | Canadiana (ebook) 2019008748X | ISBN 9780776627915 (softcover) | ISBN 9780776627922 (PDF) | ISBN 9780776627939 (EPUB) | ISBN 9780776627946 (Kindle). Subjects: LCSH: Hacking--Moral and ethical aspects--Case studies. | LCGFT: Case studies. Classification: LCC HV6773 .M38 2019 | DDC 364.16/8--dc23.

Legal Deposit: First Quarter 2019, Library and Archives Canada. © Alana Maurushat, 2019, under Creative Commons License Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0) https://creativecommons.org/licenses/by-nc-sa/4.0/

Printed and bound in Canada by Gauvin Press. Copy editing: Robbie McCaw. Proofreading: Robert Ferguson. Typesetting: CS. Cover design: Édiscript enr. and Elizabeth Schwaiger. Cover image: Fragmented Memory by Phillip David Stearns, n.d., Personal Data, Software, Jacquard Woven Cotton. Image © Phillip David Stearns, reproduced with kind permission from the artist.

The University of Ottawa Press gratefully acknowledges the support extended to its publishing list by Canadian Heritage through the Canada Book Fund, by the Canada Council for the Arts, by the Ontario Arts Council, by the Federation for the Humanities and Social Sciences through the Awards to Scholarly Publications Program, and by the University of Ottawa. -

Hacktivism Cyberspace Has Become the New Medium for Political Voices

White Paper. Hacktivism: Cyberspace has become the new medium for political voices. By François Paget, McAfee Labs™

Table of Contents: The Anonymous Movement; Origins; Defining the movement; WikiLeaks meets Anonymous; Fifteen Months of Activity; Arab Spring; HBGary; The Sony ordeal; Lulz security and denouncements; Groups surrounding LulzSec; Green rights; Other operations; AntiSec, doxing, and copwatching; Police responses; Anonymous in the streets; Manipulation and pluralism; Operation Megaupload; Communications; Social networks and websites; IRC; Anonymity; DDoS Tools; Cyberdissidents; Telecomix; Other achievements; Patriots and Cyberwarriors; Backlash against Anonymous; TeaMp0isoN; Other achievements; Conclusion.

What is hacktivism? It combines politics, the Internet, and other elements. Let's start with the political. Activism, a political movement emphasising direct action, is the inspiration for hacktivism. Think of Greenpeace activists who go to sea to disrupt whaling campaigns. Think of the many demonstrators who protested against human rights violations in China by trying to put out the Olympic flame during its world tour in 2008. Think of the thousands of activists who responded to the Adbusters call in July 2011 to peacefully occupy a New York City park as part of Occupy Wall Street. Adding the online activity of hacking (with both good and bad connotations) to political activism gives us hacktivism. One source claims this term was first used in an article on the filmmaker Shu Lea Cheang; the article was written by Jason Sack and published in InfoNation in 1995. -

Superseding Indictment

[Stamp: FILED IN OPEN COURT. CLERK, U.S. DISTRICT COURT, ALEXANDRIA, VIRGINIA]

IN THE UNITED STATES DISTRICT COURT FOR THE EASTERN DISTRICT OF VIRGINIA, Alexandria Division

UNITED STATES OF AMERICA v. JULIAN PAUL ASSANGE, Defendant. Criminal No. 1:18-cr-111 (CMH)

- Count 1: 18 U.S.C. § 793(g), Conspiracy To Obtain and Disclose National Defense Information
- Count 2: 18 U.S.C. § 371, Conspiracy to Commit Computer Intrusions
- Counts 3, 4: 18 U.S.C. §§ 793(b) and 2, Obtaining National Defense Information
- Counts 5-8: 18 U.S.C. §§ 793(c) and 2, Obtaining National Defense Information
- Counts 9-11: 18 U.S.C. §§ 793(d) and 2, Disclosure of National Defense Information
- Counts 12-14: 18 U.S.C. §§ 793(e) and 2, Disclosure of National Defense Information
- Counts 15-17: 18 U.S.C. § 793(e), Disclosure of National Defense Information

SECOND SUPERSEDING INDICTMENT. June 2020 Term, at Alexandria, Virginia.

THE GRAND JURY CHARGES THAT:

GENERAL ALLEGATIONS

A. ASSANGE and WikiLeaks

1. From at least 2007,[1] JULIAN PAUL ASSANGE ("ASSANGE") was the public face of "WikiLeaks," a website he founded with others as an "intelligence agency of the people." To obtain information to release on the WikiLeaks website, ASSANGE recruited sources and predicated the success of WikiLeaks in part upon the recruitment of sources to (i) illegally circumvent legal safeguards on information, including classification restrictions and computer and network access restrictions; (ii) provide that illegally obtained information to WikiLeaks for public dissemination; and (iii) continue the pattern of illegally procuring and providing classified and hacked information to WikiLeaks for distribution to the public. -

[1] When the Grand Jury alleges in this Superseding Indictment that an event occurred on a particular date, the Grand Jury means to convey that the event occurred "on or about" that date.

Cyber-Attacks As an Instrument of Terrorism? – Motives – Skills – Likelihood – Prevention

Cyber-Attacks as an Instrument of Terrorism? – Motives – Skills – Likelihood – Prevention. DRAFT VERSION. Hendrik Hoffmann / Kai Masser, German University of Administrative Sciences Speyer / German Research Institute for Public Administration Speyer, 05/08/2018. Paper to be presented at the 2018 IIAS (International Institute of Administrative Sciences) Congress, 25-29 June, Tunis, Tunisia: Strive, Adapt, Maintain: Resilience of Governance Systems.

Abstract. "Cyber-attacks" are mostly "cyber-crime": most attacks are financially driven. However, the most notorious cyber-attacks, such as Stuxnet, WannaCry and Petya/NotPetya, are linked to state or state-partisan activities. Seemingly, there is a growing market for "cyber-defense". However, the cyber-attack arena is very much reminiscent of the piracy of the 16th and 17th centuries, with the ingredients money, power and a legal void. In the second part of the paper, we investigate technical aspects of the attacks introduced in the first part. First, we look at attack vectors, with a critical examination of how to minimize the attack surface. We then suggest defensive approaches which lead to best practices. We conclude with a consideration of the cyber-inherent issue of unattributability in conjunction with false flag operations.

Table of Contents: Abstract; A Introduction: The Pirates of the Cyberspace -

Getting Started with OpenBSD Device Driver Development

Getting started with OpenBSD device driver development. Stefan Sperling <[email protected]>, EuroBSDcon 2017.

Clarifications (1/2). Courtesy of Jonathan Gray (jsg@):

- We don't want source code to firmware
- We want to be able to redistribute firmware freely
- Vendor drivers are often poorly written, we need to be able to write our own
- We want documentation on the hardware interfaces
- Source code is not documentation

Clarifications (2/2). I would like to add:

- Device driver code runs in our kernel
- Firmware code runs on a peripheral device, not in our kernel
- When we say "Binary Blobs" we are talking about closed-source device drivers, not firmware
- Firmware often runs with high privileges; use at your own risk
- If you do not want closed-source firmware, then don't buy and use hardware which needs it
- We are software developers, we do not build hardware
- We will not sign NDAs
- Please talk to hardware vendors and advocate for change; the current situation is very bad for free software

This talk is for ... somewhat competent C programmers who

- use OpenBSD
- and would like to use a device which lacks support
- so have an itch to scratch (enhance or write a driver)
- but are not sure where to start
- perhaps are not sure how the development process works
- and will research all further details themselves

The idea for this talk came about during conversations I had at EuroBSDcon 2016. Common question: "How do I add support for my laptop's wifi device?"

Itches I have scratched..