The Dragonflybsd Operating System

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Porting Darwin to the MV88F6281 Arming the Snowleopard

Porting Darwin to the MV88F6281 ARMing the SnowLeopard. Tristan Schaap 1269011 Apple Inc. Platform Technologies Group Delft University of Technology Dept. of Computer Science Committee: Ir. B.R. Sodoyer Dr. K. van der Meer Preface! 3 Introduction! 4 Summary! 5 Building a new platform! 6 Booting iBoot! 7 Building the kernelcache! 8 Booting the kernel! 10 THUMBs down! 16 Conclusion! 18 Future Work! 19 Glossary! 20 References! 21 Appendix A! 22 Appendix B! 23 Skills! 23 Process! 26 Reflection! 27 Appendix C! 28 Plan of Approach! 28 2 Preface Due to innovative nature of this project, I have had to limit myself in the detail in which I describe my work. This means that this report will be lacking in such things as product specific- and classified information. I would like to thank a few people who made it possible for me to successfully complete my internship at the Platform Technologies Group at Apple. First off, the people who made this internship possible, John Kelley, Ben Byer and my manager John Wright. Mike Smith, Tom Duffy and Anthony Yvanovich for helping me through the rough patches of this project. And the entirety of Core OS for making my stay an unforgettable experience. 3 Introduction About the Platform Technologies Group As it was described by a manager: “We do the plumbing, if we do our jobs right, you never see it.”. The Platform Technologies Group, a subdivision of the Core OS department, works on the embedded platforms that Apple maintains. Here, platforms are brought up and the embedded kernel and lower level support for the platforms is maintained. -

Freebsd-And-Git.Pdf

FreeBSD and Git Ed Maste - FreeBSD Vendor Summit 2018 Purpose ● History and Context - ensure we’re starting from the same reference ● Identify next steps for more effective use / integration with Git / GitHub ● Understand what needs to be resolved for any future decision on Git as the primary repository Version control history ● CVS ○ 1993-2012 ● Subversion ○ src/ May 31 2008, r179447 ○ doc/www May 19, 2012 r38821 ○ ports July 14, 2012 r300894 ● Perforce ○ 2001-2018 ● Hg Mirror ● Git Mirror ○ 2011- Subversion Repositories svnsync repo svn Subversion & Git Repositories today svn2git git push svnsync git svn repo svn git github Repositories Today fork repo / Freebsd Downstream svn github github Repositories Today fork repo / Freebsd Downstream svn github github “Git is not a Version Control System” phk@ missive, reproduced at https://blog.feld.me/posts/2018/01/git-is-not-revision-control/ Subversion vs. Git: Myths and Facts https://svnvsgit.com/ “Git has a number of advantages in the popularity race, none of which are really to do with the technology” https://chapmanworld.com/2018/08/25/why-im-now-using-both-git-and-subversion-for-one-project/ 10 things I hate about Git https://stevebennett.me/2012/02/24/10-things-i-hate-about-git Git popularity Nobody uses Subversion anymore False. A myth. Despite all the marketing buzz related to Git, such notable open source projects as FreeBSD and LLVM continue to use Subversion as the main version control system. About 47% of other open source projects use Subversion too (while only 38% are on Git). (2016) https://svnvsgit.com/ Git popularity (2018) Git UI/UX Yes, it’s a mess. -

Introduction to Debugging the Freebsd Kernel

Introduction to Debugging the FreeBSD Kernel John H. Baldwin Yahoo!, Inc. Atlanta, GA 30327 [email protected], http://people.FreeBSD.org/˜jhb Abstract used either directly by the user or indirectly via other tools such as kgdb [3]. Just like every other piece of software, the The Kernel Debugging chapter of the FreeBSD kernel has bugs. Debugging a ker- FreeBSD Developer’s Handbook [4] covers nel is a bit different from debugging a user- several details already such as entering DDB, land program as there is nothing underneath configuring a system to save kernel crash the kernel to provide debugging facilities such dumps, and invoking kgdb on a crash dump. as ptrace() or procfs. This paper will give a This paper will not cover these topics. In- brief overview of some of the tools available stead, it will demonstrate some ways to use for investigating bugs in the FreeBSD kernel. FreeBSD’s kernel debugging tools to investi- It will cover the in-kernel debugger DDB and gate bugs. the external debugger kgdb which is used to perform post-mortem analysis on kernel crash dumps. 2 Kernel Crash Messages 1 Introduction The first debugging service the FreeBSD kernel provides is the messages the kernel prints on the console when the kernel crashes. When a userland application encounters a When the kernel encounters an invalid condi- bug the operating system provides services for tion (such as an assertion failure or a memory investigating the bug. For example, a kernel protection violation) it halts execution of the may save a copy of the a process’ memory current thread and enters a “panic” state also image on disk as a core dump. -

Oracle Database Licensing Information, 11G Release 2 (11.2) E10594-26

Oracle® Database Licensing Information 11g Release 2 (11.2) E10594-26 July 2012 Oracle Database Licensing Information, 11g Release 2 (11.2) E10594-26 Copyright © 2004, 2012, Oracle and/or its affiliates. All rights reserved. Contributor: Manmeet Ahluwalia, Penny Avril, Charlie Berger, Michelle Bird, Carolyn Bruse, Rich Buchheim, Sandra Cheevers, Leo Cloutier, Bud Endress, Prabhaker Gongloor, Kevin Jernigan, Anil Khilani, Mughees Minhas, Trish McGonigle, Dennis MacNeil, Paul Narth, Anu Natarajan, Paul Needham, Martin Pena, Jill Robinson, Mark Townsend This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. -

Linux on the Road

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.22 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser 2011-12-12 Revision History Revision 3.22 2011-12-12 Revised by: wh The address of the opensuse-mobile mailing list has been added, a section power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to OpenSync and Funambol syncronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made. Revision 3.21 2005-11-14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005-11-14 Revised by: wh Some typos have been fixed. Revision 3.19 2005-11-14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005-10-10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005-09-28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005-08-28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

BSD UNIX Toolbox: 1000+ Commands for Freebsd, Openbsd and Netbsd Christopher Negus, Francois Caen

To purchase this product, please visit https://www.wiley.com/en-bo/9780470387252 BSD UNIX Toolbox: 1000+ Commands for FreeBSD, OpenBSD and NetBSD Christopher Negus, Francois Caen E-Book 978-0-470-38725-2 April 2008 $16.99 DESCRIPTION Learn how to use BSD UNIX systems from the command line with BSD UNIX Toolbox: 1000+ Commands for FreeBSD, OpenBSD and NetBSD. Learn to use BSD operation systems the way the experts do, by trying more than 1,000 commands to find and obtain software, monitor system health and security, and access network resources. Apply your newly developed skills to use and administer servers and desktops running FreeBSD, OpenBSD, NetBSD, or any other BSD variety. Become more proficient at creating file systems, troubleshooting networks, and locking down security. ABOUT THE AUTHOR Christopher Negus served for eight years on development teams for the UNIX operating system at the AT&T labs, where UNIX was created and developed. He also worked with Novell on UNIX and UnixWare development. Chris is the author of the bestselling Fedora and Red Hat Linux Bible series, Linux Toys II, Linux Troubleshooting Bible, and Linux Bible 2008 Edition. Francois Caen hosts and manages business application infrastructures through his company Turbosphere LLC. As an open- source advocate, he has lectured on OSS network management and Internet services, and served as president of the Tacoma Linux User Group. He is a Red Hat Certified Engineer (RHCE). To purchase this product, please visit https://www.wiley.com/en-bo/9780470387252. -

Getting Started With... Berkeley Software for UNIX† on the VAX‡ (The Third Berkeley Software Distribution)

Getting started with... Berkeley Software for UNIX† on the VAX‡ (The third Berkeley Software Distribution) A package of software for UNIX developed at the Computer Science Division of the University of California at Berkeley is installed on our system. This package includes a new version of the operating sys- tem kernel which supports a virtual memory, demand-paged environment. While the kernel change should be transparent to most programs, there are some things you should know if you plan to run large programs to optimize their performance in a virtual memory environment. There are also a number of other new pro- grams which are now installed on our machine; the more important of these are described below. Documentation The new software is described in two new volumes of documentation. The first is a new version of volume 1 of the UNIX programmers manual which has integrated manual pages for the distributed software and incorporates changes to the system made while introducing the virtual memory facility. The second volume of documentation is numbered volume 2c, of which this paper is a section. This volume contains papers about programs which are in the distribution package. Where are the programs? Most new programs from Berkeley reside in the directory /usr/ucb. A major exception is the C shell, csh, which lives in /bin. We will later describe how you can arrange for the programs in the distribution to be part of your normal working environment. Making use of the Virtual Memory With a virtual memory system, it is no longer necessary for running programs to be fully resident in memory. -

Examining the Viability of MINIX 3 As a Consumer Operating

Examining the Viability of MINIX 3 as a Consumer Operating System Joshua C. Loew March 17, 2016 Abstract The developers of the MINIX 3 operating system (OS) believe that a computer should work like a television set. You should be able to purchase one, turn it on, and have it work flawlessly for the next ten years [6]. MINIX 3 is a free and open-source microkernel-based operating system. MINIX 3 is still in development, but it is capable of running on x86 and ARM processor architectures. Such processors can be found in computers such as embedded systems, mobile phones, and laptop computers. As a light and simple operating system, MINIX 3 could take the place of the software that many people use every day. As of now, MINIX 3 is not particularly useful to a non-computer scientist. Most interactions with MINIX 3 are done through a command-line interface or an obsolete window manager. Moreover, its tools require some low-level experience with UNIX-like systems to use. This project will examine the viability of MINIX 3 from a performance standpoint to determine whether or not it is relevant to a non-computer scientist. Furthermore, this project attempts to measure how a microkernel-based operating system performs against a traditional monolithic kernel-based OS. 1 Contents 1 Introduction 5 2 Background and Related Work 6 3 Part I: The Frame Buffer Driver 7 3.1 Outline of Approach . 8 3.2 Hardware and Drivers . 8 3.3 Challenges and Strategy . 9 3.4 Evaluation . 10 4 Progress 10 4.1 Compilation and Installation . -

Oracle Solaris 11.4 Security Target, V1.3

Oracle Solaris 11.4 Security Target Version 1.3 February 2021 Document prepared by www.lightshipsec.com Oracle Security Target Document History Version Date Author Description 1.0 09 Nov 2020 G Nickel Update TOE version 1.1 19 Nov 2020 G Nickel Update IDR version 1.2 25 Jan 2021 L Turner Update TLS and SSH. 1.3 8 Feb 2021 L Turner Finalize for certification. Page 2 of 40 Oracle Security Target Table of Contents 1 Introduction ........................................................................................................................... 5 1.1 Overview ........................................................................................................................ 5 1.2 Identification ................................................................................................................... 5 1.3 Conformance Claims ...................................................................................................... 5 1.4 Terminology ................................................................................................................... 6 2 TOE Description .................................................................................................................... 9 2.1 Type ............................................................................................................................... 9 2.2 Usage ............................................................................................................................. 9 2.3 Logical Scope ................................................................................................................ -

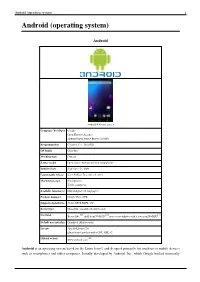

Android (Operating System) 1 Android (Operating System)

Android (operating system) 1 Android (operating system) Android Android 4.4 home screen Company / developer Google Open Handset Alliance Android Open Source Project (AOSP) Programmed in C (core), C++, Java (UI) OS family Unix-like Working state Current Source model Open source with proprietary components Initial release September 23, 2008 Latest stable release 4.4.2 KitKat / December 9, 2013 Marketing target Smartphones Tablet computers Available language(s) Multi-lingual (46 languages) Package manager Google Play, APK Supported platforms 32-bit ARM, MIPS, x86 Kernel type Monolithic (modified Linux kernel) [1] [2] [3] Userland Bionic libc, shell from NetBSD, native core utilities with a few from NetBSD Default user interface Graphical (Multi-touch) License Apache License 2.0 Linux kernel patches under GNU GPL v2 [4] Official website www.android.com Android is an operating system based on the Linux kernel, and designed primarily for touchscreen mobile devices such as smartphones and tablet computers. Initially developed by Android, Inc., which Google backed financially Android (operating system) 2 and later bought in 2005, Android was unveiled in 2007 along with the founding of the Open Handset Alliance: a consortium of hardware, software, and telecommunication companies devoted to advancing open standards for mobile devices. The first publicly available smartphone running Android, the HTC Dream, was released on October 22, 2008. The user interface of Android is based on direct manipulation, using touch inputs that loosely correspond to real-world actions, like swiping, tapping, pinching and reverse pinching to manipulate on-screen objects. Internal hardware such as accelerometers, gyroscopes and proximity sensors are used by some applications to respond to additional user actions, for example adjusting the screen from portrait to landscape depending on how the device is oriented. -

Lecture 1: Introduction to UNIX

The Operating System Course Overview Getting Started Lecture 1: Introduction to UNIX CS2042 - UNIX Tools September 29, 2008 Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages Lecture Outline 1 The Operating System Description and History UNIX Flavors Advantages and Disadvantages 2 Course Overview Class Specifics 3 Getting Started Login Information Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages What is UNIX? One of the first widely-used operating systems Basis for many modern OSes Helped set the standard for multi-tasking, multi-user systems Strictly a teaching tool (in its original form) Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages A Brief History of UNIX Origins The first version of UNIX was created in 1969 by a group of guys working for AT&T's Bell Labs. It was one of the first big projects written in the emerging C language. It gained popularity throughout the '70s and '80s, although non-AT&T versions eventually took the lion's share of the market. Predates Microsoft's DOS by 12 years! Lecture 1: UNIX Intro The Operating System Description and History Course Overview UNIX Flavors Getting Started Advantages and Disadvantages Lecture Outline 1 The Operating System Description and History UNIX Flavors Advantages and Disadvantages 2 Course Overview Class Specifics 3 -

CS 350 Operating Systems Spring 2021

CS 350 Operating Systems Spring 2021 4. Process II Discussion 1: Question? • Why do UNIX OSes use the combination of “fork() + exec()” to create a new process (from a program)? • Can we directly call exec() to create a new process? Or, do you have better ideas/designs for creating a new process? • Hints: To answer this question: think about the possible advantages of this solution. Fork Parent process Child process Exec New program image in execution 2 Discussion 1: Background • Motived by the UNIX shell • $ echo “Hello world!” • $ Hello world! • shell figures out where in the file system the executable “echo” resides, calls fork() to create a new child process, calls exec() to run the command, and then waits for the command to complete by calling wait(). • You can see that you can use fork(), exec() and wait() to implement a shell program – our project! • But what is the benefit of separating fork() and exec() in creating a new process? 3 Discussion 1: Case study • The following program implements the command: • $ wc p3.c > p4.output # wc counts the word number in p3.c • # the result is redirected to p4.output Close default standard output (to terminal) open file p4.output 4 Discussion 1: Case study • $ wc p3.c # wc outputs the result to the default standard output, specified by STDOUT_FILENO • By default, STDOUT_FILENO points to the terminal output. • However, we close this default standard output → close(STDOUT_FILENO ); • Then, when we open the file “p4.output” • A file descriptor will be assigned to this file • UNIX systems start looking for free file descriptors from the beginning of the file descriptor table (i.e., at zero).