ECE5917 SoC Architecture: MP SoC

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Microcode Revision Guidance August 31, 2019 MCU Recommendations

Microcode Revision Guidance, August 31, 2019: MCU Recommendations
Section 1 – Planned microcode updates
• Provides details on Intel microcode updates currently planned or available and corresponding to Intel-SA-00233 published June 18, 2019.
• Changes from prior revision(s) will be highlighted in yellow.
Section 2 – No planned microcode updates
• Products for which Intel does not plan to release microcode updates. This includes products previously identified as such.
LEGEND: Production Status
• Planned – Intel is planning on releasing a MCU at a future date.
• Beta – Intel has released this production signed MCU under NDA for all customers to validate.
• Production – Intel has completed all validation and is authorizing customers to use this MCU in a production environment.

"PCOM-B216VG-ECC.pdf" (581 KiB)

PCOM-B216VG-ECC: Intel® Arrandale processor based Type II COM Express module support ECC DDR3 SDRAM with Gigabit Ethernet
Photo callouts: 204pin ECC DDR3 SODIMM socket; Intel® QM57; Intel® Arrandale processor
FEATURES
• The Intel® Arrandale and QM57 platform with turbo boost technology to maximize CPU & Graphic performance
• Intel® Arrandale platform support variously powerful processor from ultra low power to mainstream performance type
• Supports Intel® intelligent power sharing technology to reduce TDP (Thermal Design Power)
• Enhance Intel® vPro® efficiency by Intel® 82577LM GbE PHY and AMT6.0 technology
• Support two ECC DDR3 800/1066 SDRAM on two SODIMM sockets, up to 8GB memory size
GENERAL
• CPU & Package: Intel® 45nm Arrandale Core i7/i5/i3 or Celeron P4505 processor up to 2.66 GHz with 4MB Cache in FC-BGA package
• DMI x4 Link: 4.8GT/s (Full-Duplex)
• Chipset/Core Logic: Intel® QM57
• System Memory: Up to 8GB ECC DDR3 800/1066 SDRAM on two SODIMM sockets
• BIOS: AMI BIOS
• Storage Devices: SATA: Support four SATA 300 and one IDE
• Expansion Interface: VGA and LVDS; Six PCI Express x1; LPC & SPI Interface; High definition audio interface; PCI
• Hardware Monitoring: CPU Voltage and Temperature
• Power Requirement: TBA
• Dimension: 125(L) x 95(W) mm
• Environment: Operation Temperature: 0~60 °C; Storage Temperature: -20~80 °C; Operation Humidity: 5~90%
I/O
• MIO: N/A
• IrDA: N/A
• Ethernet: One Intel® 82577LM Gigabit Ethernet PHY
• Audio: N/A
• USB: Eight USB ports
• Keyboard & Mouse: N/A
ORDERING GUIDE
• PCOM-B216VG-ECC (Standard): Intel® Arrandale processor based Type II COM Express module with ECC DDR3 SDRAM and Gigabit Ethernet
CPU Support List
• Intel® Core i7-610E SV (4M Cache, 2.53 GHz)
• Intel® Core i7-620LE LV (4M Cache, 2.00 GHz)
• Intel® Core i7-620UE ULV (4M Cache, 1.06 GHz)
• Intel® Core i5-520E SV (3M Cache, 2.40 GHz)
• Intel® Celeron P4505 SV (2M Cache, 1.86 GHz)
DISPLAY
• Graphic Controller: Intel® Arrandale integrated Graphics Media Accelerator (Gen 5.75 with 12 execution units)
* Specifications are subject to change without notice.

Multiprocessing Contents

Multiprocessing: Contents
1 Multiprocessing
1.1 Pre-history
1.2 Key topics
1.2.1 Processor symmetry
1.2.2 Instruction and data streams
1.2.3 Processor coupling
1.2.4 Multiprocessor Communication Architecture
1.3 Flynn’s taxonomy
1.3.1 SISD multiprocessing
1.3.2 SIMD multiprocessing
1.3.3 MISD multiprocessing
1.3.4 MIMD multiprocessing
1.4 See also
1.5 References
2 Computer multitasking
2.1 Multiprogramming
2.2 Cooperative multitasking
2.3 Preemptive multitasking
2.4 Real time
2.5 Multithreading
2.6 Memory protection
2.7 Memory swapping
2.8 Programming
2.9 See also
2.10 References

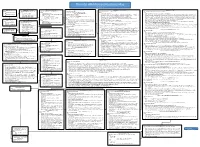

The Intel x86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0
P6 (1995, 0.50 to 0.35 μm)
• Alternative Names: i686
• Series: Pentium Pro (used in desktops and servers)
• Variant: Klamath (1997, 0.35 μm)
• Alternative Names: Pentium II, PII
• Series: Klamath (used in desktops)
• Variant: Deschutes (1998, 0.25 to 0.18 μm)
8086 (1978, 3 µm)
• Series:
• 16-bit data bus: 8086 (iAPX 86)
• 8-bit data bus: 8088 (iAPX 88)
80386 (1985, 1.5 to 1 µm)
• Alternative Names: iAPX 386, 386, i386
• Series:
• Desktop/Server: i386DX
• Desktop lower-performance: i386SX
• Mobile: i386SL, 80376, i386EX, i386CXSA, i386SXSA, i386CXSB
P5 (1993, 0.80 to 0.35 μm)
• Alternative Names: Pentium, 80586, 586, i586
• Series:
• Desktop/Server: P5, P54C
• Desktop/Server higher-performance: P54CQS, P54CS
• Mobile: P54C, P54LM
• Compatibility: Pentium OverDrive
NetBurst (2000, 180 to 130 nm)
• Alternative Names: Pentium 4, Pentium IV, P4
• Series:
• Desktop: Willamette (180 nm)
• Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm)
• Desktop lower-performance: Willamette-128
Skylake (2015, 14 nm)
• Alternative Names: SKL (Desktop and Mobile), SKX (Server)
• Series:
• Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H)
• Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X)

Lecture Note 7. IA: History and Features

System Programming
Lecture Note 7. IA: History and Features
October 30, 2020
Jongmoo Choi, Dept. of Software, Dankook University
http://embedded.dankook.ac.kr/~choijm
(Copyright © 2020 by Jongmoo Choi, All Rights Reserved. Distribution requires permission)
Objectives
• Discuss Issues on ISA (Instruction Set Architecture)
  - Opcode and operand addressing modes
• Apprehend how ISA affects system program
  - Context switch, memory alignment, stack overflow protection
• Describe the history of IA (Intel Architecture)
• Grasp the key technologies in recent IA
  - Pipeline and Moore’s law
• Refer to Chapter 3, 4 in the CSAPP and Intel SW Developer Manual
Issues on ISA (1/2)
• Consideration on ISA (Instruction Set Architecture)
    asm_sum:
        addl $1, %ecx
        movl -4(%ebx, %ebp, 4), %eax
        call func1
        leave
  - opcode issues
    * how many? (add vs. inc → RISC vs. CISC)
    * multi functions? (SISD vs. SIMD vs. MIMD …)
  - operand issues
    * fixed vs. variable operands
    * fixed: how many?
    * operand addressing modes
  - performance issues
    * pipeline
    * superscalar
    * multicore
  - Instruction formats (figure): an opcode of f bits followed by three, two, or one operand(s) of n bits each
Issues on ISA (2/2)
• Features of IA (Intel Architecture)
  - Basically CISC (Complex Instruction Set Computing)
    * Variable length instruction
    * Variable number of operands (0~3)
    * Diverse operand addressing modes
    * Stack based function call
    * Supporting SIMD (Single Instruction Multiple Data)
  - Try to take advantage of RISC (Reduced Instruction Set Computing)
    * Micro-operations
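The operand `-4(%ebx, %ebp, 4)` in the lecture note's `asm_sum` listing is an instance of the AT&T-syntax form `disp(base, index, scale)`, which names the address `base + index * scale + disp`. The following is a minimal Python sketch of that arithmetic, not part of the lecture note; the function name `effective_address` and the register values are hypothetical and chosen only for illustration.

```python
def effective_address(disp: int, base: int, index: int, scale: int) -> int:
    """Address named by the AT&T-syntax operand disp(base, index, scale)."""
    if scale not in (1, 2, 4, 8):
        raise ValueError("scale must be 1, 2, 4, or 8")
    # 32-bit address arithmetic wraps around modulo 2**32.
    return (base + index * scale + disp) & 0xFFFFFFFF


# Hypothetical register contents, chosen only for illustration.
ebx = 0x1000  # base register
ebp = 3       # index register

# Models the source operand of: movl -4(%ebx, %ebp, 4), %eax
print(hex(effective_address(-4, ebx, ebp, 4)))  # 0x1008
```

With these values the load reads from `0x1000 + 3 * 4 - 4`, which is one reason scaled-index operands are convenient for indexing arrays of 4-byte elements.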

M39 Sandy Bridge-PDF

SANDY BRIDGE SPANS GENERATIONS
Intel Focuses on Graphics, Multimedia in New Processor Design
By Linley Gwennap {9/27/10-01}
Intel’s processor clock has tocked, delivering a next-generation architecture for PCs and servers. At the recent Intel Developer’s Forum (IDF), the company unveiled its Sandy Bridge processor architecture, the next tock in its tick-tock roadmap. The new CPU is an evolutionary improvement over its predecessor, Nehalem, tweaking the branch predictor, register renaming, and instruction decoding. These changes will slightly improve performance on traditional integer applications, but we may be reaching the point where the CPU microarchitecture is so efficient, few ways remain to improve performance.
The big changes in Sandy Bridge target multimedia applications such as 3D graphics, image processing, and video processing. The chip is Intel’s first to integrate the graphics processing unit (GPU) on the processor itself.
… periods. For notebook computers, these improvements can significantly extend battery life by completing tasks more quickly and allowing the system to revert to a sleep state.
Integration Boosts Graphics Performance
Intel had a false start with integrated graphics: the ill-fated Timna project, which was canceled in 2000. More recently, Nehalem-class processors known as Arrandale and Clarkdale “integrated” graphics into the processor, but these products actually used two chips in one package, as Figure 1 shows. By contrast, Sandy Bridge includes the GPU on the processor chip, providing several benefits. The GPU is now built in the same leading-edge manufacturing process as the CPU, rather than an older process, as in earlier

The Intel x86 Microarchitectures Map Version 2.2

The Intel x86 Microarchitectures Map Version 2.2
P6 (1995, 0.50 to 0.35 μm)
• Alternative Names: i686
• Series: Pentium Pro (used in desktops and servers)
• Variant: Klamath (1997, 0.35 μm)
• Alternative Names: Pentium II, PII
• Series: Klamath (used in desktops)
• New instructions: Deschutes (1998, 0.25 to 0.18 μm)
8086 (1978, 3 µm)
• Series:
• 16-bit data bus: 8086 (iAPX 86)
• 8-bit data bus: 8088 (iAPX 88)
80386 (1985, 1.5 to 1 µm)
• Alternative Names: iAPX 386, 386, i386
• Series:
• Desktop/Server: i386DX
• Desktop lower-performance: i386SX
• Mobile: i386SL, 80376, i386EX, i386CXSA, i386SXSA, i386CXSB
P5 (1993, 0.80 to 0.35 μm)
• Alternative Names: Pentium, 80586, 586, i586
• Series:
• Desktop/Server: P5, P54C
• Desktop/Server higher-performance: P54CQS, P54CS
• Mobile: P54C, P54LM
• Compatibility: Pentium OverDrive
NetBurst (2000, 180 to 130 nm)
• Alternative Names: Pentium 4, Pentium IV, P4
• Series:
• Desktop: Willamette (180 nm)
• Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm)
• Desktop lower-performance: Willamette-128
Skylake (2015, 14 nm)
• Alternative Names: SKL (Desktop and Mobile), SKX (Server)
• Series:
• Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H)
• Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X)

Microcode Revision Guidance, April 2, 2018: MCU Recommendations

Microcode Revision Guidance, April 2, 2018: MCU Recommendations
The following table provides details of availability for microcode updates currently planned by Intel. Changes since the previous version are highlighted in yellow.
LEGEND: Production Status
• Planning – Intel has not yet determined a schedule for this MCU.
• Pre-beta – Intel is performing early validation for this MCU.
• Beta – Intel has released this production signed MCU under NDA for all customers to validate.
• Production – Intel has completed all validation and is authorizing customers to use this MCU in a production environment.
• Stopped – After a comprehensive investigation of the microarchitectures and microcode capabilities for these products, Intel has determined to not release microcode updates for these products for one or more reasons including, but not limited to the following:
  • Micro-architectural characteristics that preclude a practical implementation of features mitigating Variant 2 (CVE-2017-5715)
  • Limited Commercially Available System Software support
  • Based on customer inputs, most of these products are implemented as “closed systems” and therefore are expected to have a lower likelihood of exposure to these vulnerabilities.
Pre-Mitigation Production MCU:
• For products that do not have a Production MCU with mitigations for Variant 2 (Spectre), Intel recommends using this version of MCU. This does not impact mitigations for Variant 1 (Spectre) and Variant 3 (Meltdown).
STOP deploying these MCU revs:
• Intel recommends to discontinue using these select versions of MCU that were previously released with mitigations for Variant 2 (Spectre) due to system stability issues.
• Lines with “***” were previously recommended to discontinue use. Subsequent testing by Intel has determined that these were unaffected by the stability issues and have been re-released without modification.

Intel i7 Core Processor – A New Order Processor Level

© 2014 IJIRT | Volume 1 Issue 6 | ISSN: 2349-6002
Intel i7 core processor – A new order processor level
Arpit Yadav; Anurag Parmar; Abhishek Sharma
Electronics and Communication Engineering
ABSTRACT: The paper presented here is a complete overview of the technologies used in the Intel i7 core processor. The Intel i7 is a new processor on the market and the latest core processor available. It uses all the new technologies present in the corporate world, and it is the most sophisticated design of all the core processors available. Intel Core i7 applies to several families of desktop and laptop 64-bit x86-64 processors that use the Westmere, Nehalem, Ivy Bridge, Sandy Bridge, and Haswell microarchitectures. The Core i7 brand principally targets the business and high-end consumer markets for both desktop and laptop computers, and is distinguished from the (entry-level consumer) Core i3, (mainstream consumer) Core i5, and (server and workstation) Xeon brands.
… higher and economical compatibility, advanced response towards the users' demand. Now the question arises: why only Intel Core? The Intel Penryn microarchitecture includes the Core 2 family of processors and was the first Intel microarchitecture based on the 45 nm fabrication process. This enables Intel to create a higher-performance range of processors that consume similar or less power than the previous-generation processors. Intel Core is a brand that Intel uses for its range of mid-range to high-end consumer and business microprocessors. Generally, processors sold under the name of Core are

White Paper Introduction to Intel's 32Nm Process Technology

White Paper: Introduction to Intel’s 32nm Process Technology
Intel introduces 32nm process technology with second generation high-k + metal gate transistors. This process technology builds upon the tremendously successful 45nm process technology that enabled the launch of the Intel® microarchitecture codename Nehalem and the Intel® Core™ i7 processor.
Building upon the tremendous success of the 45nm process technology with high-k + metal gate transistors, Intel is nearing the ramp of 32nm process technology with second generation high-k + metal gate transistors. This new process technology will be used to manufacture the 32nm Westmere version of the Intel® microarchitecture codename Nehalem. Westmere based products are planned across segments: mobile, desktop, and server. Intel is the first company to demonstrate working 32nm processors and is on track with their cadence of new product innovation – known as the „Tick-
… the P1266 process technology Intel promised a fast and meaningful ramp of the 45nm technology. Intel has kept its promise and is today the only company with production 45nm with high-k + metal gate transistors.
In fact, the 45 nm production ramp has been the fastest in Intel history. 45nm processor unit production has ramped twice as fast as the 65nm process technology in its first year. Today, 45nm products are being manufactured across computing segments. Single core Intel® Atom™ processors, dual core Intel® Core™2 Duo processors, Intel® Core™ i7 processors with four cores, and even the 6 core Intel® Xeon® processor are all today manufactured on the 45nm process.
Intel takes another major leap ahead on the 32nm process technology with second generation high-k + metal gate transistors.

Intel's Core 2 Family

Intel’s Core 2 family - TOCK lines: References
Dezső Sima, Vers. 1.0, January 2019
Contents
• 1. Introduction
• 2. The Core 2 line
• 3. The Nehalem line
• 4. The Sandy Bridge line
• 5. The Haswell line
• 6. The Skylake line
• 7. The Kaby Lake line
• 8. The Kaby Lake Refresh line
• 9. The Coffee Lake line
• 10. The Coffee Lake line Refresh
• 11. The Cannon Lake line (outlook)
• 12. Sunny Cove
• 13. References
13. References
[1]: Singhal R., “Next Generation Intel Microarchitecture (Nehalem) Family: Architecture Insight and Power Management,” IDF Taipeh, Oct. 2008, http://intel.wingateweb.com/taiwan08/published/sessions/TPTS001/FA08%20IDFTaipei_TPTS001_100.pdf
[2]: Bryant D., “Intel Hitting on All Cylinders,” UBS Conf., Nov. 2007, http://files.shareholder.com/downloads/INTC/0x0x191011/e2b3bcc5-0a37-4d06-aa5a-0c46e8a1a76d/UBSConfNov2007Bryant.pdf
[3]: Fisher S., “Technical Overview of the 45 nm Next Generation Intel Core Microarchitecture (Penryn),” IDF 2007, ITPS001, http://isdlibrary.intel-dispatch.com/isd/89/45nm.pdf
[4]: Pabst T., “The New Athlon Processor: AMD Is Finally Overtaking Intel,” Tom’s Hardware, August 9, 1999, http://www.tomshardware.com/reviews/athlon-processor,121-2.html
[5]: Carmean D., “Inside the Pentium 4 Processor Micro-architecture,” Aug. 2000, http://people.virginia.edu/~zl4j/CS854/pda_s01_cd.pdf
[6]: Shimpi A. L. & Clark J., “AMD Opteron 248 vs. Intel Xeon 2.8: 2-way Web Servers go Head to Head,” AnandTech, Dec. 17 2003, http://www.anandtech.com/showdoc.aspx?i=1935&p=1
[7]: Völkel F., “Duel of the Titans: Opteron vs. Xeon : Hammer Time: AMD On The Attack,” Tom’s Hardware, Apr.

Intel's Core 2 Family

Intel’s Core 2 family - TOCK lines II: Nehalem to Haswell
Dezső Sima, Vers. 3.11, August 2018
Contents
• 1. Introduction
• 2. The Core 2 line
• 3. The Nehalem line
• 4. The Sandy Bridge line
• 5. The Haswell line
• 6. The Skylake line
• 7. The Kaby Lake line
• 8. The Kaby Lake Refresh line
• 9. The Coffee Lake line
• 10. The Cannon Lake line
3. The Nehalem line
• 3.1 Introduction to the 1. generation Nehalem line (Bloomfield)
• 3.2 Major innovations of the 1. gen. Nehalem line
• 3.3 Major innovations of the 2. gen. Nehalem line (Lynnfield)
3.1 Introduction to the 1. generation Nehalem line (Bloomfield)
• Developed at Hillsboro, Oregon, at the site where the Pentium 4 was designed.
• Experiences with HT: Nehalem became a multithreaded design.
• The design effort took about five years and required thousands of engineers (Ronak Singhal, lead architect of Nehalem) [37].
• The 1. gen. Nehalem line targets DP servers, yet its first implementation appeared in the desktop segment (Core i7-9xx (Bloomfield)) 4C in 11/2008.
Figure: Intel’s Tick-Tock development model (Based on [1])
• Core 2: new microarchitecture, 65 nm, TOCK (2006)
• Penryn: new process, 45 nm, TICK (2007)
• Nehalem (1. gen.): new microarchitecture, 45 nm, TOCK (2008)
• Westmere (1. gen.): new process, 32 nm, TICK (2010)
• Sandy Bridge (2. gen.): new microarchitecture, 32 nm, TOCK (2011)
• Ivy Bridge (3. gen.): new process, 22 nm, TICK (2012)
• Haswell (4. gen.): new microarchitecture, 22 nm, TOCK (2013)
• Broadwell (5. gen.): new process, 14 nm, TICK (2014)
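The Tick-Tock figure in this excerpt alternates between a new process node (TICK) and a new microarchitecture on an unchanged node (TOCK). The following is a minimal Python sketch of that rule, not taken from the slides. The `Step` type and `classify` function are hypothetical names, and the pairing of names, nodes, and years is an assumption read from the flattened figure.

```python
from typing import NamedTuple


class Step(NamedTuple):
    name: str
    node_nm: int  # process node in nanometres
    year: int


# Pairing of names, nodes, and years is an assumption of this sketch,
# read from the flattened Tick-Tock figure.
STEPS = [
    Step("Core 2", 65, 2006),
    Step("Penryn", 45, 2007),
    Step("Nehalem", 45, 2008),
    Step("Westmere", 32, 2010),
    Step("Sandy Bridge", 32, 2011),
    Step("Ivy Bridge", 22, 2012),
    Step("Haswell", 22, 2013),
    Step("Broadwell", 14, 2014),
]


def classify(steps):
    """Label a step TICK when the process node shrinks, TOCK otherwise.

    The first step has no predecessor in the list and is treated as a
    TOCK, matching the figure's label for Core 2.
    """
    labels = []
    previous_node = None
    for step in steps:
        is_tick = previous_node is not None and step.node_nm < previous_node
        labels.append((step.name, "TICK" if is_tick else "TOCK"))
        previous_node = step.node_nm
    return labels


for name, label in classify(STEPS):
    print(f"{name}: {label}")
```

Deriving the label from the node shrink, instead of storing it, makes the alternation in the figure easy to check: every second step keeps the node of its predecessor.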