Biofeedback and Virtual Environments

Erik Champion and Andrew Dekker

Recommended publications

-

MSU Receives Accreditation Discussions for Choosing an Interim President Administrator at MSU

lontana mourns loss of MSU President Mike Malone provost, remembered Malone's who had a particular affection vn Lehmann passion for history. for the students. nem News Editor "Michael was someone, no "Michael involved the matter what the issue, that could students more than any bring up a piece of history or a president I know or even heard Michael Malone, '.\1SU storv that not onlv enlightened of," Dooley said. "He was ·ident, scholar, and avid and.entertained, but also made someone who took pride in his rian died Dec. 21 of a heart an important point," Dooley work, but especially in the k at Gallatin Field Airport. said. students and faculty." Malone had just returned as59. Malone had authored nine Malone had arrived at the books and 20 articles during his from Spokane after meeting with rt on a late flight. He then career, and at the time of his officials from Washington State e parking lot in his car and death, he was under contract University who were considering eled a few yards before with Yale University Press for a him for the position of president. ·ng a light post. Efforts by tenth book about western Malone turned it down. citing his ·ersb\ to resuscitate him American history since 1930. commitment to MSU. >d and he was pronounced Under l\lalone's ASMSU president Jared at the scene. Malone was leader hip O\'e r the past nine Harris felt that Malone was one nosed in 1995 with vears. '.\!SU sav. great strides in o f-a-kind. -

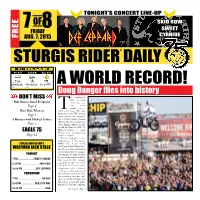

A World Record!

TONight’s CONCERT LINE-UP OF SKID ROW EE 7 8 SWEET FRIDAY CYANIDE FR AUG. 7, 2015 ® STURGIS RIDER DAILY Fri 8/7 Sat 8/8 Sun 8/9 A WORLD RecORD! Doug Danger flies into history he undisputed DON’t Miss king of stunt Bob Hansen Award Recipients men? Sure, cer- Page 4 tain names might come to Rat’s Hole Winners mind at that phrase. But since Tyesterday, at 6:03 PM, the only Page 5 name people are mention- 5 Minutes with Michael Lichter ing is Doug Danger. Because that was the time on the clock Page 3 when Danger jumped 22 cars aboard Evel Knievel’s XR-750 Harley-Davidson, a stunt EAGLE 75 Knievel once attempted but Page 12 failed to complete. The feat took place in the amphitheater at the Sturgis STURGIS BUFFALo Chip’s Buffalo Chip as part of the Evel Knievel Thrill Show. Dan- WOLFMAN JACK STAGE ger, who has been performing motorcycle jumps for decades, TONIGHT was inspired by Knievel when he was young and got to know 7 PM ..................SWEET CYANIDE him later in life. Danger 8:30 PM .....................SKID ROW regarded this stunt not as way to best his hero but as a favor, 10:30 PM ...............DEF LEPPARD completing a task for a friend. Danger is fully cognizant of TOMORROW the potential peril of his cho- sen profession and he’s real- 7 PM ............................ NICNOS istic; he knows firsthand the flip side of a successful jump. 8:30 PM ............... ADELITAS WAY But he felt solid and confident 10:30 PM ..........................WAR Continued on Page 2 PAGE 2 STURGIS RIDER DAILY FRIDAY, AUG. -

Top 40 Singles Top 40 Albums

10 May 1992 CHART #809 Top 40 Singles Top 40 Albums Tears In Heaven Viva Las Vegas Woodface We Can't Dance 1 Eric Clapton 21 ZZ Top 1 Crowded House 21 Genesis Last week - / 1 weeks WARNER Last week 18 / 2 weeks WARNER Last week 1 / 39 weeks Platinum / EMI Last week 24 / 22 weeks Platinum / VIRGIN On A Sunday Afternoon You Showed Me Zz Top Greatest Hits The Very Best Of 2 A Lighter Shade of Brown 22 Salt N Pepa 2 ZZ Top 22 Howard Keel & Harry Secombe Last week 1 / 7 weeks BG Last week 17 / 8 weeks POLYGRAM Last week 8 / 2 weeks WARNER Last week 39 / 2 weeks BMG Under The Bridge America: What Time Is Love? Wish A Trillion Shades Of Happy 3 Red Hot Chili Peppers 23 The KLF 3 The Cure 23 Push Push Last week 8 / 7 weeks WARNER Last week 31 / 3 weeks FESTIVAL Last week - / 1 weeks WARNER Last week 25 / 8 weeks Gold / FESTIVAL Come To Me Justified And Ancient The Commitments OST Nevermind 4 Diesel 24 The KLF 4 Various 24 Nirvana Last week 6 / 9 weeks EMI Last week 16 / 15 weeks Gold / FESTIVAL Last week 2 / 17 weeks Platinum / BMG Last week 17 / 22 weeks Platinum / UNIVERSAL Stay What My Baby Likes Blood Sugar Sex Majik Diamonds And Pearls 5 Shakespears Sister 25 Push Push 5 Red Hot Chili Peppers 25 Prince Last week 5 / 7 weeks POLYGRAM Last week 27 / 8 weeks FESTIVAL Last week 4 / 27 weeks Gold / WARNER Last week 29 / 28 weeks Gold / WARNER Remedy Smells Like Teen Spirit The Singles Lucky Town 6 The Black Crowes 26 Nirvana 6 The Clash 26 Bruce Springsteen Last week 2 / 12 weeks WARNER Last week 26 / 17 weeks Gold / BMG Last week 3 / 8 weeks -

1Ssues• May Stall Pact for Faculty

In Sports I" Section 2 ·An Associated Collegiate Press Four-Star All-American Newspaper Coles soars in The Boss is NCAA slam back with two dunk contest new albums page 85 page 81 Economic 1ssues• may stall pact for faculty By Doug Donovan ltdmindltllitie news Editor He said, she said. So went the latest round of contract negotiations between the faculty and the administration. The faculty's contract negotiating team contends that administrative bargaining tactics have the potential to stall the talks and delay the signing of a new contract. But the administration says the negotiations are moving at a normal pace. Robert Carroll, president of the l~cal chapter of the Association of American University Professors (AAUP), said he was disappointed with the March 27 talk~ because the administrative bargaining team came to the session stating it was "not prepared to discuss economic issues." • "It was an amicable session and a number of issues were discussed at length," said Carroll, a professor in the plant and soil science depanment. "But very little progress was made." However, Maxine R. Colm, leader of the administrative b~rgaining team, said an agreement was reached with the AAUP to THE REVIEW / Lori Barbag pursue non-economic issues of the proposed A delegation from the university was among the 500,000 who attended Sunday's rally for what supporters called "reproductive freedom." contract before economic issues. "We agreed to discuss non-economic issues first and we did precisely that," said Colm, who also serves as the university's vice president for Employee Relations. ' . Colm said that "not prepared" was a Half million rally for abortion rights common phrase used by negotiating parties when they are not going to discuss a certain topic. -

Cash Box Takes a Look at the Artists, Music and Trends That Will Make Their GREGORY S

9 VOL. LV, NO. 36, MAY 2, 1992 STAFF BOX GEORGE ALBERT President and Publisher KEITH ALBERT Vice President/General Manager FRED L GOODMAN Editor In Chief CAMILLE GOMPASIO Director, Coin Machine Operations LEEJESKE New York Editor MARKETING LEON BELL Director, Los Angeles MARK WAGNER Director, Nashville KEN PIOTROWSKI (LA) EDITORIAL INSIDE THE BOX RANDY CLARK, Assoc. Ed. (LA) COVER STORY f BRYAN DeVANEY, Assoc. Ed. (LA) BERNETTA GREEN (New York) New For ’92 STEVE GIUFFRIDA (Nashville) CORY CHESHIRE, Nashville Editor Cash Box takes a look at the artists, music and trends that will make their GREGORY S. COOPER—Gspel,(Na^vfe) CHART RESEARCH marks on the industry this year. From the worlds of pop, rock, r&b, rap, CHERRY URESTI (LA) JIMMY PASCHAL (LA) jazz, country, gospel and others, we provide our readers with a look into RAYMOND BALLARD (LA) MIA TROY (LA) the near future and discover who the next stars will be. There's also a special COREY BELL (LA) JOHN COSSIBOOM (Nash) section on the new technologies that will affect the products and services CHRIS BERKEY (Nash) PRODUCTION that both industry insiders and consumers will be using soon. JIM GONZALEZ Ad Director —see page 11 CIRCULAVON NINATREGUB, Manager CYNTHIA BANTA Madonna + Millions = Maverick PUBLICATION OFFICES Superstar Madonna, her longtime manager Freddy DeMann and Time NEW YORK 157 W.57th Street (Suite 503) New Warner Inc., have formed Maverick, a multi -media entertainment company. York, NY 10019 Phone:(212)586-2640 Maverick will encompass many facets of entertainment including records, Fax: (212) 582-2571 HOLLYWOOD music publishing, film, merchandising and book publishing. -

Egents Get Raise, Enrollment Gets

1"4«k msu Career Fair 92' JDYStery solved page2 easts for pear arlng lovers page 10 musical sport page 13 t night page 13 . TN R H.. -OPO NENT Strand Union Ballrooms were full of activity Thursday as MSU students met with potential employers. Over 100 corporations sent recruiters to talk to students who were interested in post-graduation employment. egents get raise, enrollment gets cut gents vote for pay raise Downsizing is here have supported raises al this time." Regent KenniL Schwancke of Missoula defended the raises, saying thal Montana's universities tagged behind by Chris Junghans red Freedman cenain peer institutions, such as the University of Norlh Exponent staff writer ~nt news writer Dakota, in their pay of dean-level and above employees. He also noted that those employees have the highest turnover rate within the Montana University System (MUS). Raising admission standards would be the fairest way of administer oard ofRegents recently gave itself and high-level He emphasized that deans are pan of that system, as well. ing a Board of Regents' plan to cul 10.49 percent of Montana State's y employees a 3 1(2 percent raise. The Regents This was not the first Lime that Farmer had differences enrollment over the next four years. elena on July 30-31 and gave themselves and all with the Board of Regents. That's what MSU President Mike Malone told the Exponent about s Dean-level and above a pay increase. The raise ''The Board of Regents has no checks and balances the tentative plan 10 cut enrollment at Montana colleges by nearly 4,000 verage increase of 3 1(2 percent, with 1(2 percent whatsoever. -

Ef Eppard in 19 Formation: Ste E Clark, Phil Collen, Oe Lliott, Rick Sa Ae, and Rick Llen

ef eppard in 19 formation: Stee Clark, Phil Collen, oe lliott, Rick Saae, and Rick llen (from left) > Def > 20494_RNRHF_Text_Rev1_22-61.pdf 9 3/11/19 5:23 PM 21333_RNRHF_DefLeppard_30-37_ACG.inddLeppard 30 4/25/19 4:11 PM A THEY FORGED A NEW POP-METAL SOUND WITH MULTIPLATINUM RESULTS BY ROBERT BURKE WARREN issing his bus that autumn day States. Their club-mates: Van Halen, Led Zeppelin, in Sheeld, England, 1977, may Pink Floyd, the Eagles, and the Beatles. But while the have inconvenienced 18-year- band’s journey includes these and many other strato- old wannabe rocker Joe Elliott, spheric highs of rock stardom, it also features some but it set in motion a series of unimaginable lows – legendary dues paying, it seems, events that would lead to his for all those dreams come true. fronting one of the biggest Looking back to the murky beginnings, Elliott re- bands in history: Def Leppard. An undeniable eight- called passing 19-year-old local guitar player Pete ies juggernaut still vital today, Def Leppard stand as Willis on that unplanned walk home from the bus one of a handful of rock bands to reach the RIAA’s stop. It was, he said, “a sliding-doors moment.” Elliott “Double Diamond” status, i.e., ten million units of had just taken up guitar and asked if Willis’ new Mtwo or more original studio albums sold in the United metal band, Atomic Mass – which included drummer 21333_RNRHF_DefLeppard_30-37_ACG.indd 31 4/25/19 4:11 PM Pete Willis, Allen, Elliott, Clark, and Savage (clockwise from bottom), before a gig at Atlanta’s Fox Theatre, 1981 20494_RNRHF_Text_Rev1_22-61.pdf 11 3/11/19 5:23 PM Above: Elliott surprised by Union Jack-ed fans, c. -

DB-Discography-Albums

David Bates Discography – Albums Artist Album Title Released UK USA AUS CAN GER FRA NZ Awards DB Role Paul Carrack Nightbird 1979 - - Signed A&R Blitz Brothers Deerfrance (The Rose Tattoo) 1979 Signed A&R Huey Lewis American Exo Disco 1979 Signed A&R Express Blitz Brothers Gloria 1979 Discovered Signed A&R Dalek I Love You Compass Kumpass 1980 - - Signed A&R Def Leppard On Through The Night 1980 15 US Plat- Can Plat Signed A&R Teardrop Explodes Kilimanjaro 1980 24 156 25 UK Silver Signed A&R Dire Straits Making Movies 1980 4 19 6 UK 2 x Plat, US Plat, Ger - Gold - France - Gold A&R Teardrop Explodes Wilder 1981 29 196 19 UK Silver Signed A&R Def Leppard High n Dry 1981 26 38 US 2X Pla - Can Plat Discovered Signed Bill Nelson Quit Dreaming Get On The 1981 Signed Beam Bill Nelson Sounding The Ritual 1981 Signed Bill Nelson The Love That Whirls 1982 Signed Monsoon Third Eye 1983 Discovered Signed A&R Tears For Fears The Hurting 1983 1 73 15 7 15 16 30 UK Plat - US Gold - Can Plat - Fra Gold Discovered Signed A&R Bill Nelson Chimera 1983 Signed Def Leppard Pyromania 1983 18 2 70 26 UK Silver - US 15X Plat - Can 7x Plat - Fra Gold Discovered Signed Julian Cope World Shut Your Mouth 1984 40 Signed A&R Julian Cope Fried 1984 87 Signed A&R Tears For Fears Songs From The Big Chair 1985 2 1 5 1 1 12 2 UK 3 X plat - US 5 X Plat - Can 7 X Plat Discovered Signed A&R Aus Plat - Ger Gold - Fra - Gold - NZ Plat Green On Red No Free Lunch 1985 95 Signed A&R Hipsway Hipsway 1986 42 55 UK Goldr Signed Status Quo In The Army Now 1986 7 4 16 UK Gold A&R Wet -

DEF LEPPARD Hysteria (Hard Rock)

DEF LEPPARD Hysteria (Hard Rock) Année de sortie : 1987 Nombre de pistes : 12 Durée : 62' Support : Vinyl Provenance : Acheté Nous avons décidé de vous faire découvrir (ou redécouvrir) les albums qui ont marqué une époque et qui nous paraissent importants pour comprendre l'évolution de notre style préféré. Nous traiterons de l'album en le réintégrant dans son contexte originel (anecdotes, etc.)... Une chronique qui se veut 100% "passionnée" et "nostalgique" et qui nous l'espérons, vous fera réagir par le biais des commentaires ! ...... Bon voyage ! En 1987, le Hard-Rock mélodique est à son apogée ! L'année que choisit le groupe anglais DEF LEPPARD pour sortir l'album de sa consécration mondiale, le bien nommé et exceptionnel Hysteria ! DEF LEPPARD, formé à la toute fin 70' sort son quatrième album, fort d'un travail acharné avec Robert John Mutt LANGE, déjà auteur de prouesses avec AC/DC entre autre ! Ce dernier a repéré le groupe début 80' et travaille avec DEF LEPPARD dès le deuxième opus High'n'Dry dont le single Bringin' On The Heartbreak propulse le groupe sous les projecteurs en 1981 ! Pyromania voit le jour en 1983 et est considéré par les puristes comme le chef-d’œuvre de DEF LEPPARD ! Je le pense aussi ! Cela dit, c'est avec Hysteria que DEF LEPPARD connaît la consécration ! Alors oui, Hysteria sonne différemment, est plus commerciale aussi dans un certain sens ! Le son de batterie est à mon sens la raison principale de changement car Rick ALLEN joue sur une batterie électronique adapté pour lui ! Pour mémoire, le 31 Décembre -

The Wooster Voice Sports Page 9 MEN's TENNIS TRACK AFIELD Fernandez Gomes from Behind 'We're at the Most in Third Set to Ace Oberlin Important Juncture

The College of Wooster Open Works The oV ice: 1991-2000 "The oV ice" Student Newspaper Collection 4-24-1992 The oW oster Voice (Wooster, OH), 1992-04-24 Wooster Voice Editors Follow this and additional works at: https://openworks.wooster.edu/voice1991-2000 Recommended Citation Editors, Wooster Voice, "The oosW ter Voice (Wooster, OH), 1992-04-24" (1992). The Voice: 1991-2000. 40. https://openworks.wooster.edu/voice1991-2000/40 This Book is brought to you for free and open access by the "The oV ice" Student Newspaper Collection at Open Works, a service of The oC llege of Wooster Libraries. It has been accepted for inclusion in The oV ice: 1991-2000 by an authorized administrator of Open Works. For more information, please contact [email protected]. VnLCVnLIgue26 ; THE COLLEGE OF WOOSTER 1HE WOOSTER VOICE April 24, 1992 Wooster. Ohio 44691 Off-Camp-us Residents Given Reprieve x MELISSA LAKE way there is sail choice lbr cn-camp- us Voice Staff Writer .students, and there is also a gradual CoOege of Wooster students who decrease,' said Marc Smith 93, who off-camp- currently reside in us bousing has met with Penney on the issue. wiQ be givraautoroatk: approval forthe One hundred and seven students had 1992-9- 3 academic year, according to been given perrnissicxi to live off-campu- s, SqphieFmoey, Associate Dean of Stu- as of Wednesday, according to dents. Penney. Restrictions do apply, however, as This includes 86 students who have off-camp- kn-rneKsubmkthdroffar- eligible us ffoiTf most npusap- already submitted their applications, 14 off-carnp- current us residents who need to submit their applications, and seven Director of Housing, if they have not tnirVnr mfm am MjyfJfii tn he re- already done so. -

Sound to Sense, Sense to Sound a State of the Art in Sound and Music Computing

Sound to Sense, Sense to Sound A State of the Art in Sound and Music Computing Pietro Polotti and Davide Rocchesso, editors Pietro Polotti and Davide Rocchesso (Editors) Dipartimento delle Arti e del Disegno Industriale Universita` IUAV di Venezia Dorsoduro 2206 30123 Venezia, Italia This book has been developed within the Coordination Action S2S2 (Sound to Sense, Sense to Sound) of the 6th Framework Programme - IST Future and Emerging Technologies: http://www.soundandmusiccomputing.org/ The Deutsche Nationalbibliothek lists this publication in the Deutsche Nationalbibliografie; detailed bibliographic data are available in the Internet at http://dnb.d-nb.de. ©Copyright Logos Verlag Berlin GmbH 2008 All rights reserved. ISBN 978-3-8325-1600-0 Logos Verlag Berlin GmbH Comeniushof, Gubener Str. 47, 10243 Berlin Tel.: +49 (0)30 42 85 10 90 Fax: +49 (0)30 42 85 10 92 INTERNET: http://www.logos-verlag.de Contents Introduction 11 1 Sound, Sense and Music Mediation 15 1.1 Introduction .............................. 16 1.2 From music philosophy to music science .............. 16 1.3 The cognitive approach ........................ 20 1.3.1 Psychoacoustics ........................ 20 1.3.2 Gestalt psychology ...................... 21 1.3.3 Information theory ...................... 21 1.3.4 Phenomenology ........................ 22 1.3.5 Symbol-based modelling of cognition ........... 23 1.3.6 Subsymbol-based modelling of cognition ......... 23 1.4 Beyond cognition ........................... 24 1.4.1 Subjectivism and postmodern musicology ........ 24 1.4.2 Embodied music cognition ................. 25 1.4.3 Music and emotions ..................... 28 1.4.4 Gesture modelling ...................... 29 4 CONTENTS 1.4.5 Physical modelling ...................... 30 1.4.6 Motor theory of perception ................ -

PDF of This Issue

MfT'S The Weathern Oldest and Largest Today: Partly cloudy, 65°F (I 7°C) Tonight: Increasing clouds, 44°F (7°C) Newspaper Tomorrow: Early showers, 58°F (14'C) Details, Page 2 Volume II 2, Number 24 Cambridge, Massachusetts 02139 Friday, May 1, 1992 Clay Appointed Head Of Urban Studies I By Sarah Y. Keightley department hopes to emphasize ASSOCIA TE NERWS EDITOR more undergraduate teaching. The Professor Phillip L. Clay PhD department is working on "attractive '75 was recently named head of the plans" to be announced in the fall, Department of Urban Studies and involving classes where students Planning. His appointment will take will apply scientific knowledge to effect July 1. social problems, Clay said. Jean P. De Monchaux, dean of With the new plans, international the School of Architecture and students from developing areas Planning, appointed Clay to replace could be given policy perspectives Donald A. Schon, the current to make their knowledge useful at department head. The department home and in international organiza- head serves a four-year term. tions, Clay said. On the domestic Clay has been the associate head side, issues like transportation and of the urban studies and planning environmental policy may be incor- department for two years. porated into classes. Schon said, "I think it's a splen- Clay said there is a "similar did appointment. Phil will be a first- interest on the graduate side." class department head." Schon also Though the programs are currently said that Clay has a natural talent in good shape, the department hopes for administration.