The Mathematics of Quantum Mechanics

Total Pages: 16

File Type: PDF, Size: 1020 Kb


Recommended publications


Glossary Physics (I-Introduction)

Efficiency: The percentage of the work put into a machine that is converted into useful work output; $\eta$ = work done / energy used [-]. In machines, the work output cannot exceed the work input ($\eta \le 100\%$); in an ideal machine, where no energy is transformed into heat, work(input) = work(output) and $\eta = 100\%$.

Energy: The property of a system that enables it to do work.

Conservation of E.: Energy cannot be created or destroyed; it may be transformed from one form into another, but the total amount of energy never changes.

Equilibrium: The state of an object when not acted upon by a net force or net torque; an object in equilibrium may be at rest or moving at uniform velocity, that is, not accelerating.

Mechanical E.: The state of an object or system of objects for which all impressed forces cancel to zero and no acceleration occurs.

Dynamic E.: The object is moving without experiencing acceleration.

Static E.: The object is at rest.

Force: The influence that can cause an object to be accelerated or retarded; the acceleration is always in the direction of the net force, hence force is a vector quantity. The four elementary forces are:

Electromagnetic F.: An attraction or repulsion between electric charges: $F = \frac{1}{4\pi\varepsilon_0}\,\frac{q_1 q_2}{d^2}$, with units [(C·C/m²)(N·m²/C²)] = [N].

Gravitational F.: A mutual attraction between all masses: $F = G\,\frac{mM}{d^2}$, with units [(N·m²/kg²)(kg·kg)(1/m²)] = [N].

Strong F.: (nuclear force) Acts within the nuclei of atoms: $F = \frac{1}{4\pi\varepsilon_0}\,\frac{e^2}{d^2}$, with units [(C·C/m²)(N·m²/C²)] = [N].

Weak F.: Manifests itself in special reactions among elementary particles, such as the reactions that occur in radioactive decay.

Symbols: $G$, gravitational constant, 6.672E-11 [N·m²/kg²]; $d$, distance [m]; $m$, $M$, mass [kg]; $q$, charge [As] = [C]; $\varepsilon_0$, dielectric constant, 8.854E-12 [C²/(N·m²)] = [F/m]; $\pi$, 3.14 [-]; $e$, 1.60210E-19 [As] = [C].
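The electromagnetic and gravitational force laws quoted in the glossary can be evaluated directly. The following is a minimal sketch that uses the constants listed in the glossary; the function names are illustrative assumptions, not part of the glossary.

```python
import math

# Constants as listed in the glossary.
G = 6.672e-11           # gravitational constant [N m^2 / kg^2]
EPSILON_0 = 8.854e-12   # dielectric constant [C^2 / (N m^2)]
E_CHARGE = 1.60210e-19  # elementary charge [C]


def electromagnetic_force(q1, q2, d):
    """F = 1/(4 pi eps0) * q1 q2 / d^2 in newtons (positive means repulsion)."""
    return q1 * q2 / (4 * math.pi * EPSILON_0 * d**2)


def gravitational_force(m, M, d):
    """F = G m M / d^2, the magnitude of the attraction in newtons."""
    return G * m * M / d**2
```

As a usage example with assumed values that are not in the glossary (electron mass 9.109e-31 kg, proton mass 1.673e-27 kg, separation 5.29e-11 m), `electromagnetic_force(E_CHARGE, E_CHARGE, 5.29e-11)` exceeds `gravitational_force(9.109e-31, 1.673e-27, 5.29e-11)` by roughly 39 orders of magnitude.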

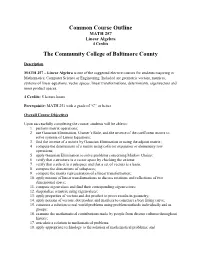

Common Course Outline MATH 257 Linear Algebra 4 Credits

The Community College of Baltimore County

Description

MATH 257 – Linear Algebra is one of the suggested elective courses for students majoring in Mathematics, Computer Science or Engineering. Included are geometric vectors, matrices, systems of linear equations, vector spaces, linear transformations, determinants, eigenvectors and inner product spaces.

4 Credits: 5 lecture hours

Prerequisite: MATH 251 with a grade of “C” or better

Overall Course Objectives

Upon successfully completing the course, students will be able to:

1. perform matrix operations;
2. use Gaussian Elimination, Cramer’s Rule, and the inverse of the coefficient matrix to solve systems of Linear Equations;
3. find the inverse of a matrix by Gaussian Elimination or using the adjoint matrix;
4. compute the determinant of a matrix using cofactor expansion or elementary row operations;
5. apply Gaussian Elimination to solve problems concerning Markov Chains;
6. verify that a structure is a vector space by checking the axioms;
7. verify that a subset is a subspace and that a set of vectors is a basis;
8. compute the dimensions of subspaces;
9. compute the matrix representation of a linear transformation;
10. apply notions of linear transformations to discuss rotations and reflections of two dimensional space;
11. compute eigenvalues and find their corresponding eigenvectors;
12. diagonalize a matrix using eigenvalues;
13. apply properties of vectors and dot product to prove results in geometry;
14. apply notions of vectors, dot product and matrices to construct a best fitting curve;
15. construct a solution to real world problems using problem methods individually and in groups;
16. ...

Path Integrals in Quantum Mechanics

Dennis V. Perepelitsa, MIT Department of Physics, 70 Amherst Ave., Cambridge, MA 02142

Abstract

We present the path integral formulation of quantum mechanics and demonstrate its equivalence to the Schrödinger picture. We apply the method to the free particle and quantum harmonic oscillator, investigate the Euclidean path integral, and discuss other applications.

1 Introduction

A fundamental question in quantum mechanics is: how does the state of a particle evolve with time? That is, the determination of the time-evolution $|\psi(t)\rangle$ of some initial state $|\psi(t_0)\rangle$. Quantum mechanics is fully predictive [3] in the sense that initial conditions and knowledge of the potential occupied by the particle are enough to fully specify the state of the particle for all future times. (Footnote 1: In the analysis below, we consider only the position of a particle, and not any other quantum property such as spin.)

In the early twentieth century, Erwin Schrödinger derived an equation that specifies how the instantaneous change in the wavefunction $\frac{d}{dt}|\psi(t)\rangle$ depends on the system inhabited by the state, in the form of the Hamiltonian. In this formulation, the eigenstates of the Hamiltonian play an important role, since their time-evolution is easy to calculate (i.e. they are stationary). A well-established method of solution, after the entire eigenspectrum of $\hat{H}$ is known, is to decompose the initial state into this eigenbasis, apply time evolution to each component and then reassemble the eigenstates. That is,

$$|\psi(t)\rangle = \sum_{n=0}^{\infty} \exp\left[-iE_n t/\hbar\right] \langle n|\psi(t_0)\rangle\, |n\rangle \qquad (1)$$

This (Hamiltonian) formulation works in many cases.
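The decompose, evolve and reassemble procedure of equation (1) can be sketched for a finite-dimensional system. This is a minimal illustration, not the paper's code: the function name `evolve`, the choice $\hbar = 1$, and the two-level Hamiltonian are all assumptions, and the eigenstates are assumed to be supplied already orthonormal.

```python
import cmath
import math


def evolve(energies, eigenstates, psi0, t, hbar=1.0):
    """Return |psi(t)> = sum_n exp(-i E_n t / hbar) <n|psi0> |n>."""
    psi_t = [0j] * len(psi0)
    for E_n, ket_n in zip(energies, eigenstates):
        # Overlap <n|psi0>: the bra components are complex-conjugated.
        overlap = sum(complex(a).conjugate() * b for a, b in zip(ket_n, psi0))
        phase = cmath.exp(-1j * E_n * t / hbar)
        for k, component in enumerate(ket_n):
            psi_t[k] += phase * overlap * component
    return psi_t


# Hypothetical two-level system H = [[0, 1], [1, 0]] in units where hbar = 1.
s = 1 / math.sqrt(2)
energies = [1.0, -1.0]
eigenstates = [[s, s], [s, -s]]
psi_t = evolve(energies, eigenstates, [1.0, 0.0], t=math.pi / 2)
```

For this assumed Hamiltonian the exact solution is $(\cos t, -i\sin t)$, so at $t = \pi/2$ the state has moved entirely into the second basis vector, up to a phase.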

Unit 1 Old Quantum Theory

UNIT 1 OLD QUANTUM THEORY

Structure

Introduction
Objectives
Discovery of Sub-atomic Particles
Earlier Atom Models
Light as Electromagnetic Wave
Failures of Classical Physics
Black Body Radiation
Heat Capacity Variation
Photoelectric Effect
Atomic Spectra
Planck's Quantum Theory, Black Body Radiation and Heat Capacity Variation
Einstein's Theory of Photoelectric Effect
Bohr Atom Model
Calculation of Radius of Orbits
Energy of an Electron in an Orbit
Atomic Spectra and Bohr's Theory
Critical Analysis of Bohr's Theory
Refinements in the Atomic Spectra Theory
Summary
Terminal Questions
Answers

1.1 INTRODUCTION

The ideas of classical mechanics developed by Galileo, Kepler and Newton, when applied to atomic and molecular systems, were found to be inadequate. A need was felt for a theory to describe, correlate and predict the behaviour of sub-atomic particles. The quantum theory, proposed by Max Planck and applied by Einstein and Bohr to explain different aspects of the behaviour of matter, is an important milestone in the formulation of the modern concept of the atom. In this unit, we will study how black body radiation, heat capacity variation, the photoelectric effect and the atomic spectra of hydrogen can be explained on the basis of theories proposed by Max Planck, Einstein and Bohr. They based their theories on the postulate that all interactions between matter and radiation occur in terms of definite packets of energy, known as quanta. Their ideas, when extended further, led to the evolution of wave mechanics, which shows the dual nature of matter ...

1 Postulate (QM4): Quantum Measurements

Part IIC, Lent term 2019-2020. QUANTUM INFORMATION & COMPUTATION. Nilanjana Datta, DAMTP Cambridge

An isolated (closed) quantum system has a unitary evolution. However, when an experiment is done to find out the properties of the system, there is an interaction between the system and the experimentalists and their equipment (i.e., the external physical world). So the system is no longer closed and its evolution is not necessarily unitary. The following postulate provides a means of describing the effects of a measurement on a quantum-mechanical system.

In classical physics the state of any given physical system can always in principle be fully determined by suitable measurements on a single copy of the system, while leaving the original state intact. In quantum theory the corresponding situation is bizarrely different: quantum measurements generally have only probabilistic outcomes, they are "invasive", generally unavoidably corrupting the input state, and they reveal only a rather small amount of information about the (now irrevocably corrupted) input state identity. Furthermore the (probabilistic) change of state in a quantum measurement is (unlike normal time evolution) not a unitary process. Here we outline the associated mathematical formalism, which is, at least, easy to apply.

(QM4) Quantum measurements and the Born rule. In Quantum Mechanics one measures an observable, i.e. a self-adjoint operator. Let $A$ be an observable acting on the state space $V$ of a quantum system. Since $A$ is self-adjoint, its eigenvalues are real. Let its spectral decomposition be given by $A = \sum_n a_n P_n$, where $\{a_n\}$ denotes the set of eigenvalues of $A$ and $P_n$ denotes the orthogonal projection onto the subspace of $V$ spanned by the eigenvectors of $A$ corresponding to the eigenvalue $a_n$.
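The spectral projections $P_n$ can be built explicitly in finite dimensions. The sketch below is an illustration under stated assumptions: it takes orthonormal eigenvectors as given, groups them by exactly equal eigenvalue, and uses the standard form of the Born rule, $p(a_n) = \langle\psi|P_n|\psi\rangle$, which the excerpt names but does not write out. All function names are hypothetical.

```python
import math
from collections import defaultdict


def spectral_projectors(eigenpairs):
    """Map each eigenvalue a_n to P_n = sum of |v><v| over its orthonormal eigenvectors."""
    dim = len(eigenpairs[0][1])
    projectors = defaultdict(lambda: [[0j] * dim for _ in range(dim)])
    for a_n, v in eigenpairs:
        P = projectors[a_n]  # eigenvalues are matched by exact equality (an assumption)
        for i in range(dim):
            for j in range(dim):
                P[i][j] += v[i] * complex(v[j]).conjugate()
    return dict(projectors)


def born_probability(P, psi):
    """Return <psi|P|psi> for a normalised state psi."""
    dim = len(psi)
    P_psi = [sum(P[i][j] * psi[j] for j in range(dim)) for i in range(dim)]
    return sum(complex(psi[i]).conjugate() * P_psi[i] for i in range(dim)).real


# Illustrative observable: the Pauli X matrix, with eigenvalues +1 and -1.
s = 1 / math.sqrt(2)
projectors = spectral_projectors([(1.0, [s, s]), (-1.0, [s, -s])])
psi = [0.6, 0.8]
probabilities = {a_n: born_probability(P, psi) for a_n, P in projectors.items()}
```

For this assumed state the two probabilities are 0.98 and 0.02, and they sum to one because the projectors sum to the identity.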

Quantum Circuits with Mixed States Is Polynomially Equivalent in Computational Power to the Standard Unitary Model

Dorit Aharonov, Alexei Kitaev, Noam Nisan. February 1, 2008. arXiv:quant-ph/9806029v1, 8 Jun 1998

Abstract

Current formal models for quantum computation deal only with unitary gates operating on “pure quantum states”. In these models it is difficult or impossible to deal formally with several central issues: measurements in the middle of the computation, decoherence and noise, using probabilistic subroutines, and more. It turns out that the restriction to unitary gates and pure states is unnecessary. In this paper we generalize the formal model of quantum circuits to a model in which the state can be a general quantum state, namely a mixed state, or a “density matrix”, and the gates can be general quantum operations, not necessarily unitary. The new model is shown to be equivalent in computational power to the standard one, and the problems mentioned above essentially disappear.

The main result in this paper is a solution for the subroutine problem. The general function that a quantum circuit outputs is a probabilistic function. However, the question of using probabilistic functions as subroutines was not previously dealt with, the reason being that in the language of pure states, this simply cannot be done. We define a natural notion of using general subroutines, and show that using general subroutines does not strengthen the model.

As an example of the advantages of analyzing quantum complexity using density matrices, we prove a simple lower bound on depth of circuits that compute probabilistic functions. Finally, we deal with the question of inaccurate quantum computation with mixed states.

21. Orthonormal Bases

The canonical/standard basis

$$e_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}, \quad e_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}, \quad \ldots, \quad e_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$$

has many useful properties.

• Each of the standard basis vectors has unit length: $\|e_i\| = \sqrt{e_i \cdot e_i} = \sqrt{e_i^T e_i} = 1$.

• The standard basis vectors are orthogonal (in other words, at right angles or perpendicular): $e_i \cdot e_j = e_i^T e_j = 0$ when $i \neq j$.

This is summarized by

$$e_i^T e_j = \delta_{ij} = \begin{cases} 1 & i = j \\ 0 & i \neq j \end{cases},$$

where $\delta_{ij}$ is the Kronecker delta. Notice that the Kronecker delta gives the entries of the identity matrix.

Given column vectors $v$ and $w$, we have seen that the dot product $v \cdot w$ is the same as the matrix multiplication $v^T w$. This is the inner product on $\mathbb{R}^n$. We can also form the outer product $v w^T$, which gives a square matrix.

The outer product on the standard basis vectors is interesting. Set

$$\Pi_1 = e_1 e_1^T = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix} \begin{pmatrix} 1 & 0 & \cdots & 0 \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}$$

$$\vdots$$

$$\Pi_n = e_n e_n^T = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix} \begin{pmatrix} 0 & 0 & \cdots & 1 \end{pmatrix} = \begin{pmatrix} 0 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & & & \vdots \\ 0 & 0 & \cdots & 1 \end{pmatrix}$$

In short, $\Pi_i$ is the diagonal square matrix with a 1 in the $i$th diagonal position and zeros everywhere else.
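The inner and outer products on the standard basis can be checked with a few lines of code. This is a small sketch in plain Python; the helper names and the choice $n = 3$ are illustrative assumptions. It also checks one fact not stated in the excerpt, namely that the matrices $\Pi_i$ add up to the identity.

```python
def standard_basis(n):
    """Return e_1, ..., e_n as lists of length n."""
    return [[1 if row == i else 0 for row in range(n)] for i in range(n)]


def inner(v, w):
    """Inner product v^T w, a single number."""
    return sum(a * b for a, b in zip(v, w))


def outer(v, w):
    """Outer product v w^T, a square matrix stored as a list of rows."""
    return [[a * b for b in w] for a in v]


n = 3
basis = standard_basis(n)

# Entries e_i^T e_j reproduce the Kronecker delta, i.e. the identity matrix.
gram = [[inner(e_i, e_j) for e_j in basis] for e_i in basis]

# Pi_i = e_i e_i^T has a single 1 in the i-th diagonal position.
projectors = [outer(e, e) for e in basis]

# Adding all the Pi_i entry by entry gives the identity matrix.
total = [[sum(P[r][c] for P in projectors) for c in range(n)] for r in range(n)]
```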

Quantum Field Theory*

Frank Wilczek, Institute for Advanced Study, School of Natural Science, Olden Lane, Princeton, NJ 08540

I discuss the general principles underlying quantum field theory, and attempt to identify its most profound consequences. The deepest of these consequences result from the infinite number of degrees of freedom invoked to implement locality. I mention a few of its most striking successes, both achieved and prospective. Possible limitations of quantum field theory are viewed in the light of its history.

I. SURVEY

Quantum field theory is the framework in which the regnant theories of the electroweak and strong interactions, which together form the Standard Model, are formulated. Quantum electrodynamics (QED), besides providing a complete foundation for atomic physics and chemistry, has supported calculations of physical quantities with unparalleled precision. The experimentally measured value of the magnetic dipole moment of the muon,

$$(g - 2)_{\text{exp.}} = 233\,184\,600\ (1680) \times 10^{-11}, \qquad (1)$$

for example, should be compared with the theoretical prediction

$$(g - 2)_{\text{theor.}} = 233\,183\,478\ (308) \times 10^{-11}. \qquad (2)$$

In quantum chromodynamics (QCD) we cannot, for the foreseeable future, aspire to comparable accuracy. Yet QCD provides different, and at least equally impressive, evidence for the validity of the basic principles of quantum field theory. Indeed, because in QCD the interactions are stronger, QCD manifests a wider variety of phenomena characteristic of quantum field theory. These include especially running of the effective coupling with distance or energy scale and the phenomenon of confinement.

18.102 Introduction to Functional Analysis Spring 2009

MIT OpenCourseWare, http://ocw.mit.edu. For information about citing these materials or our Terms of Use, visit: http://ocw.mit.edu/terms.

Lecture 19. Thursday, April 16

I am heading towards the spectral theory of self-adjoint compact operators. This is rather similar to the spectral theory of self-adjoint matrices and has many useful applications. There is a very effective spectral theory of general bounded but self-adjoint operators but I do not expect to have time to do this. There is also a pretty satisfactory spectral theory of non-selfadjoint compact operators, which it is more likely I will get to. There is no satisfactory spectral theory for general non-compact and non-self-adjoint operators, as you can easily see from examples (such as the shift operator).

In some sense compact operators are ‘small’ and rather like finite rank operators. If you accept this, then you will want to say that an operator such as

$$\mathrm{Id} - K, \quad K \in \mathcal{K}(H) \qquad (19.1)$$

is ‘big’. We are quite interested in this operator because of spectral theory. To say that $\lambda \in \mathbb{C}$ is an eigenvalue of $K$ is to say that there is a non-trivial solution of

$$Ku - \lambda u = 0 \qquad (19.2)$$

where non-trivial means other than the solution $u = 0$, which always exists. If $\lambda \neq 0$ we can divide by $\lambda$ and we are looking for solutions of

$$(\mathrm{Id} - \lambda^{-1} K)u = 0 \qquad (19.3)$$

which is just (19.1) for another compact operator, namely $\lambda^{-1} K$.

What are properties of $\mathrm{Id} - K$ which might show it to be ‘big’? Here are three:

Proposition 26. ...

Curl, Divergence and Laplacian

What to know:

1. The definition of curl and its two properties, that is, theorem 1, and be able to predict qualitatively how the curl of a vector field behaves from a picture.
2. The definition of divergence and its two properties, that is, if $\operatorname{div} \vec{F} \neq 0$ then $\vec{F}$ can't be written as the curl of another field, and be able to tell a vector field of clearly nonzero, positive or negative divergence from the picture.
3. Know the definition of the Laplace operator.
4. Know what kind of objects these operators take as input and what they give as output.

The curl operator

Let's look at two plots of vector fields:

[Figures 1 and 2: plots of the vector fields $\langle -y, x, 0 \rangle$ and $\langle 1, 1, 0 \rangle$]

We can observe that the field $\langle -y, x, 0 \rangle$ looks like it is rotating around the z axis. We'd like to be able to predict this kind of behavior without having to look at a picture. We also promised to find a criterion that checks whether a vector field is conservative in $\mathbb{R}^3$. Both of those goals are accomplished using a tool called the curl operator, even though neither of those two properties is exactly obvious from the definition we'll give.

Definition 1. Let $\vec{F} = \langle P, Q, R \rangle$ be a vector field in $\mathbb{R}^3$, where $P$, $Q$ and $R$ are continuously differentiable. We define the curl operator:

$$\operatorname{curl} \vec{F} = \left( \frac{\partial R}{\partial y} - \frac{\partial Q}{\partial z} \right) \vec{i} + \left( \frac{\partial P}{\partial z} - \frac{\partial R}{\partial x} \right) \vec{j} + \left( \frac{\partial Q}{\partial x} - \frac{\partial P}{\partial y} \right) \vec{k}. \qquad (1)$$

Remarks:

1. ...
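Definition 1 can be tried out numerically on the two fields from the plots. The sketch below approximates each partial derivative with a central difference; this numerical approach, the function name `curl`, the step size `h` and the sample point are all assumptions made for illustration, not part of the notes.

```python
def curl(F, point, h=1e-5):
    """Approximate curl F at `point`, where F(x, y, z) returns (P, Q, R)."""

    def partial(component, axis):
        # Central difference of one component along one coordinate axis.
        forward = list(point)
        backward = list(point)
        forward[axis] += h
        backward[axis] -= h
        return (F(*forward)[component] - F(*backward)[component]) / (2 * h)

    P, Q, R = 0, 1, 2  # indices of the components of F
    X, Y, Z = 0, 1, 2  # indices of the coordinate axes
    return (
        partial(R, Y) - partial(Q, Z),  # i component
        partial(P, Z) - partial(R, X),  # j component
        partial(Q, X) - partial(P, Y),  # k component
    )


rotating = curl(lambda x, y, z: (-y, x, 0.0), (1.0, 2.0, 3.0))
constant = curl(lambda x, y, z: (1.0, 1.0, 0.0), (1.0, 2.0, 3.0))
```

The rotating field has curl close to $\langle 0, 0, 2 \rangle$, pointing along the z axis, while the constant field has zero curl, which matches what the pictures suggest.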

Determinants Math 122 Calculus III D Joyce, Fall 2012

What they are. A determinant is a value associated to a square array of numbers, that square array being called a square matrix. For example, here are determinants of a general 2 × 2 matrix and a general 3 × 3 matrix.

$$\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$

$$\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - afh - bdi.$$

The determinant of a matrix $A$ is usually denoted $|A|$ or $\det(A)$.

You can think of the rows of the determinant as being vectors. For the 3 × 3 matrix above, the vectors are $u = (a, b, c)$, $v = (d, e, f)$, and $w = (g, h, i)$. Then the determinant is a value associated to $n$ vectors in $\mathbf{R}^n$.

There's a general definition for n × n determinants. It's a particular signed sum of products of n entries in the matrix where each product is of one entry in each row and column. The two ways you can choose one entry in each row and column of the 2 × 2 matrix give you the two products $ad$ and $bc$. There are six ways of choosing one entry in each row and column in a 3 × 3 matrix, and generally, there are $n!$ ways in an n × n matrix. Thus, the determinant of a 4 × 4 matrix is the signed sum of 24, which is 4!, terms. In this general definition, half the terms are taken positively and half negatively. In class, we briefly saw how the signs are determined by permutations.
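The general definition can be written out as a signed sum over permutations. The following sketch assumes the standard sign rule (positive when the permutation has an even number of inversions, negative otherwise), which the excerpt mentions but does not spell out; the function names are illustrative. It enumerates all $n!$ terms, so it is only practical for small matrices.

```python
from itertools import permutations
from math import prod


def permutation_sign(perm):
    """Return +1 for an even number of inversions and -1 for an odd number."""
    inversions = sum(
        1
        for i in range(len(perm))
        for j in range(i + 1, len(perm))
        if perm[i] > perm[j]
    )
    return -1 if inversions % 2 else 1


def determinant(matrix):
    """Signed sum over all n! ways to pick one entry from each row and column."""
    n = len(matrix)
    return sum(
        permutation_sign(p) * prod(matrix[row][p[row]] for row in range(n))
        for p in permutations(range(n))
    )
```

For instance, `determinant([[1, 2], [3, 4]])` gives $1 \cdot 4 - 2 \cdot 3 = -2$, in agreement with $ad - bc$.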

28. Exterior Powers

28.1 Desiderata
28.2 Definitions, uniqueness, existence
28.3 Some elementary facts
28.4 Exterior powers $\bigwedge^i f$ of maps
28.5 Exterior powers of free modules
28.6 Determinants revisited
28.7 Minors of matrices
28.8 Uniqueness in the structure theorem
28.9 Cartan's lemma
28.10 Cayley-Hamilton Theorem
28.11 Worked examples

While many of the arguments here have analogues for tensor products, it is worthwhile to repeat these arguments with the relevant variations, both for practice, and to be sensitive to the differences.

1. Desiderata

Again, we review missing items in our development of linear algebra.

We are missing a development of determinants of matrices whose entries may be in commutative rings, rather than fields. We would like an intrinsic definition of determinants of endomorphisms, rather than one that depends upon a choice of coordinates, even if we eventually prove that the determinant is independent of the coordinates. We anticipate that Artin's axiomatization of determinants of matrices should be mirrored in much of what we do here.

We want a direct and natural proof of the Cayley-Hamilton theorem. Linear algebra over fields is insufficient, since the introduction of the indeterminate $x$ in the definition of the characteristic polynomial takes us outside the class of vector spaces over fields.

We want to give a conceptual proof for the uniqueness part of the structure theorem for finitely-generated modules over principal ideal domains. Multi-linear algebra over fields is surely insufficient for this.

2. Definitions, uniqueness, existence

Let $R$ be a commutative ring with 1.