Big Numbers, Tom Davis, September 21, 2011

Recommended publications

Large but Finite

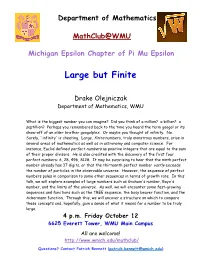

Department of Mathematics, MathClub@WMU, Michigan Epsilon Chapter of Pi Mu Epsilon. Large but Finite. Drake Olejniczak, Department of Mathematics, WMU.

What is the biggest number you can imagine? Did you think of a million? A billion? A septillion? Perhaps you remembered back to the time you heard the term googol or its show-off of an older brother, googolplex. Or maybe you thought of infinity. No. Surely, 'infinity' is cheating. Large, finite numbers, truly monstrous numbers, arise in several areas of mathematics as well as in astronomy and computer science. For instance, Euclid defined perfect numbers as positive integers that are equal to the sum of their proper divisors. He is also credited with the discovery of the first four perfect numbers: 6, 28, 496, 8128. It may be surprising to hear that the ninth perfect number already has 37 digits, or that the thirteenth perfect number vastly exceeds the number of particles in the observable universe. However, the sequence of perfect numbers pales in comparison to some other sequences in terms of growth rate. In this talk, we will explore examples of large numbers such as Graham's number, Rayo's number, and the limits of the universe. We will also encounter some fast-growing sequences and functions such as the TREE sequence, the busy beaver function, and the Ackermann function. Through this, we will uncover a structure on which to compare these concepts and, hopefully, gain a sense of what it means for a number to be truly large.

4 p.m., Friday, October 12, 6625 Everett Tower, WMU Main Campus. All are welcome! http://www.wmich.edu/mathclub/ Questions? Contact Patrick Bennett.
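The definition of a perfect number quoted in the abstract can be checked directly. The sketch below is only an illustration, not part of the talk: the function names are hypothetical, and the Mersenne exponents 61 and 521 used for the ninth and thirteenth perfect numbers are well-known values that the abstract itself does not state.

```python
def proper_divisor_sum(n: int) -> int:
    """Sum of the positive divisors of n that are smaller than n."""
    if n < 2:
        return 0
    total = 1  # 1 is a proper divisor of every n >= 2
    d = 2
    while d * d <= n:
        if n % d == 0:
            total += d
            if d != n // d:  # avoid counting a square root twice
                total += n // d
        d += 1
    return total


def is_perfect(n: int) -> bool:
    """A perfect number equals the sum of its proper divisors."""
    return n > 1 and proper_divisor_sum(n) == n


# Brute-force search recovers the four perfect numbers credited to Euclid.
first_four = [n for n in range(2, 10_000) if is_perfect(n)]

# Euclid's construction: 2**(p-1) * (2**p - 1) is perfect when 2**p - 1 is prime.
# Assumption (not in the abstract): p = 61 and p = 521 give the 9th and 13th.
ninth = 2**60 * (2**61 - 1)
thirteenth = 2**520 * (2**521 - 1)

print(first_four)
print(len(str(ninth)), "digits in the ninth perfect number")
print(len(str(thirteenth)), "digits in the thirteenth perfect number")
```

The brute-force test is only practical for small inputs; the larger values rely on Euclid's formula rather than on summing divisors.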

Grade 7/8 Math Circles the Scale of Numbers Introduction

Faculty of Mathematics, Waterloo, Ontario N2L 3G1. Centre for Education in Mathematics and Computing. Grade 7/8 Math Circles, November 21/22/23, 2017. The Scale of Numbers.

Introduction

Last week we quickly took a look at scientific notation, which is one way we can write down really big numbers. We can also use scientific notation to write very small numbers.

$1 \times 10^{3} = 1{,}000$
$1 \times 10^{2} = 100$
$1 \times 10^{1} = 10$
$1 \times 10^{0} = 1$
$1 \times 10^{-1} = 0.1$
$1 \times 10^{-2} = 0.01$
$1 \times 10^{-3} = 0.001$

As you can see above, every time the value of the exponent decreases, the number gets smaller by a factor of 10. This pattern continues even into negative exponent values! Another way of picturing negative exponents is as a division by a positive exponent.

$10^{-6} = \frac{1}{10^{6}} = 0.000001$

In this lesson we will be looking at some famous, interesting, or important small numbers, and begin slowly working our way up to the biggest numbers ever used in mathematics! Obviously we can come up with any arbitrary number that is either extremely small or extremely large, but the purpose of this lesson is to only look at numbers with some kind of mathematical or scientific significance.

1 Extremely Small Numbers

1. Zero
• Zero or '0' is the number that represents nothingness. It is the number with the smallest magnitude.
• Zero only began being used as a number around the year 500. Before this, ancient mathematicians struggled with the concept of 'nothing' being 'something'.

2. Planck's Constant
This is the smallest number that we will be looking at today other than zero.

WRITING and READING NUMBERS in ENGLISH 1. Number in English 2

WRITING AND READING NUMBERS IN ENGLISH

1. Number in English 2. Large Numbers 3. Decimals 4. Fractions 5. Power / Exponents 6. Dates 7. Important Numerical expressions

1. NUMBERS IN ENGLISH

Cardinal numbers (one, two, three, etc.) are adjectives referring to quantity, and the ordinal numbers (first, second, third, etc.) refer to distribution.

| Number | Cardinal | Ordinal | In numbers |
| --- | --- | --- | --- |
| 1 | one | first | 1st |
| 2 | two | second | 2nd |
| 3 | three | third | 3rd |
| 4 | four | fourth | 4th |
| 5 | five | fifth | 5th |
| 6 | six | sixth | 6th |
| 7 | seven | seventh | 7th |
| 8 | eight | eighth | 8th |
| 9 | nine | ninth | 9th |
| 10 | ten | tenth | 10th |
| 11 | eleven | eleventh | 11th |
| 12 | twelve | twelfth | 12th |
| 13 | thirteen | thirteenth | 13th |
| 14 | fourteen | fourteenth | 14th |
| 15 | fifteen | fifteenth | 15th |
| 16 | sixteen | sixteenth | 16th |
| 17 | seventeen | seventeenth | 17th |
| 18 | eighteen | eighteenth | 18th |
| 19 | nineteen | nineteenth | 19th |
| 20 | twenty | twentieth | 20th |
| 21 | twenty-one | twenty-first | 21st |
| 22 | twenty-two | twenty-second | 22nd |
| 23 | twenty-three | twenty-third | 23rd |
| 24 | twenty-four | twenty-fourth | 24th |
| 25 | twenty-five | twenty-fifth | 25th |
| 26 | twenty-six | twenty-sixth | 26th |
| 27 | twenty-seven | twenty-seventh | 27th |
| 28 | twenty-eight | twenty-eighth | 28th |
| 29 | twenty-nine | twenty-ninth | 29th |
| 30 | thirty | thirtieth | 30th |
| 31 | thirty-one | thirty-first | 31st |
| 40 | forty | fortieth | 40th |
| 50 | fifty | fiftieth | 50th |
| 60 | sixty | sixtieth | 60th |
| 70 | seventy | seventieth | 70th |
| 80 | eighty | eightieth | 80th |
| 90 | ninety | ninetieth | 90th |
| 100 | one hundred | hundredth | 100th |
| 500 | five hundred | five hundredth | 500th |
| 1,000 | one/a thousand | thousandth | 1000th |
| 1,500 | one thousand five hundred, or fifteen hundred | one thousand five hundredth | 1500th |
| 100,000 | one hundred thousand | hundred thousandth | 100,000th |
| 1,000,000 | one million | millionth | 1,000,000th |

Click on the links below to practice your numbers: http://www.manythings.org/wbg/numbers-jw.html https://www.englisch-hilfen.de/en/exercises/numbers/index.php

We don't normally write numbers with words, but it's possible to do this.

Simple Statements, Large Numbers

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. MAT Exam Expository Papers, Math in the Middle Institute Partnership, 7-2007. Simple Statements, Large Numbers. Shana Streeks, University of Nebraska-Lincoln.

Streeks, Shana, "Simple Statements, Large Numbers" (2007). MAT Exam Expository Papers. 41. https://digitalcommons.unl.edu/mathmidexppap/41

Master of Arts in Teaching (MAT) Masters Exam. Shana Streeks. In partial fulfillment of the requirements for the Master of Arts in Teaching with a Specialization in the Teaching of Middle Level Mathematics in the Department of Mathematics. Gordon Woodward, Advisor. July 2007.

Large numbers are numbers that are significantly larger than those ordinarily used in everyday life, as defined by Wikipedia (2007). Large numbers typically refer to large positive integers, or more generally, large positive real numbers, but may also be used in other contexts. Very large numbers often occur in fields such as mathematics, cosmology, and cryptography. Sometimes people refer to numbers as being "astronomically large". However, it is easy to mathematically define numbers that are much larger than those even in astronomy. We are familiar with large magnitudes such as a million or a billion.

1 Powers of Two

A. V. GERBESSIOTIS, CS332-102, Spring 2020, Jan 24, 2020. Computer Science: Fundamentals, Handout 3.

1 Powers of two

Definition 1.1 (Powers of 2). The expression $2^n$ means the multiplication of $n$ twos. Therefore, $2^2 = 2 \cdot 2$ is 4, $2^8 = 2 \cdot 2 \cdot 2 \cdot 2 \cdot 2 \cdot 2 \cdot 2 \cdot 2$ is 256, and $2^{10} = 1024$. Moreover, $2^1 = 2$ and $2^0 = 1$. One sometimes writes 2**n or 2^n for $2^n$ (^ is the hat/caret symbol, usually co-located with the numeric-6 keyboard key).

| Power | Value |
| --- | --- |
| $2^{0}$ | 1 |
| $2^{1}$ | 2 |
| $2^{4}$ | 16 |
| $2^{8}$ | 256 |
| $2^{10}$ | 1024 |
| $2^{16}$ | 65536 |
| $2^{20}$ | 1048576 |
| $2^{30}$ | 1073741824 |
| $2^{40}$ | 1099511627776 |
| $2^{50}$ | 1125899906842624 |

Figure 1: Powers of two

| Prefix | Name | Multiplier |
| --- | --- | --- |
| da | deca | $10^{1} = 10$ |
| h | hecto | $10^{2} = 100$ |
| k | kilo | $10^{3} = 1000$ |
| M | mega | $10^{6}$ |
| G | giga | $10^{9}$ |
| T | tera | $10^{12}$ |
| P | peta | $10^{15}$ |
| E | exa | $10^{18}$ |
| d | deci | $10^{-1}$ |
| c | centi | $10^{-2}$ |
| m | milli | $10^{-3}$ |
| µ | micro | $10^{-6}$ |
| n | nano | $10^{-9}$ |
| p | pico | $10^{-12}$ |
| f | femto | $10^{-15}$ |

Figure 2: SI system prefixes

| Prefix | Name | Multiplier |
| --- | --- | --- |
| Ki | kibi or kilobinary | $2^{10}$ |
| Mi | mebi or megabinary | $2^{20}$ |
| Gi | gibi or gigabinary | $2^{30}$ |
| Ti | tebi or terabinary | $2^{40}$ |
| Pi | pebi or petabinary | $2^{50}$ |

Figure 3: SI binary prefixes

Definition 1.2 (Properties of powers).
• (Multiplication.) $2^m \cdot 2^n = 2^m 2^n = 2^{m+n}$. (The dot $\cdot$ is optional.)
• (Division.) $2^m / 2^n = 2^{m-n}$. (The symbol / is the slash symbol.)
• (Exponentiation.) $(2^m)^n = 2^{m \cdot n}$.

Example 1.1 (Approximations for $2^{10}$ and $2^{20}$ and $2^{30}$).
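The three properties of powers and the gap between SI and binary prefixes can be checked numerically. This is a minimal sketch rather than anything from the handout: the exponents `m = 7`, `n = 3` are arbitrary, and the helper `excess_percent` is a hypothetical name introduced only for illustration.

```python
# Spot-check the properties of powers for one arbitrary pair of exponents.
m, n = 7, 3
assert 2**m * 2**n == 2**(m + n)    # multiplication
assert 2**m // 2**n == 2**(m - n)   # division (exact because m >= n)
assert (2**m)**n == 2**(m * n)      # exponentiation

# Decimal (SI) multipliers next to their binary counterparts.
SI = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15}
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50}


def excess_percent(binary_value: int, si_value: int) -> float:
    """How much larger the binary multiplier is than the SI one, in percent."""
    return (binary_value / si_value - 1) * 100


for (si_name, si_value), (bin_name, bin_value) in zip(SI.items(), BINARY.items()):
    gap = excess_percent(bin_value, si_value)
    print(f"{bin_name} = {bin_value} is {gap:.2f}% larger than {si_name} = {si_value}")
```

The gap grows with each step, which is why $2^{10} \approx 10^{3}$ is a good approximation while $2^{50} \approx 10^{15}$ is noticeably rougher.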

World Happiness Report Edited by John Helliwell, Richard Layard and Jeffrey Sachs

World Happiness Report. Edited by John Helliwell, Richard Layard and Jeffrey Sachs.

Table of Contents

1. Introduction

Part I
2. The State of World Happiness
3. The Causes of Happiness and Misery
4. Some Policy Implications
References to Chapters 1-4

Part II
5. Case Study: Bhutan
6. Case Study: ONS
7. Case Study: OECD

Part I. Chapter 1. Introduction. Jeffrey Sachs.

Jeffrey D. Sachs: Director, The Earth Institute, Columbia University

We live in an age of stark contradictions. The world enjoys technologies of unimaginable sophistication; yet has at least one billion people without enough to eat each day. The world economy is propelled to soaring new heights of productivity through ongoing technological and organizational advance; yet is relentlessly destroying the natural environment in the process. Countries achieve great progress in economic development as conventionally measured; yet along the way succumb to new crises of obesity, smoking, diabetes, depression, and other ills of modern life.

These contradictions would not come as a shock to the greatest sages of humanity, including Aristotle and the Buddha. The sages taught humanity, time and again, that material gain alone will not fulfill our deepest needs. Material life must be harnessed to meet these human needs, most importantly to promote the end of suffering, social justice, and the attainment of happiness. The challenge is real for all parts of the world.

36 Scientific Notation

36 Scientific Notation

Scientific notation is used to express extremely large or extremely small numbers efficiently. The main idea is to write the number you want as a number between 1 and 10 multiplied by an integer power of 10. Fill in the blanks in the table below. Write the decimal form of the number given in scientific notation. Then give the calculator's notation for the scientific notation. This is the only time you should write this calculator notation. Calculator notation is not acceptable in your written work; use a human language! The last column is for a word name for the number. In a few rows, you will write the scientific notation yourself.

| Quantity | Scientific notation | Decimal notation | Calculator notation | Word name for the number |
| --- | --- | --- | --- | --- |
| One billion dollars | $1.0 \times 10^{9}$ dollars | | | one billion dollars |
| One year | $3.16 \times 10^{7}$ seconds | | | |
| Distance from earth to moon | $3.8 \times 10^{8}$ meters | | | |
| Mass of Mars | $6.24 \times 10^{23}$ kilograms | | | 624 sextillion kg |
| Nanosecond | $1.0 \times 10^{-9}$ seconds | | | |
| Speed of tv signals | $3.0 \times 10^{8}$ meters/second | | | |
| Atomic mass unit | $1.66 \times 10^{-27}$ kilograms | | | Just say, "$1.66 \times 10^{-27}$ kg"! |
| Light year | $6 \times 10^{12}$ miles | | | |
| Light year | $9.5 \times 10^{15}$ meters | | | |
| One billion light years | meters | | | |
| Parsec | $3.1 \times 10^{16}$ meters | | | |
| Megaparsec | $10^{6}$ parsecs | | | |
| Megabyte | $2^{20}$ bytes | 1,048,576 bytes | | |
| Micron | | | | One millionth of a meter |
| The number of stars in the Milky Way | | | | About one hundred billion |
| Diameter of a carbon-12 atom | $7.5 \times 10^{-14}$ meters | | | |

Rosalie A.
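The three written forms the worksheet asks for can be produced mechanically, which is a handy way to check a filled-in row. The sketch below is an illustration under assumptions: the function names are hypothetical, and "calculator notation" is taken to mean the common `E` display (for example `3.16E+07`), which the worksheet does not define.

```python
def calculator_notation(x: float, digits: int = 2) -> str:
    """Format x in E-notation, e.g. 3.16E+07 (assumed calculator style)."""
    return f"{x:.{digits}E}"


def scientific_notation(x: float, digits: int = 2) -> str:
    """Format x as 'mantissa x 10^exponent' with a mantissa between 1 and 10."""
    mantissa, exponent = calculator_notation(x, digits).split("E")
    return f"{mantissa} x 10^{int(exponent)}"


def decimal_notation(x: float) -> str:
    """Write a large whole-number value out in full, with thousands separators."""
    return f"{x:,.0f}"


# Values taken from the worksheet rows.
one_year_seconds = 3.16e7
earth_to_moon_meters = 3.8e8

print(scientific_notation(one_year_seconds))   # mantissa and power of ten
print(calculator_notation(one_year_seconds))   # E-notation
print(decimal_notation(one_year_seconds))      # written out in full
print(decimal_notation(earth_to_moon_meters))
```

`decimal_notation` is only meant for values of one or more; very small quantities such as the nanosecond row would need a format with enough decimal places instead.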

The Costs of Inaction

Climate Change – the Costs of Inaction. Report to Friends of the Earth England, Wales and Northern Ireland. Frank Ackerman and Elizabeth Stanton, Global Development and Environment Institute, Tufts University. October 11, 2006.

Table of Contents

- Executive Summary
- Introduction
  - Categorizing climate risks
- The UK in 2050: hot, and getting hotter
- Impacts of Climate Change
  - Agriculture
  - Industry and infrastructure
  - Fresh water
  - Human health
  - Ecosystems and extinctions
- Summary measures of

What Fun! It's Practice with Scientific Notation!

What Fun! It's Practice with Scientific Notation!

Review of Scientific Notation

Scientific notation provides a place to hold the zeroes that come after a whole number or before a fraction. The number 100,000,000 for example, takes up a lot of room and takes time to write out, while $10^{8}$ is much more efficient. Though we think of zero as having no value, zeroes can make a number much bigger or smaller. Think about the difference between 10 dollars and 100 dollars. Even one zero can make a big difference in the value of the number. In the same way, 0.1 (one-tenth) of the US military budget is much more than 0.01 (one-hundredth) of the budget. The small number to the right of the 10 in scientific notation is called the exponent. Note that a negative exponent indicates that the number is a fraction (less than one). The line below shows the equivalent values of decimal notation (the way we write numbers usually, like "1,000 dollars") and scientific notation ($10^{3}$ dollars). For numbers smaller than one, the fraction is given as well.

| | smaller | | | | | bigger |
| --- | --- | --- | --- | --- | --- | --- |
| Fraction | 1/100 | 1/10 | | | | |
| Decimal notation | 0.01 | 0.1 | 1 | 10 | 100 | 1,000 |
| Scientific notation | $10^{-2}$ | $10^{-1}$ | $10^{0}$ | $10^{1}$ | $10^{2}$ | $10^{3}$ |

Practice With Scientific Notation

Write out the decimal equivalent (regular form) of the following numbers that are in scientific notation.

Section A: Model: $10^{1} = 10$

1) $10^{2}$ = _______________
2) $10^{4}$ = _______________
3) $10^{7}$ = _______________
4) $10^{-2}$ = _______________
5) $10^{-5}$ = _______________
6) $10^{0}$ = _______________

Section B: Model: $2 \times 10^{2} = 200$

7) $3 \times 10^{2}$ = _______________
8) $7 \times 10^{4}$ = _______________
9) $2.4 \times 10^{3}$ = _______________
10) $6 \times 10^{-3}$ = _______________
11) $900 \times 10^{-2}$ = _______________
12) $4 \times 10^{-6}$ = _______________

Section C: Now convert from decimal form into scientific notation.
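Going from scientific notation back to the regular decimal form is just a multiplication by a power of ten. The sketch below reproduces only the two worked models from the worksheet and one value from the number line; the helper name `to_decimal` is hypothetical, and Python's `decimal` module is used so that negative exponents come out exact rather than as binary floating-point approximations.

```python
from decimal import Decimal


def to_decimal(coefficient: str, exponent: int) -> str:
    """Return coefficient x 10^exponent written in regular decimal form."""
    value = Decimal(coefficient) * Decimal(10) ** exponent
    return format(value, "f")  # "f" forces plain notation, never E-notation


# The worksheet's own models.
print("10^1     =", to_decimal("1", 1))
print("2 x 10^2 =", to_decimal("2", 2))

# A negative exponent from the number line gives a value smaller than one.
print("10^-2    =", to_decimal("1", -2))
```

Passing the coefficient as a string keeps a value such as 2.4 exact, which a float literal would not guarantee.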

Download.Oracle.Com/Docs/Cd/B28359 01/Network.111/B28530/Asotr Ans.Htm

Doctoral School in Computer Science (Scuola di Dottorato in Informatica). Doctoral Thesis, XXIV Cycle. A Distributed Approach to Privacy on the Cloud. Francesco Pagano. Advisor: Prof. Ernesto Damiani. Co-advisor: Prof. Stelvio Cimato. Director of the Doctoral School in Computer Science: Prof. Ernesto Damiani. Academic Year 2010/2011.

Abstract

The increasing adoption of Cloud-based data processing and storage poses a number of privacy issues. Users wish to preserve full control over their sensitive data and cannot accept it to be fully accessible to an external storage provider. Previous research in this area was mostly addressed at techniques to protect data stored on untrusted database servers; however, I argue that the Cloud architecture presents a number of specific problems and issues. This dissertation contains a detailed analysis of open issues. To handle them, I present a novel approach where confidential data is stored in a highly distributed partitioned database, partly located on the Cloud and partly on the clients. In my approach, data can be either private or shared; the latter is shared in a secure manner by means of simple grant-and-revoke permissions. I have developed a proof-of-concept implementation using an in-memory RDBMS with row-level data encryption in order to achieve fine-grained data access control. This type of approach is rarely adopted in conventional outsourced RDBMSs because it requires several complex steps. Benchmarks of my proof-of-concept implementation show that my approach overcomes most of the problems.

Acknowledgements

I want to thank

Ever Heard of a Prillionaire? by Carol Castellon Do You Watch the TV Show

Ever Heard of a Prillionaire? by Carol Castellon

Do you watch the TV show “Who Wants to Be a Millionaire?” hosted by Regis Philbin? Have you ever wished for a million dollars? "In today’s economy, even the millionaire doesn’t receive as much attention as the billionaire. Winners of a one-million dollar lottery find that it may not mean getting to retire, since the million is spread over 20 years (less than $3000 per month after taxes)."1 "If you count to a trillion dollars one by one at a dollar a second, you will need 31,710 years. Our government spends over three billion per day. At that rate, Washington is going through a trillion dollars in less than one year, or about 31,708 years faster than you can count all that money!"1

I’ve heard people use names such as “zillion,” “gazillion,” “prillion,” for large numbers, and more recently I hear “Mega-Million.” It is fairly obvious that most people don’t know the correct names for large numbers. But where do we go from million? After a billion, of course, is trillion. Then comes quadrillion, quintillion, sextillion, septillion, octillion, nonillion, and decillion. One of my favorite challenges is to have my math class continue to count by "illions" as far as they can.

million = $1 \times 10^{6}$
billion = $1 \times 10^{9}$
trillion = $1 \times 10^{12}$
quadrillion = $1 \times 10^{15}$
quintillion = $1 \times 10^{18}$
sextillion = $1 \times 10^{21}$
septillion = $1 \times 10^{24}$
octillion = $1 \times 10^{27}$
nonillion = $1 \times 10^{30}$
decillion = $1 \times 10^{33}$
undecillion = $1 \times 10^{36}$
duodecillion = $1 \times 10^{39}$
tredecillion = $1 \times 10^{42}$
quattuordecillion = $1 \times 10^{45}$
quindecillion = $1 \times 10^{48}$
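Both the counting claim and the pattern behind the "illions" are easy to verify with a few lines of arithmetic. The sketch below makes its assumptions explicit: a year is taken as 365 days, spending is taken as exactly three billion dollars per day, and the names `illion_exponent` and `ILLIONS` are hypothetical helpers, not anything from the article.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a 365-day year

# Counting one dollar per second up to a trillion dollars.
years_to_count = 10**12 / SECONDS_PER_YEAR

# Spending at an assumed flat three billion dollars per day.
days_to_spend = 10**12 / (3 * 10**9)

print(f"Counting takes about {years_to_count:,.0f} years")
print(f"Spending takes about {days_to_spend:.0f} days")

# The listed names follow one rule: the k-th "illion" is 10^(3k + 3).
ILLIONS = [
    "million", "billion", "trillion", "quadrillion", "quintillion",
    "sextillion", "septillion", "octillion", "nonillion", "decillion",
    "undecillion", "duodecillion", "tredecillion", "quattuordecillion",
    "quindecillion",
]


def illion_exponent(k: int) -> int:
    """Power of ten for the k-th name in the list (k = 1 is million)."""
    return 3 * k + 3


for k, name in enumerate(ILLIONS, start=1):
    print(f"{name} = 1 x 10^{illion_exponent(k)}")
```

With these assumptions the count comes out at roughly 31,710 years and the spending at under a year, consistent with the figures quoted in the article.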

Large Numbers from Wikipedia, the Free Encyclopedia

Large numbers. From Wikipedia, the free encyclopedia.

This article is about large numbers in the sense of numbers that are significantly larger than those ordinarily used in everyday life, for instance in simple counting or in monetary transactions. The term typically refers to large positive integers, or more generally, large positive real numbers, but it may also be used in other contexts. Very large numbers often occur in fields such as mathematics, cosmology, cryptography, and statistical mechanics. Sometimes people refer to numbers as being "astronomically large". However, it is easy to mathematically define numbers that are much larger even than those used in astronomy.

Contents: 1 Using scientific notation to handle large and small numbers; 2 Large numbers in the everyday world; 3 Astronomically large numbers; 4 Computers and computational complexity; 5 Examples; 6 Systematically creating ever faster increasing sequences; 7 Standardized system of writing very large numbers; 7.1 Examples of numbers in numerical order; 8 Comparison of base values; 9 Accuracy; 9.1 Accuracy for very large numbers; 9.2 Approximate arithmetic for very large numbers; 10 Large numbers in some noncomputable sequences; 11 Infinite numbers; 12 Notations; 13 See also; 14 Notes and references.

Using scientific notation to handle large and small numbers

See also: scientific notation, logarithmic scale and orders of magnitude.

Scientific notation was created to handle the wide range of values that occur in scientific study. $1.0 \times 10^{9}$, for example, means one billion, a 1 followed by nine zeros: 1 000 000 000, and $1.0 \times 10^{-9}$ means one billionth, or 0.000 000 001.